Public Member Functions

| Return type | Member |
| --- | --- |
| bool | register_path (const vec< basic_block > &, edge taken) |
| bool | register_jump_thread (vec< jump_thread_edge * > *) |
| bool | thread_through_all_blocks (bool peel_loop_headers) |
| void | push_edge (vec< jump_thread_edge * > *path, edge, jump_thread_edge_type) |
| vec< jump_thread_edge * > * | allocate_thread_path () |
| void | debug () |

Protected Member Functions

| Return type | Member |
| --- | --- |
| void | debug_path (FILE *, int pathno) |

Protected Attributes | |

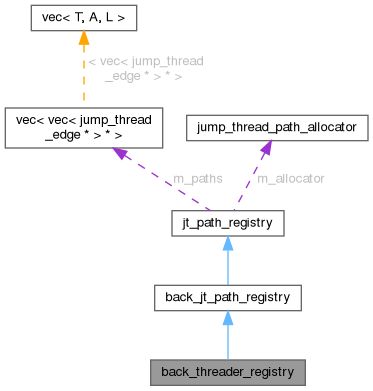

| vec< vec< jump_thread_edge * > * > | m_paths |

| unsigned long | m_num_threaded_edges |

Private Member Functions

| Return type | Member |
| --- | --- |
| bool | update_cfg (bool peel_loop_headers) override |
| void | adjust_paths_after_duplication (unsigned curr_path_num) |
| bool | duplicate_thread_path (edge entry, edge exit, basic_block *region, unsigned n_region, unsigned current_path_no) |
| bool | rewire_first_differing_edge (unsigned path_num, unsigned edge_num) |
| bool | cancel_invalid_paths (vec< jump_thread_edge * > &path) |
| | DISABLE_COPY_AND_ASSIGN (jt_path_registry) |

Private Attributes

| Type | Name |
| --- | --- |
| jump_thread_path_allocator | m_allocator |
| bool | m_backedge_threads |

Detailed Description

SSA Jump Threading

Copyright (C) 2005-2026 Free Software Foundation, Inc.

This file is part of GCC.

GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version.

GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

Member Function Documentation

◆ adjust_paths_after_duplication()

| void | adjust_paths_after_duplication (unsigned curr_path_num) |

private, inherited

After a path has been jump threaded, adjust the remaining paths that are subsets of this path, so these paths can be safely threaded within the context of the new threaded path.

For example, suppose we have just threaded:

    5 -> 6 -> 7 -> 8 -> 12 => 5 -> 6' -> 7' -> 8' -> 12'

And we have an upcoming threading candidate:

    5 -> 6 -> 7 -> 8 -> 15 -> 20

This function adjusts the upcoming path into:

    8' -> 15 -> 20

CURR_PATH_NUM is an index into the global paths table. It specifies the path that was just threaded.
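The adjustment described above can be illustrated with a small standalone model. This is only a sketch under stated assumptions: it works on plain integer block ids instead of GCC's `jump_thread_edge` vectors, and `block_id`, `block_path`, `adjust_candidate` and the `copy_of` map are hypothetical names that do not exist in GCC. Returning an empty path when nothing is left after the shared prefix is also a choice made for this toy only.

```cpp
#include <algorithm>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical toy types: a block is an int, a path is a list of blocks.
using block_id = int;
using block_path = std::vector<block_id>;

// THREADED is the path that was just duplicated, COPY_OF maps each
// duplicated block to its copy, and CANDIDATE is a path still queued.
// Returns the candidate rewritten to start at the copy of the last
// shared block, or the candidate unchanged if no whole edge is shared.
block_path adjust_candidate(const block_path &threaded,
                            const std::map<block_id, block_id> &copy_of,
                            const block_path &candidate)
{
  // Length of the common leading run of blocks.
  std::size_t common = 0;
  const std::size_t limit = std::min(threaded.size(), candidate.size());
  while (common < limit && threaded[common] == candidate[common])
    ++common;

  // Fewer than two shared blocks means no shared edge: nothing to adjust.
  if (common < 2)
    return candidate;

  // The candidate is entirely contained in the threaded path; in this toy
  // an empty result signals that the candidate should be dropped.
  if (common == candidate.size())
    return {};

  // The last shared block must have a copy to rewire onto.
  auto copy = copy_of.find(candidate[common - 1]);
  if (copy == copy_of.end())
    return candidate;

  // New path: copy of the last shared block, then the differing tail.
  block_path adjusted{copy->second};
  adjusted.insert(adjusted.end(),
                  candidate.begin() + static_cast<std::ptrdiff_t>(common),
                  candidate.end());
  return adjusted;
}
```

With the numbers from the example, and writing 6', 7', 8', 12' as 106, 107, 108, 112, the candidate 5, 6, 7, 8, 15, 20 becomes 108, 15, 20.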

References cancel_thread(), jt_path_registry::debug_path(), dump_file, dump_flags, gcc_assert, jt_path_registry::m_paths, MIN, rewire_first_differing_edge(), and TDF_DETAILS.

Referenced by duplicate_thread_path().

◆ allocate_thread_path()

| vec< jump_thread_edge * > * | allocate_thread_path () |

inherited

References m_allocator.

Referenced by back_threader_registry::register_path().

◆ cancel_invalid_paths()

| bool | cancel_invalid_paths (vec< jump_thread_edge * > &path) |

private, inherited

References cancel_thread(), cfun, curr_loop, empty_block_p(), flow_loop_nested_p(), gcc_assert, gcc_checking_assert, i, loop::latch, m_backedge_threads, NULL, path, and PROP_loop_opts_done.

Referenced by register_jump_thread().

◆ debug()

| void | debug () |

inherited

References debug_path(), i, and m_paths.

◆ debug_path()

| void | debug_path (FILE *, int pathno) |

protected, inherited

References dump_file, i, and m_paths.

Referenced by back_jt_path_registry::adjust_paths_after_duplication(), and debug().

◆ DISABLE_COPY_AND_ASSIGN()

| | DISABLE_COPY_AND_ASSIGN (jt_path_registry) |

private, inherited

References jt_path_registry().

◆ duplicate_thread_path()

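| bool | duplicate_thread_path (edge entry, edge exit, basic_block *region, unsigned n_region, unsigned current_path_no) |

private, inherited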

Duplicates a jump-thread path of N_REGION basic blocks. The ENTRY edge is redirected to the duplicate of the region. Remove the last conditional statement in the last basic block in the REGION, and create a single fallthru edge pointing to the same destination as the EXIT edge. CURRENT_PATH_NO is an index into the global paths[] table specifying the jump-thread path. Returns false if it is unable to copy the region, true otherwise.
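The duplication step can be pictured on a toy control-flow graph. The sketch below is an illustration under stated assumptions, not GCC's implementation: the graph is a map from integer block ids to successor lists, and `toy_cfg`, `toy_edge`, `duplicate_region`, `first_new_id` and `copy_of` are hypothetical names. Profile updates, PHI arguments and loop bookkeeping, which the real function handles, are left out entirely.

```cpp
#include <algorithm>
#include <cstddef>
#include <map>
#include <utility>
#include <vector>

// Hypothetical toy types, not GCC's basic_block/edge.
using block_id = int;
using toy_cfg = std::map<block_id, std::vector<block_id>>;  // block -> successors
using toy_edge = std::pair<block_id, block_id>;             // (src, dest)

// Copy the blocks in REGION, redirect ENTRY_EDGE to the first copy, and
// give the last copy a single successor: the destination of EXIT_EDGE.
// New blocks get ids starting at FIRST_NEW_ID; COPY_OF records the mapping.
// Returns false if the region cannot be copied in this toy model.
bool duplicate_region(toy_cfg &cfg, toy_edge entry_edge, toy_edge exit_edge,
                      const std::vector<block_id> &region,
                      block_id first_new_id,
                      std::map<block_id, block_id> &copy_of)
{
  if (region.empty() || entry_edge.second != region.front()
      || exit_edge.first != region.back())
    return false;

  // Locate the entry edge in its source block's successor list.
  auto src = cfg.find(entry_edge.first);
  if (src == cfg.end())
    return false;
  auto slot = std::find(src->second.begin(), src->second.end(),
                        entry_edge.second);
  if (slot == src->second.end())
    return false;

  // Allocate one copy per region block.
  copy_of.clear();
  for (block_id bb : region)
    copy_of[bb] = first_new_id++;

  for (std::size_t i = 0; i < region.size(); ++i)
    {
      std::vector<block_id> succs;
      if (i + 1 == region.size())
        // Last block: its branch is dropped, leaving one fallthru edge.
        succs.push_back(exit_edge.second);
      else
        // Edges inside the region go to copies, the rest stay as they were.
        for (block_id s : cfg[region[i]])
          {
            auto c = copy_of.find(s);
            succs.push_back(c != copy_of.end() ? c->second : s);
          }
      cfg[copy_of[region[i]]] = succs;
    }

  // Redirect the entry edge to the copy of the first region block.
  *slot = copy_of[region.front()];
  return true;
}
```

The original region blocks are left in place, so other predecessors of the region still reach the unmodified code.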

References add_phi_args_after_copy(), adjust_paths_after_duplication(), profile_probability::always(), bb_in_bbs(), can_copy_bbs_p(), copy_bbs(), basic_block_def::count, count, find_edge(), flush_pending_stmts(), FOR_EACH_EDGE, free(), free_original_copy_tables(), gcc_assert, get_bb_copy(), get_bb_original(), loop::header, i, initialize_original_copy_tables(), profile_count::initialized_p(), make_edge(), mark_loop_for_removal(), NULL, redirect_edge_and_branch(), redirect_edge_and_branch_force(), remove_ctrl_stmt_and_useless_edges(), rescan_loop_exit(), scale_bbs_frequencies_profile_count(), set_loop_copy(), single_succ_edge(), single_succ_p(), split_edge_bb_loc(), basic_block_def::succs, update_bb_profile_for_threading(), and verify_jump_thread().

Referenced by update_cfg().

◆ push_edge()

| void | push_edge (vec< jump_thread_edge * > *path, edge, jump_thread_edge_type) |

inherited

References m_allocator, and path.

Referenced by back_threader_registry::register_path().

◆ register_jump_thread()

| bool | register_jump_thread (vec< jump_thread_edge * > *) |

inherited

Register a jump threading opportunity. We queue up all the jump threading opportunities discovered by a pass and update the CFG and SSA form all at once. E is the edge we can thread, E2 is the new target edge, i.e., we are effectively recording that E->dest can be changed to E2->dest after fixing the SSA graph. Return TRUE if PATH was successfully queued for threading.
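The queue-now, update-later idea can be sketched with a small standalone registry. Everything here is an assumption made for illustration: `toy_edge`, `toy_path`, `toy_registry` and the connectivity check are hypothetical and stand in for GCC's `jump_thread_edge` paths and for `cancel_invalid_paths()`, whose real checks are not reproduced.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical toy types, not GCC's.
struct toy_edge { int src; int dest; };
using toy_path = std::vector<toy_edge>;

class toy_registry {
public:
  // Queue PATH for later threading. Returns false, and drops the path,
  // if the (toy) validity check rejects it. No graph is modified here.
  bool register_jump_thread(toy_path path)
  {
    if (!is_connected(path))
      return false;
    m_paths.push_back(std::move(path));
    return true;
  }

  // Number of paths waiting to be threaded.
  std::size_t pending() const { return m_paths.size(); }

private:
  // Toy stand-in for path validation: every edge must start where the
  // previous one ended.
  static bool is_connected(const toy_path &path)
  {
    if (path.empty())
      return false;
    for (std::size_t i = 1; i < path.size(); ++i)
      if (path[i - 1].dest != path[i].src)
        return false;
    return true;
  }

  std::vector<toy_path> m_paths;
};
```

Keeping registration separate from the graph update means a pass can discover many opportunities against an unchanging CFG before any block is duplicated.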

References cancel_invalid_paths(), dbg_cnt(), dump_file, dump_flags, dump_jump_thread_path(), gcc_checking_assert, m_paths, path, and TDF_DETAILS.

Referenced by back_threader_registry::register_path().

◆ register_path()

| bool | back_threader_registry::register_path (const vec< basic_block > &m_path, edge taken_edge) |

The current path PATH is a vector of blocks forming a jump threading path in reverse order. TAKEN_EDGE is the edge taken from path[0]. Convert the current path into the form used by register_jump_thread and register it. Return TRUE if successful or FALSE otherwise.
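The conversion from a reversed block list to a forward list of edges can be sketched as follows. This is a standalone illustration under assumptions: `block_id`, `edge_kind`, `path_edge` and `edges_from_reversed_blocks` are hypothetical names, and the two enumerators only mirror the roles that `EDGE_COPY_SRC_BLOCK` and `EDGE_NO_COPY_SRC_BLOCK` appear to play in the references below. The real function looks edges up with `find_edge()`; the toy simply pairs adjacent blocks.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical toy types, not GCC's.
using block_id = int;

enum class edge_kind { copy_src_block, no_copy_src_block };

struct path_edge {
  block_id src;
  block_id dest;
  edge_kind kind;
};

// REV_BLOCKS lists the path's blocks in reverse order, so rev_blocks[0]
// is the last block on the path. TAKEN is the (src, dest) edge leaving
// that last block. Returns the path as edges in forward order.
std::vector<path_edge>
edges_from_reversed_blocks(const std::vector<block_id> &rev_blocks,
                           std::pair<block_id, block_id> taken)
{
  std::vector<path_edge> edges;

  // Walk the vector from its back to its front, pairing neighbours.
  for (std::size_t j = 0; j + 1 < rev_blocks.size(); ++j)
    {
      block_id from = rev_blocks[rev_blocks.size() - j - 1];
      block_id to = rev_blocks[rev_blocks.size() - j - 2];
      edges.push_back({from, to, edge_kind::copy_src_block});
    }

  // The taken edge closes the path; its source block is not copied again.
  edges.push_back({taken.first, taken.second, edge_kind::no_copy_src_block});
  return edges;
}
```

For the reversed blocks 8, 7, 6, 5 with taken edge 8 -> 12, the result is 5 -> 6, 6 -> 7, 7 -> 8, 8 -> 12.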

References jt_path_registry::allocate_thread_path(), EDGE_COPY_SRC_BLOCK, EDGE_NO_COPY_SRC_BLOCK, find_edge(), gcc_assert, jt_path_registry::push_edge(), and jt_path_registry::register_jump_thread().

◆ rewire_first_differing_edge()

| bool | rewire_first_differing_edge (unsigned path_num, unsigned edge_num) |

private, inherited

Rewire a jump_thread_edge so that the source block is now a threaded source block. PATH_NUM is an index into the global path table PATHS. EDGE_NUM is the jump thread edge number into said path. Returns TRUE if we were able to successfully rewire the edge.
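A minimal sketch of the rewiring step, assuming a toy graph and a toy copy table. `toy_edge`, `toy_cfg`, `rewire_edge` and `copy_of` are hypothetical names; the lookups stand in for the `get_bb_copy()` and `find_edge()` calls listed in the references, and the real function's dumping and path-table indexing are omitted.

```cpp
#include <algorithm>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical toy types, not GCC's.
struct toy_edge { int src; int dest; };
using toy_cfg = std::map<int, std::vector<int>>;  // block -> successors

// Replace the source of PATH[EDGE_NUM] with that block's copy, provided
// the copy exists and actually has an edge to the same destination.
// Returns true if the edge was rewired, false if the path is untouched.
bool rewire_edge(std::vector<toy_edge> &path, std::size_t edge_num,
                 const std::map<int, int> &copy_of, const toy_cfg &cfg)
{
  if (edge_num >= path.size())
    return false;
  toy_edge &e = path[edge_num];

  // Toy analogue of looking up the duplicate of the source block.
  auto copy = copy_of.find(e.src);
  if (copy == copy_of.end())
    return false;

  // Toy analogue of checking that the edge copy -> dest exists.
  auto succs = cfg.find(copy->second);
  if (succs == cfg.end()
      || std::find(succs->second.begin(), succs->second.end(), e.dest)
           == succs->second.end())
    return false;

  e.src = copy->second;
  return true;
}
```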

References dump_file, dump_flags, find_edge(), get_bb_copy(), jt_path_registry::m_paths, NULL, path, and TDF_DETAILS.

Referenced by adjust_paths_after_duplication().

◆ thread_through_all_blocks()

Thread all paths that have been queued for jump threading, and update the CFG accordingly. It is the caller's responsibility to fix the dominance information and rewrite duplicated SSA_NAMEs back into SSA form. If PEEL_LOOP_HEADERS is false, avoid threading edges through loop headers if it does not simplify the loop. Returns true if one or more edges were threaded.
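The division of labour between `thread_through_all_blocks()` and the overridden `update_cfg()` can be sketched as a base class that drives a virtual hook. This is a toy model under assumptions: `toy_path_registry`, `toy_back_registry`, `queue()` and `num_threaded()` are hypothetical, paths are plain integer lists, and "threading" a path is reduced to counting it. Clearing the queue after the update is a choice made for this sketch only.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical base class: owns the queue and the public entry point.
class toy_path_registry {
public:
  virtual ~toy_path_registry() = default;

  void queue(std::vector<int> path) { m_paths.push_back(std::move(path)); }

  // Process every queued path through the subclass hook. Returns true if
  // at least one path was threaded.
  bool thread_through_all_blocks(bool peel_loop_headers)
  {
    if (m_paths.empty())
      return false;
    bool changed = update_cfg(peel_loop_headers);
    m_paths.clear();
    return changed;
  }

  unsigned long num_threaded() const { return m_num_threaded_edges; }

protected:
  std::vector<std::vector<int>> m_paths;
  unsigned long m_num_threaded_edges = 0;

private:
  // Subclasses decide how the graph is actually rewritten.
  virtual bool update_cfg(bool peel_loop_headers) = 0;
};

// Hypothetical subclass standing in for a backward-threader registry.
class toy_back_registry : public toy_path_registry {
  bool update_cfg(bool /*peel_loop_headers*/) override
  {
    bool changed = false;
    for (const auto &path : m_paths)
      {
        // Toy validity check: a path needs at least one edge.
        if (path.size() < 2)
          continue;
        ++m_num_threaded_edges;
        changed = true;
      }
    return changed;
  }
};
```

The caller only sees the boolean result, which in the real interface signals that dominance information and SSA form now need fixing up.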

References cfun, LOOPS_NEED_FIXUP, loops_state_set(), m_num_threaded_edges, m_paths, statistics_counter_event(), and update_cfg().

◆ update_cfg()

This is the backward threader version of thread_through_all_blocks using a generic BB copier.

Implements jt_path_registry.

References hash_set< KeyId, Lazy, Traits >::add(), cancel_thread(), CDI_DOMINATORS, hash_set< KeyId, Lazy, Traits >::contains(), duplicate_thread_path(), free(), free_dominance_info(), jt_path_registry::m_num_threaded_edges, jt_path_registry::m_paths, path, and valid_jump_thread_path().

Field Documentation

◆ m_allocator

| jump_thread_path_allocator | m_allocator |

private, inherited

Referenced by allocate_thread_path(), and push_edge().

◆ m_backedge_threads

| bool | m_backedge_threads |

private, inherited

Referenced by cancel_invalid_paths(), and jt_path_registry().

◆ m_num_threaded_edges

| unsigned long | m_num_threaded_edges |

protected, inherited

◆ m_paths

| vec< vec< jump_thread_edge * > * > | m_paths |

protected, inherited

Referenced by back_jt_path_registry::adjust_paths_after_duplication(), debug(), debug_path(), jt_path_registry(), fwd_jt_path_registry::mark_threaded_blocks(), register_jump_thread(), fwd_jt_path_registry::remove_jump_threads_including(), back_jt_path_registry::rewire_first_differing_edge(), thread_through_all_blocks(), back_jt_path_registry::update_cfg(), fwd_jt_path_registry::update_cfg(), and ~jt_path_registry().

The documentation for this class was generated from the following file: