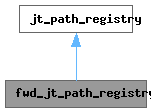

#include <tree-ssa-threadupdate.h>

Public Member Functions | |

| fwd_jt_path_registry () | |

| ~fwd_jt_path_registry () | |

| void | remove_jump_threads_including (edge) |

| bool | register_jump_thread (vec< jump_thread_edge * > *) |

| bool | thread_through_all_blocks (bool peel_loop_headers) |

| void | push_edge (vec< jump_thread_edge * > *path, edge, jump_thread_edge_type) |

| vec< jump_thread_edge * > * | allocate_thread_path () |

| void | debug () |

Protected Member Functions | |

| void | debug_path (FILE *, int pathno) |

Protected Attributes | |

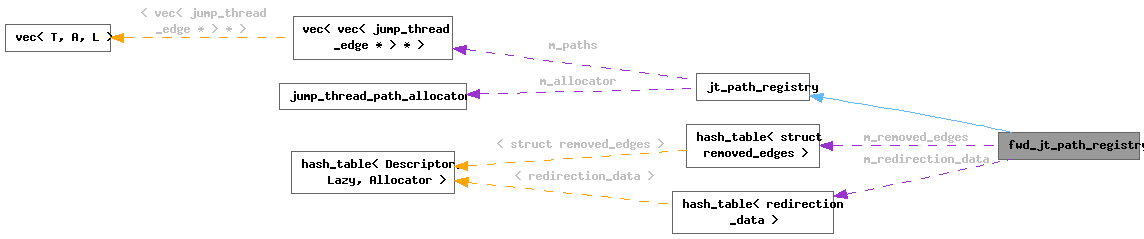

| vec< vec< jump_thread_edge * > * > | m_paths |

| unsigned long | m_num_threaded_edges |

Private Member Functions | |

| bool | update_cfg (bool peel_loop_headers) override |

| void | mark_threaded_blocks (bitmap threaded_blocks) |

| bool | thread_block_1 (basic_block, bool noloop_only, bool joiners) |

| bool | thread_block (basic_block, bool noloop_only) |

| bool | thread_through_loop_header (class loop *loop, bool may_peel_loop_headers) |

| class redirection_data * | lookup_redirection_data (edge e, enum insert_option) |

| bool | cancel_invalid_paths (vec< jump_thread_edge * > &path) |

| DISABLE_COPY_AND_ASSIGN (jt_path_registry) | |

Private Attributes | |

| hash_table< struct removed_edges > * | m_removed_edges |

| hash_table< redirection_data > * | m_redirection_data |

| jump_thread_path_allocator | m_allocator |

| bool | m_backedge_threads |

Constructor & Destructor Documentation

◆ fwd_jt_path_registry()

| fwd_jt_path_registry::fwd_jt_path_registry | ( | ) |

References false, jt_path_registry::jt_path_registry(), m_redirection_data, m_removed_edges, and NULL.

◆ ~fwd_jt_path_registry()

| fwd_jt_path_registry::~fwd_jt_path_registry | ( | ) |

References m_removed_edges.

Member Function Documentation

◆ allocate_thread_path()

|

inherited |

References m_allocator.

Referenced by back_threader_registry::register_path().

◆ cancel_invalid_paths()

|

privateinherited |

References cancel_thread(), cfun, curr_loop, empty_block_p(), flow_loop_nested_p(), gcc_assert, gcc_checking_assert, i, loop::latch, m_backedge_threads, NULL, path, and PROP_loop_opts_done.

Referenced by register_jump_thread().

◆ debug()

|

inherited |

References debug_path(), i, and m_paths.

◆ debug_path()

|

protectedinherited |

References dump_file, i, and m_paths.

Referenced by back_jt_path_registry::adjust_paths_after_duplication(), and debug().

◆ DISABLE_COPY_AND_ASSIGN()

|

privateinherited |

References jt_path_registry().

◆ lookup_redirection_data()

|

private |

Given an outgoing edge E lookup and return its entry in our hash table. If INSERT is true, then we insert the entry into the hash table if it is not already present. INCOMING_EDGE is added to the list of incoming edges associated with E in the hash table.

References redirection_data::dup_blocks, el::e, free(), redirection_data::incoming_edges, insert(), m_redirection_data, el::next, NULL, path, redirection_data::path, and THREAD_PATH.

Referenced by thread_block_1().

◆ mark_threaded_blocks()

|

private |

Walk through the registered jump threads and convert them into a form convenient for this pass. Any block which has incoming edges threaded to outgoing edges will have its entry in THREADED_BLOCK set. Any threaded edge will have its new outgoing edge stored in the original edge's AUX field. This form avoids the need to walk all the edges in the CFG to discover blocks which need processing and avoids unnecessary hash table lookups to map from threaded edge to new target.

References BASIC_BLOCK_FOR_FN, bitmap_copy(), bitmap_set_bit, cancel_thread(), cfun, count_stmts_and_phis_in_block(), EDGE_COPY_SRC_BLOCK, EDGE_COPY_SRC_JOINER_BLOCK, estimate_threading_killed_stmts(), EXECUTE_IF_SET_IN_BITMAP, find_edge(), basic_block_def::flags, flow_loop_nested_p(), FOR_EACH_EDGE, gcc_assert, i, basic_block_def::loop_father, LOOPS_HAVE_MARKED_IRREDUCIBLE_REGIONS, loops_state_satisfies_p(), jt_path_registry::m_paths, NULL, optimize_function_for_size_p(), path, phi_args_equal_on_edges(), basic_block_def::preds, redirection_block_p(), THREAD_PATH, and vect_free_loop_info_assumptions().

Referenced by update_cfg().

◆ push_edge()

|

inherited |

References m_allocator, and path.

Referenced by back_threader_registry::register_path().

◆ register_jump_thread()

|

inherited |

Register a jump threading opportunity. We queue up all the jump threading opportunities discovered by a pass and update the CFG and SSA form all at once. E is the edge we can thread, E2 is the new target edge, i.e., we are effectively recording that E->dest can be changed to E2->dest after fixing the SSA graph. Return TRUE if PATH was successfully threaded.

References cancel_invalid_paths(), dbg_cnt(), dump_file, dump_flags, dump_jump_thread_path(), gcc_checking_assert, m_paths, path, and TDF_DETAILS.

Referenced by back_threader_registry::register_path().

◆ remove_jump_threads_including()

| void fwd_jt_path_registry::remove_jump_threads_including | ( | edge | ) |

Remove any queued jump threads that include edge E. We don't actually remove them here, just record the edges into ax hash table. That way we can do the search once per iteration of DOM/VRP rather than for every case where DOM optimizes away a COND_EXPR.

References jt_path_registry::m_paths, and m_removed_edges.

◆ thread_block()

|

private |

Wrapper for thread_block_1 so that we can first handle jump thread paths which do not involve copying joiner blocks, then handle jump thread paths which have joiner blocks. By doing things this way we can be as aggressive as possible and not worry that copying a joiner block will create a jump threading opportunity.

References thread_block_1().

Referenced by thread_through_loop_header(), and update_cfg().

◆ thread_block_1()

|

private |

BB is a block which ends with a COND_EXPR or SWITCH_EXPR and when BB is reached via one or more specific incoming edges, we know which outgoing edge from BB will be traversed. We want to redirect those incoming edges to the target of the appropriate outgoing edge. Doing so avoids a conditional branch and may expose new optimization opportunities. Note that we have to update dominator tree and SSA graph after such changes. The key to keeping the SSA graph update manageable is to duplicate the side effects occurring in BB so that those side effects still occur on the paths which bypass BB after redirecting edges. We accomplish this by creating duplicates of BB and arranging for the duplicates to unconditionally pass control to one specific successor of BB. We then revector the incoming edges into BB to the appropriate duplicate of BB. If NOLOOP_ONLY is true, we only perform the threading as long as it does not affect the structure of the loops in a nontrivial way. If JOINERS is true, then thread through joiner blocks as well.

References ssa_local_info_t::bb, BITMAP_ALLOC, BITMAP_FREE, cancel_thread(), CDI_DOMINATORS, ssa_local_info_t::duplicate_blocks, EDGE_COPY_SRC_BLOCK, EDGE_COPY_SRC_JOINER_BLOCK, EDGE_COUNT, EDGE_NO_COPY_SRC_BLOCK, flow_loop_nested_p(), FOR_EACH_EDGE, free_dominance_info(), gsi_end_p(), gsi_start_nondebug_bb(), gsi_stmt(), loop::header, i, is_ctrl_stmt(), ssa_local_info_t::jumps_threaded, last, lookup_redirection_data(), loop_exit_edge_p(), loop_exits_from_bb_p(), basic_block_def::loop_father, loop_outer(), jt_path_registry::m_num_threaded_edges, m_redirection_data, ssa_local_info_t::need_profile_correction, NULL, ssa_local_info_t::num_threaded_edges, path, basic_block_def::preds, set_loop_copy(), ssa_create_duplicates(), ssa_fixup_template_block(), ssa_redirect_edges(), basic_block_def::succs, ssa_local_info_t::template_block, and THREAD_PATH.

Referenced by thread_block().

◆ thread_through_all_blocks()

Thread all paths that have been queued for jump threading, and update the CFG accordingly. It is the caller's responsibility to fix the dominance information and rewrite duplicated SSA_NAMEs back into SSA form. If PEEL_LOOP_HEADERS is false, avoid threading edges through loop headers if it does not simplify the loop. Returns true if one or more edges were threaded.

References cfun, LOOPS_NEED_FIXUP, loops_state_set(), m_num_threaded_edges, m_paths, statistics_counter_event(), and update_cfg().

◆ thread_through_loop_header()

|

private |

Thread jumps through the header of LOOP. Returns true if cfg changes. If MAY_PEEL_LOOP_HEADERS is false, we avoid threading from entry edges to the inside of the loop.

References cancel_thread(), create_preheader(), determine_bb_domination_status(), DOMST_LOOP_BROKEN, DOMST_NONDOMINATING, EDGE_COPY_SRC_JOINER_BLOCK, EDGE_COUNT, empty_block_p(), FOR_EACH_EDGE, gcc_assert, header, loop::header, loop::latch, basic_block_def::loop_father, loop_latch_edge(), loop_outer(), make_forwarder_block(), mark_loop_for_removal(), mfb_keep_just(), mfb_kj_edge, NULL, path, basic_block_def::preds, redirection_block_p(), set_loop_copy(), single_succ_edge(), single_succ_p(), split_edge(), thread_block(), and THREAD_PATH.

Referenced by update_cfg().

◆ update_cfg()

This is the forward threader version of thread_through_all_blocks, using a custom BB copier.

Implements jt_path_registry.

References BASIC_BLOCK_FOR_FN, bitmap_bit_p, bitmap_empty_p(), can_duplicate_block_p(), cancel_thread(), cfun, EDGE_COPY_SRC_BLOCK, EDGE_COPY_SRC_JOINER_BLOCK, FOR_EACH_BB_FN, FOR_EACH_EDGE, free(), free_original_copy_tables(), gcc_assert, loop::header, i, basic_block_def::index, initialize_original_copy_tables(), LI_FROM_INNERMOST, jt_path_registry::m_paths, m_removed_edges, mark_threaded_blocks(), n_basic_blocks_for_fn, NULL, path, post_order_compute(), basic_block_def::preds, thread_block(), and thread_through_loop_header().

Field Documentation

◆ m_allocator

|

privateinherited |

Referenced by allocate_thread_path(), and push_edge().

◆ m_backedge_threads

|

privateinherited |

Referenced by cancel_invalid_paths(), and jt_path_registry().

◆ m_num_threaded_edges

|

protectedinherited |

◆ m_paths

|

protectedinherited |

Referenced by back_jt_path_registry::adjust_paths_after_duplication(), debug(), debug_path(), jt_path_registry(), fwd_jt_path_registry::mark_threaded_blocks(), register_jump_thread(), fwd_jt_path_registry::remove_jump_threads_including(), back_jt_path_registry::rewire_first_differing_edge(), thread_through_all_blocks(), back_jt_path_registry::update_cfg(), fwd_jt_path_registry::update_cfg(), and ~jt_path_registry().

◆ m_redirection_data

|

private |

Referenced by fwd_jt_path_registry(), lookup_redirection_data(), and thread_block_1().

◆ m_removed_edges

|

private |

Referenced by fwd_jt_path_registry(), remove_jump_threads_including(), update_cfg(), and ~fwd_jt_path_registry().

The documentation for this class was generated from the following files: