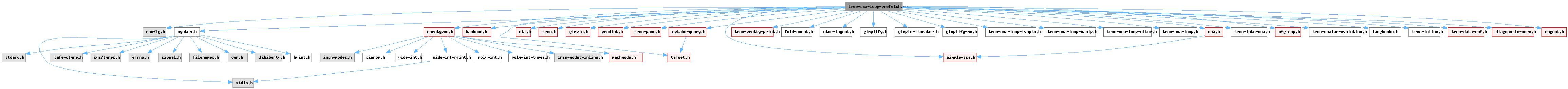

    #include "config.h"
    #include "system.h"
    #include "coretypes.h"
    #include "backend.h"
    #include "target.h"
    #include "rtl.h"
    #include "tree.h"
    #include "gimple.h"
    #include "predict.h"
    #include "tree-pass.h"
    #include "gimple-ssa.h"
    #include "optabs-query.h"
    #include "tree-pretty-print.h"
    #include "fold-const.h"
    #include "stor-layout.h"
    #include "gimplify.h"
    #include "gimple-iterator.h"
    #include "gimplify-me.h"
    #include "tree-ssa-loop-ivopts.h"
    #include "tree-ssa-loop-manip.h"
    #include "tree-ssa-loop-niter.h"
    #include "tree-ssa-loop.h"
    #include "ssa.h"
    #include "tree-into-ssa.h"
    #include "cfgloop.h"
    #include "tree-scalar-evolution.h"
    #include "langhooks.h"
    #include "tree-inline.h"
    #include "tree-data-ref.h"
    #include "diagnostic-core.h"
    #include "dbgcnt.h"

Data Structures

| Type | Name |
|---|---|
| struct | `mem_ref_group` |
| struct | `mem_ref` |
| struct | `ar_data` |

Macros

| Directive | Name and value |
|---|---|
| #define | `WRITE_CAN_USE_READ_PREFETCH 1` |
| #define | `READ_CAN_USE_WRITE_PREFETCH 0` |
| #define | `PREFETCH_BLOCK param_l1_cache_line_size` |
| #define | `HAVE_FORWARD_PREFETCH 0` |
| #define | `HAVE_BACKWARD_PREFETCH 0` |
| #define | `ACCEPTABLE_MISS_RATE 50` |
| #define | `L1_CACHE_SIZE_BYTES ((unsigned) (param_l1_cache_size * 1024))` |
| #define | `L2_CACHE_SIZE_BYTES ((unsigned) (param_l2_cache_size * 1024))` |
| #define | `NONTEMPORAL_FRACTION 16` |
| #define | `FENCE_FOLLOWING_MOVNT NULL_TREE` |
| #define | `TRIP_COUNT_TO_AHEAD_RATIO 4` |
| #define | `PREFETCH_ALL HOST_WIDE_INT_M1U` |
| #define | `PREFETCH_MOD_TO_UNROLL_FACTOR_RATIO 4` |
| #define | `PREFETCH_MAX_MEM_REFS_PER_LOOP 200` |

Functions

| Return type | Signature |
|---|---|
| static void | `dump_mem_details (FILE *file, tree base, tree step, HOST_WIDE_INT delta, bool write_p)` |
| static void | `dump_mem_ref (FILE *file, struct mem_ref *ref)` |
| static struct mem_ref_group * | `find_or_create_group (struct mem_ref_group **groups, tree base, tree step)` |
| static void | `record_ref (struct mem_ref_group *group, gimple *stmt, tree mem, HOST_WIDE_INT delta, bool write_p)` |
| static void | `release_mem_refs (struct mem_ref_group *groups)` |
| static bool | `idx_analyze_ref (tree base, tree *index, void *data)` |
| static bool | `analyze_ref (class loop *loop, tree *ref_p, tree *base, tree *step, HOST_WIDE_INT *delta, gimple *stmt)` |
| static bool | `gather_memory_references_ref (class loop *loop, struct mem_ref_group **refs, tree ref, bool write_p, gimple *stmt)` |
| static struct mem_ref_group * | `gather_memory_references (class loop *loop, bool *no_other_refs, unsigned *ref_count)` |
| static void | `prune_ref_by_self_reuse (struct mem_ref *ref)` |
| static HOST_WIDE_INT | `ddown (HOST_WIDE_INT x, unsigned HOST_WIDE_INT by)` |
| static bool | `is_miss_rate_acceptable (unsigned HOST_WIDE_INT cache_line_size, HOST_WIDE_INT step, HOST_WIDE_INT delta, unsigned HOST_WIDE_INT distinct_iters, int align_unit)` |
| static void | `prune_ref_by_group_reuse (struct mem_ref *ref, struct mem_ref *by, bool by_is_before)` |
| static void | `prune_ref_by_reuse (struct mem_ref *ref, struct mem_ref *refs)` |
| static void | `prune_group_by_reuse (struct mem_ref_group *group)` |
| static void | `prune_by_reuse (struct mem_ref_group *groups)` |
| static bool | `should_issue_prefetch_p (struct mem_ref *ref)` |
| static bool | `schedule_prefetches (struct mem_ref_group *groups, unsigned unroll_factor, unsigned ahead)` |
| static bool | `nothing_to_prefetch_p (struct mem_ref_group *groups)` |
| static int | `estimate_prefetch_count (struct mem_ref_group *groups, unsigned unroll_factor)` |
| static void | `issue_prefetch_ref (struct mem_ref *ref, unsigned unroll_factor, unsigned ahead)` |
| static void | `issue_prefetches (struct mem_ref_group *groups, unsigned unroll_factor, unsigned ahead)` |
| static bool | `nontemporal_store_p (struct mem_ref *ref)` |
| static bool | `mark_nontemporal_store (struct mem_ref *ref)` |
| static void | `emit_mfence_after_loop (class loop *loop)` |
| static bool | `may_use_storent_in_loop_p (class loop *loop)` |
| static bool | `mark_nontemporal_stores (class loop *loop, struct mem_ref_group *groups)` |
| static bool | `should_unroll_loop_p (class loop *loop, class tree_niter_desc *desc, unsigned factor)` |
| static unsigned | `determine_unroll_factor (class loop *loop, struct mem_ref_group *refs, unsigned ninsns, class tree_niter_desc *desc, HOST_WIDE_INT est_niter)` |
| static unsigned | `volume_of_references (struct mem_ref_group *refs)` |
| static unsigned | `volume_of_dist_vector (lambda_vector vec, unsigned *loop_sizes, unsigned n)` |
| static void | `add_subscript_strides (tree access_fn, unsigned stride, HOST_WIDE_INT *strides, unsigned n, class loop *loop)` |
| static unsigned | `self_reuse_distance (data_reference_p dr, unsigned *loop_sizes, unsigned n, class loop *loop)` |
| static bool | `determine_loop_nest_reuse (class loop *loop, struct mem_ref_group *refs, bool no_other_refs)` |
| static bool | `trip_count_to_ahead_ratio_too_small_p (unsigned ahead, HOST_WIDE_INT est_niter)` |
| static bool | `mem_ref_count_reasonable_p (unsigned ninsns, unsigned mem_ref_count)` |
| static bool | `insn_to_prefetch_ratio_too_small_p (unsigned ninsns, unsigned prefetch_count, unsigned unroll_factor)` |
| static bool | `loop_prefetch_arrays (class loop *loop, bool &need_lc_ssa_update)` |
| unsigned int | `tree_ssa_prefetch_arrays (void)` |
| gimple_opt_pass * | `make_pass_loop_prefetch (gcc::context *ctxt)` |

Macro Definition Documentation

◆ ACCEPTABLE_MISS_RATE

| #define ACCEPTABLE_MISS_RATE 50 |

In some cases we are only able to determine that there is a certain probability that the two accesses hit the same cache line. In this case, we issue the prefetches for both of them if this probability is less than (1000 - ACCEPTABLE_MISS_RATE) per thousand.

Referenced by is_miss_rate_acceptable().

◆ FENCE_FOLLOWING_MOVNT

| #define FENCE_FOLLOWING_MOVNT NULL_TREE |

In case we have to emit a memory fence instruction after the loop that uses nontemporal stores, this defines the builtin to use.

Referenced by emit_mfence_after_loop(), mark_nontemporal_stores(), and may_use_storent_in_loop_p().

◆ HAVE_BACKWARD_PREFETCH

| #define HAVE_BACKWARD_PREFETCH 0 |

Do we have backward hardware sequential prefetching?

Referenced by prune_ref_by_self_reuse().

◆ HAVE_FORWARD_PREFETCH

| #define HAVE_FORWARD_PREFETCH 0 |

Do we have forward hardware sequential prefetching?

Referenced by prune_ref_by_self_reuse().

◆ L1_CACHE_SIZE_BYTES

| #define L1_CACHE_SIZE_BYTES ((unsigned) (param_l1_cache_size * 1024)) |

Referenced by determine_loop_nest_reuse(), self_reuse_distance(), and tree_ssa_prefetch_arrays().

◆ L2_CACHE_SIZE_BYTES

| #define L2_CACHE_SIZE_BYTES ((unsigned) (param_l2_cache_size * 1024)) |

Referenced by determine_loop_nest_reuse(), issue_prefetch_ref(), nontemporal_store_p(), and prune_ref_by_group_reuse().

◆ NONTEMPORAL_FRACTION

| #define NONTEMPORAL_FRACTION 16 |

We consider a memory access nontemporal if it is not reused sooner than after L2_CACHE_SIZE_BYTES of memory are accessed. However, we ignore accesses closer than L1_CACHE_SIZE_BYTES / NONTEMPORAL_FRACTION, so that we still use nontemporal prefetches, e.g., if a single memory location is accessed several times in a single iteration of the loop.

Referenced by determine_loop_nest_reuse(), and self_reuse_distance().
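The rule above can be sketched as a small classifier. This is a minimal sketch, not GCC's code: it assumes the reuse distances of a reference are already known as byte volumes, and the cache sizes (32 KiB L1, 512 KiB L2) are hypothetical stand-ins for the `param_l1_cache_size` and `param_l2_cache_size` values behind `L1_CACHE_SIZE_BYTES` and `L2_CACHE_SIZE_BYTES`.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical cache sizes for this sketch only.  */
#define SKETCH_L1_CACHE_SIZE_BYTES (32u * 1024)
#define SKETCH_L2_CACHE_SIZE_BYTES (512u * 1024)
#define SKETCH_NONTEMPORAL_FRACTION 16

/* REUSE_VOLUMES[i] is the number of bytes accessed between two uses of
   the same location.  Reuses closer than L1 / NONTEMPORAL_FRACTION are
   ignored; the access is nontemporal if the nearest remaining reuse is
   at least L2 bytes away (or if there is none).  */
static bool
sketch_is_nontemporal (const unsigned *reuse_volumes, size_t n)
{
  const unsigned ignore_below
    = SKETCH_L1_CACHE_SIZE_BYTES / SKETCH_NONTEMPORAL_FRACTION;
  unsigned nearest = ~0u;   /* "no relevant reuse found" */

  for (size_t i = 0; i < n; i++)
    if (reuse_volumes[i] >= ignore_below && reuse_volumes[i] < nearest)
      nearest = reuse_volumes[i];

  return nearest >= SKETCH_L2_CACHE_SIZE_BYTES;
}
```

With these numbers, a location reused 64 bytes later (for example, twice in one iteration) is ignored, so a reference whose only other reuse is 1 MiB away is still treated as nontemporal, while one reused after 4 KiB is not.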

◆ PREFETCH_ALL

| #define PREFETCH_ALL HOST_WIDE_INT_M1U |

Assigned to PREFETCH_BEFORE when all iterations are to be prefetched.

Referenced by prune_group_by_reuse(), prune_ref_by_group_reuse(), record_ref(), should_issue_prefetch_p(), and volume_of_references().

◆ PREFETCH_BLOCK

| #define PREFETCH_BLOCK param_l1_cache_line_size |

The size of the block loaded by a single prefetch. Usually, this is the same as the cache line size (at the moment, we only consider one level of the cache hierarchy).

Referenced by prune_ref_by_group_reuse(), prune_ref_by_self_reuse(), and tree_ssa_prefetch_arrays().

◆ PREFETCH_MAX_MEM_REFS_PER_LOOP

| #define PREFETCH_MAX_MEM_REFS_PER_LOOP 200 |

Some of the prefetch computations have quadratic complexity. We want to avoid huge compile times and, therefore, limit the number of memory references per loop for which we consider prefetching.

Referenced by mem_ref_count_reasonable_p().

◆ PREFETCH_MOD_TO_UNROLL_FACTOR_RATIO

| #define PREFETCH_MOD_TO_UNROLL_FACTOR_RATIO 4 |

Do not generate a prefetch if the unroll factor is significantly less than what is required by the prefetch. This is to avoid redundant prefetches. For example, when prefetch_mod is 16 and unroll_factor is 2, prefetching requires unrolling the loop 16 times, but the loop is actually unrolled twice. In this case (ratio = 8), prefetching is not likely to be beneficial.

Referenced by schedule_prefetches().
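The check described above amounts to one integer comparison. A minimal sketch, assuming the ratio is computed with integer division and that only ratios strictly greater than the constant are rejected; the function name is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define SKETCH_PREFETCH_MOD_TO_UNROLL_FACTOR_RATIO 4

/* True if a prefetch that is only needed every PREFETCH_MOD iterations
   should be dropped because the loop is unrolled far fewer times.  */
static bool
sketch_skip_for_unroll_ratio (unsigned prefetch_mod, unsigned unroll_factor)
{
  assert (unroll_factor > 0);   /* 1 means "not unrolled" */
  return prefetch_mod / unroll_factor
         > SKETCH_PREFETCH_MOD_TO_UNROLL_FACTOR_RATIO;
}
```

For the example in the text, `sketch_skip_for_unroll_ratio (16, 2)` computes a ratio of 8 and returns true, whereas unrolling four times (ratio 4) keeps the prefetch.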

◆ READ_CAN_USE_WRITE_PREFETCH

| #define READ_CAN_USE_WRITE_PREFETCH 0 |

True if read can be prefetched by a write prefetch.

Referenced by prune_ref_by_reuse(), and record_ref().

◆ TRIP_COUNT_TO_AHEAD_RATIO

| #define TRIP_COUNT_TO_AHEAD_RATIO 4 |

It is not profitable to prefetch when the trip count is not at least TRIP_COUNT_TO_AHEAD_RATIO times the prefetch ahead distance. For example, in a loop with a prefetch ahead distance of 10, supposing that TRIP_COUNT_TO_AHEAD_RATIO is equal to 4, it is profitable to prefetch when the trip count is greater than or equal to 40. In that case, 30 out of the 40 iterations will benefit from prefetching.

Referenced by trip_count_to_ahead_ratio_too_small_p().
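The profitability rule reduces to comparing the estimated trip count with TRIP_COUNT_TO_AHEAD_RATIO * ahead. In this sketch, the -1 convention for an unknown trip count is borrowed from the EST_NITER description of determine_unroll_factor, and treating an unknown count as large enough is our assumption; the function name is hypothetical.

```c
#include <stdbool.h>

#define SKETCH_TRIP_COUNT_TO_AHEAD_RATIO 4

/* EST_NITER is the estimated trip count, or -1 if unknown.  */
static bool
sketch_trip_count_too_small (unsigned ahead, long long est_niter)
{
  if (est_niter < 0)
    return false;   /* unknown: assume the loop runs long enough */
  return est_niter
         < (long long) SKETCH_TRIP_COUNT_TO_AHEAD_RATIO * ahead;
}
```

With an ahead distance of 10, a trip count of 40 passes and 39 is rejected, matching the example above.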

◆ WRITE_CAN_USE_READ_PREFETCH

| #define WRITE_CAN_USE_READ_PREFETCH 1 |

Array prefetching. Copyright (C) 2005-2026 Free Software Foundation, Inc. This file is part of GCC. GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version. GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

This pass inserts prefetch instructions to optimize cache usage during accesses to arrays in loops. It processes loops sequentially and:

1) Gathers all memory references in the single loop.

2) For each of the references, it decides when it is profitable to prefetch it. To do so, we evaluate the reuse among the accesses and determine two values: PREFETCH_BEFORE (meaning that it only makes sense to prefetch in the first PREFETCH_BEFORE iterations of the loop) and PREFETCH_MOD (meaning that it only makes sense to prefetch in the iterations of the loop that are zero modulo PREFETCH_MOD). For example (assuming the cache line size is 64 bytes, char has a size of 1 byte, and there is no hardware sequential prefetch):

       char *a;
       for (i = 0; i < max; i++)
         {
           a[255] = ...;         (0)
           a[i] = ...;           (1)
           a[i + 64] = ...;      (2)
           a[16*i] = ...;        (3)
           a[187*i] = ...;       (4)
           a[187*i + 50] = ...;  (5)
         }

   (0) obviously has PREFETCH_BEFORE 1.

   (1) has PREFETCH_BEFORE 64, since (2) accesses the same memory location 64 iterations before it, and PREFETCH_MOD 64 (since it hits the same cache line otherwise).

   (2) has PREFETCH_MOD 64.

   (3) has PREFETCH_MOD 4.

   (4) has PREFETCH_MOD 1. We do not set PREFETCH_BEFORE here, since the cache line accessed by (5) is the same with probability only 7/32.

   (5) has PREFETCH_MOD 1 as well.

   Additionally, we use data dependence analysis to determine for each reference the distance till the first reuse; this information is used to determine the temporality of the issued prefetch instruction.

3) We determine how far ahead we need to prefetch. The number of iterations needed is (time to fetch) / (time spent in one iteration of the loop). The problem is that we know neither of these values, so we just make a heuristic guess based on a (possibly target-specific) magic constant and the size of the loop.

4) We determine which of the references to prefetch. We take into account that there is a maximum number of simultaneous prefetches (provided by the machine description). We issue as many prefetches as possible while staying within this bound (starting with those with the lowest prefetch_mod, since they are responsible for most of the cache misses).

5) We unroll and peel loops so that we are able to satisfy the PREFETCH_MOD and PREFETCH_BEFORE requirements (within some bounds) and to avoid prefetching nonaccessed memory.

   TODO -- actually implement peeling.

6) We actually emit the prefetch instructions. ??? Perhaps emit the prefetch instructions with guards in cases where 5) was not sufficient to satisfy the constraints?
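Step 3's estimate, time to fetch divided by the time of one iteration, can be sketched as a rounded-up integer division. Both inputs are hypothetical values in the same unit (for instance, cycles); as the text explains, the pass itself only has a heuristic guess for each, and returning 0 for an unknown iteration time is an assumption of this sketch.

```c
/* Iterations to prefetch ahead: ceil (FETCH_TIME / ITERATION_TIME).
   Returns 0 (do not prefetch) if the iteration time is unknown.  */
static unsigned
sketch_prefetch_ahead (unsigned fetch_time, unsigned iteration_time)
{
  if (iteration_time == 0)
    return 0;
  /* Round up so the data arrives no later than it is needed.  */
  return (fetch_time + iteration_time - 1) / iteration_time;
}
```

For example, a 200-cycle fetch and a 15-cycle loop body give `sketch_prefetch_ahead (200, 15) == 14`, since 13 iterations would cover only 195 cycles.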

A cost model is implemented to determine whether or not prefetching is profitable for a given loop. The cost model has three heuristics:

1. Function trip_count_to_ahead_ratio_too_small_p implements a heuristic that determines whether or not the loop has too few iterations (compared to ahead). Prefetching is not likely to be beneficial if the trip count to ahead ratio is below a certain minimum.

2. Function mem_ref_count_reasonable_p implements a heuristic that determines whether the given loop has enough CPU ops that can be overlapped with cache-missing memory ops. If not, the loop won't benefit from prefetching. In the implementation, prefetching is not considered beneficial if the ratio between the instruction count and the mem ref count is below a certain minimum.

3. Function insn_to_prefetch_ratio_too_small_p implements a heuristic that disables prefetching in a loop if the prefetching cost is above a certain limit. The relative prefetching cost is estimated by taking the ratio between the prefetch count and the total instruction count (this models the I-cache cost).

The limits used in these heuristics are defined as parameters with reasonable default values. Machine-specific default values will be added later.

Some other TODOs:

-- write and use a more general reuse analysis (one that could also be used in other cache-aimed loop optimizations)

-- make it behave sanely together with the prefetches given by the user (now we just ignore them; at the very least we should avoid optimizing loops into which the user put their own prefetches)

-- we assume cache line size alignment of arrays; this could be improved.

Magic constants follow. These should be replaced by machine-specific numbers.

True if write can be prefetched by a read prefetch.

Referenced by prune_ref_by_reuse(), and record_ref().

Function Documentation

◆ add_subscript_strides()

static

Adds the steps of ACCESS_FN multiplied by STRIDE to the array STRIDES at the position corresponding to the loop of the step. N is the depth of the considered loop nest, and LOOP is its innermost loop.

References CHREC_LEFT, CHREC_RIGHT, get_chrec_loop(), loop_depth(), TREE_CODE, tree_fits_shwi_p(), and tree_to_shwi().

Referenced by self_reuse_distance().

◆ analyze_ref()

static

Tries to express REF_P in the form &BASE + STEP * iter + DELTA, where DELTA and STEP are integer constants and iter is the iteration counter of LOOP. The reference occurs in statement STMT. Strips nonaddressable component references from REF_P.

References DECL_FIELD_BIT_OFFSET, DECL_NONADDRESSABLE_P, ar_data::delta, for_each_index(), gcc_assert, idx_analyze_ref(), int_size_in_bytes(), ar_data::loop, NULL_TREE, ar_data::step, ar_data::stmt, TREE_CODE, TREE_INT_CST_LOW, TREE_OPERAND, TREE_TYPE, and unshare_expr().

Referenced by gather_memory_references_ref().

◆ ddown()

static
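The entry for ddown carries no description. Its name and its use in prune_ref_by_group_reuse suggest division rounding down; the following is a sketch of floor division under that assumption, not GCC's code, with a hypothetical name and plain C types in place of HOST_WIDE_INT.

```c
#include <assert.h>

/* Divide X by BY, rounding toward negative infinity (C's '/' truncates
   toward zero instead).  */
static long long
sketch_ddown (long long x, unsigned long long by)
{
  assert (by > 0);
  if (x >= 0)
    return x / (long long) by;
  /* For negative X, shifting the numerator down by BY - 1 turns
     truncation into flooring.  */
  return (x - (long long) by + 1) / (long long) by;
}
```

For example, `sketch_ddown (-5, 4)` is -2, whereas `-5 / 4` in C gives -1.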

◆ determine_loop_nest_reuse()

static

Determines the distance till the first reuse of each reference in REFS in the loop nest of LOOP. NO_OTHER_REFS is true if there are no other memory references in the loop. Return false if the analysis fails.

References data_reference::aux, chrec_dont_know, chrec_known, compute_all_dependences(), create_data_ref(), current_loops, DDR_A, DDR_ARE_DEPENDENT, DDR_B, DDR_COULD_BE_INDEPENDENT_P, DDR_DIST_VECT, DDR_NUM_DIST_VECTS, dump_file, dump_flags, estimated_stmt_executions_int(), expected_loop_iterations(), find_loop_nest(), FOR_EACH_VEC_ELT, free(), free_data_refs(), free_dependence_relations(), mem_ref::group, i, mem_ref::independent_p, loop::inner, L1_CACHE_SIZE_BYTES, L2_CACHE_SIZE_BYTES, lambda_vector_zerop(), loop_containing_stmt(), loop_outer(), loop_preheader_edge(), mem_ref::mem, loop::next, mem_ref::next, mem_ref_group::next, NONTEMPORAL_FRACTION, mem_ref_group::refs, mem_ref::reuse_distance, self_reuse_distance(), mem_ref::stmt, TDF_DETAILS, mem_ref::uid, mem_ref_group::uid, vNULL, volume_of_dist_vector(), volume_of_references(), and mem_ref::write_p.

Referenced by loop_prefetch_arrays().

◆ determine_unroll_factor()

static

Determine the factor by which to unroll LOOP, from the information contained in the list of memory references REFS. The description of the number of iterations of LOOP is stored in DESC. NINSNS is the number of insns of the LOOP. EST_NITER is the estimated number of iterations of the loop, or -1 if no estimate is available.

References least_common_multiple(), mem_ref::next, mem_ref_group::next, mem_ref::prefetch_mod, mem_ref_group::refs, should_issue_prefetch_p(), and should_unroll_loop_p().

Referenced by loop_prefetch_arrays().
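Step 5 of the overview unrolls the loop so that the PREFETCH_MOD constraints can be met, and determine_unroll_factor references least_common_multiple. One way to sketch that idea: grow the factor to the least common multiple of the PREFETCH_MOD values while it stays within an upper bound. The bound, the order in which references are visited, and all names are assumptions of this sketch.

```c
/* Greatest common divisor, used to build the least common multiple.  */
static unsigned
sketch_gcd (unsigned a, unsigned b)
{
  while (b != 0)
    {
      unsigned t = a % b;
      a = b;
      b = t;
    }
  return a;
}

/* Choose an unroll factor that is a multiple of as many PREFETCH_MODS
   as possible without exceeding UPPER_BOUND (which would come from the
   loop size and unrolling limits).  */
static unsigned
sketch_unroll_factor (const unsigned *prefetch_mods, unsigned n,
                      unsigned upper_bound)
{
  unsigned factor = 1;

  for (unsigned i = 0; i < n; i++)
    {
      unsigned mod = prefetch_mods[i];
      if (mod == 0 || mod > upper_bound)
        continue;   /* cannot be satisfied within the bound */
      unsigned lcm = factor / sketch_gcd (factor, mod) * mod;
      if (lcm <= upper_bound)
        factor = lcm;
    }
  return factor;
}
```

For PREFETCH_MOD values 1, 4 and 8 with a bound of 16 this yields 8; with values 4 and 3 and a bound of 8, the factor stays at 4 because 12 would exceed the bound.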

◆ dump_mem_details()

static

Dumps information about a memory reference with base BASE, step STEP, offset DELTA and write flag WRITE_P to FILE.

References cst_and_fits_in_hwi(), mem_ref::delta, HOST_WIDE_INT_PRINT_DEC, int_cst_value(), print_generic_expr(), TDF_SLIM, and mem_ref::write_p.

Referenced by gather_memory_references_ref(), and record_ref().

◆ dump_mem_ref()

static

Dumps information about reference REF to FILE.

References mem_ref::group, mem_ref::mem, print_generic_expr(), TDF_SLIM, mem_ref::uid, and mem_ref_group::uid.

Referenced by prune_group_by_reuse(), and record_ref().

◆ emit_mfence_after_loop()

static

Issue a memory fence instruction after LOOP.

References FENCE_FOLLOWING_MOVNT, FOR_EACH_VEC_ELT, get_loop_exit_edges(), gimple_build_call(), gsi_after_labels(), gsi_insert_before(), GSI_NEW_STMT, i, single_pred_p(), and split_loop_exit_edge().

Referenced by mark_nontemporal_stores().

◆ estimate_prefetch_count()

static

Estimate the number of prefetches in the given GROUPS. UNROLL_FACTOR is the factor by which LOOP was unrolled.

References mem_ref::next, mem_ref_group::next, mem_ref::prefetch_mod, mem_ref_group::refs, and should_issue_prefetch_p().

Referenced by loop_prefetch_arrays().

◆ find_or_create_group()

static

Finds a group with BASE and STEP in GROUPS, or creates one if it does not exist.

References mem_ref_group::base, cst_and_fits_in_hwi(), int_cst_value(), mem_ref_group::next, NULL, operand_equal_p(), mem_ref_group::refs, mem_ref_group::step, and mem_ref_group::uid.

Referenced by gather_memory_references_ref().

◆ gather_memory_references()

static

Record the suitable memory references in LOOP. NO_OTHER_REFS is set to true if there are no other memory references inside the loop.

References ECF_CONST, free(), gather_memory_references_ref(), get_loop_body_in_dom_order(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_call_flags(), gimple_vuse(), gsi_end_p(), gsi_next(), gsi_start_bb(), gsi_stmt(), i, is_gimple_call(), basic_block_def::loop_father, NULL, loop::num_nodes, REFERENCE_CLASS_P, and mem_ref_group::refs.

Referenced by loop_prefetch_arrays().

◆ gather_memory_references_ref()

static

Record a memory reference REF in the list REFS. The reference occurs in LOOP in statement STMT and is a write if WRITE_P. Returns true if the reference was recorded, false otherwise.

References analyze_ref(), mem_ref_group::base, cst_and_fits_in_hwi(), ar_data::delta, dump_file, dump_flags, dump_mem_details(), expr_invariant_in_loop_p(), find_or_create_group(), get_base_address(), loop::inner, loop_outermost(), may_be_nonaddressable_p(), NULL, NULL_TREE, print_generic_expr(), record_ref(), mem_ref_group::refs, ar_data::step, mem_ref_group::step, TDF_DETAILS, and TDF_SLIM.

Referenced by gather_memory_references().

◆ idx_analyze_ref()

Analyzes a single INDEX of a memory reference to obtain information described at analyze_ref. Callback for for_each_index.

References array_ref_element_size(), iv::base, build_int_cst(), cst_and_fits_in_hwi(), ar_data::delta, fold_build2, fold_convert, int_cst_value(), ar_data::loop, loop_containing_stmt(), NULL_TREE, simple_iv(), sizetype, ar_data::step, iv::step, ar_data::stmt, TREE_CODE, TREE_OPERAND, and TREE_TYPE.

Referenced by analyze_ref().

◆ insn_to_prefetch_ratio_too_small_p()

static

Determine whether or not the instruction to prefetch ratio in the loop is too small based on profitability considerations. NINSNS: estimated number of instructions in the loop, PREFETCH_COUNT: an estimate of the number of prefetches, UNROLL_FACTOR: the factor to unroll the loop if prefetching.

References dump_file, dump_flags, and TDF_DETAILS.

Referenced by loop_prefetch_arrays().
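A sketch of this heuristic, assuming the instruction count of the unrolled body is approximated as UNROLL_FACTOR * NINSNS and compared, per prefetch, with a minimum. The threshold of 9 is a hypothetical placeholder for the parameter the overview mentions, not a documented default, and the function name is illustrative.

```c
#include <stdbool.h>

/* Hypothetical threshold for this sketch.  */
#define SKETCH_MIN_INSN_TO_PREFETCH_RATIO 9

/* True if there are too few instructions per prefetch in the unrolled
   loop body (about UNROLL_FACTOR * NINSNS instructions for
   PREFETCH_COUNT prefetches) for the prefetches to pay for themselves.  */
static bool
sketch_insn_to_prefetch_ratio_too_small (unsigned ninsns,
                                         unsigned prefetch_count,
                                         unsigned unroll_factor)
{
  if (prefetch_count == 0)
    return false;   /* nothing would be issued */
  return unroll_factor * ninsns / prefetch_count
         < SKETCH_MIN_INSN_TO_PREFETCH_RATIO;
}
```

A 20-instruction loop with 4 prefetches and no unrolling gets a ratio of 5 and is rejected; unrolling it changes the numerator, not the per-body prefetch estimate passed in.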

◆ is_miss_rate_acceptable()

static

Given a CACHE_LINE_SIZE and two inductive memory references with a common STEP greater than CACHE_LINE_SIZE and an address difference DELTA, compute the probability that they will fall in different cache lines. Return true if the computed miss rate is not greater than the ACCEPTABLE_MISS_RATE. DISTINCT_ITERS is the number of distinct iterations after which the pattern repeats itself. ALIGN_UNIT is the unit of alignment in bytes.

References ACCEPTABLE_MISS_RATE, gcc_assert, and mem_ref_group::step.

Referenced by prune_ref_by_group_reuse().
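is_miss_rate_acceptable can be sketched as an exhaustive count over alignments and iterations, as its description suggests. This is a minimal sketch under our own assumptions (a positive STEP, and a negative DELTA treated conservatively as unacceptable), with hypothetical names. For references (4) and (5) of the overview (step 187, delta 50, 64-byte lines), only 14 of the 64 alignments keep both accesses in one line (the 7/32 from the text), so the miss rate is 50/64, about 781 per thousand, far above ACCEPTABLE_MISS_RATE, and both prefetches are kept.

```c
#include <assert.h>
#include <stdbool.h>

#define SKETCH_ACCEPTABLE_MISS_RATE 50   /* per thousand */

static bool
sketch_miss_rate_acceptable (unsigned long long cache_line_size,
                             long long step, long long delta,
                             unsigned long long distinct_iters,
                             int align_unit)
{
  long long line = (long long) cache_line_size;

  assert (align_unit > 0 && step > 0);

  /* References a whole line apart never share a line; a negative DELTA
     is outside this sketch and is treated conservatively.  */
  if (delta < 0 || delta >= line)
    return false;

  unsigned long long total
    = (cache_line_size / (unsigned) align_unit) * distinct_iters;
  unsigned long long allowed = SKETCH_ACCEPTABLE_MISS_RATE * total / 1000;
  unsigned long long misses = 0;

  /* Every alignment of the first reference within its line ...  */
  for (long long align = 0; align < line; align += align_unit)
    /* ... combined with every distinct iteration.  */
    for (unsigned long long iter = 0; iter < distinct_iters; iter++)
      {
        long long addr1 = align + step * (long long) iter;
        long long addr2 = addr1 + delta;
        if (addr1 / line != addr2 / line && ++misses > allowed)
          return false;
      }
  return true;
}
```

A small DELTA, by contrast, is acceptable: with step 128 and delta 2, only the last two of 64 alignments cross a line boundary, which is within the 3 positions that 50 per thousand allows.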

◆ issue_prefetch_ref()

static

Issue prefetches for the reference REF into the loop as decided before. AHEAD is the number of iterations to prefetch ahead. UNROLL_FACTOR is the factor by which LOOP was unrolled.

References ap, build_fold_addr_expr_with_type, build_int_cst(), builtin_decl_explicit(), cst_and_fits_in_hwi(), mem_ref::delta, dump_file, dump_flags, duplicate_ssa_name_ptr_info(), fold_build2, fold_build_pointer_plus, fold_build_pointer_plus_hwi, fold_convert, force_gimple_operand_gsi(), gimple_build_call(), mem_ref::group, gsi_for_stmt(), gsi_insert_before(), GSI_SAME_STMT, int_cst_value(), integer_one_node, integer_type_node, integer_zero_node, L2_CACHE_SIZE_BYTES, mark_ptr_info_alignment_unknown(), mem_ref::mem, NULL, prefetch, mem_ref::prefetch_mod, ptr_type_node, mem_ref::reuse_distance, size_int, sizetype, SSA_NAME_PTR_INFO, mem_ref_group::step, mem_ref::stmt, TDF_DETAILS, TREE_CODE, mem_ref::uid, mem_ref_group::uid, unshare_expr(), and mem_ref::write_p.

Referenced by issue_prefetches().
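At the source level, the effect of issuing a prefetch on a simple summation loop can be pictured with GCC's `__builtin_prefetch (addr, rw, locality)`. This hand-written sketch is not output of the pass: it assumes 8-byte doubles and 64-byte cache lines, so PREFETCH_MOD is 8, and with an unroll factor of 8 one prefetch per unrolled body suffices. The ahead distance of 16 is hypothetical, and the fixed locality of 3 ignores the reuse-distance information (reuse_distance, L2_CACHE_SIZE_BYTES) that the reference list suggests the real function consults.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical parameters of the sketch.  */
#define SKETCH_AHEAD 16   /* iterations to prefetch ahead */
#define SKETCH_UNROLL 8   /* unroll factor, equal to PREFETCH_MOD here */

static double
sketch_sum_with_prefetch (const double *a, size_t n)
{
  double sum = 0.0;
  size_t i = 0;

  for (; i + SKETCH_UNROLL <= n; i += SKETCH_UNROLL)
    {
      /* The address is formed with integer arithmetic so that no
         out-of-bounds C pointer is created near the end of the array;
         GCC documents that a data prefetch does not fault on an invalid
         address.  rw = 0 (read), locality = 3 (keep in cache).  */
      uintptr_t ahead_addr = (uintptr_t) a + (i + SKETCH_AHEAD) * sizeof *a;
      __builtin_prefetch ((const void *) ahead_addr, 0, 3);

      /* Stand-in for the SKETCH_UNROLL copies of the original body.  */
      for (size_t k = 0; k < SKETCH_UNROLL; k++)
        sum += a[i + k];
    }

  /* Remaining iterations, without prefetching.  */
  for (; i < n; i++)
    sum += a[i];

  return sum;
}
```

Prefetching only once per unrolled body is what PREFETCH_MOD buys: the other seven iterations touch the cache line that the same prefetch already requested.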

◆ issue_prefetches()

static

Issue prefetches for the references in GROUPS into the loop as decided before. AHEAD is the number of iterations to prefetch ahead. UNROLL_FACTOR is the factor by which LOOP was unrolled.

References mem_ref::issue_prefetch_p, issue_prefetch_ref(), mem_ref::next, mem_ref_group::next, and mem_ref_group::refs.

Referenced by loop_prefetch_arrays().

◆ loop_prefetch_arrays()

Issue prefetch instructions for array references in LOOP. Returns true if the LOOP was unrolled and updates NEED_LC_SSA_UPDATE if we need to update SSA for virtual operands and LC SSA for a split edge.

References determine_loop_nest_reuse(), determine_unroll_factor(), dump_file, dump_flags, eni_size_weights, eni_time_weights, estimate_prefetch_count(), estimated_stmt_executions_int(), gather_memory_references(), HOST_WIDE_INT_PRINT_DEC, insn_to_prefetch_ratio_too_small_p(), issue_prefetches(), likely_max_stmt_executions_int(), mark_nontemporal_stores(), mem_ref_count_reasonable_p(), nothing_to_prefetch_p(), optimize_loop_nest_for_size_p(), prune_by_reuse(), mem_ref_group::refs, release_mem_refs(), schedule_prefetches(), TDF_DETAILS, tree_num_loop_insns(), tree_unroll_loop(), and trip_count_to_ahead_ratio_too_small_p().

Referenced by tree_ssa_prefetch_arrays().

◆ make_pass_loop_prefetch()

| gimple_opt_pass * make_pass_loop_prefetch (gcc::context *ctxt) |

◆ mark_nontemporal_store()

If REF is a nontemporal store, we mark the corresponding modify statement and return true. Otherwise, we return false.

References dump_file, dump_flags, gimple_assign_set_nontemporal_move(), mem_ref::group, nontemporal_store_p(), mem_ref::stmt, mem_ref::storent_p, TDF_DETAILS, mem_ref::uid, and mem_ref_group::uid.

Referenced by mark_nontemporal_stores().

◆ mark_nontemporal_stores()

static

Marks nontemporal stores in LOOP. GROUPS contains the description of memory references in the loop. Returns whether we inserted any mfence call.

References emit_mfence_after_loop(), FENCE_FOLLOWING_MOVNT, mark_nontemporal_store(), may_use_storent_in_loop_p(), mem_ref::next, mem_ref_group::next, NULL_TREE, and mem_ref_group::refs.

Referenced by loop_prefetch_arrays().

◆ may_use_storent_in_loop_p()

Returns true if we can use storent in loop, false otherwise.

References cfun, EXIT_BLOCK_PTR_FOR_FN, FENCE_FOLLOWING_MOVNT, FOR_EACH_VEC_ELT, get_loop_exit_edges(), i, loop::inner, NULL, and NULL_TREE.

Referenced by mark_nontemporal_stores().

◆ mem_ref_count_reasonable_p()

static

Determine whether or not the number of memory references in the loop is reasonable based on profitability and compilation time considerations. NINSNS: estimated number of instructions in the loop, MEM_REF_COUNT: total number of memory references in the loop.

References dump_file, dump_flags, PREFETCH_MAX_MEM_REFS_PER_LOOP, and TDF_DETAILS.

Referenced by loop_prefetch_arrays().
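A sketch combining the two limits this function is described as checking: the PREFETCH_MAX_MEM_REFS_PER_LOOP cap that bounds compile time, and the minimum ratio of instructions to memory references from the overview's second heuristic. The ratio threshold of 3, the treatment of loops without memory references, and the function name are assumptions.

```c
#include <stdbool.h>

#define SKETCH_PREFETCH_MAX_MEM_REFS_PER_LOOP 200
/* Hypothetical minimum of instructions per memory reference.  */
#define SKETCH_MIN_INSN_TO_MEM_RATIO 3

static bool
sketch_mem_ref_count_reasonable (unsigned ninsns, unsigned mem_ref_count)
{
  /* No memory references: nothing to prefetch.  */
  if (mem_ref_count == 0)
    return false;

  /* Keep the quadratic parts of the analysis affordable.  */
  if (mem_ref_count > SKETCH_PREFETCH_MAX_MEM_REFS_PER_LOOP)
    return false;

  /* Enough other work to overlap with cache misses?  */
  return ninsns / mem_ref_count >= SKETCH_MIN_INSN_TO_MEM_RATIO;
}
```

A 30-instruction loop with 5 references (6 instructions each) passes; an 8-instruction loop with 4 references does not, and any loop with more than 200 references is skipped regardless of its size.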

◆ nontemporal_store_p()

Returns true if REF is a memory write for which a nontemporal store insn can be used.

References mem_ref::independent_p, L2_CACHE_SIZE_BYTES, mem_ref::mem, optab_handler(), mem_ref::reuse_distance, TREE_TYPE, TYPE_MODE, and mem_ref::write_p.

Referenced by mark_nontemporal_store().

◆ nothing_to_prefetch_p()

static

Return TRUE if no prefetch is going to be generated in the given GROUPS.

References mem_ref::next, mem_ref_group::next, mem_ref_group::refs, and should_issue_prefetch_p().

Referenced by loop_prefetch_arrays().

◆ prune_by_reuse()

static

Prune the list of prefetch candidates GROUPS using the reuse analysis.

References mem_ref_group::next, and prune_group_by_reuse().

Referenced by loop_prefetch_arrays().

◆ prune_group_by_reuse()

static

Prune the prefetch candidates in GROUP using the reuse analysis.

References dump_file, dump_flags, dump_mem_ref(), mem_ref::group, HOST_WIDE_INT_PRINT_DEC, mem_ref::next, PREFETCH_ALL, mem_ref::prefetch_before, mem_ref::prefetch_mod, prune_ref_by_reuse(), mem_ref_group::refs, and TDF_DETAILS.

Referenced by prune_by_reuse().

◆ prune_ref_by_group_reuse()

static

Prune the prefetch candidate REF using the reuse with BY. If BY_IS_BEFORE is true, BY is before REF in the loop.

References absu_hwi(), cst_and_fits_in_hwi(), ddown(), mem_ref::delta, mem_ref::group, int_cst_value(), is_miss_rate_acceptable(), L2_CACHE_SIZE_BYTES, mem_ref::mem, PREFETCH_ALL, mem_ref::prefetch_before, PREFETCH_BLOCK, mem_ref_group::step, TREE_TYPE, and TYPE_ALIGN.

Referenced by prune_ref_by_reuse().

◆ prune_ref_by_reuse()

Prune the prefetch candidate REF using the reuses with other references in REFS.

References mem_ref::next, prune_ref_by_group_reuse(), prune_ref_by_self_reuse(), READ_CAN_USE_WRITE_PREFETCH, WRITE_CAN_USE_READ_PREFETCH, and mem_ref::write_p.

Referenced by prune_group_by_reuse().

◆ prune_ref_by_self_reuse()

static

Prune the prefetch candidate REF using the self-reuse.

References cst_and_fits_in_hwi(), mem_ref::group, HAVE_BACKWARD_PREFETCH, HAVE_FORWARD_PREFETCH, int_cst_value(), mem_ref::prefetch_before, PREFETCH_BLOCK, mem_ref::prefetch_mod, and mem_ref_group::step.

Referenced by prune_ref_by_reuse().
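The entry above only names the inputs (the group's step, PREFETCH_BLOCK, and the HAVE_*_PREFETCH flags). A sketch consistent with the overview's example, not copied from GCC: an invariant address needs only one prefetch, a step larger than a block has no self-reuse, a matching hardware prefetcher is assumed to make software prefetching unnecessary after the first iteration, and otherwise PREFETCH_BLOCK / step consecutive iterations share one line. The simplified struct and all names are hypothetical.

```c
#include <stdbool.h>

#define SKETCH_PREFETCH_BLOCK 64   /* assumed cache line size in bytes */
#define SKETCH_HAVE_FORWARD_PREFETCH 0
#define SKETCH_HAVE_BACKWARD_PREFETCH 0
#define SKETCH_PREFETCH_ALL (~0ull)

struct sketch_ref
{
  long long step;                     /* bytes per iteration */
  unsigned long long prefetch_before; /* SKETCH_PREFETCH_ALL: every iteration */
  unsigned long long prefetch_mod;    /* prefetch every prefetch_mod-th iteration */
};

static void
sketch_prune_by_self_reuse (struct sketch_ref *ref)
{
  long long step = ref->step;
  bool backward = step < 0;

  if (step == 0)
    {
      /* Invariant address: prefetching it once is enough.  */
      ref->prefetch_before = 1;
      return;
    }
  if (backward)
    step = -step;

  /* Each iteration touches a new line: no self-reuse.  */
  if (step > SKETCH_PREFETCH_BLOCK)
    return;

  /* A hardware prefetcher in this direction handles the stream.  */
  if ((backward && SKETCH_HAVE_BACKWARD_PREFETCH)
      || (!backward && SKETCH_HAVE_FORWARD_PREFETCH))
    {
      ref->prefetch_before = 1;
      return;
    }

  /* PREFETCH_BLOCK / step consecutive iterations share one line.  */
  ref->prefetch_mod = SKETCH_PREFETCH_BLOCK / step;
}
```

Applied to references (0), (3) and (4) of the overview with 1-byte chars (steps 0, 16 and 187), it yields PREFETCH_BEFORE 1, PREFETCH_MOD 4 and PREFETCH_MOD 1 respectively.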

◆ record_ref()

static

Records a memory reference MEM in GROUP with offset DELTA and write status WRITE_P. The reference occurs in statement STMT.

References mem_ref_group::base, mem_ref::delta, dump_file, dump_flags, dump_mem_details(), dump_mem_ref(), mem_ref::group, mem_ref::mem, mem_ref::next, mem_ref_group::next, NULL, PREFETCH_ALL, READ_CAN_USE_WRITE_PREFETCH, mem_ref_group::refs, mem_ref_group::step, mem_ref::stmt, TDF_DETAILS, mem_ref_group::uid, WRITE_CAN_USE_READ_PREFETCH, and mem_ref::write_p.

Referenced by gather_memory_references_ref().

◆ release_mem_refs()

static

Release memory references in GROUPS.

References free(), mem_ref::next, mem_ref_group::next, and mem_ref_group::refs.

Referenced by loop_prefetch_arrays().

◆ schedule_prefetches()

static

Decide which of the prefetch candidates in GROUPS to prefetch. AHEAD is the number of iterations to prefetch ahead (which corresponds to the number of simultaneous instances of one prefetch running at a time). UNROLL_FACTOR is the factor by which the loop is going to be unrolled. Returns true if there is anything to prefetch.

References dbg_cnt(), dump_file, dump_flags, mem_ref::group, mem_ref::issue_prefetch_p, mem_ref::next, mem_ref_group::next, prefetch, mem_ref::prefetch_mod, PREFETCH_MOD_TO_UNROLL_FACTOR_RATIO, mem_ref_group::refs, should_issue_prefetch_p(), TDF_DETAILS, mem_ref::uid, and mem_ref_group::uid.

Referenced by loop_prefetch_arrays().
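A greedy sketch of step 4 of the overview: candidates are taken in order of increasing PREFETCH_MOD, and each consumes ceil(UNROLL_FACTOR / PREFETCH_MOD) prefetch instructions per unrolled body from a fixed budget. Modelling the limit on simultaneous prefetches as a per-body slot budget is our simplification; GCC's actual ordering and slot accounting may differ, and all names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical candidate record for this sketch.  */
struct sketch_cand
{
  unsigned uid;
  unsigned prefetch_mod;   /* prefetch every prefetch_mod-th iteration */
  int issue;               /* set to 1 if a prefetch is scheduled */
};

/* Order candidates by increasing prefetch_mod.  */
static int
sketch_cmp_mod (const void *pa, const void *pb)
{
  const struct sketch_cand *a = pa, *b = pb;
  return (a->prefetch_mod > b->prefetch_mod)
         - (a->prefetch_mod < b->prefetch_mod);
}

/* Select candidates greedily within SLOTS prefetch instructions per
   unrolled body; returns the number selected.  CANDS is reordered.  */
static unsigned
sketch_schedule (struct sketch_cand *cands, size_t n, unsigned unroll_factor,
                 unsigned slots)
{
  unsigned chosen = 0;

  qsort (cands, n, sizeof *cands, sketch_cmp_mod);
  for (size_t i = 0; i < n; i++)
    {
      assert (cands[i].prefetch_mod > 0);
      /* Prefetches needed per unrolled body: ceil (unroll / mod).  */
      unsigned need = (unroll_factor + cands[i].prefetch_mod - 1)
                      / cands[i].prefetch_mod;
      cands[i].issue = need <= slots;
      if (cands[i].issue)
        {
          slots -= need;
          chosen++;
        }
    }
  return chosen;
}
```

With an unroll factor of 8 and 10 slots, candidates with PREFETCH_MOD 1, 4 and 8 need 8, 2 and 1 slots; the first two fit and exhaust the budget, so the third is skipped.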

◆ self_reuse_distance()

static

Returns the volume of memory references accessed between two consecutive self-reuses of the reference DR. We consider the subscripts of DR in N loops, and LOOP_SIZES contains the volumes of accesses in each of the loops. LOOP is the innermost loop of the current loop nest.

References add_subscript_strides(), DR_ACCESS_FNS, DR_REF, FOR_EACH_VEC_ELT, free(), handled_component_p(), i, L1_CACHE_SIZE_BYTES, NONTEMPORAL_FRACTION, TREE_CODE, tree_fits_uhwi_p(), TREE_OPERAND, tree_to_uhwi(), TREE_TYPE, and TYPE_SIZE_UNIT.

Referenced by determine_loop_nest_reuse().

◆ should_issue_prefetch_p()

Returns true if we should issue prefetch for REF.

References abs_hwi(), cst_and_fits_in_hwi(), dump_file, dump_flags, mem_ref::group, HOST_WIDE_INT_PRINT_DEC, int_cst_value(), PREFETCH_ALL, mem_ref::prefetch_before, mem_ref_group::step, mem_ref::storent_p, TDF_DETAILS, mem_ref::uid, and mem_ref_group::uid.

Referenced by determine_unroll_factor(), estimate_prefetch_count(), nothing_to_prefetch_p(), and schedule_prefetches().
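A reduced sketch of this predicate, built from two fields in its reference list and our assumption about how they are used: a reference is prefetched only if it needs prefetching in every iteration (PREFETCH_BEFORE equal to PREFETCH_ALL) and is not going to be a nontemporal store (storent_p). The stride checks hinted at by abs_hwi and the group step are omitted, and the struct is hypothetical.

```c
#include <stdbool.h>

#define SKETCH_PREFETCH_ALL (~0ull)

struct sketch_candidate
{
  unsigned long long prefetch_before; /* SKETCH_PREFETCH_ALL: all iterations */
  bool storent_p;                     /* will become a nontemporal store */
};

static bool
sketch_should_issue_prefetch (const struct sketch_candidate *ref)
{
  /* Needed only in the first few iterations: not worth a prefetch.  */
  if (ref->prefetch_before != SKETCH_PREFETCH_ALL)
    return false;
  /* Nontemporal stores bypass the cache; do not prefetch them.  */
  if (ref->storent_p)
    return false;
  return true;
}
```

Under this reading, reference (0) of the overview (PREFETCH_BEFORE 1) would get no prefetch at all.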

◆ should_unroll_loop_p()

static

Determines whether we can profitably unroll LOOP FACTOR times, and if this is the case, fills in DESC with the description of the number of iterations.

References can_unroll_loop_p(), and loop::num_nodes.

Referenced by determine_unroll_factor().

◆ tree_ssa_prefetch_arrays()

| unsigned int tree_ssa_prefetch_arrays (void) |

Issue prefetch instructions for array references in loops.

References add_builtin_function(), build_function_type_list(), BUILT_IN_NORMAL, builtin_decl_explicit_p(), cfun, const_ptr_type_node, DECL_IS_NOVOPS, dump_file, dump_flags, free_original_copy_tables(), initialize_original_copy_tables(), L1_CACHE_SIZE_BYTES, LI_FROM_INNERMOST, loop_prefetch_arrays(), NULL, NULL_TREE, loop::num, PREFETCH_BLOCK, rewrite_into_loop_closed_ssa(), scev_reset(), set_builtin_decl(), targetm, TDF_DETAILS, TODO_cleanup_cfg, TODO_update_ssa_only_virtuals, and void_type_node.

◆ trip_count_to_ahead_ratio_too_small_p()

static

Determine whether or not the trip count to ahead ratio is too small based on profitability considerations. AHEAD: the iteration ahead distance, EST_NITER: the estimated trip count.

References dump_file, dump_flags, TDF_DETAILS, and TRIP_COUNT_TO_AHEAD_RATIO.

Referenced by loop_prefetch_arrays().

◆ volume_of_dist_vector()

static

Returns the volume of memory references accessed across VEC iterations of loops, whose sizes are described in the LOOP_SIZES array. N is the number of the loops in the nest (length of VEC and LOOP_SIZES vectors).

References gcc_assert, and i.

Referenced by determine_loop_nest_reuse().
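A sketch of one simple reading of this description: find the outermost loop in which the distance vector is nonzero and multiply that distance by the volume of one iteration of that loop, ignoring inner components. We assume that VEC and LOOP_SIZES are ordered from the outermost loop inward, that LOOP_SIZES[i] is the byte volume of one iteration of loop i, and that VEC is lexicographically positive; the name is hypothetical.

```c
#include <assert.h>

static unsigned
sketch_volume_of_dist_vector (const int *vec, const unsigned *loop_sizes,
                              unsigned n)
{
  unsigned i;

  /* Find the outermost loop that carries the reuse.  */
  for (i = 0; i < n; i++)
    if (vec[i] != 0)
      break;

  if (i == n)
    return 0;   /* reuse within the same iteration */

  assert (vec[i] > 0);   /* expect a lexicographically positive vector */
  /* Inner components are ignored; their contribution is assumed small.  */
  return loop_sizes[i] * (unsigned) vec[i];
}
```

For loop sizes {4096, 64}, a distance vector (0, 2) gives 128 bytes and (1, -3) gives 4096 bytes.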

◆ volume_of_references()

static

Returns the total volume of the memory references REFS, taking into account reuses in the innermost loop and cache line size. TODO -- we should also take into account reuses across the iterations of the loops in the loop nest.

References mem_ref::next, mem_ref_group::next, PREFETCH_ALL, mem_ref::prefetch_before, mem_ref::prefetch_mod, and mem_ref_group::refs.

Referenced by determine_loop_nest_reuse().
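A sketch of the cache-line-aware part of this computation, under our own reading of it: a reference prefetched in every iteration pulls in a new cache line only every PREFETCH_MOD iterations, so it contributes cache_line / PREFETCH_MOD bytes per iteration, and references needed only in the first few iterations are not counted. The 64-byte line, the struct, and the name are assumptions.

```c
#include <assert.h>

#define SKETCH_PREFETCH_ALL (~0ull)
#define SKETCH_CACHE_LINE 64u   /* assumed cache line size in bytes */

struct sketch_vol_ref
{
  unsigned long long prefetch_before; /* SKETCH_PREFETCH_ALL: every iteration */
  unsigned prefetch_mod;
};

static unsigned
sketch_volume_of_references (const struct sketch_vol_ref *refs, unsigned n)
{
  unsigned volume = 0;

  for (unsigned i = 0; i < n; i++)
    {
      /* Needed only in the first few iterations: ignore.  */
      if (refs[i].prefetch_before != SKETCH_PREFETCH_ALL)
        continue;
      assert (refs[i].prefetch_mod > 0);
      /* A new line every prefetch_mod iterations.  */
      volume += SKETCH_CACHE_LINE / refs[i].prefetch_mod;
    }
  return volume;
}
```

For the six references of the overview's example, this gives 1 + 16 + 64 + 64 = 145 bytes per iteration: (0) and (1) are skipped because of their PREFETCH_BEFORE values.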