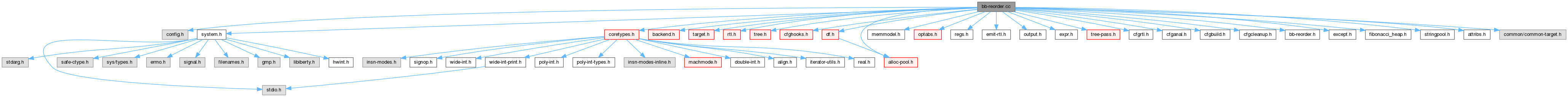

#include "config.h"#include "system.h"#include "coretypes.h"#include "backend.h"#include "target.h"#include "rtl.h"#include "tree.h"#include "cfghooks.h"#include "df.h"#include "memmodel.h"#include "optabs.h"#include "regs.h"#include "emit-rtl.h"#include "output.h"#include "expr.h"#include "tree-pass.h"#include "cfgrtl.h"#include "cfganal.h"#include "cfgbuild.h"#include "cfgcleanup.h"#include "bb-reorder.h"#include "except.h"#include "alloc-pool.h"#include "fibonacci_heap.h"#include "stringpool.h"#include "attribs.h"#include "common/common-target.h"

Data Structures | |

| struct | bbro_basic_block_data |

| struct | trace |

Macros | |

| #define | N_ROUNDS 5 |

| #define | uncond_jump_length (this_target_bb_reorder->x_uncond_jump_length) |

| #define | DUPLICATION_THRESHOLD 100 |

| #define | GET_ARRAY_SIZE(X) |

| #define | FREE(P) |

Typedefs | |

| typedef fibonacci_heap< long, basic_block_def > | bb_heap_t |

| typedef fibonacci_node< long, basic_block_def > | bb_heap_node_t |

Variables | |

| struct target_bb_reorder | default_target_bb_reorder |

| static const int | branch_threshold [N_ROUNDS] = {400, 200, 100, 0, 0} |

| static const int | exec_threshold [N_ROUNDS] = {500, 200, 50, 0, 0} |

| static int | array_size |

| static bbro_basic_block_data * | bbd |

| static profile_count | max_entry_count |

Macro Definition Documentation

◆ DUPLICATION_THRESHOLD

| #define DUPLICATION_THRESHOLD 100 |

If edge count is lower than DUPLICATION_THRESHOLD per mille of entry block the edge destination is not duplicated while connecting traces.

Referenced by connect_traces().

◆ FREE

| #define FREE | ( | P | ) |

Free the memory and set the pointer to NULL.

Referenced by connect_traces(), and reorder_basic_blocks_software_trace_cache().

◆ GET_ARRAY_SIZE

| #define GET_ARRAY_SIZE | ( | X | ) |

To avoid frequent reallocation the size of arrays is greater than needed, the number of elements is (not less than) 1.25 * size_wanted.

Referenced by copy_bb(), and reorder_basic_blocks_software_trace_cache().

◆ N_ROUNDS

| #define N_ROUNDS 5 |

Basic block reordering routines for the GNU compiler. Copyright (C) 2000-2026 Free Software Foundation, Inc. This file is part of GCC. GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version. GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

This file contains the "reorder blocks" pass, which changes the control flow of a function to encounter fewer branches; the "partition blocks" pass, which divides the basic blocks into "hot" and "cold" partitions, which are kept separate; and the "duplicate computed gotos" pass, which duplicates blocks ending in an indirect jump. There are two algorithms for "reorder blocks": the "simple" algorithm, which just rearranges blocks, trying to minimize the number of executed unconditional branches; and the "software trace cache" algorithm, which also copies code, and in general tries a lot harder to have long linear pieces of machine code executed. This algorithm is described next.

This (greedy) algorithm constructs traces in several rounds. The construction starts from "seeds". The seed for the first round is the entry point of the function. When there are more than one seed, the one with the lowest key in the heap is selected first (see bb_to_key). Then the algorithm repeatedly adds the most probable successor to the end of a trace. Finally it connects the traces. There are two parameters: Branch Threshold and Exec Threshold. If the probability of an edge to a successor of the current basic block is lower than Branch Threshold or its count is lower than Exec Threshold, then the successor will be the seed in one of the next rounds. Each round has these parameters lower than the previous one. The last round has to have these parameters set to zero so that the remaining blocks are picked up. The algorithm selects the most probable successor from all unvisited successors and successors that have been added to this trace. The other successors (that has not been "sent" to the next round) will be other seeds for this round and the secondary traces will start from them. If the successor has not been visited in this trace, it is added to the trace (however, there is some heuristic for simple branches). If the successor has been visited in this trace, a loop has been found. If the loop has many iterations, the loop is rotated so that the source block of the most probable edge going out of the loop is the last block of the trace. If the loop has few iterations and there is no edge from the last block of the loop going out of the loop, the loop header is duplicated. When connecting traces, the algorithm first checks whether there is an edge from the last block of a trace to the first block of another trace. When there are still some unconnected traces it checks whether there exists a basic block BB such that BB is a successor of the last block of a trace and BB is a predecessor of the first block of another trace. In this case, BB is duplicated, added at the end of the first trace and the traces are connected through it. The rest of traces are simply connected so there will be a jump to the beginning of the rest of traces. The above description is for the full algorithm, which is used when the function is optimized for speed. When the function is optimized for size, in order to reduce long jumps and connect more fallthru edges, the algorithm is modified as follows: (1) Break long traces to short ones. A trace is broken at a block that has multiple predecessors/ successors during trace discovery. When connecting traces, only connect Trace n with Trace n + 1. This change reduces most long jumps compared with the above algorithm. (2) Ignore the edge probability and count for fallthru edges. (3) Keep the original order of blocks when there is no chance to fall through. We rely on the results of cfg_cleanup. To implement the change for code size optimization, block's index is selected as the key and all traces are found in one round. References: "Software Trace Cache" A. Ramirez, J. Larriba-Pey, C. Navarro, J. Torrellas and M. Valero; 1999 http://citeseer.nj.nec.com/15361.html

The number of rounds. In most cases there will only be 4 rounds, but when partitioning hot and cold basic blocks into separate sections of the object file there will be an extra round.

Referenced by find_traces().

◆ uncond_jump_length

| #define uncond_jump_length (this_target_bb_reorder->x_uncond_jump_length) |

Referenced by copy_bb_p(), duplicate_computed_gotos(), and reorder_basic_blocks_software_trace_cache().

Typedef Documentation

◆ bb_heap_node_t

| typedef fibonacci_node<long, basic_block_def> bb_heap_node_t |

◆ bb_heap_t

| typedef fibonacci_heap<long, basic_block_def> bb_heap_t |

Function Documentation

◆ add_labels_and_missing_jumps()

If any destination of a crossing edge does not have a label, add label; Convert any easy fall-through crossing edges to unconditional jumps.

References BB_END, block_label(), cfun, control_flow_insn_p(), emit_barrier_after_bb(), emit_jump_insn_after(), ENTRY_BLOCK_PTR_FOR_FN, EXIT_BLOCK_PTR_FOR_FN, FOR_EACH_VEC_ELT, gcc_assert, i, JUMP_LABEL, LABEL_NUSES, single_succ_p(), and targetm.

◆ bb_to_key()

|

static |

Compute and return the key (for the heap) of the basic block BB.

References BB_FREQ_MAX, BB_PARTITION, bbd, cfun, basic_block_def::count, EDGE_FREQUENCY, ENTRY_BLOCK_PTR_FOR_FN, FOR_EACH_EDGE, basic_block_def::index, optimize_function_for_size_p(), basic_block_def::preds, probably_never_executed_bb_p(), and profile_count::to_frequency().

Referenced by find_traces(), and find_traces_1_round().

◆ bb_visited_trace()

|

static |

Return the trace number in which BB was visited.

References array_size, bbd, gcc_assert, and basic_block_def::index.

Referenced by copy_bb(), find_traces_1_round(), and rotate_loop().

◆ better_edge_p()

|

static |

Return true when the edge E from basic block BB is better than the temporary best edge (details are in function). The probability of edge E is PROB. The count of the successor is COUNT. The current best probability is BEST_PROB, the best count is BEST_COUNT. The edge is considered to be equivalent when PROB does not differ much from BEST_PROB; similarly for count.

References cfun, count, profile_probability::guessed_never(), profile_count::initialized_p(), profile_probability::initialized_p(), and optimize_function_for_size_p().

Referenced by find_traces_1_round().

◆ connect_better_edge_p()

|

static |

Return true when the edge E is better than the temporary best edge CUR_BEST_EDGE. If SRC_INDEX_P is true, the function compares the src bb of E and CUR_BEST_EDGE; otherwise it will compare the dest bb. BEST_LEN is the trace length of src (or dest) bb in CUR_BEST_EDGE. TRACES record the information about traces. When optimizing for size, the edge with smaller index is better. When optimizing for speed, the edge with bigger probability or longer trace is better.

References bbd, cfun, trace::length, and optimize_function_for_size_p().

Referenced by connect_traces().

◆ connect_traces()

|

static |

Connect traces in array TRACES, N_TRACES is the count of traces.

References basic_block_def::aux, BB_PARTITION, bbd, cfun, connect_better_edge_p(), copy_bb(), copy_bb_p(), count, crtl, current_pass, dump_file, DUPLICATION_THRESHOLD, EDGE_COMPLEX, EDGE_COUNT, ENTRY_BLOCK_PTR_FOR_FN, EXIT_BLOCK_PTR_FOR_FN, trace::first, FOR_EACH_EDGE, fputc(), FREE, gcc_assert, i, basic_block_def::index, INT_MAX, last, trace::last, trace::length, max_entry_count, NULL, optimize_edge_for_speed_p(), optimize_function_for_size_p(), basic_block_def::preds, and si.

◆ copy_bb()

|

static |

Create a duplicate of the basic block OLD_BB and redirect edge E to it, add it to trace after BB, mark OLD_BB visited and update pass' data structures (TRACE is a number of trace which OLD_BB is duplicated to).

References array_size, basic_block_def::aux, BB_COPY_PARTITION, bb_visited_trace(), bbd, cfun, dump_file, duplicate_block(), gcc_assert, GET_ARRAY_SIZE, i, basic_block_def::index, last_basic_block_for_fn, mark_bb_visited(), MAX, and NULL.

Referenced by connect_traces(), find_traces_1_round(), and rotate_loop().

◆ copy_bb_p()

|

static |

Return true when BB can and should be copied. CODE_MAY_GROW is true when code size is allowed to grow by duplication.

References can_duplicate_block_p(), dump_file, EDGE_COUNT, FOR_BB_INSNS, get_attr_min_length(), basic_block_def::index, INSN_P, optimize_bb_for_speed_p(), basic_block_def::preds, basic_block_def::succs, and uncond_jump_length.

Referenced by connect_traces(), find_traces_1_round(), and rotate_loop().

◆ create_eh_forwarder_block()

|

static |

Create a forwarder block to OLD_BB starting with NEW_LABEL and in the other partition wrt OLD_BB.

References basic_block_def::aux, BB_PARTITION, BB_SET_PARTITION, block_label(), cfun, basic_block_def::count, create_basic_block(), emit_barrier_after_bb(), emit_jump_insn(), emit_label(), EXIT_BLOCK_PTR_FOR_FN, JUMP_LABEL, make_single_succ_edge(), split_block_after_labels(), and targetm.

Referenced by dw2_fix_up_crossing_landing_pad(), and sjlj_fix_up_crossing_landing_pad().

◆ duplicate_computed_gotos()

|

static |

Duplicate the blocks containing computed gotos. This basically unfactors computed gotos that were factored early on in the compilation process to speed up edge based data flow. We used to not unfactor them again, which can seriously pessimize code with many computed jumps in the source code, such as interpreters. See e.g. PR15242.

References BB_END, can_duplicate_block_p(), changed, cleanup_cfg(), computed_jump_p(), fixup_partitions(), FOR_EACH_BB_FN, get_uncond_jump_length(), maybe_duplicate_computed_goto(), and uncond_jump_length.

◆ dw2_fix_up_crossing_landing_pad()

|

static |

The landing pad OLD_LP, in block OLD_BB, has edges from both partitions. Add a new landing pad that will just jump to the old one and split the edges so that no EH edge crosses partitions.

References BB_END, BB_PARTITION, create_eh_forwarder_block(), ei_next(), ei_safe_edge(), ei_start, find_reg_note(), gcc_assert, gcc_checking_assert, gen_eh_landing_pad(), GEN_INT, gen_label_rtx(), eh_landing_pad_d::index, INTVAL, LABEL_PRESERVE_P, eh_landing_pad_d::landing_pad, NULL, NULL_RTX, eh_landing_pad_d::post_landing_pad, basic_block_def::preds, redirect_edge_succ(), eh_landing_pad_d::region, and XEXP.

Referenced by find_rarely_executed_basic_blocks_and_crossing_edges().

◆ edge_order()

|

static |

Order edges by execution frequency, higher first.

Return:

1 iff frequency (VE1) < frequency (VE2)

0 iff frequency (VE1) == frequency (VE2)

-1 iff frequency (VE1) > frequency (VE2)

References profile_count::initialized_p(), MAX, and profile_count::to_gcov_type().

Referenced by reorder_basic_blocks_simple().

◆ find_jump_block()

|

static |

This function checks the destination block of a "crossing jump" to see if it has any crossing predecessors that begin with a code label and end with an unconditional jump. If so, it returns that predecessor block. (This is to avoid creating lots of new basic blocks that all contain unconditional jumps to the same destination).

References any_condjump_p(), BB_END, BB_HEAD, FOR_EACH_EDGE, INSN_P, JUMP_P, LABEL_P, NEXT_INSN(), NULL, and basic_block_def::preds.

Referenced by fix_crossing_conditional_branches().

◆ find_rarely_executed_basic_blocks_and_crossing_edges()

Find the basic blocks that are rarely executed and need to be moved to a separate section of the .o file (to cut down on paging and improve cache locality). Return a vector of all edges that cross.

References BB_PARTITION, BB_SET_PARTITION, BLOCK_FOR_INSN(), cfun, basic_block_def::count, dw2_fix_up_crossing_landing_pad(), ENTRY_BLOCK_PTR_FOR_FN, EXIT_BLOCK_PTR_FOR_FN, find_bbs_reachable_by_hot_paths(), FOR_EACH_BB_FN, FOR_EACH_EDGE, FOR_EACH_VEC_ELT, gcc_assert, gcc_checking_assert, global_options, i, LABEL_P, eh_landing_pad_d::landing_pad, mark_dfs_back_edges(), NULL, NULL_RTX, profile_count::precise_p(), basic_block_def::preds, probably_never_executed_bb_p(), probably_never_executed_edge_p(), propagate_unlikely_bbs_forward(), sanitize_hot_paths(), sjlj_fix_up_crossing_landing_pad(), basic_block_def::succs, UI_SJLJ, and vNULL.

◆ find_traces()

|

static |

Find the traces for Software Trace Cache. Chain each trace through RBI()->next. Store the number of traces to N_TRACES and description of traces to TRACES.

References basic_block_def::aux, bb_to_key(), bbd, branch_threshold, cfun, basic_block_def::count, profile_count::dump(), dump_file, ENTRY_BLOCK_PTR_FOR_FN, exec_threshold, find_traces_1_round(), FOR_EACH_EDGE, i, basic_block_def::index, fibonacci_heap< K, V >::insert(), trace::last, LONG_MIN, max_entry_count, N_ROUNDS, REG_BR_PROB_BASE, and profile_count::zero().

Referenced by reorder_basic_blocks_software_trace_cache().

◆ find_traces_1_round()

|

static |

Local function prototypes.

One round of finding traces. Find traces for BRANCH_TH and EXEC_TH i.e. do not include basic blocks whose probability is lower than BRANCH_TH or whose count is lower than EXEC_TH into traces (or whose count is lower than COUNT_TH). Store the new traces into TRACES and modify the number of traces *N_TRACES. Set the round (which the trace belongs to) to ROUND. The function expects starting basic blocks to be in *HEAP and will delete *HEAP and store starting points for the next round into new *HEAP.

References basic_block_def::aux, BB_PARTITION, bb_to_key(), bb_visited_trace(), bbd, better_edge_p(), block_ends_with_call_p(), cfun, copy_bb(), copy_bb_p(), count, dump_file, EDGE_COMPLEX, EDGE_COUNT, EDGE_FREQUENCY, ENTRY_BLOCK_PTR_FOR_FN, EXIT_BLOCK_PTR_FOR_FN, trace::first, FOR_EACH_EDGE, gcc_assert, basic_block_def::index, profile_probability::initialized_p(), fibonacci_heap< K, V >::insert(), trace::last, trace::length, LONG_MIN, mark_bb_visited(), NULL, optimize_edge_for_speed_p(), optimize_function_for_size_p(), push_to_next_round_p(), rotate_loop(), trace::round, single_pred_p(), single_succ(), single_succ_edge(), single_succ_p(), basic_block_def::succs, profile_probability::to_reg_br_prob_base(), profile_count::uninitialized(), and profile_probability::uninitialized().

Referenced by find_traces().

◆ fix_crossing_conditional_branches()

|

static |

Find all BB's with conditional jumps that are crossing edges; insert a new bb and make the conditional jump branch to the new bb instead (make the new bb same color so conditional branch won't be a 'crossing' edge). Insert an unconditional jump from the new bb to the original destination of the conditional jump.

References any_condjump_p(), as_a(), basic_block_def::aux, BB_COPY_PARTITION, BB_END, block_label(), cfun, create_basic_block(), EDGE_COUNT, EDGE_SUCC, emit_barrier_after_bb(), emit_jump_insn(), emit_label(), EXIT_BLOCK_PTR_FOR_FN, find_jump_block(), FOR_EACH_BB_FN, gcc_assert, gen_label_rtx(), GET_CODE, rtx_jump_insn::jump_target(), make_single_succ_edge(), NULL, NULL_RTX, PATTERN(), basic_block_def::prev_bb, redirect_edge_succ(), redirect_jump(), SET, rtx_jump_insn::set_jump_target(), SET_SRC, basic_block_def::succs, targetm, XEXP, and XVECEXP.

◆ fix_crossing_unconditional_branches()

|

static |

Find any unconditional branches that cross between hot and cold sections. Convert them into indirect jumps instead.

References any_condjump_p(), asm_noperands(), BARRIER_P, BB_END, BLOCK_FOR_INSN(), cfun, computed_jump_p(), delete_insn(), EDGE_COUNT, EDGE_SUCC, emit_indirect_jump(), emit_insn_before(), emit_move_insn(), end_sequence(), FOR_EACH_BB_FN, gcc_assert, gen_reg_rtx(), JUMP_LABEL, JUMP_P, LABEL_NUSES, NEXT_INSN(), NULL, PATTERN(), start_sequence(), basic_block_def::succs, and tablejump_p().

◆ fix_up_fall_thru_edges()

|

static |

Find any bb's where the fall-through edge is a crossing edge (note that these bb's must also contain a conditional jump or end with a call instruction; we've already dealt with fall-through edges for blocks that didn't have a conditional jump or didn't end with call instruction in the call to add_labels_and_missing_jumps). Convert the fall-through edge to non-crossing edge by inserting a new bb to fall-through into. The new bb will contain an unconditional jump (crossing edge) to the original fall through destination.

References asm_noperands(), basic_block_def::aux, BB_END, BB_PARTITION, block_label(), cfun, dyn_cast(), EDGE_COUNT, EDGE_SUCC, emit_barrier_after_bb(), EXIT_BLOCK_PTR_FOR_FN, find_edge(), find_fallthru_edge(), FOR_EACH_BB_FN, force_nonfallthru(), gcc_assert, gcc_checking_assert, invert_jump(), JUMP_P, NULL, PATTERN(), single_succ_edge(), basic_block_def::succs, and update_br_prob_note().

◆ get_uncond_jump_length()

| int get_uncond_jump_length | ( | void | ) |

Return the length of unconditional jump instruction.

References emit_jump_insn(), emit_label(), end_sequence(), gcc_assert, gen_label_rtx(), get_attr_min_length(), INT_MAX, trace::length, start_sequence(), and targetm.

Referenced by duplicate_computed_gotos(), reorder_basic_blocks_software_trace_cache(), and try_shrink_wrapping().

◆ insert_section_boundary_note()

| void insert_section_boundary_note | ( | void | ) |

Determine which partition the first basic block in the function belongs to, then find the first basic block in the current function that belongs to a different section, and insert a NOTE_INSN_SWITCH_TEXT_SECTIONS note immediately before it in the instruction stream. When writing out the assembly code, encountering this note will make the compiler switch between the hot and cold text sections.

References BB_HEAD, BB_PARTITION, cfun, crtl, emit_note_before(), FOR_EACH_BB_FN, and gcc_assert.

◆ make_pass_duplicate_computed_gotos()

| rtl_opt_pass * make_pass_duplicate_computed_gotos | ( | gcc::context * | ctxt | ) |

◆ make_pass_partition_blocks()

| rtl_opt_pass * make_pass_partition_blocks | ( | gcc::context * | ctxt | ) |

◆ make_pass_reorder_blocks()

| rtl_opt_pass * make_pass_reorder_blocks | ( | gcc::context * | ctxt | ) |

◆ mark_bb_visited()

|

static |

This function marks BB that it was visited in trace number TRACE.

References bbd, basic_block_def::index, and NULL.

Referenced by copy_bb(), and find_traces_1_round().

◆ maybe_duplicate_computed_goto()

|

static |

Duplicate a block (that we already know ends in a computed jump) into its predecessors, where possible. Return whether anything is changed.

References BB_END, BB_HEAD, can_duplicate_block_p(), changed, CROSSING_JUMP_P, dump_file, duplicate_block(), EDGE_COMPLEX, ei_next(), ei_safe_edge(), ei_start, emit_barrier_after_bb(), FOR_BB_INSNS, get_attr_min_length(), basic_block_def::index, INSN_P, JUMP_P, maybe_duplicate_computed_goto(), merge_blocks(), NULL, NUM_FIXED_BLOCKS, basic_block_def::preds, reorder_insns_nobb(), simplejump_p(), and single_succ_p().

Referenced by duplicate_computed_gotos(), and maybe_duplicate_computed_goto().

◆ push_to_next_round_p()

|

static |

Check to see if bb should be pushed into the next round of trace collections or not. Reasons for pushing the block forward are 1). If the block is cold, we are doing partitioning, and there will be another round (cold partition blocks are not supposed to be collected into traces until the very last round); or 2). There will be another round, and the basic block is not "hot enough" for the current round of trace collection.

References cfun, basic_block_def::count, and probably_never_executed_bb_p().

Referenced by find_traces_1_round().

◆ reorder_basic_blocks()

|

static |

Reorder basic blocks. The main entry point to this file.

References cfun, crtl, current_ir_type(), dump_file, dump_flags, dump_flow_info(), dump_reg_info(), gcc_assert, gcc_unreachable, IR_RTL_CFGLAYOUT, mark_dfs_back_edges(), n_basic_blocks_for_fn, NUM_FIXED_BLOCKS, relink_block_chain(), reorder_basic_blocks_simple(), reorder_basic_blocks_software_trace_cache(), REORDER_BLOCKS_ALGORITHM_SIMPLE, REORDER_BLOCKS_ALGORITHM_STC, set_edge_can_fallthru_flag(), and TDF_DETAILS.

◆ reorder_basic_blocks_simple()

|

static |

Reorder basic blocks using the "simple" algorithm. This tries to maximize the dynamic number of branches that are fallthrough, without copying instructions. The algorithm is greedy, looking at the most frequently executed branch first.

References any_condjump_p(), basic_block_def::aux, BB_END, BB_PARTITION, BB_VISITED, cfun, computed_jump_p(), dump_file, edge_order(), EDGE_SUCC, end(), ENTRY_BLOCK_PTR_FOR_FN, EXIT_BLOCK_PTR_FOR_FN, extract_asm_operands(), basic_block_def::flags, FOR_ALL_BB_FN, FOR_EACH_BB_FN, force_nonfallthru(), gcc_stablesort(), JUMP_P, n_basic_blocks_for_fn, NULL, optimize_function_for_speed_p(), single_succ_p(), and tablejump_p().

Referenced by reorder_basic_blocks().

◆ reorder_basic_blocks_software_trace_cache()

|

static |

Reorder basic blocks using the software trace cache (STC) algorithm.

References array_size, bbd, cfun, connect_traces(), dump_file, find_traces(), FREE, GET_ARRAY_SIZE, get_uncond_jump_length(), i, last_basic_block_for_fn, n_basic_blocks_for_fn, NULL, and uncond_jump_length.

Referenced by reorder_basic_blocks().

◆ rotate_loop()

|

static |

Rotate loop whose back edge is BACK_EDGE in the tail of trace TRACE (with sequential number TRACE_N).

References any_condjump_p(), basic_block_def::aux, BB_END, bb_visited_trace(), bbd, cfun, copy_bb(), copy_bb_p(), CROSSING_JUMP_P, EDGE_COMPLEX, EXIT_BLOCK_PTR_FOR_FN, trace::first, FOR_EACH_EDGE, header, NULL, single_succ(), single_succ_edge(), single_succ_p(), basic_block_def::succs, and profile_count::uninitialized().

Referenced by anti_adjust_stack_and_probe_stack_clash(), and find_traces_1_round().

◆ sanitize_hot_paths()

|

static |

Ensure that all hot bbs are included in a hot path through the procedure. This is done by calling this function twice, once with WALK_UP true (to look for paths from the entry to hot bbs) and once with WALK_UP false (to look for paths from hot bbs to the exit). Returns the updated value of COLD_BB_COUNT and adds newly-hot bbs to BBS_IN_HOT_PARTITION.

References BB_PARTITION, BB_SET_PARTITION, dump_file, FOR_EACH_EDGE, gcc_checking_assert, basic_block_def::index, profile_count::initialized_p(), profile_probability::initialized_p(), profile_probability::never(), basic_block_def::preds, basic_block_def::succs, profile_count::uninitialized(), profile_probability::uninitialized(), and profile_count::zero().

Referenced by find_rarely_executed_basic_blocks_and_crossing_edges().

◆ set_edge_can_fallthru_flag()

|

static |

Set the flag EDGE_CAN_FALLTHRU for edges that can be fallthru.

References any_condjump_p(), as_a(), BB_END, cfun, EDGE_COUNT, EDGE_SUCC, FOR_EACH_BB_FN, FOR_EACH_EDGE, invert_jump(), JUMP_LABEL, and basic_block_def::succs.

Referenced by reorder_basic_blocks().

◆ sjlj_fix_up_crossing_landing_pad()

|

static |

The common landing pad in block OLD_BB has edges from both partitions. Add a new landing pad that will just jump to the old one and split the edges so that no EH edge crosses partitions.

References alloca, BB_END, BB_PARTITION, cfun, create_eh_forwarder_block(), ei_next(), ei_safe_edge(), ei_start, find_reg_note(), gcc_assert, gen_eh_landing_pad(), GEN_INT, gen_label_rtx(), eh_landing_pad_d::index, INTVAL, LABEL_PRESERVE_P, eh_landing_pad_d::landing_pad, NULL, NULL_RTX, eh_landing_pad_d::post_landing_pad, basic_block_def::preds, redirect_edge_succ(), eh_landing_pad_d::region, and XEXP.

Referenced by find_rarely_executed_basic_blocks_and_crossing_edges().

◆ update_crossing_jump_flags()

|

static |

Update CROSSING_JUMP_P flags on all jump insns.

References BB_END, cfun, CROSSING_JUMP_P, FOR_EACH_BB_FN, FOR_EACH_EDGE, JUMP_P, and basic_block_def::succs.

Variable Documentation

◆ array_size

|

static |

The current size of the following dynamic array.

Referenced by base_bitmap_view< T, Traits >::base_bitmap_view(), bb_visited_trace(), copy_bb(), get_vec_alignment_for_array_type(), getbyterep(), and reorder_basic_blocks_software_trace_cache().

◆ bbd

|

static |

The array which holds needed information for basic blocks.

Referenced by bb_to_key(), bb_visited_trace(), connect_better_edge_p(), connect_traces(), copy_bb(), find_traces(), find_traces_1_round(), tree_switch_conversion::switch_conversion::gen_inbound_check(), mark_bb_visited(), tree_switch_conversion::switch_conversion::prune_bbs(), reorder_basic_blocks_software_trace_cache(), and rotate_loop().

◆ branch_threshold

|

static |

Branch thresholds in thousandths (per mille) of the REG_BR_PROB_BASE.

Referenced by find_traces().

◆ default_target_bb_reorder

| struct target_bb_reorder default_target_bb_reorder |

◆ exec_threshold

|

static |

Exec thresholds in thousandths (per mille) of the count of bb 0.

Referenced by find_traces().

◆ max_entry_count

|

static |

Maximum count of one of the entry blocks.

Referenced by connect_traces(), and find_traces().