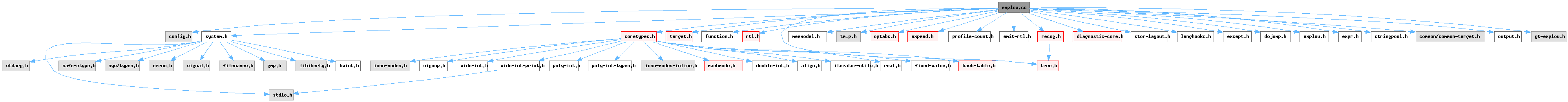

#include "config.h"
#include "system.h"
#include "coretypes.h"
#include "target.h"
#include "function.h"
#include "rtl.h"
#include "tree.h"
#include "memmodel.h"
#include "tm_p.h"
#include "optabs.h"
#include "expmed.h"
#include "profile-count.h"
#include "emit-rtl.h"
#include "recog.h"
#include "diagnostic-core.h"
#include "stor-layout.h"
#include "langhooks.h"
#include "except.h"
#include "dojump.h"
#include "explow.h"
#include "expr.h"
#include "stringpool.h"
#include "common/common-target.h"
#include "output.h"
#include "gt-explow.h"

| Macros | |
|---|---|
| #define | PROBE_INTERVAL (1 << STACK_CHECK_PROBE_INTERVAL_EXP) |
| #define | STACK_GROW_OP PLUS |
| #define | STACK_GROW_OPTAB add_optab |
| #define | STACK_GROW_OFF(off) |

| Functions | |
|---|---|
| static rtx | break_out_memory_refs (rtx) |
| HOST_WIDE_INT | trunc_int_for_mode (HOST_WIDE_INT c, machine_mode mode) |
| poly_int64 | trunc_int_for_mode (poly_int64 x, machine_mode mode) |
| rtx | plus_constant (machine_mode mode, rtx x, poly_int64 c, bool inplace) |
| rtx | eliminate_constant_term (rtx x, rtx *constptr) |
| rtx | convert_memory_address_addr_space_1 (scalar_int_mode to_mode, rtx x, addr_space_t as, bool in_const, bool no_emit) |
| rtx | convert_memory_address_addr_space (scalar_int_mode to_mode, rtx x, addr_space_t as) |
| rtx | memory_address_addr_space (machine_mode mode, rtx x, addr_space_t as) |
| rtx | validize_mem (rtx ref) |
| rtx | use_anchored_address (rtx x) |
| rtx | copy_to_reg (rtx x) |
| rtx | copy_addr_to_reg (rtx x) |
| rtx | copy_to_mode_reg (machine_mode mode, rtx x) |
| rtx | force_reg (machine_mode mode, rtx x) |
| rtx | force_subreg (machine_mode outermode, rtx op, machine_mode innermode, poly_uint64 byte) |
| rtx | force_lowpart_subreg (machine_mode outermode, rtx op, machine_mode innermode) |
| rtx | force_highpart_subreg (machine_mode outermode, rtx op, machine_mode innermode) |
| rtx | force_not_mem (rtx x) |
| rtx | copy_to_suggested_reg (rtx x, rtx target, machine_mode mode) |
| machine_mode | promote_function_mode (const_tree type, machine_mode mode, int *punsignedp, const_tree funtype, int for_return) |
| machine_mode | promote_mode (const_tree type, machine_mode mode, int *punsignedp) |
| machine_mode | promote_decl_mode (const_tree decl, int *punsignedp) |
| machine_mode | promote_ssa_mode (const_tree name, int *punsignedp) |
| static void | adjust_stack_1 (rtx adjust, bool anti_p) |
| void | adjust_stack (rtx adjust) |
| void | anti_adjust_stack (rtx adjust) |
| static rtx | round_push (rtx size) |
| void | emit_stack_save (enum save_level save_level, rtx *psave) |
| void | emit_stack_restore (enum save_level save_level, rtx sa) |
| void | update_nonlocal_goto_save_area (void) |
| void | record_new_stack_level (void) |
| rtx | align_dynamic_address (rtx target, unsigned required_align) |
| void | get_dynamic_stack_size (rtx *psize, unsigned size_align, unsigned required_align, HOST_WIDE_INT *pstack_usage_size) |
| HOST_WIDE_INT | get_stack_check_protect (void) |
| rtx | allocate_dynamic_stack_space (rtx size, unsigned size_align, unsigned required_align, HOST_WIDE_INT max_size, bool cannot_accumulate) |
| rtx | get_dynamic_stack_base (poly_int64 offset, unsigned required_align, rtx base) |
| void | set_stack_check_libfunc (const char *libfunc_name) |
| void | emit_stack_probe (rtx address) |
| void | probe_stack_range (HOST_WIDE_INT first, rtx size) |
| void | compute_stack_clash_protection_loop_data (rtx *rounded_size, rtx *last_addr, rtx *residual, HOST_WIDE_INT *probe_interval, rtx size) |
| void | emit_stack_clash_protection_probe_loop_start (rtx *loop_lab, rtx *end_lab, rtx last_addr, bool rotated) |
| void | emit_stack_clash_protection_probe_loop_end (rtx loop_lab, rtx end_loop, rtx last_addr, bool rotated) |
| void | anti_adjust_stack_and_probe_stack_clash (rtx size) |
| void | anti_adjust_stack_and_probe (rtx size, bool adjust_back) |
| rtx | hard_function_value (const_tree valtype, const_tree func, const_tree fntype, int outgoing) |
| rtx | hard_libcall_value (machine_mode mode, rtx fun) |
| int | rtx_to_tree_code (enum rtx_code code) |

| Variables | |
|---|---|
| static bool | suppress_reg_args_size |
| static rtx | stack_check_libfunc |

Macro Definition Documentation

◆ PROBE_INTERVAL

| #define PROBE_INTERVAL (1 << STACK_CHECK_PROBE_INTERVAL_EXP) |

The interval, in bytes, between successive stack probes: 2 raised to the power STACK_CHECK_PROBE_INTERVAL_EXP.

Referenced by anti_adjust_stack_and_probe(), and probe_stack_range().

◆ STACK_GROW_OFF

| #define STACK_GROW_OFF(off) |

Referenced by probe_stack_range().

◆ STACK_GROW_OP

| #define STACK_GROW_OP PLUS |

Referenced by anti_adjust_stack_and_probe(), compute_stack_clash_protection_loop_data(), and probe_stack_range().

◆ STACK_GROW_OPTAB

| #define STACK_GROW_OPTAB add_optab |

Referenced by probe_stack_range().

Function Documentation

◆ adjust_stack()

| void adjust_stack (rtx adjust) |

Adjust the stack pointer by ADJUST (an rtx for a number of bytes). This pops when ADJUST is positive. ADJUST need not be constant.

References adjust_stack_1(), const0_rtx, poly_int_rtx_p(), and stack_pointer_delta.

Referenced by anti_adjust_stack_and_probe(), do_pending_stack_adjust(), and emit_call_1().

◆ adjust_stack_1()

A helper for adjust_stack and anti_adjust_stack.

References add_args_size_note(), emit_move_insn(), expand_binop(), gcc_assert, get_last_insn(), NULL, OPTAB_LIB_WIDEN, SET_DEST, single_set(), STACK_GROWS_DOWNWARD, stack_pointer_delta, stack_pointer_rtx, and suppress_reg_args_size.

Referenced by adjust_stack(), and anti_adjust_stack().
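As a rough host-side model of the direction logic (an assumption based on the STACK_GROWS_DOWNWARD reference, not GCC's code), the helper can be pictured as choosing between adding ADJUST to the stack pointer and subtracting it. `model_adjust_stack_1` is hypothetical and works on a plain integer stack pointer; ANTI_P is true for anti_adjust_stack (push) and false for adjust_stack (pop).

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model: SP is a plain integer and ADJUST a byte count.
   ANTI_P is true for a push (anti_adjust_stack), false for a pop
   (adjust_stack).  */
int64_t model_adjust_stack_1 (int64_t sp, int64_t adjust, bool anti_p,
                              bool stack_grows_downward)
{
  /* On a downward-growing stack a push subtracts from SP; on an
     upward-growing stack the sense is flipped, so a push adds.  */
  bool subtract_p = stack_grows_downward ? anti_p : !anti_p;
  return subtract_p ? sp - adjust : sp + adjust;
}
```

With a downward-growing stack, popping 16 bytes from SP = 1000 yields 1016 and pushing yields 984; the results swap on an upward-growing stack.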

◆ align_dynamic_address()

Return an rtx that rounds TARGET up, at run time, to a multiple of REQUIRED_ALIGN (an alignment in bits).

References expand_binop(), expand_divmod(), expand_mult(), gen_int_mode(), NULL_RTX, and OPTAB_LIB_WIDEN.

Referenced by allocate_dynamic_stack_space(), assign_parm_setup_block(), and get_dynamic_stack_base().
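The references (expand_binop(), expand_divmod(), expand_mult()) suggest the familiar add, divide, multiply round-up. A host-side sketch of that arithmetic, assuming REQUIRED_ALIGN is in bits and BITS_PER_UNIT is 8; `model_align_dynamic_address` is hypothetical and operates on plain integers, whereas the real function emits RTL.

```c
#include <assert.h>
#include <stdint.h>

#define BITS_PER_UNIT 8  /* assumption: 8-bit bytes */

/* Hypothetical model: round ADDR up to a multiple of REQUIRED_ALIGN bits.  */
uintptr_t model_align_dynamic_address (uintptr_t addr, unsigned required_align)
{
  uintptr_t align_bytes = required_align / BITS_PER_UNIT;
  assert (align_bytes != 0);
  /* Adding ALIGN_BYTES - 1 first turns the truncating division into a
     rounding-up division; this assumes the addition cannot overflow.  */
  uintptr_t t = addr + (align_bytes - 1);
  t = t / align_bytes;
  return t * align_bytes;
}
```

For example, address 13 aligned to 64 bits (8 bytes) becomes 16, while an already aligned address is unchanged.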

◆ allocate_dynamic_stack_space()

| rtx allocate_dynamic_stack_space (rtx size, unsigned size_align, unsigned required_align, HOST_WIDE_INT max_size, bool cannot_accumulate) |

Return an rtx representing the address of an area of memory dynamically pushed on the stack. Any required stack pointer alignment is preserved.

SIZE is an rtx representing the size of the area. SIZE_ALIGN is the alignment (in bits) that SIZE is known to have. It may be zero, in which case a value is extracted from SIZE if SIZE is constant, and BITS_PER_UNIT is assumed otherwise. REQUIRED_ALIGN is the alignment (in bits) required for the region of memory. MAX_SIZE is an upper bound for SIZE when SIZE is not constant, or -1 if no such bound is known.

If CANNOT_ACCUMULATE is TRUE, the caller guarantees that the stack space allocated by the generated code cannot accumulate, that is, it is never allocated again before being released during one execution of the function. Passing FALSE is always safe. The following criterion is sufficient for passing TRUE: every path in the CFG that starts at the allocation point and loops back to it executes the associated deallocation code.

References align_dynamic_address(), anti_adjust_stack(), anti_adjust_stack_and_probe(), anti_adjust_stack_and_probe_stack_clash(), cfun, const0_rtx, CONST_INT_P, create_convert_operand_to(), create_fixed_operand(), crtl, current_function_dynamic_stack_size, current_function_has_unbounded_dynamic_stack_size, do_pending_stack_adjust(), emit_barrier(), emit_cmp_and_jump_insns(), emit_insn(), emit_jump(), emit_label(), emit_library_call_value(), emit_move_insn(), error(), expand_binop(), expand_insn(), find_reg_equal_equiv_note(), force_operand(), gcc_assert, gen_int_mode(), gen_label_rtx(), gen_reg_rtx(), GENERIC_STACK_CHECK, get_dynamic_stack_size(), get_last_insn(), get_stack_check_protect(), get_stack_dynamic_offset(), init_one_libfunc(), INTVAL, LCT_NORMAL, MALLOC_ABI_ALIGNMENT, mark_reg_pointer(), NULL, NULL_RTX, OPTAB_LIB_WIDEN, OPTAB_WIDEN, plus_constant(), PREFERRED_STACK_BOUNDARY, probe_stack_range(), record_new_stack_level(), REG_P, rtx_equal_p(), SET_DEST, SET_SRC, single_set(), STACK_GROWS_DOWNWARD, stack_limit_rtx, stack_pointer_delta, stack_pointer_rtx, STATIC_BUILTIN_STACK_CHECK, suppress_reg_args_size, expand_operand::target, targetm, virtual_stack_dynamic_rtx, virtuals_instantiated, and XEXP.

Referenced by expand_builtin_alloca(), expand_builtin_apply(), expand_call(), and initialize_argument_information().

◆ anti_adjust_stack()

| void anti_adjust_stack (rtx adjust) |

Adjust the stack pointer by minus ADJUST (an rtx for a number of bytes). This pushes when ADJUST is positive. ADJUST need not be constant.

References adjust_stack_1(), const0_rtx, poly_int_rtx_p(), and stack_pointer_delta.

Referenced by allocate_dynamic_stack_space(), anti_adjust_stack_and_probe(), anti_adjust_stack_and_probe_stack_clash(), emit_call_1(), emit_library_call_value_1(), emit_push_insn(), expand_call(), push_block(), and store_one_arg().

◆ anti_adjust_stack_and_probe()

Adjust the stack pointer by minus SIZE (an rtx for a number of bytes) while probing it. This pushes when SIZE is positive. SIZE need not be constant. If ADJUST_BACK is true, adjust back the stack pointer by plus SIZE at the end.

References adjust_stack(), anti_adjust_stack(), const0_rtx, CONST_INT_P, convert_to_mode(), emit_cmp_and_jump_insns(), emit_jump(), emit_label(), emit_stack_probe(), force_operand(), GEN_INT, gen_int_mode(), gen_label_rtx(), GET_CODE, GET_MODE, i, INTVAL, NULL_RTX, plus_constant(), PROBE_INTERVAL, simplify_gen_binary(), STACK_GROW_OP, and stack_pointer_rtx.

Referenced by allocate_dynamic_stack_space(), and expand_function_end().

◆ anti_adjust_stack_and_probe_stack_clash()

| void anti_adjust_stack_and_probe_stack_clash (rtx size) |

Adjust the stack pointer by minus SIZE (an rtx for a number of bytes) while probing it. This pushes when SIZE is positive. SIZE need not be constant.

This is subtly different from anti_adjust_stack_and_probe, in order to try to prevent stack-clash attacks:

1. It must assume no knowledge of the probing state; any allocation must probe. Consider a 1-byte alloca in a loop: if the sum of the allocations is large, the loop could be used to jump the guard if probes were not emitted.
2. It never skips probes, whereas anti_adjust_stack_and_probe skips the probe in the first PROBE_INTERVAL on the assumption that it was already done in the prologue and in previous allocations.
3. It only allocates and probes SIZE bytes. It does not need to allocate or probe beyond that, because this probing style does not guarantee signal-handling capability if the guard is hit.

References anti_adjust_stack(), compute_stack_clash_protection_loop_data(), CONST0_RTX, CONST_INT_P, convert_to_mode(), emit_cmp_and_jump_insns(), emit_insn(), emit_label(), emit_stack_clash_protection_probe_loop_end(), emit_stack_clash_protection_probe_loop_start(), emit_stack_probe(), gcc_assert, gen_blockage(), GEN_INT, gen_label_rtx(), gen_rtx_CONST_INT(), GET_MODE, GET_MODE_SIZE(), i, INTVAL, NULL_RTX, plus_constant(), rotate_loop(), stack_pointer_rtx, targetm, and word_mode.

Referenced by allocate_dynamic_stack_space().
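The three points above can be illustrated with a simplified host-side model, assuming a downward-growing stack and treating probes as recorded addresses. `model_probe_stack_clash` is hypothetical: it ignores target hooks and the exact placement GCC uses for the residual probe, and only shows that every allocation, however small, is probed and that no interval is skipped.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model: allocate SIZE bytes below stack pointer SP, probing
   after every PROBE_INTERVAL bytes and once more for any residual.
   Probe addresses are stored in PROBES; the count is returned.  */
size_t model_probe_stack_clash (long sp, long size, long probe_interval,
                                long *probes, size_t max_probes)
{
  assert (size >= 0 && probe_interval > 0);
  size_t n = 0;
  long rounded = size - size % probe_interval;
  long last_addr = sp - rounded;

  /* Loop: allocate one interval, then touch the new top of stack.
     Nothing is assumed about earlier probes, so none is skipped.  */
  while (sp != last_addr)
    {
      sp -= probe_interval;
      assert (n < max_probes);
      probes[n++] = sp;
    }

  /* Residual: allocate the remaining bytes and probe them as well.  */
  if (size > rounded)
    {
      sp -= size - rounded;
      assert (n < max_probes);
      probes[n++] = sp;
    }
  return n;
}
```

Allocating 10000 bytes from SP = 100000 with a 4096-byte interval probes at 95904, 91808 and 90000; a 1-byte allocation still produces one probe.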

◆ break_out_memory_refs()

Subroutines for manipulating rtx's in semantically interesting ways. Copyright (C) 1987-2026 Free Software Foundation, Inc. This file is part of GCC. GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version. GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

Return a copy of X in which all memory references and all constants that involve symbol refs have been replaced with new temporary registers. Also emit code to load the memory locations and constants into those registers. If X contains no such constants or memory references, X itself (not a copy) is returned. If a constant is found in the address that is not a legitimate constant in an insn, it is left alone in the hope that it might be valid in the address. X may contain no arithmetic except addition, subtraction and multiplication. Values returned by expand_expr with 1 for sum_ok fit this constraint.

References break_out_memory_refs(), CONSTANT_ADDRESS_P, CONSTANT_P, force_reg(), GET_CODE, GET_MODE, MEM_P, simplify_gen_binary(), and XEXP.

Referenced by break_out_memory_refs(), and memory_address_addr_space().
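The recursion can be sketched on a hypothetical miniature expression tree (not GCC's rtx): MEM nodes are replaced by fresh registers, PLUS and MULT nodes are rebuilt only when an operand changed, and otherwise X itself is returned. The sketch omits the symbolic-constant case and the emitted loads, and it never frees nodes.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical miniature IR; not GCC's rtx.  */
enum kind { K_REG, K_CONST, K_MEM, K_PLUS, K_MULT };

struct expr
{
  enum kind kind;
  int value;               /* register number or constant value */
  struct expr *op0, *op1;  /* operands (MEM uses op0 as its address) */
};

static int next_reg = 100; /* next fresh pseudo register number */

struct expr *new_expr (enum kind k, int v, struct expr *a, struct expr *b)
{
  struct expr *e = malloc (sizeof *e);
  assert (e != NULL);
  e->kind = k; e->value = v; e->op0 = a; e->op1 = b;
  return e;
}

/* Replace every MEM with a fresh register (a real implementation would
   also emit the load).  Return X itself when nothing changes.  */
struct expr *break_out_mems (struct expr *x)
{
  if (x->kind == K_MEM)
    return new_expr (K_REG, next_reg++, NULL, NULL);
  if (x->kind == K_PLUS || x->kind == K_MULT)
    {
      struct expr *a = break_out_mems (x->op0);
      struct expr *b = break_out_mems (x->op1);
      if (a != x->op0 || b != x->op1)
        return new_expr (x->kind, 0, a, b);
    }
  return x;
}
```

Returning the original pointer when nothing changed lets callers detect "no rewrite needed" with a simple pointer comparison, as the description of X itself being returned implies.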

◆ compute_stack_clash_protection_loop_data()

| void compute_stack_clash_protection_loop_data (rtx *rounded_size, rtx *last_addr, rtx *residual, HOST_WIDE_INT *probe_interval, rtx size) |

Compute parameters for stack clash probing a dynamic stack allocation of SIZE bytes: ROUNDED_SIZE, LAST_ADDR, RESIDUAL and PROBE_INTERVAL. When a dump file is active, also record the type of probing that the computed values require.

References CONST0_RTX, CONST_INT_P, dump_file, force_operand(), GEN_INT, INTVAL, NULL_RTX, simplify_gen_binary(), STACK_GROW_OP, and stack_pointer_rtx.

Referenced by anti_adjust_stack_and_probe_stack_clash().
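A host-side analogue of the four outputs, assuming a downward-growing stack and a power-of-two probe interval given by its exponent (in GCC the interval comes from the stack-clash-protection-probe-interval parameter; the value 12, i.e. 4096 bytes, used below is only an example). `model_clash_loop_data` and its struct are hypothetical and use plain integers instead of RTL.

```c
#include <assert.h>

/* Hypothetical host-side analogue of the computed loop parameters.  */
struct clash_loop_data
{
  long rounded_size;    /* SIZE rounded down to a multiple of the interval */
  long last_addr;       /* stack pointer value that ends the probe loop */
  long residual;        /* bytes left for a final allocation */
  long probe_interval;  /* bytes allocated per loop iteration */
};

struct clash_loop_data model_clash_loop_data (long sp, long size,
                                              int probe_interval_exp)
{
  struct clash_loop_data d;
  assert (size >= 0 && probe_interval_exp >= 0 && probe_interval_exp < 31);
  d.probe_interval = 1L << probe_interval_exp;
  /* Masking with -interval rounds down because the interval is a power
     of two.  */
  d.rounded_size = size & -d.probe_interval;
  /* Downward-growing stack: the loop ends ROUNDED_SIZE bytes below SP.  */
  d.last_addr = sp - d.rounded_size;
  d.residual = size - d.rounded_size;
  return d;
}
```

For SIZE = 10000 and SP = 100000, this gives ROUNDED_SIZE = 8192, LAST_ADDR = 91808 and RESIDUAL = 1808.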

◆ convert_memory_address_addr_space()

| rtx convert_memory_address_addr_space (scalar_int_mode to_mode, rtx x, addr_space_t as) |

Given X, a memory address in the pointer mode of address space AS, convert it to an address in that address space's address mode, or vice versa (TO_MODE says which way). We take advantage of the fact that pointers are not allowed to overflow by commuting arithmetic operations over conversions so that address arithmetic insns can be used.

References convert_memory_address_addr_space_1().

Referenced by expand_assignment(), expand_expr_addr_expr(), expand_expr_addr_expr_1(), make_tree(), and memory_address_addr_space().
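The no-overflow assumption is what makes the commuting legal: converting a sum equals summing the converted operands only when the narrow addition does not wrap. A sketch assuming a 32-bit pointer mode that is zero-extended to a 64-bit address mode (whether the extension is zero or sign is target-specific in GCC, governed by POINTERS_EXTEND_UNSIGNED); both function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Convert after adding: zero-extend the wrapped 32-bit sum.  */
uint64_t extend_after_add (uint32_t base, uint32_t offset)
{
  return (uint64_t) (uint32_t) (base + offset);
}

/* Add after converting: extend each operand, then add in 64 bits.  */
uint64_t add_after_extend (uint32_t base, uint32_t offset)
{
  return (uint64_t) base + (uint64_t) offset;
}
```

For 0x1000 + 0x20 both orders agree; for 0xFFFFFFF0 + 0x20 the 32-bit sum wraps and the two results differ, which is exactly the case ruled out by assuming pointers never overflow.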

◆ convert_memory_address_addr_space_1()

| rtx convert_memory_address_addr_space_1 (scalar_int_mode to_mode, rtx x, addr_space_t as, bool in_const, bool no_emit) |

Given X, a memory address in the pointer mode of address space AS, convert it to an address in that address space's address mode, or vice versa (TO_MODE says which way). We take advantage of the fact that pointers are not allowed to overflow by commuting arithmetic operations over conversions so that address arithmetic insns can be used. IN_CONST is true if this conversion is inside a CONST. NO_EMIT is true if no insns should be emitted; in that case the function returns NULL if X cannot be simplified without emitting insns.

References CASE_CONST_SCALAR_INT, CONST_INT_P, convert_memory_address_addr_space_1(), convert_modes(), gcc_assert, gen_rtvec(), GET_CODE, get_last_insn(), GET_MODE, GET_MODE_SIZE(), i, label_ref_label(), LABEL_REF_NONLOCAL_P, last, NULL_RTX, PUT_MODE(), REG_POINTER, RTVEC_ELT, shallow_copy_rtx(), simplify_unary_operation(), SUBREG_PROMOTED_VAR_P, SUBREG_REG, targetm, XEXP, XINT, XVECEXP, and XVECLEN.

Referenced by convert_memory_address_addr_space(), convert_memory_address_addr_space_1(), and simplify_context::simplify_unary_operation_1().

◆ copy_addr_to_reg()

Like copy_to_reg but always give the new register mode Pmode in case X is a constant.

References copy_to_mode_reg().

Referenced by emit_block_op_via_libcall(), expand_builtin_apply(), expand_builtin_apply_args_1(), expand_builtin_cexpi(), expand_builtin_eh_return(), expand_builtin_frame_address(), expand_call(), set_storage_via_libcall(), sjlj_emit_function_enter(), and try_store_by_multiple_pieces().

◆ copy_to_mode_reg()

Like copy_to_reg but always give the new register mode MODE in case X is a constant.

References emit_move_insn(), force_operand(), gcc_assert, gen_reg_rtx(), general_operand(), and GET_MODE.

Referenced by asan_clear_shadow(), copy_addr_to_reg(), pieces_addr::decide_autoinc(), do_tablejump(), emit_block_op_via_libcall(), expand_divmod(), expand_expr_real_2(), expand_mult_const(), expand_sdiv_pow2(), expand_sjlj_dispatch_table(), force_subreg(), maybe_legitimize_operand(), maybe_legitimize_operand_same_code(), precompute_register_parameters(), prepare_call_address(), prepare_operand(), push_block(), replace_read(), set_storage_via_libcall(), and try_store_by_multiple_pieces().

◆ copy_to_reg()

Copy the value or contents of X to a new temp reg and return that reg.

References emit_move_insn(), force_operand(), gen_reg_rtx(), general_operand(), and GET_MODE.

Referenced by assign_parm_setup_block(), convert_mode_scalar(), default_internal_arg_pointer(), do_jump(), emit_group_move_into_temps(), emit_push_insn(), expand_atomic_compare_and_swap(), expand_builtin_apply_args_1(), expand_builtin_longjmp(), expand_builtin_nonlocal_goto(), expand_builtin_return_addr(), expand_builtin_setjmp_receiver(), expand_builtin_stack_address(), expand_builtin_strub_leave(), expand_call(), expand_copysign_absneg(), expand_expr_real_1(), extract_bit_field(), extract_integral_bit_field(), gen_lowpart_general(), gen_memset_value_from_prev(), instantiate_virtual_regs_in_insn(), memory_address_addr_space(), operand_subword_force(), prepare_cmp_insn(), store_bit_field(), and store_integral_bit_field().

◆ copy_to_suggested_reg()

Copy X to TARGET (if it's nonzero and a reg) or to a new temp reg and return that reg. MODE is the mode to use for X in case it is a constant.

References emit_move_insn(), gen_reg_rtx(), and REG_P.

◆ eliminate_constant_term()

If X is a sum, return a new sum like X but lacking any constant terms. Add all the removed constant terms into *CONSTPTR. X itself is not altered. The result != X if and only if it is not isomorphic to X.

References const0_rtx, CONST_INT_P, eliminate_constant_term(), GET_CODE, GET_MODE, simplify_binary_operation(), and XEXP.

Referenced by eliminate_constant_term(), and memory_address_addr_space().
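A sketch on a hypothetical miniature expression tree (not GCC's rtx), assuming constants appear as the second operand of PLUS, as in canonical RTL. `strip_constants` follows the contract above: X is left untouched, a new node is built only when a constant was removed, and the removed constants are accumulated into *CONSTPTR.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical miniature IR for sums of registers and constants.  */
enum kind { K_REG, K_CONST, K_PLUS };

struct expr
{
  enum kind kind;
  long value;              /* register number or constant value */
  struct expr *op0, *op1;  /* operands of K_PLUS */
};

struct expr *mk (enum kind k, long v, struct expr *a, struct expr *b)
{
  struct expr *e = malloc (sizeof *e);
  assert (e != NULL);
  e->kind = k; e->value = v; e->op0 = a; e->op1 = b;
  return e;
}

/* Return X without its constant terms, adding them into *CONSTPTR.
   X itself is never modified.  */
struct expr *strip_constants (struct expr *x, long *constptr)
{
  if (x->kind != K_PLUS)
    return x;
  /* A constant second operand is folded away directly.  */
  if (x->op1->kind == K_CONST)
    {
      *constptr += x->op1->value;
      return strip_constants (x->op0, constptr);
    }
  /* Otherwise strip both operands and rebuild only if either changed.  */
  long tem = 0;
  struct expr *a = strip_constants (x->op0, &tem);
  struct expr *b = strip_constants (x->op1, &tem);
  if (a != x->op0 || b != x->op1)
    {
      *constptr += tem;
      return mk (K_PLUS, 0, a, b);
    }
  return x;
}
```

For `(r1 + 4) + (r2 + 8)` the result is a new node `r1 + r2` and 12 is added to *CONSTPTR, while a sum of two registers is returned unchanged.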

◆ emit_stack_clash_protection_probe_loop_end()

| void emit_stack_clash_protection_probe_loop_end (rtx loop_lab, rtx end_loop, rtx last_addr, bool rotated) |

Emit the end of a stack clash probing loop, which consists of the jump back to LOOP_LAB followed by the END_LOOP label after the loop.

References emit_cmp_and_jump_insns(), emit_jump(), emit_label(), NULL_RTX, and stack_pointer_rtx.

Referenced by anti_adjust_stack_and_probe_stack_clash().

◆ emit_stack_clash_protection_probe_loop_start()

| void emit_stack_clash_protection_probe_loop_start (rtx *loop_lab, rtx *end_lab, rtx last_addr, bool rotated) |

Emit the start of an allocate/probe loop for stack clash protection. LOOP_LAB and END_LAB are returned for use when emitting the end of the loop. LAST_ADDR is the value of SP that stops the loop.

References emit_cmp_and_jump_insns(), emit_label(), gen_label_rtx(), NULL_RTX, and stack_pointer_rtx.

Referenced by anti_adjust_stack_and_probe_stack_clash().
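The ROTATED flag of the loop start and end helpers plausibly selects between a top-tested loop and a bottom-tested (rotated) loop; this reading is an assumption based on the references listed (compare-and-jump, unconditional jump, labels). The two shapes can be modeled in C as follows, assuming a downward-growing stack; both functions are hypothetical and only count iterations, each standing for one allocation plus probe.

```c
#include <assert.h>

/* Not rotated: the exit test runs before the first iteration, so a
   zero-trip loop allocates nothing.  */
int probe_loop_top_tested (long sp, long last_addr, long interval)
{
  int probes = 0;
  while (sp != last_addr)
    {
      sp -= interval;
      probes++;
    }
  return probes;
}

/* Rotated: the only test is at the bottom, so the body runs at least
   once; the caller must know at least one iteration is needed.  */
int probe_loop_rotated (long sp, long last_addr, long interval)
{
  int probes = 0;
  assert (sp != last_addr);
  do
    {
      sp -= interval;
      probes++;
    }
  while (sp != last_addr);
  return probes;
}
```

The rotated form saves one comparison per loop entry, which is only safe when the allocation is known to need at least one interval.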

◆ emit_stack_probe()

| void emit_stack_probe (rtx address) |

Emit one stack probe at ADDRESS, an address within the stack.

References const0_rtx, create_address_operand(), emit_insn(), emit_move_insn(), expand_insn(), gen_rtx_MEM(), maybe_legitimize_operands(), MEM_VOLATILE_P, targetm, validize_mem(), and word_mode.

Referenced by anti_adjust_stack_and_probe(), anti_adjust_stack_and_probe_stack_clash(), and probe_stack_range().

◆ emit_stack_restore()

| void emit_stack_restore (enum save_level save_level, rtx sa) |

Restore the stack pointer for the purpose in SAVE_LEVEL. SA is the save area made by emit_stack_save. If it is zero, we have nothing to do.

References crtl, discard_pending_stack_adjust(), emit_clobber(), emit_insn(), gen_move_insn(), gen_rtx_MEM(), SAVE_BLOCK, SAVE_FUNCTION, SAVE_NONLOCAL, stack_pointer_rtx, SUPPORTS_STACK_ALIGNMENT, targetm, and validize_mem().

Referenced by expand_builtin_apply(), expand_builtin_longjmp(), expand_builtin_nonlocal_goto(), expand_call(), expand_function_end(), and expand_stack_restore().

◆ emit_stack_save()

| void emit_stack_save (enum save_level save_level, rtx *psave) |

Save the stack pointer for the purpose in SAVE_LEVEL. PSAVE is a pointer to a previously-created save area. If no save area has been allocated, this function will allocate one. If a save area is specified, it must be of the proper mode.

References assign_stack_local(), do_pending_stack_adjust(), emit_insn(), gen_move_insn(), gen_reg_rtx(), GET_MODE_SIZE(), SAVE_BLOCK, SAVE_FUNCTION, SAVE_NONLOCAL, stack_pointer_rtx, targetm, and validize_mem().

Referenced by expand_builtin_apply(), expand_builtin_setjmp_setup(), expand_builtin_update_setjmp_buf(), expand_call(), expand_function_end(), expand_stack_save(), initialize_argument_information(), and update_nonlocal_goto_save_area().

◆ force_highpart_subreg()

Try to return an rvalue expression for the OUTERMODE highpart of OP, which has mode INNERMODE. Allow OP to be forced into a new register if necessary. Return null on failure.

References force_subreg(), and subreg_highpart_offset().

Referenced by emit_store_flag_1(), and expand_builtin_issignaling().

◆ force_lowpart_subreg()

Try to return an rvalue expression for the OUTERMODE lowpart of OP, which has mode INNERMODE. Allow OP to be forced into a new register if necessary. Return null on failure.

References force_subreg(), and subreg_lowpart_offset().

Referenced by convert_mode_scalar(), expand_absneg_bit(), expand_builtin_issignaling(), expand_copysign_bit(), expand_doubleword_mod(), and expand_expr_real_2().
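At the value level, the lowpart and highpart of a wider integer are its low-order and high-order bits; the byte offset that selects them (subreg_lowpart_offset() or subreg_highpart_offset()) depends on the target's endianness, which is why those helpers are used. A sketch assuming a 64-bit INNERMODE and a 32-bit OUTERMODE; the function names are hypothetical and ignore subregs entirely.

```c
#include <assert.h>
#include <stdint.h>

/* Value-level lowpart: the low-order 32 bits of a 64-bit value.  */
uint32_t lowpart_32_of_64 (uint64_t x)
{
  return (uint32_t) x;
}

/* Value-level highpart: the high-order 32 bits of a 64-bit value.  */
uint32_t highpart_32_of_64 (uint64_t x)
{
  return (uint32_t) (x >> 32);
}
```

For 0x1122334455667788 the lowpart is 0x55667788 and the highpart is 0x11223344 on any host, even though the byte offsets differ between big- and little-endian targets.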

◆ force_not_mem()

If X is a memory ref, copy its contents to a new temp reg and return that reg. Otherwise, return X.

References emit_move_insn(), gen_reg_rtx(), GET_MODE, MEM_P, MEM_POINTER, and REG_POINTER.

Referenced by expand_assignment(), expand_return(), and prepare_call_address().

◆ force_reg()

Load X into a register if it is not already one. Use mode MODE for the register. X should be valid for mode MODE, but it may be a constant which is valid for all integer modes; that's why caller must specify MODE. The caller must not alter the value in the register we return, since we mark it as a "constant" register.

References CONST_INT_P, CONSTANT_P, ctz_hwi(), DECL_ALIGN, DECL_P, emit_move_insn(), force_operand(), gen_reg_rtx(), general_operand(), GET_CODE, get_last_insn(), INTVAL, mark_reg_pointer(), MEM_P, MEM_POINTER, MIN, NULL_RTX, REG_P, rtx_equal_p(), SET_DEST, SET_SRC, set_unique_reg_note(), single_set(), SYMBOL_REF_DECL, and XEXP.

Referenced by asan_clear_shadow(), assign_parm_setup_block(), assign_parm_setup_stack(), avoid_expensive_constant(), break_out_memory_refs(), builtin_memset_gen_str(), calculate_table_based_CRC(), compress_float_constant(), convert_extracted_bit_field(), convert_float_to_wider_int(), convert_mode_scalar(), convert_wider_int_to_float(), emit_cmp_and_jump_insns(), emit_conditional_move(), emit_group_load_1(), emit_group_load_into_temps(), emit_library_call_value_1(), expand_asm_stmt(), expand_binop(), expand_builtin_frob_return_addr(), expand_builtin_longjmp(), expand_builtin_memset_args(), expand_builtin_setjmp_setup(), expand_builtin_signbit(), expand_builtin_stack_address(), expand_builtin_strub_leave(), expand_builtin_strub_update(), expand_call(), expand_divmod(), expand_doubleword_clz_ctz_ffs(), expand_doubleword_mult(), expand_expr_real_1(), expand_expr_real_2(), expand_HWASAN_ALLOCA_POISON(), expand_ifn_atomic_compare_exchange_into_call(), expand_movstr(), expand_mul_overflow(), expand_mult(), expand_mult_const(), expand_sdiv_pow2(), expand_shift_1(), expand_smod_pow2(), expand_vec_perm_1(), expand_vec_perm_const(), extract_bit_field_1(), extract_fixed_bit_field_1(), extract_low_bits(), force_operand(), force_reload_address(), gen_lowpart_general(), hwasan_emit_untag_frame(), hwasan_frame_base(), instantiate_virtual_regs_in_insn(), memory_address_addr_space(), offset_address(), operand_subword_force(), precompute_register_parameters(), prepare_call_address(), prepare_cmp_insn(), resolve_shift_zext(), sjlj_emit_dispatch_table(), store_bit_field_using_insv(), store_constructor(), store_fixed_bit_field_1(), store_split_bit_field(), try_store_by_multiple_pieces(), use_anchored_address(), and widen_operand().

◆ force_subreg()

| rtx force_subreg (machine_mode outermode, rtx op, machine_mode innermode, poly_uint64 byte) |

Like simplify_gen_subreg, but force OP into a new register if the subreg cannot be formed directly.

References copy_to_mode_reg(), delete_insns_since(), get_last_insn(), and simplify_gen_subreg().

Referenced by convert_modes(), convert_move(), emit_group_load_1(), emit_group_store(), emit_store_flag_1(), expand_assignment(), extract_bit_field_as_subreg(), extract_integral_bit_field(), force_highpart_subreg(), force_lowpart_subreg(), instantiate_virtual_regs_in_insn(), and store_bit_field_1().

◆ get_dynamic_stack_base()

| rtx get_dynamic_stack_base (poly_int64 offset, unsigned required_align, rtx base) |

Return an rtx representing the address of an area of memory already statically pushed onto the stack in the virtual stack vars area. (It is assumed that the area is allocated in the function prologue.) Any required stack pointer alignment is preserved. OFFSET is the offset of the area into the virtual stack vars area. REQUIRED_ALIGN is the alignment (in bits) required for the region of memory. BASE is the rtx of the base of this virtual stack vars area. The only time this is not `virtual_stack_vars_rtx` is when tagging pointers on the stack.

References align_dynamic_address(), crtl, emit_move_insn(), expand_binop(), gen_int_mode(), gen_reg_rtx(), mark_reg_pointer(), NULL_RTX, OPTAB_LIB_WIDEN, PREFERRED_STACK_BOUNDARY, and expand_operand::target.

Referenced by expand_stack_vars().

◆ get_dynamic_stack_size()

| void get_dynamic_stack_size (rtx *psize, unsigned size_align, unsigned required_align, HOST_WIDE_INT *pstack_usage_size) |

Return an rtx through *PSIZE, representing the size of an area of memory to be dynamically pushed on the stack. *PSIZE is an rtx representing the size of the area. SIZE_ALIGN is the alignment (in bits) that we know SIZE has. This parameter may be zero. If so, a proper value will be extracted from SIZE if it is constant, otherwise BITS_PER_UNIT will be assumed. REQUIRED_ALIGN is the alignment (in bits) required for the region of memory. If PSTACK_USAGE_SIZE is not NULL it points to a value that is increased for the additional size returned.

References CONST_INT_P, convert_to_mode(), crtl, force_operand(), GET_MODE, HOST_BITS_PER_INT, INTVAL, MAX_SUPPORTED_STACK_ALIGNMENT, NULL_RTX, plus_constant(), PREFERRED_STACK_BOUNDARY, REGNO_POINTER_ALIGN, round_push(), UINT_MAX, and VIRTUAL_STACK_DYNAMIC_REGNUM.

Referenced by allocate_dynamic_stack_space(), assign_parm_setup_block(), and expand_stack_vars().
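When SIZE_ALIGN is zero and SIZE is constant, the known alignment can be taken from SIZE itself: the largest power of two dividing SIZE. A sketch under that reading, assuming 8-bit bytes; `model_size_align` is hypothetical, and the cap on very large values (suggested by the UINT_MAX and HOST_BITS_PER_INT references) is shown using the host's `unsigned` width.

```c
#include <assert.h>
#include <limits.h>

#define BITS_PER_UNIT 8  /* assumption: 8-bit bytes */

/* Hypothetical model: derive SIZE_ALIGN (in bits) from a constant SIZE
   (in bytes) when the caller passed zero.  */
unsigned model_size_align (unsigned long long size)
{
  assert (size != 0);
  /* The lowest set bit is the largest power of two dividing SIZE.  */
  unsigned long long lsb = size & -size;
  /* Cap the result instead of overflowing an unsigned bit count.  */
  if (lsb > UINT_MAX / BITS_PER_UNIT)
    return 1u << (sizeof (unsigned) * CHAR_BIT - 1);
  return (unsigned) lsb * BITS_PER_UNIT;
}
```

A constant size of 24 bytes is divisible by 8, so it is known to be 64-bit aligned; an odd size only guarantees BITS_PER_UNIT.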

◆ get_stack_check_protect()

| HOST_WIDE_INT get_stack_check_protect (void) |

Return the number of bytes to "protect" on the stack for -fstack-check. In the context of -fstack-check, "protect" means how many bytes must always be available on the stack; as a consequence, this is also how many bytes are skipped before the first probe. On some targets we want to reuse the -fstack-check prologue support to give a degree of protection against stack-clash style attacks. In that scenario we do not want to skip bytes before probing, as that would render the stack clash protections useless. So we never use STACK_CHECK_PROTECT directly; instead we use it indirectly through this helper, which allows different values to be provided for -fstack-check and -fstack-clash-protection.

Referenced by allocate_dynamic_stack_space().
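The selection described above reduces to a single choice. A sketch with a hypothetical flag parameter and a placeholder constant standing in for the target's STACK_CHECK_PROTECT (the value 12288 is illustrative only, not any particular target's setting):

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical placeholder for the target's STACK_CHECK_PROTECT.  */
#define MODEL_STACK_CHECK_PROTECT 12288L

/* With stack clash protection nothing may be skipped before the first
   probe, so the protected area is zero; otherwise use the -fstack-check
   value.  */
long model_get_stack_check_protect (bool stack_clash_protection)
{
  return stack_clash_protection ? 0 : MODEL_STACK_CHECK_PROTECT;
}
```

Routing every use through one helper keeps callers such as allocate_dynamic_stack_space() from accidentally applying the -fstack-check skip when stack clash protection is active.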

◆ hard_function_value()

| rtx hard_function_value (const_tree valtype, const_tree func, const_tree fntype, int outgoing) |

Return an rtx representing the register or memory location in which a scalar value of data type VALTYPE was returned by a function call to function FUNC. FUNC is a FUNCTION_DECL, FNTYPE a FUNCTION_TYPE node if the precise function is known, otherwise 0. OUTGOING is 1 if on a machine with register windows this function should return the register in which the function will put its result and 0 otherwise.

References arg_int_size_in_bytes(), FOR_EACH_MODE_IN_CLASS, GET_MODE, GET_MODE_SIZE(), PUT_MODE(), REG_P, opt_mode< T >::require(), and targetm.

Referenced by aggregate_value_p(), emit_library_call_value_1(), expand_call(), expand_function_start(), and vectorizable_store().

◆ hard_libcall_value()

Return an rtx representing the register or memory location in which a scalar value of mode MODE was returned by a library call.

References expand_operand::mode, and targetm.

Referenced by emit_library_call_value_1(), and expand_unop().

◆ memory_address_addr_space()

| rtx memory_address_addr_space (machine_mode mode, rtx x, addr_space_t as) |

Return something equivalent to X but valid as a memory address for something of mode MODE in the named address space AS. When X is not itself valid, this works by copying X or subexpressions of it into registers.

References break_out_memory_refs(), const0_rtx, CONST_INT_P, CONSTANT_ADDRESS_P, CONSTANT_P, convert_memory_address_addr_space(), copy_to_reg(), cse_not_expected, eliminate_constant_term(), force_operand(), force_reg(), gcc_assert, GET_CODE, GET_MODE, mark_reg_pointer(), memory_address_addr_space_p(), NULL_RTX, REG_P, targetm, update_temp_slot_address(), XEXP, and y.

Referenced by change_address_1(), and expand_expr_real_1().

◆ plus_constant()

| rtx plus_constant (machine_mode mode, rtx x, poly_int64 c, bool inplace) |

Return an rtx for the sum of X and the integer C, given that X has mode MODE. INPLACE is true if X can be modified in place, or false if it must be treated as immutable.

References wi::add(), CASE_CONST_SCALAR_INT, const0_rtx, CONST_POLY_INT_P, const_poly_int_value(), CONSTANT_P, CONSTANT_POOL_ADDRESS_P, copy_rtx(), find_constant_term_loc(), force_const_mem(), gcc_assert, gen_int_mode(), gen_lowpart, GET_CODE, GET_MODE, GET_MODE_INNER, get_pool_constant(), get_pool_mode(), immed_wide_int_const(), known_eq, memory_address_p, plus_constant(), RTX_CODE, shared_const_p(), XEXP, and y.

Referenced by addr_side_effect_eval(), adjust_address_1(), adjust_mems(), allocate_dynamic_stack_space(), anti_adjust_stack_and_probe(), anti_adjust_stack_and_probe_stack_clash(), asan_clear_shadow(), asan_emit_stack_protection(), assign_stack_local_1(), autoinc_split(), check_mem_read_rtx(), combine_simplify_rtx(), compute_argument_addresses(), compute_cfa_pointer(), cselib_init(), cselib_record_sp_cfa_base_equiv(), cselib_reset_table(), cselib_subst_to_values(), pieces_addr::decide_autoinc(), default_memtag_add_tag(), drop_writeback(), eliminate_regs_1(), eliminate_regs_in_insn(), eliminate_regs_in_insn(), emit_library_call_value_1(), emit_move_resolve_push(), emit_push_insn(), equiv_address_substitution(), expand_builtin_adjust_descriptor(), expand_builtin_apply(), expand_builtin_apply_args_1(), expand_builtin_extract_return_addr(), expand_builtin_frob_return_addr(), expand_builtin_longjmp(), expand_builtin_nonlocal_goto(), expand_builtin_return_addr(), expand_builtin_setjmp_setup(), expand_builtin_stack_address(), expand_builtin_stpcpy_1(), expand_builtin_strub_leave(), expand_builtin_strub_update(), expand_builtin_update_setjmp_buf(), expand_call(), expand_debug_expr(), expand_divmod(), expand_expr_addr_expr_1(), expand_expr_real_2(), expand_movstr(), expand_one_stack_var_at(), extract_writebacks(), find_reg_offset_for_const(), find_reloads_address(), fixup_debug_use(), fold_rtx(), force_int_to_mode(), form_sum(), form_sum(), pair_fusion_bb_info::fuse_pair(), get_addr(), get_dynamic_stack_size(), hwasan_emit_prologue(), hwasan_get_frame_extent(), init_alias_analysis(), init_reload(), insert_const_anchor(), instantiate_virtual_regs_in_rtx(), internal_arg_pointer_based_exp(), lra_eliminate_regs_1(), memory_load_overlap(), offsettable_address_addr_space_p(), plus_constant(), prepare_call_address(), prepare_call_arguments(), prepare_cmp_insn(), probe_stack_range(), push_block(), record_store(), round_push(), rtl_for_decl_location(), simplify_context::simplify_binary_operation_1(), simplify_context::simplify_plus_minus(), simplify_context::simplify_unary_operation_1(), sjlj_emit_function_enter(), sjlj_mark_call_sites(), store_expr(), try_apply_stack_adjustment(), try_store_by_multiple_pieces(), use_anchored_address(), use_related_value(), vt_add_function_parameter(), vt_canonicalize_addr(), and vt_initialize().

◆ probe_stack_range()

void probe_stack_range (HOST_WIDE_INT first, rtx size)

Probe a range of stack addresses from FIRST to FIRST+SIZE, inclusive. FIRST is a constant and SIZE is a Pmode RTX. Both are offsets from the current stack pointer; STACK_GROWS_DOWNWARD determines whether they are added to or subtracted from the stack pointer.
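As we read the source, for a small constant SIZE the probes land at FIRST + N * PROBE_INTERVAL for each N ≥ 1 with N * PROBE_INTERVAL < SIZE, followed by one final probe at FIRST + SIZE; larger or non-constant sizes are probed by an emitted run-time loop (hence the references to emit_label and emit_cmp_and_jump_insns). The following is a minimal C++ sketch of that offset arithmetic only; it emits no RTL. The names probe_offsets and kProbeInterval are hypothetical, and the 4096-byte interval (STACK_CHECK_PROBE_INTERVAL_EXP of 12) is an assumption.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Assumed probe interval: 1 << 12 = 4096 bytes.  Targets may choose differently.
constexpr int64_t kProbeInterval = int64_t{1} << 12;

// Hypothetical helper: return the stack-pointer-relative offsets that a
// constant-size probe_stack_range (FIRST, SIZE) would touch.  With a
// downward-growing stack each offset is negated, mirroring STACK_GROW_OFF.
std::vector<int64_t> probe_offsets(int64_t first, int64_t size,
                                   bool stack_grows_downward) {
  std::vector<int64_t> offsets;
  auto grow_off = [stack_grows_downward](int64_t off) {
    return stack_grows_downward ? -off : off;
  };
  // One probe every kProbeInterval bytes strictly below SIZE ...
  for (int64_t i = kProbeInterval; i < size; i += kProbeInterval)
    offsets.push_back(grow_off(first + i));
  // ... then a final probe at the far end of the range, FIRST + SIZE.
  offsets.push_back(grow_off(first + size));
  return offsets;
}
```

For example, probe_offsets (0, 10000, true) yields the three offsets -4096, -8192 and -10000, so no gap between consecutive probes exceeds the interval.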

References const0_rtx, CONST_INT_P, convert_to_mode(), create_input_operand(), emit_cmp_and_jump_insns(), emit_insn(), emit_jump(), emit_label(), emit_library_call(), emit_move_insn(), emit_stack_probe(), expand_binop(), force_operand(), gcc_assert, gen_blockage(), gen_int_mode(), gen_label_rtx(), GET_MODE, i, INTVAL, LCT_THROW, maybe_expand_insn(), memory_address, NULL_RTX, OPTAB_WIDEN, plus_constant(), PROBE_INTERVAL, simplify_gen_binary(), stack_check_libfunc, STACK_GROW_OFF, STACK_GROW_OP, STACK_GROW_OPTAB, stack_pointer_rtx, and targetm.

Referenced by allocate_dynamic_stack_space(), and expand_function_end().

◆ promote_decl_mode()

machine_mode promote_decl_mode (const_tree decl, int *punsignedp)

Use either promote_mode or promote_function_mode to find the promoted mode of DECL. If PUNSIGNEDP is not NULL, set *PUNSIGNEDP to the unsignedness of DECL after promotion.

References current_function_decl, DECL_BY_REFERENCE, DECL_MODE, promote_function_mode(), promote_mode(), TREE_CODE, TREE_TYPE, and TYPE_UNSIGNED.

Referenced by expand_expr_real_1(), expand_function_start(), expand_one_register_var(), promote_ssa_mode(), and store_constructor().

◆ promote_function_mode()

machine_mode promote_function_mode (const_tree type, machine_mode mode, int *punsignedp, const_tree funtype, int for_return)

Return the mode to use to pass or return a scalar of TYPE and MODE. PUNSIGNEDP points to the signedness of the type and may be adjusted to show what signedness to use on extension operations. FOR_RETURN is nonzero if the caller is promoting the return value of a function of type FUNTYPE; otherwise the caller is promoting arguments.

References bitint_info::extended, gcc_assert, INTEGRAL_MODE_P, NULL_TREE, targetm, TREE_CODE, TYPE_MODE, and TYPE_PRECISION.

Referenced by assign_parm_find_data_types(), assign_parm_setup_reg(), emit_library_call_value_1(), expand_call(), expand_expr_real_1(), expand_function_end(), expand_value_return(), initialize_argument_information(), prepare_libcall_arg(), promote_decl_mode(), and setup_incoming_promotions().

◆ promote_mode()

machine_mode promote_mode (const_tree type, machine_mode mode, int *punsignedp)

Return the mode to use to store a scalar of TYPE and MODE. PUNSIGNEDP points to the signedness of the type and may be adjusted to show what signedness to use on extension operations.
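Promotion rules are target-specific, so the following is only an illustration of the contract: the returned mode may be wider than MODE, and *PUNSIGNEDP may change to say which extension fills the extra bits. It is a minimal C++ sketch that models modes as bit widths and assumes a hypothetical target that widens sub-word integers to a full word and treats 32-bit values widened to 64 bits as sign-extended. The function promote_int_bits is a hypothetical name, not a GCC API.

```cpp
#include <cassert>

// Hypothetical target rule (an assumption, not any particular GCC port):
// integer values narrower than a word are widened to a full word, and
// 32-bit values widened on a 64-bit target are treated as sign-extended.
// Modes are modelled simply as bit widths.
int promote_int_bits(int bits, int *punsignedp, int word_bits) {
  if (bits >= word_bits)
    return bits;  // Already word-sized or wider: no promotion.
  if (bits == 32 && word_bits == 64 && punsignedp != nullptr)
    *punsignedp = 0;  // Report that the widening uses a sign extension.
  return word_bits;
}
```

Because *PUNSIGNEDP is an in-out parameter, callers that later emit the extension must read it back after the call rather than reuse the type's original signedness.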

References as_a(), bitint_info::extended, gcc_assert, NULL_TREE, targetm, TREE_CODE, TREE_TYPE, TYPE_ADDR_SPACE, TYPE_MODE, and TYPE_PRECISION.

Referenced by assign_temp(), default_promote_function_mode(), default_promote_function_mode_always_promote(), default_promote_function_mode_sign_extend(), expand_cond_expr_using_cmove(), precompute_arguments(), promote_decl_mode(), and promote_ssa_mode().

◆ promote_ssa_mode()

machine_mode promote_ssa_mode (const_tree name, int *punsignedp)

Return the promoted mode for NAME. If NAME is a named SSA_NAME (one with an underlying variable), the result is the same as promote_decl_mode. Otherwise, it is the promoted mode that a temporary decl of the same type as the SSA_NAME would have had, if one had been created.

References gcc_assert, promote_decl_mode(), promote_mode(), SSA_NAME_VAR, TREE_CODE, TREE_TYPE, TYPE_MODE, and TYPE_UNSIGNED.

Referenced by assign_parm_setup_block(), expand_expr_real_1(), expand_function_start(), expand_one_ssa_partition(), get_temp_reg(), gimple_can_coalesce_p(), insert_value_copy_on_edge(), and set_rtl().

◆ record_new_stack_level()

void record_new_stack_level (void)

Record a new stack level for the current function. This should be called whenever we allocate or deallocate dynamic stack space.

References cfun, global_options, UI_SJLJ, update_nonlocal_goto_save_area(), and update_sjlj_context().

Referenced by allocate_dynamic_stack_space(), expand_call(), and expand_stack_restore().

◆ round_push()

Round the size of a block to be pushed up to the boundary required by this machine. SIZE is the desired size, which need not be constant.
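The rounding is the usual round-up-to-a-multiple identity, (SIZE + ALIGN - 1) / ALIGN * ALIGN, where ALIGN is the required boundary in bytes; for a non-constant SIZE the references to expand_binop, expand_divmod and expand_mult suggest the same add, divide and multiply sequence is emitted as RTL. The following is a minimal C++ sketch of the constant case, assuming a byte alignment such as 16 (a 128-bit preferred stack boundary, as on x86-64). The name round_up_to_boundary is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: round SIZE up to the next multiple of ALIGN bytes.
int64_t round_up_to_boundary(int64_t size, int64_t align) {
  assert(size >= 0 && align > 0);
  if (align == 1)
    return size;  // Byte alignment: nothing to round.
  // Add ALIGN - 1, truncate-divide, multiply back: the smallest multiple
  // of ALIGN that is >= SIZE.
  return (size + align - 1) / align * align;
}
```

With a 16-byte boundary, a 13-byte block is pushed as 16 bytes and a 33-byte block as 48 bytes, while a 32-byte block is left unchanged.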

References CONST_INT_P, crtl, expand_binop(), expand_divmod(), expand_mult(), force_operand(), GEN_INT, INTVAL, MAX_SUPPORTED_STACK_ALIGNMENT, NULL_RTX, OPTAB_LIB_WIDEN, plus_constant(), SUPPORTS_STACK_ALIGNMENT, and virtual_preferred_stack_boundary_rtx.

Referenced by get_dynamic_stack_size().

◆ rtx_to_tree_code()

int rtx_to_tree_code (enum rtx_code code)

Look up the tree code corresponding to an rtx code, to select the arithmetic operation passed to real_arithmetic. The function returns an int because the caller may not know what `enum tree_code` means.
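The lookup is a plain switch from arithmetic rtx codes to the matching tree codes. The following is a self-contained C++ sketch that uses local stand-in enumerations in place of GCC's enum rtx_code and enum tree_code (which are generated from rtl.def and tree.def). The case list reflects our reading of explow.cc and may differ between GCC versions; rtx_to_tree_code_sketch and the NO_TREE_CODE sentinel are hypothetical.

```cpp
#include <cassert>

// Local stand-ins for GCC's enum rtx_code and enum tree_code.
enum rtx_code_sketch { PLUS, MINUS, MULT, DIV, SMIN, SMAX, NEG };
enum tree_code_sketch { PLUS_EXPR, MINUS_EXPR, MULT_EXPR, RDIV_EXPR,
                        MIN_EXPR, MAX_EXPR, NO_TREE_CODE };

// Map an arithmetic rtx code to the tree code real_arithmetic expects.
// Returns int, as the documented function does; unhandled codes map to a
// hypothetical sentinel.
int rtx_to_tree_code_sketch(rtx_code_sketch code) {
  switch (code) {
    case PLUS:  return PLUS_EXPR;
    case MINUS: return MINUS_EXPR;
    case MULT:  return MULT_EXPR;
    case DIV:   return RDIV_EXPR;  // Real (floating-point) division.
    case SMIN:  return MIN_EXPR;
    case SMAX:  return MAX_EXPR;
    default:    return NO_TREE_CODE;
  }
}
```

DIV maps to RDIV_EXPR rather than a truncating division code because real_arithmetic operates on floating-point values.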

Referenced by simplify_const_binary_operation().

◆ set_stack_check_libfunc()

void set_stack_check_libfunc (const char *libfunc_name)

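Defined in explow.cc. Sets stack_check_libfunc to a symbol for LIBFUNC_NAME, the run-time routine a front end supplies for stack checking.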

References build_decl(), build_function_type_list(), DECL_EXTERNAL, gcc_assert, get_identifier(), NULL_RTX, NULL_TREE, ptr_mode, ptr_type_node, set_stack_check_libfunc(), SET_SYMBOL_REF_DECL, stack_check_libfunc, lang_hooks_for_types::type_for_mode, lang_hooks::types, UNKNOWN_LOCATION, and void_type_node.

Referenced by set_stack_check_libfunc().

◆ trunc_int_for_mode() [1/2]

HOST_WIDE_INT trunc_int_for_mode (HOST_WIDE_INT c, machine_mode mode)

Truncate and perhaps sign-extend C as appropriate for MODE.
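Concretely, the value keeps only the low GET_MODE_PRECISION (MODE) bits of C, and the top kept bit is copied into all higher bits, so 0xFF in an 8-bit mode becomes -1. The following is a minimal C++ sketch of that step, assuming a 64-bit HOST_WIDE_INT and taking the precision directly instead of a machine_mode. The references to STORE_FLAG_VALUE suggest special handling for BImode, which this sketch omits. The name trunc_to_precision is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in: keep the low WIDTH bits of C and sign-extend
// bit WIDTH-1 into the upper bits.  BImode handling is not modelled.
int64_t trunc_to_precision(int64_t c, unsigned width) {
  assert(width >= 1 && width <= 64);
  if (width == 64)
    return c;  // Already full host width: nothing to truncate.
  uint64_t u = static_cast<uint64_t>(c);       // Well-defined modulo 2^64.
  uint64_t sign = uint64_t{1} << (width - 1);  // The mode's sign bit.
  u &= (sign << 1) - 1;                        // Truncate to WIDTH bits.
  if (u & sign)                                // Negative in the mode: extend.
    return static_cast<int64_t>(u & (sign - 1)) - static_cast<int64_t>(sign);
  return static_cast<int64_t>(u);
}
```

Doing the masking in unsigned arithmetic avoids signed-overflow undefined behaviour when WIDTH is 63; the final subtraction stays within the range of int64_t.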

References as_a(), gcc_assert, GET_MODE_PRECISION(), HOST_BITS_PER_WIDE_INT, SCALAR_INT_MODE_P, and STORE_FLAG_VALUE.

Referenced by adjust_address_1(), assign_stack_local_1(), avoid_expensive_constant(), const_int_operand(), cse_insn(), cselib_hash_plus_const_int(), cselib_hash_rtx(), do_SUBST(), eliminate_regs_in_insn(), eliminate_regs_in_insn(), expand_one_stack_var_at(), find_split_point(), gen_int_mode(), general_operand(), immediate_operand(), make_compound_operation_int(), maybe_legitimize_operand(), mem_loc_descriptor(), merge_outer_ops(), move2add_note_store(), noce_try_cmove(), noce_try_store_flag_constants(), reload_combine_recognize_pattern(), reload_cse_move2add(), simplify_context::simplify_binary_operation_1(), simplify_compare_const(), simplify_comparison(), simplify_shift_const_1(), simplify_context::simplify_truncation(), and trunc_int_for_mode().

◆ trunc_int_for_mode() [2/2]

poly_int64 trunc_int_for_mode (poly_int64 x, machine_mode mode)

Likewise for polynomial values, using the sign-extended representation for each individual coefficient.

References poly_int< N, C >::coeffs, i, NUM_POLY_INT_COEFFS, and trunc_int_for_mode().

◆ update_nonlocal_goto_save_area()

void update_nonlocal_goto_save_area (void)

Invoke emit_stack_save on the nonlocal_goto_save_area for the current function. This should be called whenever we allocate or deallocate dynamic stack space.

References build4(), cfun, emit_stack_save(), expand_expr(), EXPAND_WRITE, integer_one_node, NULL_RTX, NULL_TREE, SAVE_NONLOCAL, and TREE_TYPE.

Referenced by expand_function_start(), and record_new_stack_level().

◆ use_anchored_address()

If X is a memory reference to a member of an object block, try rewriting it to use an anchor instead. Return the new memory reference on success and the old one on failure.
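Every symbol in an object block has a known offset from the start of the block (SYMBOL_REF_BLOCK_OFFSET), so an access to SYMBOL + OFFSET can be re-expressed as ANCHOR + DISPLACEMENT for a nearby section anchor, letting several accesses share one anchor register. The following is a minimal C++ sketch of that offset arithmetic only. GCC picks the anchor with get_section_anchor based on the target's anchor offset range; the fixed-spacing rule here is an assumption, and anchor_address and AnchoredAddress are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Result of rewriting SYMBOL + OFFSET as ANCHOR + DISPLACEMENT, where both
// the symbol and the anchor are located by their offsets within one block.
struct AnchoredAddress {
  int64_t anchor_block_offset;  // Where the chosen anchor sits in the block.
  int64_t displacement;         // Constant added to the anchor's address.
};

// Hypothetical anchor choice: anchors every SPACING bytes, picking the one
// at or below the accessed byte.
AnchoredAddress anchor_address(int64_t symbol_block_offset, int64_t offset,
                               int64_t spacing) {
  assert(spacing > 0);
  int64_t pos = symbol_block_offset + offset;  // Byte position in the block.
  int64_t q = pos / spacing;
  if (pos % spacing < 0)
    --q;  // Round toward negative infinity when pos is negative.
  int64_t anchor = q * spacing;
  return {anchor, pos - anchor};
}
```

Whatever rule selects the anchor, the invariant is the same: anchor_block_offset + displacement equals the symbol's block offset plus OFFSET, so the rewritten address refers to the same byte.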

References CONST_INT_P, cse_not_expected, force_reg(), GET_CODE, GET_MODE, get_section_anchor(), INTVAL, MEM_P, NULL, place_block_symbol(), plus_constant(), replace_equiv_address(), SYMBOL_REF_ANCHOR_P, SYMBOL_REF_BLOCK, SYMBOL_REF_BLOCK_OFFSET, SYMBOL_REF_HAS_BLOCK_INFO_P, SYMBOL_REF_TLS_MODEL, targetm, and XEXP.

Referenced by emit_move_insn(), emit_move_multi_word(), expand_expr_constant(), expand_expr_real_1(), and validize_mem().

◆ validize_mem()

Convert a mem ref into one with a valid memory address. Pass through anything else unchanged.

References GET_MODE, MEM_ADDR_SPACE, MEM_P, memory_address_addr_space_p(), replace_equiv_address(), use_anchored_address(), and XEXP.

Referenced by assign_parm_adjust_entry_rtl(), assign_parm_setup_block(), assign_parm_setup_reg(), assign_parm_setup_stack(), calculate_table_based_CRC(), compress_float_constant(), emit_library_call_value_1(), emit_move_insn(), emit_push_insn(), emit_stack_probe(), emit_stack_restore(), emit_stack_save(), expand_asm_stmt(), expand_builtin_setjmp_setup(), expand_constructor(), expand_expr_real_1(), get_arg_pointer_save_area(), get_builtin_sync_mem(), init_set_costs(), load_register_parameters(), move_block_to_reg(), and store_one_arg().

Variable Documentation

◆ stack_check_libfunc

static

A front end may want to override GCC's stack checking by providing a run-time routine to call to check the stack, so provide a mechanism for calling that routine.

Referenced by probe_stack_range(), and set_stack_check_libfunc().

◆ suppress_reg_args_size

static

Controls the behavior of adjust_stack and anti_adjust_stack.

Referenced by adjust_stack_1(), and allocate_dynamic_stack_space().