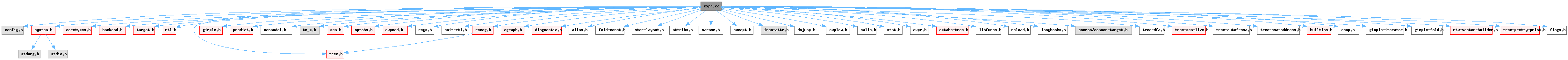

#include "config.h"
#include "system.h"
#include "coretypes.h"
#include "backend.h"
#include "target.h"
#include "rtl.h"
#include "tree.h"
#include "gimple.h"
#include "predict.h"
#include "memmodel.h"
#include "tm_p.h"
#include "ssa.h"
#include "optabs.h"
#include "expmed.h"
#include "regs.h"
#include "emit-rtl.h"
#include "recog.h"
#include "cgraph.h"
#include "diagnostic.h"
#include "alias.h"
#include "fold-const.h"
#include "stor-layout.h"
#include "attribs.h"
#include "varasm.h"
#include "except.h"
#include "insn-attr.h"
#include "dojump.h"
#include "explow.h"
#include "calls.h"
#include "stmt.h"
#include "expr.h"
#include "optabs-tree.h"
#include "libfuncs.h"
#include "reload.h"
#include "langhooks.h"
#include "common/common-target.h"
#include "tree-dfa.h"
#include "tree-ssa-live.h"
#include "tree-outof-ssa.h"
#include "tree-ssa-address.h"
#include "builtins.h"
#include "ccmp.h"
#include "gimple-iterator.h"
#include "gimple-fold.h"
#include "rtx-vector-builder.h"
#include "tree-pretty-print.h"
#include "flags.h"
#include "internal-fn.h"

Data Structures | |

| class | pieces_addr |

| class | op_by_pieces_d |

| class | move_by_pieces_d |

| class | store_by_pieces_d |

| class | compare_by_pieces_d |

Macros | |

| #define | PUSHG_P(to) |

| #define | REDUCE_BIT_FIELD(expr) |

| #define | EXTEND_BITINT(expr) |

Functions | |

| static bool | block_move_libcall_safe_for_call_parm (void) |

| static bool | emit_block_move_via_pattern (rtx, rtx, rtx, unsigned, unsigned, HOST_WIDE_INT, unsigned HOST_WIDE_INT, unsigned HOST_WIDE_INT, unsigned HOST_WIDE_INT, bool) |

| static void | emit_block_move_via_loop (rtx, rtx, rtx, unsigned, int) |

| static void | emit_block_move_via_sized_loop (rtx, rtx, rtx, unsigned, unsigned) |

| static void | emit_block_move_via_oriented_loop (rtx, rtx, rtx, unsigned, unsigned) |

| static rtx | emit_block_cmp_via_loop (rtx, rtx, rtx, tree, rtx, bool, unsigned, unsigned) |

| static rtx_insn * | compress_float_constant (rtx, rtx) |

| static rtx | get_subtarget (rtx) |

| static rtx | store_field (rtx, poly_int64, poly_int64, poly_uint64, poly_uint64, machine_mode, tree, alias_set_type, bool, bool) |

| static unsigned HOST_WIDE_INT | highest_pow2_factor_for_target (const_tree, const_tree) |

| static bool | is_aligning_offset (const_tree, const_tree) |

| static rtx | reduce_to_bit_field_precision (rtx, rtx, tree) |

| static rtx | do_store_flag (const_sepops, rtx, machine_mode) |

| static void | do_tablejump (rtx, machine_mode, rtx, rtx, rtx, profile_probability) |

| static rtx | const_vector_from_tree (tree) |

| static tree | tree_expr_size (const_tree) |

| static void | convert_mode_scalar (rtx, rtx, int) |

| void | init_expr_target (void) |

| void | init_expr (void) |

| void | convert_move (rtx to, rtx from, int unsignedp) |

| rtx | convert_to_mode (machine_mode mode, rtx x, int unsignedp) |

| rtx | convert_modes (machine_mode mode, machine_mode oldmode, rtx x, int unsignedp) |

| rtx | convert_float_to_wider_int (machine_mode mode, machine_mode fmode, rtx x) |

| rtx | convert_wider_int_to_float (machine_mode mode, machine_mode imode, rtx x) |

| static unsigned int | alignment_for_piecewise_move (unsigned int max_pieces, unsigned int align) |

| static bool | can_use_qi_vectors (by_pieces_operation op) |

| static bool | by_pieces_mode_supported_p (fixed_size_mode mode, by_pieces_operation op) |

| static fixed_size_mode | widest_fixed_size_mode_for_size (unsigned int size, by_pieces_operation op) |

| static bool | can_do_by_pieces (unsigned HOST_WIDE_INT len, unsigned int align, enum by_pieces_operation op) |

| bool | can_move_by_pieces (unsigned HOST_WIDE_INT len, unsigned int align) |

| unsigned HOST_WIDE_INT | by_pieces_ninsns (unsigned HOST_WIDE_INT l, unsigned int align, unsigned int max_size, by_pieces_operation op) |

| rtx | move_by_pieces (rtx to, rtx from, unsigned HOST_WIDE_INT len, unsigned int align, memop_ret retmode) |

| bool | can_store_by_pieces (unsigned HOST_WIDE_INT len, by_pieces_constfn constfun, void *constfundata, unsigned int align, bool memsetp) |

| rtx | store_by_pieces (rtx to, unsigned HOST_WIDE_INT len, by_pieces_constfn constfun, void *constfundata, unsigned int align, bool memsetp, memop_ret retmode) |

| void | clear_by_pieces (rtx to, unsigned HOST_WIDE_INT len, unsigned int align) |

| static rtx | compare_by_pieces (rtx arg0, rtx arg1, unsigned HOST_WIDE_INT len, rtx target, unsigned int align, by_pieces_constfn a1_cfn, void *a1_cfn_data) |

| rtx | emit_block_move_hints (rtx x, rtx y, rtx size, enum block_op_methods method, unsigned int expected_align, HOST_WIDE_INT expected_size, unsigned HOST_WIDE_INT min_size, unsigned HOST_WIDE_INT max_size, unsigned HOST_WIDE_INT probable_max_size, bool bail_out_libcall, bool *is_move_done, bool might_overlap, unsigned ctz_size) |

| rtx | emit_block_move (rtx x, rtx y, rtx size, enum block_op_methods method, unsigned int ctz_size) |

| static bool | emit_block_move_via_pattern (rtx x, rtx y, rtx size, unsigned int align, unsigned int expected_align, HOST_WIDE_INT expected_size, unsigned HOST_WIDE_INT min_size, unsigned HOST_WIDE_INT max_size, unsigned HOST_WIDE_INT probable_max_size, bool might_overlap) |

| static void | emit_block_move_via_sized_loop (rtx x, rtx y, rtx size, unsigned int align, unsigned int ctz_size) |

| static void | emit_block_move_via_oriented_loop (rtx x, rtx y, rtx size, unsigned int align, unsigned int ctz_size) |

| static void | emit_block_move_via_loop (rtx x, rtx y, rtx size, unsigned int align, int incr) |

| rtx | emit_block_op_via_libcall (enum built_in_function fncode, rtx dst, rtx src, rtx size, bool tailcall) |

| rtx | expand_cmpstrn_or_cmpmem (insn_code icode, rtx target, rtx arg1_rtx, rtx arg2_rtx, tree arg3_type, rtx arg3_rtx, HOST_WIDE_INT align) |

| static rtx | emit_block_cmp_via_cmpmem (rtx x, rtx y, rtx len, tree len_type, rtx target, unsigned align) |

| rtx | emit_block_cmp_hints (rtx x, rtx y, rtx len, tree len_type, rtx target, bool equality_only, by_pieces_constfn y_cfn, void *y_cfndata, unsigned ctz_len) |

| void | move_block_to_reg (int regno, rtx x, int nregs, machine_mode mode) |

| void | move_block_from_reg (int regno, rtx x, int nregs) |

| rtx | gen_group_rtx (rtx orig) |

| static void | emit_group_load_1 (rtx *tmps, rtx dst, rtx orig_src, tree type, poly_int64 ssize) |

| void | emit_group_load (rtx dst, rtx src, tree type, poly_int64 ssize) |

| rtx | emit_group_load_into_temps (rtx parallel, rtx src, tree type, poly_int64 ssize) |

| void | emit_group_move (rtx dst, rtx src) |

| rtx | emit_group_move_into_temps (rtx src) |

| void | emit_group_store (rtx orig_dst, rtx src, tree type, poly_int64 ssize) |

| rtx | maybe_emit_group_store (rtx x, tree type) |

| static void | copy_blkmode_from_reg (rtx target, rtx srcreg, tree type) |

| rtx | copy_blkmode_to_reg (machine_mode mode_in, tree src) |

| void | use_reg_mode (rtx *call_fusage, rtx reg, machine_mode mode) |

| void | clobber_reg_mode (rtx *call_fusage, rtx reg, machine_mode mode) |

| void | use_regs (rtx *call_fusage, int regno, int nregs) |

| void | use_group_regs (rtx *call_fusage, rtx regs) |

| static gimple * | get_def_for_expr (tree name, enum tree_code code) |

| static gimple * | get_def_for_expr_class (tree name, enum tree_code_class tclass) |

| rtx | clear_storage_hints (rtx object, rtx size, enum block_op_methods method, unsigned int expected_align, HOST_WIDE_INT expected_size, unsigned HOST_WIDE_INT min_size, unsigned HOST_WIDE_INT max_size, unsigned HOST_WIDE_INT probable_max_size, unsigned ctz_size) |

| rtx | clear_storage (rtx object, rtx size, enum block_op_methods method) |

| rtx | set_storage_via_libcall (rtx object, rtx size, rtx val, bool tailcall) |

| bool | set_storage_via_setmem (rtx object, rtx size, rtx val, unsigned int align, unsigned int expected_align, HOST_WIDE_INT expected_size, unsigned HOST_WIDE_INT min_size, unsigned HOST_WIDE_INT max_size, unsigned HOST_WIDE_INT probable_max_size) |

| void | write_complex_part (rtx cplx, rtx val, bool imag_p, bool undefined_p) |

| rtx | read_complex_part (rtx cplx, bool imag_p) |

| static rtx | emit_move_change_mode (machine_mode new_mode, machine_mode old_mode, rtx x, bool force) |

| static rtx_insn * | emit_move_via_integer (machine_mode mode, rtx x, rtx y, bool force) |

| rtx | emit_move_resolve_push (machine_mode mode, rtx x) |

| rtx_insn * | emit_move_complex_push (machine_mode mode, rtx x, rtx y) |

| rtx_insn * | emit_move_complex_parts (rtx x, rtx y) |

| static rtx_insn * | emit_move_complex (machine_mode mode, rtx x, rtx y) |

| static rtx_insn * | emit_move_ccmode (machine_mode mode, rtx x, rtx y) |

| static bool | undefined_operand_subword_p (const_rtx op, int i) |

| static rtx_insn * | emit_move_multi_word (machine_mode mode, rtx x, rtx y) |

| rtx_insn * | emit_move_insn_1 (rtx x, rtx y) |

| rtx_insn * | emit_move_insn (rtx x, rtx y) |

| rtx_insn * | gen_move_insn (rtx x, rtx y) |

| rtx | push_block (rtx size, poly_int64 extra, int below) |

| static rtx | mem_autoinc_base (rtx mem) |

| poly_int64 | find_args_size_adjust (rtx_insn *insn) |

| poly_int64 | fixup_args_size_notes (rtx_insn *prev, rtx_insn *last, poly_int64 end_args_size) |

| static int | memory_load_overlap (rtx x, rtx y, HOST_WIDE_INT size) |

| bool | emit_push_insn (rtx x, machine_mode mode, tree type, rtx size, unsigned int align, int partial, rtx reg, poly_int64 extra, rtx args_addr, rtx args_so_far, int reg_parm_stack_space, rtx alignment_pad, bool sibcall_p) |

| static bool | optimize_bitfield_assignment_op (poly_uint64 pbitsize, poly_uint64 pbitpos, poly_uint64 pbitregion_start, poly_uint64 pbitregion_end, machine_mode mode1, rtx str_rtx, tree to, tree src, bool reverse) |

| void | get_bit_range (poly_uint64 *bitstart, poly_uint64 *bitend, tree exp, poly_int64 *bitpos, tree *offset) |

| bool | non_mem_decl_p (tree base) |

| bool | mem_ref_refers_to_non_mem_p (tree ref) |

| static bool | store_field_updates_msb_p (poly_int64 bitpos, poly_int64 bitsize, rtx to_rtx) |

| void | expand_assignment (tree to, tree from, bool nontemporal) |

| bool | emit_storent_insn (rtx to, rtx from) |

| static rtx | string_cst_read_str (void *data, void *, HOST_WIDE_INT offset, fixed_size_mode mode) |

| static rtx | raw_data_cst_read_str (void *data, void *, HOST_WIDE_INT offset, fixed_size_mode mode) |

| rtx | store_expr (tree exp, rtx target, int call_param_p, bool nontemporal, bool reverse) |

| static bool | flexible_array_member_p (const_tree f, const_tree type) |

| static HOST_WIDE_INT | count_type_elements (const_tree type, bool for_ctor_p) |

| static bool | categorize_ctor_elements_1 (const_tree ctor, HOST_WIDE_INT *p_nz_elts, HOST_WIDE_INT *p_unique_nz_elts, HOST_WIDE_INT *p_init_elts, int *p_complete) |

| bool | categorize_ctor_elements (const_tree ctor, HOST_WIDE_INT *p_nz_elts, HOST_WIDE_INT *p_unique_nz_elts, HOST_WIDE_INT *p_init_elts, int *p_complete) |

| bool | immediate_const_ctor_p (const_tree ctor, unsigned int words) |

| bool | complete_ctor_at_level_p (const_tree type, HOST_WIDE_INT num_elts, const_tree last_type) |

| static bool | mostly_zeros_p (const_tree exp) |

| static bool | all_zeros_p (const_tree exp) |

| static void | store_constructor_field (rtx target, poly_uint64 bitsize, poly_int64 bitpos, poly_uint64 bitregion_start, poly_uint64 bitregion_end, machine_mode mode, tree exp, int cleared, alias_set_type alias_set, bool reverse) |

| static int | fields_length (const_tree type) |

| void | store_constructor (tree exp, rtx target, int cleared, poly_int64 size, bool reverse) |

| tree | get_inner_reference (tree exp, poly_int64 *pbitsize, poly_int64 *pbitpos, tree *poffset, machine_mode *pmode, int *punsignedp, int *preversep, int *pvolatilep) |

| static unsigned HOST_WIDE_INT | target_align (const_tree target) |

| rtx | force_operand (rtx value, rtx target) |

| bool | safe_from_p (const_rtx x, tree exp, int top_p) |

| unsigned HOST_WIDE_INT | highest_pow2_factor (const_tree exp) |

| static enum rtx_code | convert_tree_comp_to_rtx (enum tree_code tcode, int unsignedp) |

| void | expand_operands (tree exp0, tree exp1, rtx target, rtx *op0, rtx *op1, enum expand_modifier modifier) |

| static rtx | expand_expr_constant (tree exp, int defer, enum expand_modifier modifier) |

| static rtx | expand_expr_addr_expr_1 (tree exp, rtx target, scalar_int_mode tmode, enum expand_modifier modifier, addr_space_t as) |

| static rtx | expand_expr_addr_expr (tree exp, rtx target, machine_mode tmode, enum expand_modifier modifier) |

| static rtx | expand_constructor (tree exp, rtx target, enum expand_modifier modifier, bool avoid_temp_mem) |

| rtx | expand_expr_real (tree exp, rtx target, machine_mode tmode, enum expand_modifier modifier, rtx *alt_rtl, bool inner_reference_p) |

| static rtx | expand_cond_expr_using_cmove (tree treeop0, tree treeop1, tree treeop2) |

| static rtx | expand_misaligned_mem_ref (rtx temp, machine_mode mode, int unsignedp, unsigned int align, rtx target, rtx *alt_rtl) |

| static rtx | expand_expr_divmod (tree_code code, machine_mode mode, tree treeop0, tree treeop1, rtx op0, rtx op1, rtx target, int unsignedp) |

| static bool | expr_has_boolean_range (tree exp, gimple *stmt) |

| rtx | expand_expr_real_2 (const_sepops ops, rtx target, machine_mode tmode, enum expand_modifier modifier) |

| static bool | stmt_is_replaceable_p (gimple *stmt) |

| rtx | expand_expr_real_gassign (gassign *g, rtx target, machine_mode tmode, enum expand_modifier modifier, rtx *alt_rtl, bool inner_reference_p) |

| rtx | expand_expr_real_1 (tree exp, rtx target, machine_mode tmode, enum expand_modifier modifier, rtx *alt_rtl, bool inner_reference_p) |

| static tree | constant_byte_string (tree arg, tree *ptr_offset, tree *mem_size, tree *decl, bool valrep=false) |

| tree | string_constant (tree arg, tree *ptr_offset, tree *mem_size, tree *decl) |

| tree | byte_representation (tree arg, tree *ptr_offset, tree *mem_size, tree *decl) |

| enum tree_code | maybe_optimize_pow2p_mod_cmp (enum tree_code code, tree *arg0, tree *arg1) |

| enum tree_code | maybe_optimize_mod_cmp (enum tree_code code, tree *arg0, tree *arg1) |

| void | maybe_optimize_sub_cmp_0 (enum tree_code code, tree *arg0, tree *arg1) |

| static rtx | expand_single_bit_test (location_t loc, enum tree_code code, tree inner, int bitnum, tree result_type, rtx target, machine_mode mode) |

| bool | try_casesi (tree index_type, tree index_expr, tree minval, tree range, rtx table_label, rtx default_label, rtx fallback_label, profile_probability default_probability) |

| bool | try_tablejump (tree index_type, tree index_expr, tree minval, tree range, rtx table_label, rtx default_label, profile_probability default_probability) |

| static rtx | const_vector_mask_from_tree (tree exp) |

| tree | build_personality_function (const char *lang) |

| rtx | get_personality_function (tree decl) |

| rtx | expr_size (tree exp) |

| HOST_WIDE_INT | int_expr_size (const_tree exp) |

| unsigned HOST_WIDE_INT | gf2n_poly_long_div_quotient (unsigned HOST_WIDE_INT polynomial, unsigned short n) |

| static unsigned HOST_WIDE_INT | calculate_crc (unsigned HOST_WIDE_INT crc, unsigned HOST_WIDE_INT polynomial, unsigned short crc_bits) |

| static rtx | assemble_crc_table (unsigned HOST_WIDE_INT polynom, unsigned short crc_bits) |

| static rtx | generate_crc_table (unsigned HOST_WIDE_INT polynom, unsigned short crc_bits) |

| static void | calculate_table_based_CRC (rtx *crc, const rtx &input_data, const rtx &polynomial, machine_mode data_mode) |

| void | expand_crc_table_based (rtx op0, rtx op1, rtx op2, rtx op3, machine_mode data_mode) |

| void | gen_common_operation_to_reflect (rtx *op, unsigned HOST_WIDE_INT and1_value, unsigned HOST_WIDE_INT and2_value, unsigned shift_val) |

| void | reflect_64_bit_value (rtx *op) |

| void | reflect_32_bit_value (rtx *op) |

| void | reflect_16_bit_value (rtx *op) |

| void | reflect_8_bit_value (rtx *op) |

| void | generate_reflecting_code_standard (rtx *op) |

| void | expand_reversed_crc_table_based (rtx op0, rtx op1, rtx op2, rtx op3, machine_mode data_mode, void(*gen_reflecting_code)(rtx *op)) |

Variables | |

| int | cse_not_expected |

| int | bitint_extended = -1 |

Macro Definition Documentation

◆ EXTEND_BITINT

| #define EXTEND_BITINT | ( | expr | ) |

Referenced by expand_expr_real_1().

◆ PUSHG_P

| #define PUSHG_P | ( | to | ) |

Derived class from op_by_pieces_d, providing support for block move operations.

Referenced by move_by_pieces_d::move_by_pieces_d().

◆ REDUCE_BIT_FIELD

| #define REDUCE_BIT_FIELD | ( | expr | ) |

Referenced by expand_expr_real_2().

Function Documentation

◆ alignment_for_piecewise_move()

| static unsigned int | alignment_for_piecewise_move (unsigned int max_pieces, unsigned int align) |

Return the largest alignment we can use for doing a move (or store) of MAX_PIECES. ALIGN is the largest alignment we could use.

References FOR_EACH_MODE_IN_CLASS, GET_MODE_ALIGNMENT, GET_MODE_SIZE(), int_mode_for_size(), MAX, NARROWEST_INT_MODE, opt_mode< T >::require(), and targetm.

Referenced by by_pieces_ninsns(), can_store_by_pieces(), and op_by_pieces_d::op_by_pieces_d().

◆ all_zeros_p()

| static bool | all_zeros_p (const_tree exp) |

Return true if EXP contains all zeros.

References categorize_ctor_elements(), exp(), initializer_zerop(), and TREE_CODE.

Referenced by expand_constructor().

◆ assemble_crc_table()

| static rtx | assemble_crc_table (unsigned HOST_WIDE_INT polynom, unsigned short crc_bits) |

Assemble a CRC table with 256 elements for the given POLYNOM and CRC_BITS. POLYNOM is the polynomial used to calculate the CRC table's elements. CRC_BITS is the size of the CRC in bits; it may be 8, 16, and so on.

References build_array_type(), build_constructor_from_vec(), build_index_type(), build_int_cstu(), calculate_crc(), dump_file, dump_flags, gcc_assert, HOST_WIDE_INT_PRINT_HEX, i, make_unsigned_type(), MEM_P, output_constant_def(), print_rtl_single(), size_int, TDF_DETAILS, vec_alloc(), vec_safe_push(), and XEXP.

Referenced by generate_crc_table().

◆ block_move_libcall_safe_for_call_parm()

| static bool | block_move_libcall_safe_for_call_parm (void) |

A subroutine of emit_block_move. Returns true if calling the block move libcall will not clobber any parameters which may have already been placed on the stack.

References builtin_decl_implicit(), NULL_RTX, NULL_TREE, OUTGOING_REG_PARM_STACK_SPACE, REG_P, targetm, TREE_CHAIN, TREE_TYPE, TREE_VALUE, TYPE_ARG_TYPES, TYPE_MODE, and void_list_node.

Referenced by emit_block_move_hints().

◆ build_personality_function()

| tree build_personality_function | ( | const char * | lang | ) |

Build a decl for a personality function given a language prefix.

References build_decl(), build_function_type_list(), DECL_ARTIFICIAL, DECL_EXTERNAL, DECL_RTL, gcc_unreachable, get_identifier(), global_options, integer_type_node, long_long_unsigned_type_node, NULL, NULL_TREE, ptr_type_node, SET_SYMBOL_REF_DECL, TREE_PUBLIC, UI_DWARF2, UI_NONE, UI_SEH, UI_SJLJ, UI_TARGET, UNKNOWN_LOCATION, unsigned_type_node, and XEXP.

Referenced by lhd_gcc_personality().

◆ by_pieces_mode_supported_p()

| static bool | by_pieces_mode_supported_p (fixed_size_mode mode, by_pieces_operation op) |

Return true if an optab exists for MODE that supports the by-pieces operation OP.

References can_compare_p(), ccp_jump, CLEAR_BY_PIECES, COMPARE_BY_PIECES, optab_handler(), SET_BY_PIECES, and VECTOR_MODE_P.

Referenced by op_by_pieces_d::smallest_fixed_size_mode_for_size(), and widest_fixed_size_mode_for_size().

◆ by_pieces_ninsns()

| unsigned HOST_WIDE_INT | by_pieces_ninsns (unsigned HOST_WIDE_INT l, unsigned int align, unsigned int max_size, by_pieces_operation op) |

Return number of insns required to perform operation OP by pieces for L bytes. ALIGN (in bits) is maximum alignment we can assume.

References alignment_for_piecewise_move(), COMPARE_BY_PIECES, gcc_assert, GET_MODE_ALIGNMENT, GET_MODE_SIZE(), MOVE_MAX_PIECES, optab_handler(), ROUND_UP, targetm, and widest_fixed_size_mode_for_size().

Referenced by default_use_by_pieces_infrastructure_p(), and op_by_pieces_d::op_by_pieces_d().
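The counting idea behind by_pieces_ninsns() can be illustrated outside the compiler. The following is a minimal standalone sketch, not GCC's implementation: `count_pieces` is a hypothetical name, alignment is given in bytes rather than bits, and the model ignores target hooks, vector modes, optab availability and overlapping pieces. It simply covers the length with the widest power-of-two pieces first.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the counting step: how many pieces are needed to
// cover LEN bytes when each piece is a power-of-two number of bytes that is
// no larger than MAX_PIECE and no larger than ALIGN_BYTES.
// Assumption: alignment strictly limits the piece width (real targets may
// allow unaligned accesses, which this sketch does not model).
std::uint64_t count_pieces(std::uint64_t len, unsigned align_bytes,
                           unsigned max_piece)
{
    assert(max_piece != 0 && (max_piece & (max_piece - 1)) == 0);
    assert(align_bytes != 0 && (align_bytes & (align_bytes - 1)) == 0);

    // Start from the widest piece that the alignment allows.
    unsigned piece = max_piece < align_bytes ? max_piece : align_bytes;
    std::uint64_t n = 0;
    while (len != 0) {
        n += len / piece;  // as many pieces of this width as fit
        len %= piece;      // the remainder is left for narrower pieces
        piece /= 2;        // never reaches 0: len % 1 is always 0
    }
    return n;
}
```

Under these assumptions, 15 bytes with 8-byte pieces split as 8 + 4 + 2 + 1, while restricting the alignment to 4 bytes yields 4 + 4 + 4 + 2 + 1.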

◆ byte_representation()

Similar to string_constant, return a STRING_CST corresponding to the value representation of the first argument if it's a constant.

References constant_byte_string().

Referenced by getbyterep(), and gimple_fold_builtin_memchr().

◆ calculate_crc()

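| static unsigned HOST_WIDE_INT | calculate_crc (unsigned HOST_WIDE_INT crc, unsigned HOST_WIDE_INT polynomial, unsigned short crc_bits) |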

Calculate the CRC for the initial CRC and the given POLYNOMIAL. CRC_BITS is the CRC size in bits.

References CHAR_BIT, HOST_WIDE_INT_1U, and i.

Referenced by assemble_crc_table().
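A table entry of this kind is usually computed by running eight MSB-first shift-and-xor steps on the table index. The sketch below is a standalone illustration under that assumption, not a copy of the GCC function: `crc_table_entry` and `make_crc_table` are hypothetical names, and the polynomial is given without its leading 1, as in the descriptions above.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Hypothetical helper: one table entry of an MSB-first (non-reflected) CRC.
// POLYNOMIAL omits the leading 1; CRC_BITS is the CRC width (8..64).
std::uint64_t crc_table_entry(std::uint64_t index, std::uint64_t polynomial,
                              unsigned crc_bits)
{
    assert(crc_bits >= 8 && crc_bits <= 64 && index < 256);
    const std::uint64_t msb = std::uint64_t{1} << (crc_bits - 1);
    const std::uint64_t mask =
        crc_bits == 64 ? ~std::uint64_t{0}
                       : (std::uint64_t{1} << crc_bits) - 1;

    // Place the index byte in the top 8 bits of the CRC register.
    std::uint64_t crc = index << (crc_bits - 8);
    for (int bit = 0; bit < 8; ++bit)
        crc = (crc & msb) ? ((crc << 1) ^ polynomial) : (crc << 1);

    // Drop any bits shifted beyond the CRC width.
    return crc & mask;
}

// Build the full 256-entry table for the given polynomial and width.
std::array<std::uint64_t, 256> make_crc_table(std::uint64_t polynomial,
                                              unsigned crc_bits)
{
    std::array<std::uint64_t, 256> table{};
    for (unsigned i = 0; i < 256; ++i)
        table[i] = crc_table_entry(i, polynomial, crc_bits);
    return table;
}
```

With this layout, entry 1 of any table equals the polynomial itself, which is a convenient sanity check.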

◆ calculate_table_based_CRC()

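| static void | calculate_table_based_CRC (rtx *crc, const rtx &input_data, const rtx &polynomial, machine_mode data_mode) |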

Generate table-based CRC code for the given CRC, INPUT_DATA and the POLYNOMIAL (without leading 1).

First, generate a CRC table of 256 elements from POLYNOMIAL's value, then generate the assembly for the following code, where crc_bit_size and data_bit_size may be 8, 16, 32 or 64, depending on the CRC:

    for (int i = 0; i < data_bit_size / 8; i++)
      crc = (crc << 8) ^ crc_table[(crc >> (crc_bit_size - 8))
                                   ^ ((data >> (data_bit_size - (i + 1) * 8))
                                      & 0xFF)];

Table lookups therefore need 8-bit data. If the input data is wider than 8 bits, first extract the upper 8 bits, then the next 8 bits, and so on.

References convert_move(), exact_log2(), expand_and(), expand_binop(), expand_shift(), force_reg(), gen_int_mode(), gen_reg_rtx(), gen_rtx_MEM(), generate_crc_table(), GET_MODE, GET_MODE_BITSIZE(), GET_MODE_SIZE(), i, expand_operand::mode, NULL_RTX, OPTAB_DIRECT, OPTAB_WIDEN, poly_int< N, C >::to_constant(), UINTVAL, and validize_mem().

Referenced by expand_crc_table_based(), and expand_reversed_crc_table_based().
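The loop described above can be tried out on the host as plain C++. This is a minimal sketch under stated assumptions, not the RTL that GCC emits: `build_table` and `crc_fold` are hypothetical names, the CRC register is kept masked to its width after every step, and the table is built bit by bit for an MSB-first CRC.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

using CrcTable = std::array<std::uint64_t, 256>;

// All-ones mask of the given width (1..64 bits).
static std::uint64_t width_mask(unsigned bits)
{
    return bits == 64 ? ~std::uint64_t{0} : (std::uint64_t{1} << bits) - 1;
}

// Hypothetical table builder for an MSB-first CRC (polynomial without the
// leading 1).
CrcTable build_table(std::uint64_t polynomial, unsigned crc_bits)
{
    assert(crc_bits >= 8 && crc_bits <= 64);
    const std::uint64_t msb = std::uint64_t{1} << (crc_bits - 1);
    CrcTable table{};
    for (unsigned i = 0; i < 256; ++i) {
        std::uint64_t crc = std::uint64_t{i} << (crc_bits - 8);
        for (int bit = 0; bit < 8; ++bit)
            crc = (crc & msb) ? ((crc << 1) ^ polynomial) : (crc << 1);
        table[i] = crc & width_mask(crc_bits);
    }
    return table;
}

// Fold DATA (DATA_BITS wide, most significant byte first) into CRC, one
// table lookup per byte, following the loop in the description.
std::uint64_t crc_fold(std::uint64_t crc, std::uint64_t data,
                       unsigned data_bits, const CrcTable &table,
                       unsigned crc_bits)
{
    assert(data_bits % 8 == 0 && data_bits >= 8 && data_bits <= 64);
    crc &= width_mask(crc_bits);
    for (unsigned i = 0; i < data_bits / 8; ++i) {
        std::uint64_t byte = (data >> (data_bits - (i + 1) * 8)) & 0xFF;
        std::uint64_t idx = ((crc >> (crc_bits - 8)) ^ byte) & 0xFF;
        crc = ((crc << 8) ^ table[idx]) & width_mask(crc_bits);
    }
    return crc;
}
```

Folding a 16-bit value in one call gives the same result as folding its two bytes separately, upper byte first, which matches the byte-extraction order described above.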

◆ can_do_by_pieces()

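| static bool | can_do_by_pieces (unsigned HOST_WIDE_INT len, unsigned int align, enum by_pieces_operation op) |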

Determine whether an operation OP on LEN bytes with alignment ALIGN can and should be performed piecewise.

References optimize_insn_for_speed_p(), and targetm.

Referenced by can_move_by_pieces(), emit_block_cmp_hints(), and emit_block_cmp_via_loop().

◆ can_move_by_pieces()

| bool | can_move_by_pieces (unsigned HOST_WIDE_INT len, unsigned int align) |

Determine whether the LEN bytes can be moved by using several move instructions. Return true if a call to move_by_pieces should succeed.

References can_do_by_pieces(), and MOVE_BY_PIECES.

Referenced by emit_block_move_hints(), emit_block_move_via_loop(), emit_block_move_via_sized_loop(), emit_push_insn(), expand_constructor(), gimple_stringops_transform(), and gimplify_init_constructor().

◆ can_store_by_pieces()

| bool | can_store_by_pieces (unsigned HOST_WIDE_INT len, by_pieces_constfn constfun, void *constfundata, unsigned int align, bool memsetp) |

Determine whether the LEN bytes generated by CONSTFUN can be stored to memory using several move instructions. CONSTFUNDATA is a pointer which will be passed as argument in every CONSTFUN call. ALIGN is maximum alignment we can assume. MEMSETP is true if this is a memset operation and false if it's a copy of a constant string. Return true if a call to store_by_pieces should succeed.

References alignment_for_piecewise_move(), gcc_assert, GET_MODE_ALIGNMENT, GET_MODE_SIZE(), HAVE_POST_DECREMENT, HAVE_PRE_DECREMENT, optab_handler(), optimize_insn_for_speed_p(), SET_BY_PIECES, STORE_BY_PIECES, STORE_MAX_PIECES, targetm, VECTOR_MODE_P, and widest_fixed_size_mode_for_size().

Referenced by asan_emit_stack_protection(), can_store_by_multiple_pieces(), expand_builtin_memory_copy_args(), expand_builtin_memset_args(), expand_builtin_strncpy(), gimple_stringops_transform(), simplify_builtin_memcpy_memset(), store_constructor(), store_expr(), and try_store_by_multiple_pieces().
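The CONSTFUN/CONSTFUNDATA pair is a callback-plus-context pattern: the storing code asks the callback for the bytes at a given offset and never looks at the constant directly. The sketch below illustrates only that pattern on plain memory. `piece_fn`, `store_pieces`, `fill_piece` and `copy_piece` are hypothetical names, and the callback signature is an assumption of this example that differs from GCC's by_pieces_constfn.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical callback type: write NBYTES bytes of the constant, starting at
// byte OFFSET, into OUT. DATA is the opaque pointer handed to every call.
using piece_fn = void (*)(void *data, std::size_t offset, std::size_t nbytes,
                          unsigned char *out);

// Store LEN bytes to TO using the widest pieces first, fetching each piece
// from the callback.
void store_pieces(unsigned char *to, std::size_t len, piece_fn fn, void *data,
                  std::size_t max_piece)
{
    assert(max_piece >= 1 && max_piece <= 16
           && (max_piece & (max_piece - 1)) == 0);
    std::size_t offset = 0;
    for (std::size_t piece = max_piece; piece >= 1; piece /= 2) {
        while (len - offset >= piece) {
            unsigned char buf[16];
            fn(data, offset, piece, buf);
            std::memcpy(to + offset, buf, piece);
            offset += piece;
        }
    }
}

// memset-style callback: DATA points to the fill byte (the MEMSETP case).
void fill_piece(void *data, std::size_t, std::size_t nbytes,
                unsigned char *out)
{
    std::memset(out, *static_cast<unsigned char *>(data), nbytes);
}

// Copy-style callback: DATA points to a constant string.
void copy_piece(void *data, std::size_t offset, std::size_t nbytes,
                unsigned char *out)
{
    std::memcpy(out, static_cast<const char *>(data) + offset, nbytes);
}
```

Passing the fill byte or the string through the opaque pointer keeps the storing loop identical for both the memset case and the constant-string case.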

◆ can_use_qi_vectors()

| static bool | can_use_qi_vectors (by_pieces_operation op) |

Return true if we know how to implement OP using vectors of bytes.

References CLEAR_BY_PIECES, COMPARE_BY_PIECES, and SET_BY_PIECES.

Referenced by op_by_pieces_d::smallest_fixed_size_mode_for_size(), and widest_fixed_size_mode_for_size().

◆ categorize_ctor_elements()

| bool | categorize_ctor_elements (const_tree ctor, HOST_WIDE_INT *p_nz_elts, HOST_WIDE_INT *p_unique_nz_elts, HOST_WIDE_INT *p_init_elts, int *p_complete) |

Examine CTOR to discover:

* how many scalar fields are set to nonzero values, and place it in *P_NZ_ELTS;
* the same, but counting RANGE_EXPRs as a multiplier of 1 instead of high - low + 1 (this can be useful for callers to determine ctors that could be cheaply initialized with, perhaps nested, loops compared to copied from huge read-only data), and place it in *P_UNIQUE_NZ_ELTS;
* how many scalar fields in total are in CTOR, and place it in *P_INIT_ELTS;
* whether the constructor is complete, in the sense that every meaningful byte is explicitly given a value, and place it in *P_COMPLETE:
  - 0 if any field is missing
  - 1 if all fields are initialized, and there's no padding
  - -1 if all fields are initialized, but there's padding

Return whether or not CTOR is a valid static constant initializer, the same as "initializer_constant_valid_p (CTOR, TREE_TYPE (CTOR)) != 0".

References categorize_ctor_elements_1().

Referenced by all_zeros_p(), gimplify_init_constructor(), and mostly_zeros_p().
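The difference between the plain and the "unique" nonzero counts is easiest to see on a toy model. The following sketch is a simplification made for illustration, not GCC code: `CtorElt`, `CtorStats` and `categorize` are hypothetical, a constructor is modelled as a flat list of scalar values with a repeat count (standing in for a RANGE_EXPR index), and completeness is reduced to comparing the initialized count with the number of elements in the type.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical flat model of one constructor element: a scalar VALUE repeated
// COUNT times (COUNT > 1 stands in for a RANGE_EXPR covering high - low + 1
// elements).
struct CtorElt {
    std::int64_t value;
    std::int64_t count;
};

struct CtorStats {
    std::int64_t nz_elts = 0;         // nonzero scalars, ranges fully counted
    std::int64_t unique_nz_elts = 0;  // nonzero scalars, each range counted once
    std::int64_t init_elts = 0;       // all initialized scalars
    int complete = 0;                 // 0, 1 or -1 as described above
};

CtorStats categorize(const std::vector<CtorElt> &ctor, std::int64_t type_elts,
                     bool has_padding)
{
    CtorStats s;
    for (const CtorElt &e : ctor) {
        assert(e.count >= 1);
        if (e.value != 0) {
            s.nz_elts += e.count;
            s.unique_nz_elts += 1;
        }
        s.init_elts += e.count;
    }
    if (s.init_elts < type_elts)
        s.complete = 0;                     // some field is missing
    else
        s.complete = has_padding ? -1 : 1;  // all fields initialized
    return s;
}
```

A range of 100 nonzero elements contributes 100 to the first count but only 1 to the second, which is what lets a caller recognize initializers that a loop could fill cheaply.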

◆ categorize_ctor_elements_1()

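| static bool | categorize_ctor_elements_1 (const_tree ctor, HOST_WIDE_INT *p_nz_elts, HOST_WIDE_INT *p_unique_nz_elts, HOST_WIDE_INT *p_init_elts, int *p_complete) |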

Helper for categorize_ctor_elements. Identical interface.

References categorize_ctor_elements_1(), complete_ctor_at_level_p(), CONSTRUCTOR_ELTS, constructor_static_from_elts_p(), CONSTRUCTOR_ZERO_PADDING_BITS, count_type_elements(), FOR_EACH_CONSTRUCTOR_ELT, i, initializer_constant_valid_p(), initializer_zerop(), mult, NULL_TREE, RAW_DATA_LENGTH, simple_cst_equal(), TREE_CODE, tree_fits_uhwi_p(), TREE_IMAGPART, TREE_OPERAND, TREE_REALPART, TREE_STATIC, TREE_STRING_LENGTH, tree_to_uhwi(), TREE_TYPE, true, type_has_padding_at_level_p(), TYPE_REVERSE_STORAGE_ORDER, TYPE_SIZE, TYPE_VECTOR_SUBPARTS(), expand_operand::value, VECTOR_CST_ELT, and ZERO_INIT_PADDING_BITS_ALL.

Referenced by categorize_ctor_elements(), and categorize_ctor_elements_1().

◆ clear_by_pieces()

| void | clear_by_pieces (rtx to, unsigned HOST_WIDE_INT len, unsigned int align) |

Generate several move instructions to clear LEN bytes of block TO (a MEM rtx with BLKmode). ALIGN is the maximum alignment we can assume.

References builtin_memset_read_str(), and CLEAR_BY_PIECES.

Referenced by clear_storage_hints().

◆ clear_storage()

Write zeros through the storage of OBJECT. If OBJECT has BLKmode, SIZE is its length in bytes.

References clear_storage_hints(), GET_CODE, GET_MODE, GET_MODE_MASK, and UINTVAL.

Referenced by asan_clear_shadow(), expand_constructor(), store_constructor(), and store_expr().

◆ clear_storage_hints()

| rtx | clear_storage_hints (rtx object, rtx size, enum block_op_methods method, unsigned int expected_align, HOST_WIDE_INT expected_size, unsigned HOST_WIDE_INT min_size, unsigned HOST_WIDE_INT max_size, unsigned HOST_WIDE_INT probable_max_size, unsigned ctz_size) |

Write zeros through the storage of OBJECT. If OBJECT has BLKmode, SIZE is its length in bytes.

References ADDR_SPACE_GENERIC_P, BLOCK_OP_NORMAL, BLOCK_OP_TAILCALL, CLEAR_BY_PIECES, clear_by_pieces(), COMPLEX_MODE_P, CONST0_RTX, const0_rtx, CONST_INT_P, emit_move_insn(), gcc_assert, gcc_unreachable, GET_MODE, GET_MODE_INNER, GET_MODE_SIZE(), INTVAL, known_eq, MEM_ADDR_SPACE, MEM_ALIGN, expand_operand::mode, NULL, NULL_RTX, optimize_insn_for_speed_p(), poly_int_rtx_p(), set_storage_via_libcall(), set_storage_via_setmem(), targetm, try_store_by_multiple_pieces(), and write_complex_part().

Referenced by clear_storage(), and expand_builtin_memset_args().

◆ clobber_reg_mode()

Add a CLOBBER expression for REG to the (possibly empty) list pointed to by CALL_FUSAGE. REG must denote a hard register.

References gcc_assert, gen_rtx_EXPR_LIST(), expand_operand::mode, REG_P, and REGNO.

Referenced by clobber_reg().

◆ compare_by_pieces()

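| static rtx | compare_by_pieces (rtx arg0, rtx arg1, unsigned HOST_WIDE_INT len, rtx target, unsigned int align, by_pieces_constfn a1_cfn, void *a1_cfn_data) |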

Generate several move instructions to compare LEN bytes from blocks ARG0 and ARG1 (these are MEM rtx's with BLKmode). If PUSH_ROUNDING is defined and TO is NULL, emit_single_push_insn is used to push FROM to the stack. ALIGN is the maximum stack alignment we can assume. Optionally, the caller can pass a constfn and associated data in A1_CFN and A1_CFN_DATA, describing that the second operand being compared is a known constant and how to obtain its data.

References const0_rtx, const1_rtx, emit_barrier(), emit_jump(), emit_label(), emit_move_insn(), fail_label, gen_label_rtx(), gen_reg_rtx(), integer_type_node, NULL_RTX, REG_P, REGNO, and TYPE_MODE.

Referenced by emit_block_cmp_hints(), and emit_block_cmp_via_loop().
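A piecewise equality comparison can be sketched on ordinary buffers as follows. This is an illustration of the general technique only: `compare_pieces` and `piece_equal` are hypothetical names, the piece widths are fixed at 8, 4, 2 and 1 bytes, and the 0-if-equal, 1-otherwise result convention is an assumption of this sketch rather than something stated in the description above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Load one piece of type T from each buffer and compare them. memcpy keeps
// the loads well defined for unaligned addresses.
template <typename T>
static bool piece_equal(const unsigned char *a, const unsigned char *b)
{
    T x, y;
    std::memcpy(&x, a, sizeof(T));
    std::memcpy(&y, b, sizeof(T));
    return x == y;
}

// Hypothetical equality-only comparison: returns 0 if the first LEN bytes of
// both buffers are equal and 1 as soon as any piece differs.
int compare_pieces(const void *arg0, const void *arg1, std::size_t len)
{
    assert(arg0 != nullptr && arg1 != nullptr);
    const unsigned char *a = static_cast<const unsigned char *>(arg0);
    const unsigned char *b = static_cast<const unsigned char *>(arg1);
    std::size_t off = 0;

    while (len - off >= 8) {  // widest pieces first
        if (!piece_equal<std::uint64_t>(a + off, b + off))
            return 1;
        off += 8;
    }
    if (len - off >= 4) {
        if (!piece_equal<std::uint32_t>(a + off, b + off))
            return 1;
        off += 4;
    }
    if (len - off >= 2) {
        if (!piece_equal<std::uint16_t>(a + off, b + off))
            return 1;
        off += 2;
    }
    if (len - off >= 1 && a[off] != b[off])
        return 1;
    return 0;
}
```

Because only equality is tested, byte order within a piece does not matter, so wide loads are safe on any host.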

◆ complete_ctor_at_level_p()

| bool | complete_ctor_at_level_p (const_tree type, HOST_WIDE_INT num_elts, const_tree last_type) |

TYPE is initialized by a constructor with NUM_ELTS elements, the last of which had type LAST_TYPE. Each element was itself a complete initializer, in the sense that every meaningful byte was explicitly given a value. Return true if the same is true for the constructor as a whole.

References count_type_elements(), DECL_CHAIN, gcc_assert, simple_cst_equal(), TREE_CODE, TYPE_FIELDS, TYPE_SIZE, and ZERO_INIT_PADDING_BITS_UNIONS.

Referenced by categorize_ctor_elements_1().

◆ compress_float_constant()

If Y is representable exactly in a narrower mode, and the target can perform the extension directly from constant or memory, then emit the move as an extension.

References can_extend_p(), const_double_from_real_value(), CONST_DOUBLE_REAL_VALUE, emit_move_insn(), emit_unop_insn(), exact_real_truncate(), float_extend_from_mem, FOR_EACH_MODE_UNTIL, force_const_mem(), force_reg(), gen_reg_rtx(), get_last_insn(), GET_MODE, HARD_REGISTER_P, insn_operand_matches(), NULL, optimize_insn_for_speed_p(), r, REAL_VALUE_TYPE, REG_P, set_src_cost(), set_unique_reg_note(), expand_operand::target, targetm, validize_mem(), and y.

Referenced by emit_move_insn().
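The "representable exactly in a narrower mode" test has a simple host-side analogue: round-trip the value through the narrower type and check that nothing changed. The sketch below assumes the host's `double` and `float` as the wide and narrow formats; `fits_in_float` is a hypothetical name, and the function only handles finite inputs.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Hypothetical check: can the finite double Y be stored in a float without
// losing any information?
bool fits_in_float(double y)
{
    assert(std::isfinite(y));  // this sketch does not model NaN or infinity

    // Values beyond the float range cannot be narrowed exactly, and guarding
    // here keeps the conversion below within the representable range.
    if (std::fabs(y) > std::numeric_limits<float>::max())
        return false;

    float narrow = static_cast<float>(y);
    return static_cast<double>(narrow) == y;  // exact round trip?
}
```

For example, 0.5 survives the round trip while 0.1 does not, so only the former could be emitted as a narrower constant plus an extension.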

◆ const_vector_from_tree()

Return a CONST_VECTOR rtx for a VECTOR_CST tree.

References rtx_vector_builder::build(), CONST0_RTX, const_double_from_real_value(), CONST_FIXED_FROM_FIXED_VALUE, const_vector_mask_from_tree(), count, vector_builder< T, Shape, Derived >::encoded_nelts(), exp(), GET_MODE_INNER, i, immed_wide_int_const(), initializer_zerop(), expand_operand::mode, wi::to_poly_wide(), TREE_CODE, TREE_FIXED_CST, TREE_REAL_CST, TREE_TYPE, TYPE_MODE, VECTOR_BOOLEAN_TYPE_P, VECTOR_CST_ELT, VECTOR_CST_NELTS_PER_PATTERN, and VECTOR_CST_NPATTERNS.

Referenced by expand_expr_real_1().

◆ const_vector_mask_from_tree()

Return a CONST_VECTOR rtx representing vector mask for a VECTOR_CST of booleans.

References rtx_vector_builder::build(), CONST0_RTX, CONSTM1_RTX, count, vector_builder< T, Shape, Derived >::encoded_nelts(), exp(), gcc_assert, gcc_unreachable, GET_MODE_INNER, i, integer_minus_onep(), integer_onep(), integer_zerop(), expand_operand::mode, TREE_CODE, TREE_TYPE, TYPE_MODE, VECTOR_CST_ELT, VECTOR_CST_NELTS_PER_PATTERN, and VECTOR_CST_NPATTERNS.

Referenced by const_vector_from_tree().

◆ constant_byte_string()

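| static tree | constant_byte_string (tree arg, tree *ptr_offset, tree *mem_size, tree *decl, bool valrep=false) |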

Return a STRING_CST corresponding to ARG's constant initializer either if it's a string constant, or, when VALREP is set, any other constant, or null otherwise. On success, set *PTR_OFFSET to the (possibly non-constant) byte offset within the byte string that ARG references. If nonnull, set *MEM_SIZE to the size of the byte string. If nonnull, set *DECL to the constant declaration ARG refers to.

References array_ref_element_size(), array_ref_low_bound(), build_int_cst(), build_string_literal(), CHAR_BIT, char_type_node, ctor_for_folding(), DECL_P, DECL_SIZE_UNIT, error_mark_node, wi::fits_uhwi_p(), fold_build2, fold_convert, fold_ctor_reference(), gcc_assert, gcc_checking_assert, get_addr_base_and_unit_offset(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), initializer_zerop(), INT_MAX, int_size_in_bytes(), integer_onep(), integer_zero_node, integer_zerop(), INTEGRAL_TYPE_P, poly_int< N, C >::is_constant(), is_gimple_assign(), native_encode_expr(), native_encode_initializer(), NULL, NULL_TREE, POINTER_TYPE_P, r, size_int, sizetype, SSA_NAME_DEF_STMT, ssizetype, string_constant(), STRIP_NOPS, poly_int< N, C >::to_uhwi(), TREE_CODE, tree_fits_shwi_p(), tree_fits_uhwi_p(), tree_int_cst_equal(), TREE_OPERAND, TREE_STRING_LENGTH, tree_to_shwi(), tree_to_uhwi(), TREE_TYPE, TYPE_MAIN_VARIANT, TYPE_PRECISION, TYPE_SIZE_UNIT, VAR_P, and wide_int_to_tree().

Referenced by byte_representation(), and string_constant().

◆ convert_float_to_wider_int()

Variant of convert_modes for ABI parameter passing/return. Return an rtx for a value that would result from converting X from a floating point mode FMODE to wider integer mode MODE.

References convert_modes(), force_reg(), gcc_assert, gen_lowpart, int_mode_for_mode(), opt_mode< T >::require(), SCALAR_FLOAT_MODE_P, and SCALAR_INT_MODE_P.

Referenced by expand_value_return(), and precompute_register_parameters().
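As a rough illustration of what convert_float_to_wider_int() computes, the sketch below reinterprets a float as an integer of the same size and then widens it. The function name `float_bits_to_wider_int`, the choice of a 32-bit float for FMODE and a 64-bit integer for MODE, and the use of zero-extension for the widening step are all assumptions of this sketch, not facts taken from the text above.

```cpp
#include <cstdint>
#include <cstring>

// Hypothetical model: FMODE is a 32-bit float, MODE is a 64-bit integer.
std::uint64_t float_bits_to_wider_int(float x) {
    static_assert(sizeof(float) == sizeof(std::uint32_t),
                  "sketch assumes a 32-bit float");
    std::uint32_t bits;
    std::memcpy(&bits, &x, sizeof bits);      // same-size reinterpretation
    return static_cast<std::uint64_t>(bits);  // widen; zero-extension assumed
}
```

The value is never converted numerically; only its bit pattern is carried into the wider integer.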

◆ convert_mode_scalar()

Like convert_move, but deals only with scalar modes.

References ALL_SCALAR_FIXED_POINT_MODE_P, arm_bfloat_half_format, as_a(), BITS_PER_WORD, can_extend_p(), CEIL, const0_rtx, const1_rtx, convert_mode_scalar(), convert_move(), convert_optab_handler(), convert_optab_libfunc(), convert_to_mode(), copy_to_reg(), DECIMAL_FLOAT_MODE_P, direct_load, emit_clobber(), emit_insn(), emit_libcall_block(), emit_library_call_value(), emit_move_insn(), emit_store_flag_force(), emit_unop_insn(), end_sequence(), expand_binop(), expand_fixed_convert(), expand_shift(), FOR_EACH_MODE_FROM, force_lowpart_subreg(), force_reg(), gcc_assert, gcc_unreachable, gen_int_mode(), gen_lowpart, gen_reg_rtx(), GET_CODE, GET_MODE, GET_MODE_BITSIZE(), GET_MODE_CLASS, GET_MODE_PRECISION(), GET_MODE_SIZE(), HONOR_NANS(), HOST_WIDE_INT_1U, i, ibm_extended_format, ieee_half_format, ieee_quad_format, ieee_single_format, insns, int_mode_for_size(), LCT_CONST, maybe_expand_shift(), MEM_ADDR_SPACE, MEM_P, MEM_VOLATILE_P, mode_dependent_address_p(), NULL_RTX, operand_subword(), OPTAB_DIRECT, optimize_insn_for_speed_p(), REAL_MODE_FORMAT, reg_overlap_mentioned_p(), REG_P, REGNO, opt_mode< T >::require(), SCALAR_FLOAT_MODE_P, shift, simplify_unary_operation(), smallest_int_mode_for_size(), start_sequence(), targetm, TRULY_NOOP_TRUNCATION_MODES_P, word_mode, and XEXP.

Referenced by convert_mode_scalar(), and convert_move().

◆ convert_modes()

Return an rtx for a value that would result from converting X from mode OLDMODE to mode MODE. Both modes may be floating, or both integer. UNSIGNEDP is nonzero if X is an unsigned value. This can be done by referring to a part of X in place or by copying to a new temporary with conversion. You can give VOIDmode for OLDMODE, if you are sure X has a nonvoid mode.

References CONST_POLY_INT_P, CONST_SCALAR_INT_P, convert_move(), direct_load, force_subreg(), wide_int_storage::from(), gcc_assert, gen_lowpart, gen_reg_rtx(), GET_CODE, GET_MODE, GET_MODE_BITSIZE(), GET_MODE_PRECISION(), HARD_REGISTER_P, immed_wide_int_const(), is_a(), is_int_mode(), known_eq, MEM_P, MEM_VOLATILE_P, REG_P, REGNO, SIGNED, SUBREG_CHECK_PROMOTED_SIGN, subreg_promoted_mode(), SUBREG_PROMOTED_SET, SUBREG_PROMOTED_VAR_P, SUBREG_REG, targetm, TRULY_NOOP_TRUNCATION_MODES_P, UNSIGNED, and VECTOR_MODE_P.

Referenced by avoid_expensive_constant(), can_widen_mult_without_libcall(), convert_float_to_wider_int(), convert_memory_address_addr_space_1(), convert_to_mode(), emit_block_cmp_via_loop(), emit_block_move_via_loop(), emit_block_move_via_oriented_loop(), emit_library_call_value_1(), emit_store_flag_1(), emit_store_flag_int(), expand_arith_overflow_result_store(), expand_asan_emit_allocas_unpoison(), expand_binop_directly(), expand_builtin_extend_pointer(), expand_builtin_issignaling(), expand_cond_expr_using_cmove(), expand_divmod(), expand_doubleword_mod(), expand_expr_addr_expr_1(), expand_expr_force_mode(), expand_expr_real_1(), expand_expr_real_2(), expand_gimple_stmt_1(), expand_ifn_atomic_compare_exchange(), expand_ifn_atomic_compare_exchange_into_call(), expand_mul_overflow(), expand_speculation_safe_value(), expand_twoval_binop(), expand_twoval_unop(), expand_value_return(), expand_widening_mult(), expmed_mult_highpart_optab(), extract_high_half(), extract_low_bits(), inline_string_cmp(), insert_value_copy_on_edge(), maybe_legitimize_operand(), optimize_bitfield_assignment_op(), precompute_arguments(), precompute_register_parameters(), prepare_operand(), push_block(), store_expr(), store_field(), store_one_arg(), try_tablejump(), and widen_operand().

◆ convert_move()

Copy data from FROM to TO, where the machine modes are not the same. Both modes may be integer, or both may be floating, or both may be fixed-point. UNSIGNEDP should be nonzero if FROM is an unsigned type. This causes zero-extension instead of sign-extension.

References CONSTANT_P, convert_mode_scalar(), convert_move(), convert_optab_handler(), emit_move_insn(), emit_unop_insn(), force_subreg(), gcc_assert, gen_lowpart, GET_CODE, GET_MODE, GET_MODE_BITSIZE(), GET_MODE_PRECISION(), GET_MODE_UNIT_PRECISION, is_a(), known_eq, simplify_gen_subreg(), SUBREG_CHECK_PROMOTED_SIGN, subreg_promoted_mode(), SUBREG_PROMOTED_SET, SUBREG_PROMOTED_VAR_P, SUBREG_REG, VECTOR_MODE_P, and XEXP.

Referenced by assign_call_lhs(), calculate_table_based_CRC(), convert_mode_scalar(), convert_modes(), convert_move(), do_tablejump(), doloop_modify(), emit_block_cmp_via_loop(), emit_conditional_add(), emit_conditional_move_1(), emit_conditional_neg_or_complement(), emit_cstore(), emit_store_flag_1(), emit_store_flag_int(), expand_assignment(), expand_binop(), expand_builtin_memcmp(), expand_builtin_strcmp(), expand_builtin_strlen(), expand_builtin_strncmp(), expand_crc_table_based(), expand_doubleword_clz_ctz_ffs(), expand_expr_real_1(), expand_expr_real_2(), expand_ffs(), expand_fix(), expand_float(), expand_function_end(), expand_function_start(), expand_gimple_stmt_1(), expand_reversed_crc_table_based(), expand_sfix_optab(), expand_single_bit_test(), expand_twoval_binop(), expand_twoval_unop(), expand_ubsan_result_store(), expand_unop(), force_operand(), std_expand_builtin_va_start(), store_bit_field_using_insv(), store_constructor(), and store_expr().
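The effect of UNSIGNEDP in convert_move() can be shown with ordinary integers. This is only a sketch: `widen8to32` is a hypothetical helper that widens an 8-bit pattern to 32 bits, standing in for a conversion between two integer machine modes.

```cpp
#include <cstdint>

// Hypothetical helper: widen an 8-bit pattern to 32 bits.
// unsignedp selects zero-extension; otherwise the value is sign-extended.
std::uint32_t widen8to32(std::uint8_t from, bool unsignedp) {
    if (unsignedp)
        return from;  // zero-extension: upper bits become 0
    // Sign-extension: upper bits copy bit 7 of the source.
    const std::int32_t wide = static_cast<std::int8_t>(from);
    return static_cast<std::uint32_t>(wide);
}
```

With the input pattern 0xFF, the zero-extended result is 0x000000FF and the sign-extended result is 0xFFFFFFFF.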

◆ convert_to_mode()

Return an rtx for a value that would result from converting X to mode MODE. Both X and MODE may be floating, or both integer. UNSIGNEDP is nonzero if X is an unsigned value. This can be done by referring to a part of X in place or by copying to a new temporary with conversion.

References convert_modes().

Referenced by anti_adjust_stack_and_probe(), anti_adjust_stack_and_probe_stack_clash(), assign_call_lhs(), assign_parm_setup_block(), assign_parm_setup_reg(), assign_parm_setup_stack(), builtin_memset_gen_str(), convert_extracted_bit_field(), convert_mode_scalar(), copy_blkmode_from_reg(), do_tablejump(), emit_block_op_via_libcall(), emit_partition_copy(), expand_assignment(), expand_binop(), expand_builtin_bswap(), expand_builtin_crc_table_based(), expand_builtin_extract_return_addr(), expand_builtin_int_roundingfn_2(), expand_builtin_interclass_mathfn(), expand_builtin_memcmp(), expand_builtin_memset_args(), expand_builtin_powi(), expand_builtin_stack_address(), expand_builtin_strcmp(), expand_builtin_strlen(), expand_builtin_strncmp(), expand_builtin_unop(), expand_crc_optab_fn(), expand_expr_real_1(), expand_expr_real_2(), expand_fix(), expand_float(), expand_parity(), expand_POPCOUNT(), expand_sfix_optab(), expand_widening_mult(), expmed_mult_highpart(), extract_fixed_bit_field_1(), get_dynamic_stack_size(), maybe_legitimize_operand(), prepare_cmp_insn(), prepare_float_lib_cmp(), prepare_libcall_arg(), probe_stack_range(), set_storage_via_libcall(), sjlj_emit_dispatch_table(), store_expr(), store_fixed_bit_field_1(), try_casesi(), and try_store_by_multiple_pieces().

◆ convert_tree_comp_to_rtx()

Convert the tree comparison code TCODE to the rtl one where the signedness is UNSIGNEDP.

References gcc_unreachable.

Referenced by expand_cond_expr_using_cmove().

◆ convert_wider_int_to_float()

Variant of convert_modes for ABI parameter passing/return. Return an rtx for a value that would result from converting X from an integer mode IMODE to a narrower floating point mode MODE.

References force_reg(), gcc_assert, gen_lowpart, gen_lowpart_SUBREG(), int_mode_for_mode(), opt_mode< T >::require(), SCALAR_FLOAT_MODE_P, and SCALAR_INT_MODE_P.

Referenced by assign_parm_setup_stack(), expand_call(), and expand_expr_real_1().
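A minimal sketch of the idea behind convert_wider_int_to_float(), assuming IMODE is a 64-bit integer and MODE is a 32-bit float: the low part of the integer is taken and its bits are reinterpreted as the float. The name `wider_int_to_float_bits` is hypothetical, and reading "low part" as the least significant 32 bits is an inference from the gen_lowpart entries in the reference list.

```cpp
#include <cstdint>
#include <cstring>

// Hypothetical model: IMODE is a 64-bit integer, MODE is a 32-bit float.
float wider_int_to_float_bits(std::uint64_t x) {
    static_assert(sizeof(float) == sizeof(std::uint32_t),
                  "sketch assumes a 32-bit float");
    const std::uint32_t low = static_cast<std::uint32_t>(x);  // keep the low part
    float f;
    std::memcpy(&f, &low, sizeof f);  // reinterpret the bits, no numeric conversion
    return f;
}
```

Any bits above the low 32 are ignored in this model.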

◆ copy_blkmode_from_reg()

Copy a BLKmode object of TYPE out of a register SRCREG into TARGET. This is used on targets that return BLKmode values in registers.

References adjust_address, as_a(), BITS_PER_WORD, convert_to_mode(), emit_move_insn(), opt_mode< T >::exists(), extract_bit_field(), gcc_assert, GET_MODE, GET_MODE_ALIGNMENT, GET_MODE_BITSIZE(), GET_MODE_SIZE(), int_mode_for_size(), int_size_in_bytes(), MEM_ALIGN, MEM_P, MIN, expand_operand::mode, NULL, NULL_RTX, operand_subword(), operand_subword_force(), REG_P, opt_mode< T >::require(), store_bit_field(), expand_operand::target, targetm, TYPE_ALIGN, TYPE_UNSIGNED, and word_mode.

Referenced by expand_assignment(), store_expr(), and store_field().

◆ copy_blkmode_to_reg()

Copy BLKmode value SRC into a register of mode MODE_IN. Return the register if it contains any data, otherwise return null. This is used on targets that return BLKmode values in registers.

References arg_int_size_in_bytes(), as_a(), BITS_PER_WORD, CONST0_RTX, emit_move_insn(), expand_normal(), extract_bit_field(), FOR_EACH_MODE_FROM, FOR_EACH_MODE_IN_CLASS, gcc_assert, gen_lowpart, gen_reg_rtx(), GET_MODE_BITSIZE(), GET_MODE_SIZE(), i, MIN, expand_operand::mode, NULL, NULL_RTX, operand_subword(), operand_subword_force(), opt_mode< T >::require(), smallest_int_mode_for_size(), store_bit_field(), targetm, TREE_TYPE, TYPE_ALIGN, TYPE_MODE, and word_mode.

Referenced by expand_assignment(), and expand_return().

◆ count_type_elements()

|

static |

If FOR_CTOR_P, return the number of top-level elements that a constructor must have in order for it to completely initialize a value of type TYPE. Return -1 if the number isn't known. If !FOR_CTOR_P, return an estimate of the number of scalars in TYPE.

References array_type_nelts_minus_one(), count_type_elements(), DECL_CHAIN, flexible_array_member_p(), gcc_assert, gcc_unreachable, simple_cst_equal(), TREE_CODE, tree_fits_uhwi_p(), tree_to_uhwi(), TREE_TYPE, TYPE_FIELDS, TYPE_SIZE, and TYPE_VECTOR_SUBPARTS().

Referenced by categorize_ctor_elements_1(), complete_ctor_at_level_p(), and count_type_elements().

◆ do_store_flag()

|

static |

Generate code to calculate OPS, an exploded expression, using a store-flag instruction and return an rtx for the result. OPS reflects a comparison. If TARGET is nonzero, store the result there if convenient. Return zero if there is no suitable set-flag instruction available on this machine. Once expand_expr has been called on the arguments of the comparison, we are committed to doing the store flag, since it is not safe to re-evaluate the expression. We emit the store-flag insn by calling emit_store_flag, but only expand the arguments if we have a reason to believe that emit_store_flag will be successful. If we think that it will, but it isn't, we have to simulate the store-flag with a set/jump/set sequence.

References build2(), separate_ops::code, const0_rtx, do_store_flag(), emit_store_flag_force(), error_mark_node, wi::exact_log2(), expand_binop(), EXPAND_NORMAL, expand_operands(), expand_single_bit_test(), expand_vec_cmp_expr(), expand_vec_cmp_expr_p(), FUNC_OR_METHOD_TYPE_P, gcc_assert, gcc_unreachable, GEN_INT, gen_reg_rtx(), get_def_for_expr(), GET_MODE, GET_MODE_PRECISION(), get_subtarget(), gimple_assign_rhs1(), gimple_assign_rhs2(), HOST_WIDE_INT_1U, integer_all_onesp(), integer_onep(), integer_pow2p(), integer_zero_node, integer_zerop(), separate_ops::location, maybe_optimize_mod_cmp(), maybe_optimize_sub_cmp_0(), NULL_RTX, separate_ops::op0, separate_ops::op1, OPTAB_WIDEN, POINTER_TYPE_P, wi::popcount(), SCALAR_INT_MODE_P, STRIP_NOPS, swap_condition(), targetm, wi::to_wide(), TREE_CODE, tree_nonzero_bits(), TREE_TYPE, separate_ops::type, lang_hooks_for_types::type_for_mode, TYPE_MODE, TYPE_PRECISION, TYPE_UNSIGNED, TYPE_VECTOR_SUBPARTS(), lang_hooks::types, VECTOR_BOOLEAN_TYPE_P, and VECTOR_TYPE_P.

Referenced by do_store_flag(), and expand_expr_real_2().
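The difference between a store-flag instruction and the set/jump/set fallback mentioned for do_store_flag() can be sketched in plain C++. Both functions below are hypothetical and fix the comparison to "less than"; they only show that the two shapes yield the same 0/1 result.

```cpp
// Hypothetical model of a store-flag instruction for "a < b":
// the comparison result is materialized directly as 0 or 1.
int store_flag_lt(int a, int b) {
    return a < b;
}

// Fallback shape: set, conditional jump, set.
int set_jump_set_lt(int a, int b) {
    int target = 1;        // set: assume the comparison holds
    if (a < b) goto done;  // jump: skip the second set when it does
    target = 0;            // set: the comparison did not hold
done:
    return target;
}
```

The fallback costs a branch, which is presumably why the arguments are only expanded when the store-flag path is expected to succeed.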

◆ do_tablejump()

|

static |

Attempt to generate a tablejump instruction; same concept.

Subroutine of the next function. INDEX is the value being switched on, with the lowest value in the table already subtracted. MODE is its expected mode (needed if INDEX is constant). RANGE is the length of the jump table. TABLE_LABEL is a CODE_LABEL rtx for the table itself. DEFAULT_LABEL is a CODE_LABEL rtx to jump to if the index value is out of range. DEFAULT_PROBABILITY is the probability of jumping to the default label.

References as_a(), CASE_VECTOR_PC_RELATIVE, cfun, convert_move(), convert_to_mode(), copy_to_mode_reg(), emit_barrier(), emit_cmp_and_jump_insns(), emit_jump_insn(), gen_const_mem(), gen_int_mode(), gen_reg_rtx(), GET_CODE, GET_MODE_PRECISION(), GET_MODE_SIZE(), HOST_BITS_PER_WIDE_INT, HOST_WIDE_INT_1U, INTVAL, memory_address, expand_operand::mode, NULL_RTX, REG_P, simplify_gen_binary(), SUBREG_PROMOTED_SIGNED_P, SUBREG_PROMOTED_VAR_P, targetm, and UINTVAL.

Referenced by try_tablejump().
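The range check in front of a table jump, as described for do_tablejump(), can be sketched as follows. `table_dispatch` is a hypothetical function that returns a slot number instead of jumping, and it treats `range` as the highest valid biased index, which is an assumption of the sketch. Because INDEX already has the lowest case value subtracted, a single unsigned comparison rejects values both below and above the table.

```cpp
#include <cstddef>
#include <cstdint>

// index: value being switched on, lowest case value already subtracted.
// range: highest valid biased index (assumption of this sketch).
// Returns the table slot, or default_slot when the index is out of range.
std::size_t table_dispatch(std::int64_t index, std::uint64_t range,
                           std::size_t default_slot) {
    // A negative index wraps to a huge unsigned value, so one unsigned
    // "greater than" test covers index < 0 as well as index > range.
    if (static_cast<std::uint64_t>(index) > range)
        return default_slot;
    return static_cast<std::size_t>(index);
}
```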

◆ emit_block_cmp_hints()

| rtx emit_block_cmp_hints | ( | rtx | x, |

| rtx | y, | ||

| rtx | len, | ||

| tree | len_type, | ||

| rtx | target, | ||

| bool | equality_only, | ||

| by_pieces_constfn | y_cfn, | ||

| void * | y_cfndata, | ||

| unsigned | ctz_len ) |

Emit code to compare a block Y to a block X. This may be done with string-compare instructions, with multiple scalar instructions, or with a library call. Both X and Y must be MEM rtx's. LEN is an rtx that says how long they are. LEN_TYPE is the type of the expression that was used to calculate it, and CTZ_LEN is the known trailing-zeros count of LEN, so LEN must be a multiple of 1<<CTZ_LEN even if it's not constant. If EQUALITY_ONLY is true, it means we don't have to return the tri-state value of a normal memcmp call, instead we can just compare for equality. If FORCE_LIBCALL is true, we should emit a call to memcmp rather than returning NULL_RTX. Optionally, the caller can pass a constfn and associated data in Y_CFN and Y_CFNDATA, describing that the second operand being compared is a known constant and how to obtain its data. Return the result of the comparison, or NULL_RTX if we failed to perform the operation.

References adjust_address, can_do_by_pieces(), COMPARE_BY_PIECES, compare_by_pieces(), const0_rtx, CONST_INT_P, emit_block_cmp_via_cmpmem(), emit_block_cmp_via_loop(), gcc_assert, ILSOP_MEMCMP, INTVAL, MEM_ALIGN, MEM_P, MIN, expand_operand::target, and y.

Referenced by expand_builtin_memcmp().

◆ emit_block_cmp_via_cmpmem()

|

static |

Expand a block compare between X and Y with length LEN using the cmpmem optab, placing the result in TARGET. LEN_TYPE is the type of the expression that was used to calculate the length. ALIGN gives the known minimum common alignment.

References direct_optab_handler(), expand_cmpstrn_or_cmpmem(), NULL_RTX, expand_operand::target, and y.

Referenced by emit_block_cmp_hints().

◆ emit_block_cmp_via_loop()

|

static |

Like emit_block_cmp_hints, but with known alignment and no support for constants. Always expand to a loop with iterations that compare blocks of the largest compare-by-pieces size that divides both len and align, and then, if !EQUALITY_ONLY, identify the word and then the unit that first differs to return the result.

References apply_scale(), BITS_PER_WORD, can_compare_p(), can_do_by_pieces(), ccp_jump, change_address(), COMPARE_BY_PIECES, compare_by_pieces(), const0_rtx, const1_rtx, CONST_INT_P, CONSTANT_P, constm1_rtx, convert_modes(), convert_move(), wi::ctz(), do_pending_stack_adjust(), emit_block_cmp_via_loop(), emit_cmp_and_jump_insns(), emit_jump(), emit_label(), emit_move_insn(), opt_mode< T >::exists(), expand_binop(), expand_simple_binop(), force_operand(), gcc_checking_assert, GEN_INT, gen_label_rtx(), gen_reg_rtx(), get_address_mode(), GET_MODE, GET_MODE_BITSIZE(), profile_probability::guessed_always(), HOST_WIDE_INT_1U, int_mode_for_size(), integer_type_node, known_gt, MAX, NULL, NULL_RTX, OPTAB_LIB_WIDEN, REG_P, REGNO, opt_mode< T >::require(), rtx_equal_p(), simplify_gen_binary(), expand_operand::target, TYPE_MODE, UINTVAL, word_mode, XEXP, and y.

Referenced by emit_block_cmp_hints(), and emit_block_cmp_via_loop().
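The loop strategy described for emit_block_cmp_via_loop() might look like this at run time. It is a sketch under assumptions: `block_cmp_loop` and its `chunk` parameter are hypothetical, `std::memcmp` on one chunk stands in for a single wide compare, and the search for the first differing unit is done in one step rather than word first and then unit.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Compare len bytes in chunks of `chunk` bytes. When a chunk differs and a
// tri-state result is wanted, scan that chunk for the first differing byte.
int block_cmp_loop(const unsigned char* x, const unsigned char* y,
                   std::size_t len, std::size_t chunk, bool equality_only) {
    assert(chunk != 0 && len % chunk == 0);  // chunk must divide len
    for (std::size_t off = 0; off < len; off += chunk) {
        if (std::memcmp(x + off, y + off, chunk) == 0)
            continue;                         // chunks match, keep looping
        if (equality_only)
            return 1;                         // any nonzero value means "different"
        for (std::size_t i = off; i < off + chunk; ++i)
            if (x[i] != y[i])
                return x[i] < y[i] ? -1 : 1;  // first differing unit decides
    }
    return 0;                                 // all chunks equal
}
```

When only equality matters, the inner scan is skipped entirely, which is the saving EQUALITY_ONLY is meant to allow.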

◆ emit_block_move()

| rtx emit_block_move | ( | rtx | x, |

| rtx | y, | ||

| rtx | size, | ||

| enum block_op_methods | method, | ||

| unsigned int | ctz_size ) |

References emit_block_move_hints(), GET_CODE, GET_MODE, GET_MODE_MASK, NULL, UINTVAL, and y.

Referenced by assign_parm_setup_block(), assign_parm_setup_stack(), emit_library_call_value_1(), emit_move_complex(), emit_partition_copy(), emit_push_insn(), expand_assignment(), expand_builtin_apply(), expand_builtin_va_copy(), expand_call(), expand_expr_real_1(), expand_gimple_stmt_1(), store_expr(), store_field(), and store_one_arg().

◆ emit_block_move_hints()

| rtx emit_block_move_hints | ( | rtx | x, |

| rtx | y, | ||

| rtx | size, | ||

| enum block_op_methods | method, | ||

| unsigned int | expected_align, | ||

| HOST_WIDE_INT | expected_size, | ||

| unsigned HOST_WIDE_INT | min_size, | ||

| unsigned HOST_WIDE_INT | max_size, | ||

| unsigned HOST_WIDE_INT | probable_max_size, | ||

| bool | bail_out_libcall, | ||

| bool * | is_move_done, | ||

| bool | might_overlap, | ||

| unsigned | ctz_size ) |

Emit code to move a block Y to a block X. This may be done with string-move instructions, with multiple scalar move instructions, or with a library call. Both X and Y must be MEM rtx's (perhaps inside VOLATILE) with mode BLKmode. SIZE is an rtx that says how long they are. ALIGN is the maximum alignment we can assume they have. METHOD describes what kind of copy this is, and what mechanisms may be used. MIN_SIZE is the minimal size of block to move. MAX_SIZE is the maximal size of block to move; if it cannot be represented in unsigned HOST_WIDE_INT, then it is a mask of all ones. CTZ_SIZE is the trailing-zeros count of SIZE; even a nonconstant SIZE is known to be a multiple of 1<<CTZ_SIZE. Return the address of the new block, if memcpy is called and returns it, 0 otherwise.

References ADDR_SPACE_GENERIC_P, adjust_address, block_move_libcall_safe_for_call_parm(), BLOCK_OP_CALL_PARM, BLOCK_OP_NO_LIBCALL, BLOCK_OP_NO_LIBCALL_RET, BLOCK_OP_NORMAL, BLOCK_OP_TAILCALL, can_move_by_pieces(), CONST_INT_P, emit_block_copy_via_libcall(), emit_block_move_via_oriented_loop(), emit_block_move_via_pattern(), emit_block_move_via_sized_loop(), gcc_assert, gcc_unreachable, ILSOP_MEMCPY, ILSOP_MEMMOVE, INTVAL, MEM_ADDR_SPACE, MEM_ALIGN, MEM_P, MEM_VOLATILE_P, MIN, move_by_pieces(), NO_DEFER_POP, OK_DEFER_POP, pc_rtx, poly_int_rtx_p(), RETURN_BEGIN, rtx_equal_p(), set_mem_size(), shallow_copy_rtx(), and y.

Referenced by emit_block_move(), emit_block_move_via_loop(), and expand_builtin_memory_copy_args().

◆ emit_block_move_via_loop() [1/2]

A subroutine of emit_block_move. Copy the data via an explicit loop. This is used only when libcalls are forbidden, or when inlining is required. INCR is the block size to be copied in each loop iteration. If it is negative, the absolute value is used, and the block is copied backwards. INCR must be a power of two, an exact divisor for SIZE and ALIGN, and imply a mode that can be safely copied per iteration assuming no overlap.

References apply_scale(), BLOCK_OP_NO_LIBCALL, can_move_by_pieces(), change_address(), const0_rtx, convert_modes(), do_pending_stack_adjust(), emit_block_move_hints(), emit_cmp_and_jump_insns(), emit_jump(), emit_label(), emit_move_insn(), opt_mode< T >::exists(), expand_simple_binop(), force_operand(), gcc_checking_assert, GEN_INT, gen_label_rtx(), gen_reg_rtx(), get_address_mode(), GET_MODE, GET_MODE_BITSIZE(), profile_probability::guessed_always(), int_mode_for_size(), NULL_RTX, OPTAB_LIB_WIDEN, opt_mode< T >::require(), simplify_gen_binary(), word_mode, XEXP, and y.

◆ emit_block_move_via_loop() [2/2]

Referenced by emit_block_move_via_oriented_loop(), and emit_block_move_via_sized_loop().
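The role of a signed INCR in emit_block_move_via_loop() can be sketched as a run-time copy loop. `block_move_loop` is a hypothetical function; each unit is copied with `std::memmove`, which stands in for loading one unit and then storing it.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Copy size bytes from y to x in units of |incr| bytes.
// A negative incr walks the block from its end towards its start.
void block_move_loop(unsigned char* x, const unsigned char* y,
                     std::size_t size, long incr) {
    const bool backwards = incr < 0;
    const std::size_t step = static_cast<std::size_t>(backwards ? -incr : incr);
    assert(step != 0 && (step & (step - 1)) == 0);  // power of two
    assert(size % step == 0);                       // exact divisor of size
    for (std::size_t done = 0; done < size; done += step) {
        const std::size_t off = backwards ? size - step - done : done;
        std::memmove(x + off, y + off, step);       // move one unit
    }
}
```

Copying backwards is what makes an overlapping move safe when the destination lies above the source.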

◆ emit_block_move_via_oriented_loop() [1/2]

|

static |

Like emit_block_move_via_sized_loop, but besides choosing INCR so as to ensure safe moves even in case of overlap, output dynamic tests to choose between two loops, one moving downwards, another moving upwards.

References apply_scale(), CONST_INT_P, convert_modes(), wi::ctz(), do_pending_stack_adjust(), emit_block_move_via_loop(), emit_cmp_and_jump_insns(), emit_jump(), emit_label(), force_operand(), gcc_checking_assert, gen_label_rtx(), GET_MODE, GET_MODE_BITSIZE(), profile_probability::guessed_always(), HOST_WIDE_INT_1U, int_mode_for_size(), MAX, expand_operand::mode, NULL_RTX, opt_mode< T >::require(), simplify_gen_binary(), smallest_int_mode_for_size(), UINTVAL, XEXP, and y.

◆ emit_block_move_via_oriented_loop() [2/2]

Referenced by emit_block_move_hints().

◆ emit_block_move_via_pattern() [1/2]

|

static |

A subroutine of emit_block_move. Expand a cpymem or movmem pattern; return true if successful. X is the destination of the copy or move. Y is the source of the copy or move. SIZE is the size of the block to be moved. MIGHT_OVERLAP indicates this originated with expansion of a builtin_memmove() and the source and destination blocks may overlap.

References CONST_INT_P, create_convert_operand_to(), create_fixed_operand(), create_integer_operand(), direct_optab_handler(), FOR_EACH_MODE_IN_CLASS, gcc_assert, GET_MODE_BITSIZE(), GET_MODE_MASK, insn_data, INTVAL, maybe_expand_insn(), expand_operand::mode, NULL, opt_mode< T >::require(), and y.

◆ emit_block_move_via_pattern() [2/2]

|

static |

Referenced by emit_block_move_hints().

◆ emit_block_move_via_sized_loop() [1/2]

|

static |

Like emit_block_move_via_loop, but choose a suitable INCR based on ALIGN and CTZ_SIZE.

References can_move_by_pieces(), CONST_INT_P, wi::ctz(), emit_block_move_via_loop(), gcc_checking_assert, HOST_WIDE_INT_1U, MAX, UINTVAL, and y.

◆ emit_block_move_via_sized_loop() [2/2]

Referenced by emit_block_move_hints().
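One plausible way to choose INCR from ALIGN and CTZ_SIZE, as emit_block_move_via_sized_loop() is described as doing, is to take the smaller of the alignment and the largest power of two known to divide SIZE. In this sketch `choose_incr` is hypothetical, alignment is given in bytes, and `max_unit` is an assumed stand-in for whatever the target can move in one piece.

```cpp
#include <cassert>

// Pick a per-iteration unit that divides both the alignment and the size.
unsigned choose_incr(unsigned align_bytes, unsigned ctz_size, unsigned max_unit) {
    assert(align_bytes != 0 && (align_bytes & (align_bytes - 1)) == 0);
    assert(ctz_size < 32 && max_unit >= 1);
    unsigned incr = align_bytes;
    // SIZE is known to be a multiple of 1 << ctz_size.
    const unsigned long long size_multiple = 1ull << ctz_size;
    if (size_multiple < incr)
        incr = static_cast<unsigned>(size_multiple);
    // Halve until the unit is something the (assumed) target limit allows.
    while (incr > 1 && incr > max_unit)
        incr >>= 1;
    return incr;
}
```

With 8-byte alignment and a size known to be a multiple of 4, the unit would be 4 bytes in this model.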

◆ emit_block_op_via_libcall()

| rtx emit_block_op_via_libcall | ( | enum built_in_function | fncode, |

| rtx | dst, | ||

| rtx | src, | ||

| rtx | size, | ||

| bool | tailcall ) |

Expand a call to memcpy or memmove or memcmp, and return the result. TAILCALL is true if this is a tail call.

References build_call_expr(), builtin_decl_implicit(), CALL_EXPR_TAILCALL, convert_memory_address, convert_to_mode(), copy_addr_to_reg(), copy_to_mode_reg(), expand_call(), make_tree(), mark_addressable(), MEM_EXPR, NULL_RTX, ptr_mode, ptr_type_node, sizetype, TYPE_MODE, and XEXP.

Referenced by emit_block_comp_via_libcall(), emit_block_copy_via_libcall(), and emit_block_move_via_libcall().

◆ emit_group_load()

| void emit_group_load | ( | rtx | dst, |

| rtx | src, | ||

| tree | type, | ||

| poly_int64 | ssize ) |

Emit code to move a block SRC of type TYPE to a block DST, where DST is non-consecutive registers represented by a PARALLEL. SSIZE represents the total size of block ORIG_SRC in bytes, or -1 if not known.

References emit_group_load_1(), emit_move_insn(), i, NULL, XEXP, XVECEXP, and XVECLEN.

Referenced by emit_group_store(), emit_library_call_value_1(), emit_push_insn(), expand_assignment(), expand_function_end(), expand_value_return(), and store_expr().
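To picture what emit_group_load() does, a PARALLEL can be modelled as a list of (register, byte offset) entries, each filled from the matching slice of the source block. Everything in this sketch is hypothetical: `Piece` and `group_load` are illustrative names, registers are modelled as 64-bit integers, and bytes are packed little-endian, whereas the real code also deals with padding and mode changes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical entry of a PARALLEL: where a register's data starts in the
// source block and how many bytes it holds.
struct Piece {
    std::size_t offset;
    std::size_t bytes;
};

// Load each piece of the block into its own "register".
std::vector<std::uint64_t> group_load(const std::vector<Piece>& dst,
                                      const unsigned char* src,
                                      std::size_t ssize) {
    std::vector<std::uint64_t> regs;
    regs.reserve(dst.size());
    for (const Piece& p : dst) {
        assert(p.bytes <= sizeof(std::uint64_t));
        assert(p.offset + p.bytes <= ssize);  // piece must lie inside the block
        std::uint64_t r = 0;
        for (std::size_t i = 0; i < p.bytes; ++i)
            r |= static_cast<std::uint64_t>(src[p.offset + i]) << (8 * i);
        regs.push_back(r);
    }
    return regs;
}
```

The pieces need not be contiguous or of equal size, which is the point of describing the destination as a group.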

◆ emit_group_load_1()

|

static |

A subroutine of emit_group_load. Arguments as for emit_group_load, except that values are placed in TMPS[i], and must later be moved into corresponding XEXP (XVECEXP (DST, 0, i), 0) element.

References adjust_address, assign_stack_temp(), COMPLEX_MODE_P, CONSTANT_P, emit_group_load_1(), emit_move_insn(), expand_shift(), extract_bit_field(), force_reg(), force_subreg(), gcc_assert, gcc_checking_assert, gen_lowpart, gen_reg_rtx(), GET_CODE, GET_MODE, GET_MODE_ALIGNMENT, GET_MODE_SIZE(), HARD_REGISTER_P, i, int_mode_for_mode(), known_eq, known_le, maybe_gt, MEM_ALIGN, MEM_P, expand_operand::mode, NULL, NULL_RTX, PAD_DOWNWARD, PAD_UPWARD, REG_P, rtx_to_poly_int64(), SCALAR_INT_MODE_P, shift, split_double(), targetm, XEXP, XVECEXP, and XVECLEN.

Referenced by emit_group_load(), emit_group_load_1(), and emit_group_load_into_temps().

◆ emit_group_load_into_temps()

| rtx emit_group_load_into_temps | ( | rtx | parallel, |

| rtx | src, | ||

| tree | type, | ||

| poly_int64 | ssize ) |

Similar to emit_group_load, but load SRC into new pseudos in a format that looks like PARALLEL. This can later be fed to emit_group_move to get things in the right place.

References alloc_EXPR_LIST(), emit_group_load_1(), force_reg(), GET_MODE, i, REG_NOTE_KIND, rtvec_alloc(), RTVEC_ELT, XEXP, XVECEXP, and XVECLEN.

Referenced by precompute_register_parameters(), and store_one_arg().

◆ emit_group_move()

Emit code to move a block SRC to block DST, where SRC and DST are non-consecutive groups of registers, each represented by a PARALLEL.

References emit_move_insn(), gcc_assert, GET_CODE, i, XEXP, XVECEXP, and XVECLEN.

Referenced by expand_assignment(), expand_call(), expand_function_end(), load_register_parameters(), and store_expr().

◆ emit_group_move_into_temps()

Move a group of registers represented by a PARALLEL into pseudos.

References alloc_EXPR_LIST(), copy_to_reg(), GET_MODE, i, REG_NOTE_KIND, rtvec_alloc(), RTVEC_ELT, XEXP, XVECEXP, and XVECLEN.

Referenced by assign_parm_setup_block(), and expand_call().

◆ emit_group_store()

| void emit_group_store | ( | rtx | orig_dst, |

| rtx | src, | ||

| tree | type, | ||

| poly_int64 | ssize ) |

Emit code to move a block SRC to a block ORIG_DST of type TYPE, where SRC is non-consecutive registers represented by a PARALLEL. SSIZE represents the total size of block ORIG_DST, or -1 if not known.

References adjust_address, as_a(), assign_stack_temp(), COMPLEX_MODE_P, CONST0_RTX, emit_group_load(), emit_group_store(), emit_move_insn(), expand_shift(), force_subreg(), gcc_assert, gcc_checking_assert, gen_lowpart, gen_reg_rtx(), GET_CODE, GET_MODE, GET_MODE_ALIGNMENT, GET_MODE_BITSIZE(), GET_MODE_SIZE(), i, int_mode_for_mode(), known_eq, known_ge, known_le, maybe_gt, MEM_ALIGN, MEM_P, expand_operand::mode, NULL_RTX, PAD_DOWNWARD, PAD_UPWARD, REG_P, REGNO, rtx_equal_p(), rtx_to_poly_int64(), SCALAR_INT_MODE_P, shift, store_bit_field(), subreg_lowpart_offset(), targetm, XEXP, XVECEXP, and XVECLEN.

Referenced by assign_parm_adjust_entry_rtl(), assign_parm_remove_parallels(), assign_parm_setup_block(), emit_group_store(), emit_library_call_value_1(), expand_assignment(), expand_call(), maybe_emit_group_store(), store_expr(), and store_field().

◆ emit_move_ccmode()

A subroutine of emit_move_insn_1. Generate a move from Y into X. MODE is known to be MODE_CC. Returns the last instruction emitted.

References emit_insn(), emit_move_change_mode(), emit_move_via_integer(), gcc_assert, expand_operand::mode, NULL, optab_handler(), and y.

Referenced by emit_move_insn_1().

◆ emit_move_change_mode()

|

static |

A subroutine of emit_move_insn_1. Yet another lowpart generator. NEW_MODE and OLD_MODE are the same size. Return NULL if X cannot be represented in NEW_MODE. If FORCE is true, this will never happen, as we'll force-create a SUBREG if needed.

References adjust_address, adjust_address_nv, copy_replacements(), gen_rtx_MEM(), GET_MODE, MEM_COPY_ATTRIBUTES, MEM_P, new_mode(), push_operand(), reload_in_progress, simplify_gen_subreg(), simplify_subreg(), and XEXP.

Referenced by emit_move_ccmode(), and emit_move_via_integer().

◆ emit_move_complex()

A subroutine of emit_move_insn_1. Generate a move from Y into X. MODE is known to be complex. Returns the last instruction emitted.

References BLOCK_OP_NO_LIBCALL, BLOCK_OP_NORMAL, CONSTANT_P, emit_block_move(), emit_move_complex_parts(), emit_move_complex_push(), emit_move_via_integer(), gen_int_mode(), GET_CODE, get_last_insn(), get_mode_alignment(), GET_MODE_CLASS, GET_MODE_INNER, GET_MODE_SIZE(), HARD_REGISTER_P, MEM_P, expand_operand::mode, optab_handler(), optimize_insn_for_speed_p(), push_operand(), REG_NREGS, REG_P, register_operand(), and y.

Referenced by emit_move_insn_1().

◆ emit_move_complex_parts()

A subroutine of emit_move_complex. Perform the move from Y to X via two moves of the parts. Returns the last instruction emitted.

References emit_clobber(), get_last_insn(), read_complex_part(), reg_overlap_mentioned_p(), REG_P, reload_completed, reload_in_progress, write_complex_part(), and y.

Referenced by emit_move_complex().

◆ emit_move_complex_push()

A subroutine of emit_move_complex. Generate a move from Y into X. X is known to satisfy push_operand, and MODE is known to be complex. Returns the last instruction emitted.

References emit_move_insn(), emit_move_resolve_push(), gcc_unreachable, gen_rtx_MEM(), GET_CODE, GET_MODE_INNER, GET_MODE_SIZE(), expand_operand::mode, read_complex_part(), XEXP, and y.

Referenced by emit_move_complex().

◆ emit_move_insn()

Generate code to copy Y into X. Both Y and X must have the same mode, except that Y can be a constant with VOIDmode. This mode cannot be BLKmode; use emit_block_move for that. Return the last instruction emitted.

References adjust_address, compress_float_constant(), CONSTANT_P, copy_rtx(), emit_move_insn_1(), force_const_mem(), gcc_assert, GET_MODE, GET_MODE_ALIGNMENT, GET_MODE_SIZE(), known_eq, MEM_ADDR_SPACE, MEM_ALIGN, MEM_P, memory_address_addr_space_p(), expand_operand::mode, NULL_RTX, optab_handler(), push_operand(), REG_P, rtx_equal_p(), SCALAR_FLOAT_MODE_P, SET_DEST, SET_SRC, set_unique_reg_note(), simplify_subreg(), single_set(), SUBREG_P, SUBREG_REG, targetm, use_anchored_address(), validize_mem(), XEXP, and y.

Referenced by adjust_stack_1(), allocate_dynamic_stack_space(), asan_clear_shadow(), asan_emit_stack_protection(), assign_call_lhs(), assign_parm_setup_block(), assign_parm_setup_reg(), assign_parm_setup_stack(), assign_parms_unsplit_complex(), attempt_change(), avoid_likely_spilled_reg(), builtin_memset_gen_str(), builtin_memset_read_str(), clear_storage_hints(), combine_reaching_defs(), combine_var_copies_in_loop_exit(), compare_by_pieces(), compress_float_constant(), convert_mode_scalar(), convert_move(), copy_blkmode_from_reg(), copy_blkmode_to_reg(), copy_to_mode_reg(), copy_to_reg(), copy_to_suggested_reg(), curr_insn_transform(), default_speculation_safe_value(), default_zero_call_used_regs(), do_jump_by_parts_zero_rtx(), emit_block_cmp_via_loop(), emit_block_move_via_loop(), emit_conditional_move(), emit_conditional_move_1(), emit_group_load(), emit_group_load_1(), emit_group_move(), emit_group_store(), emit_initial_value_sets(), emit_libcall_block_1(), emit_library_call_value_1(), emit_move_complex_push(), emit_move_list(), emit_move_multi_word(), emit_move_resolve_push(), emit_partition_copy(), emit_push_insn(), emit_stack_probe(), emit_store_flag_force(), emit_store_flag_int(), expand_abs(), expand_absneg_bit(), expand_and(), expand_asm_stmt(), expand_assignment(), expand_atomic_compare_and_swap(), expand_atomic_fetch_op(), expand_atomic_load(), expand_atomic_store(), expand_binop(), expand_BITINTTOFLOAT(), expand_builtin_apply(), expand_builtin_apply_args_1(), expand_builtin_atomic_clear(), expand_builtin_atomic_compare_exchange(), expand_builtin_eh_copy_values(), expand_builtin_eh_return(), expand_BUILTIN_EXPECT(), expand_builtin_goacc_parlevel_id_size(), expand_builtin_init_descriptor(), expand_builtin_issignaling(), expand_builtin_longjmp(), expand_builtin_nonlocal_goto(), expand_builtin_return(), expand_builtin_setjmp_receiver(), expand_builtin_setjmp_setup(), expand_builtin_sincos(), expand_builtin_stpcpy_1(), expand_builtin_strlen(), 
expand_builtin_strub_enter(), expand_builtin_strub_leave(), expand_builtin_strub_update(), expand_call(), expand_compare_and_swap_loop(), expand_copysign_absneg(), expand_copysign_bit(), expand_dec(), expand_DIVMOD(), expand_divmod(), expand_doubleword_bswap(), expand_doubleword_mult(), expand_dw2_landing_pad_for_region(), expand_eh_return(), expand_expr_real_1(), expand_expr_real_2(), expand_fix(), expand_fixed_convert(), expand_float(), expand_function_end(), expand_function_start(), expand_gimple_stmt_1(), expand_GOACC_DIM_POS(), expand_GOACC_DIM_SIZE(), expand_HWASAN_ALLOCA_POISON(), expand_HWASAN_CHOOSE_TAG(), expand_HWASAN_SET_TAG(), expand_ifn_atomic_bit_test_and(), expand_ifn_atomic_compare_exchange_into_call(), expand_ifn_atomic_op_fetch_cmp_0(), expand_inc(), expand_movstr(), expand_mul_overflow(), expand_mult_const(), expand_one_ssa_partition(), expand_POPCOUNT(), expand_rotate_as_vec_perm(), expand_SET_EDOM(), expand_smod_pow2(), expand_strided_load_optab_fn(), expand_subword_shift(), expand_superword_shift(), expand_ubsan_result_store(), expand_unop(), expand_value_return(), expand_vec_set_optab_fn(), expand_vector_ubsan_overflow(), ext_dce_try_optimize_rshift(), extract_bit_field_1(), extract_integral_bit_field(), find_shift_sequence(), fix_crossing_unconditional_branches(), asan_redzone_buffer::flush_redzone_payload(), force_expand_binop(), force_not_mem(), force_operand(), force_reg(), get_arg_pointer_save_area(), get_dynamic_stack_base(), inherit_in_ebb(), init_one_dwarf_reg_size(), init_return_column_size(), init_set_costs(), initialize_uninitialized_regs(), inline_string_cmp(), insert_base_initialization(), insert_value_copy_on_edge(), insert_var_expansion_initialization(), instantiate_virtual_regs_in_insn(), load_register_parameters(), lra_emit_add(), lra_emit_move(), make_safe_from(), match_asm_constraints_1(), match_asm_constraints_2(), maybe_emit_unop_insn(), move_block_from_reg(), move_block_to_reg(), noce_emit_cmove(), 
noce_emit_move_insn(), noce_try_sign_bit_splat(), optimize_bitfield_assignment_op(), prepare_call_address(), prepare_copy_insn(), probe_stack_range(), resolve_shift_zext(), resolve_simple_move(), sjlj_emit_dispatch_table(), sjlj_emit_function_enter(), sjlj_mark_call_sites(), split_iv(), stack_protect_prologue(), store_bit_field(), store_bit_field_1(), store_bit_field_using_insv(), store_constructor(), store_expr(), store_fixed_bit_field_1(), store_integral_bit_field(), store_one_arg(), store_unaligned_arguments_into_pseudos(), try_store_by_multiple_pieces(), unroll_loop_runtime_iterations(), widen_bswap(), widen_operand(), and write_complex_part().

◆ emit_move_insn_1()

Low-level part of emit_move_insn. Called just like emit_move_insn, but assumes X and Y are basically valid.

References ALL_FIXED_POINT_MODE_P, COMPLEX_MODE_P, CONSTANT_P, emit_insn(), emit_move_ccmode(), emit_move_complex(), emit_move_multi_word(), emit_move_via_integer(), gcc_assert, GET_MODE, GET_MODE_BITSIZE(), GET_MODE_CLASS, HOST_BITS_PER_WIDE_INT, known_le, lra_in_progress, expand_operand::mode, optab_handler(), PATTERN(), recog(), and y.

Referenced by emit_move_insn(), and gen_move_insn().

◆ emit_move_multi_word()

A subroutine of emit_move_insn_1. Generate a move from Y into X. MODE is any multi-word or full-word mode that lacks a move_insn pattern. Note that you will get better code if you define such patterns, even if they must turn into multiple assembler instructions.
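The word-by-word strategy described above can be illustrated outside the compiler. The following is a minimal standalone sketch, not GCC code: `kWordBytes`, `ceil_div` and `move_by_words` are hypothetical names, and plain byte buffers stand in for the rtx operands. It only shows how a value wider than one word decomposes into a rounded-up number of word-sized moves.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical word size of the modelled target, in bytes.
constexpr std::size_t kWordBytes = 8;

// Round-up division, similar in spirit to the CEIL macro listed in the
// references below.
constexpr std::size_t ceil_div(std::size_t a, std::size_t b) {
  return (a + b - 1) / b;
}

// Copy SIZE bytes from SRC to DST one word at a time and return the number
// of word moves performed. In this model the final move may be shorter
// than a full word.
std::size_t move_by_words(unsigned char *dst, const unsigned char *src,
                          std::size_t size) {
  assert(dst != nullptr && src != nullptr);
  const std::size_t words = ceil_div(size, kWordBytes);
  for (std::size_t i = 0; i < words; ++i) {
    const std::size_t offset = i * kWordBytes;
    const std::size_t chunk = std::min(kWordBytes, size - offset);
    std::memcpy(dst + offset, src + offset, chunk);
  }
  return words;
}
```

With an 8-byte word, a 20-byte value takes three moves in this model, which is why a dedicated move pattern for the mode usually yields better code than the generic fallback.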

References CEIL, CONSTANT_P, emit_clobber(), emit_insn(), emit_move_insn(), emit_move_resolve_push(), end_sequence(), find_replacement(), force_const_mem(), gcc_assert, GET_CODE, GET_MODE_SIZE(), i, MEM_P, expand_operand::mode, mode_size, operand_subword(), operand_subword_force(), push_operand(), reload_completed, reload_in_progress, replace_equiv_address_nv(), start_sequence(), poly_int< N, C >::to_constant(), undefined_operand_subword_p(), use_anchored_address(), XEXP, and y.

Referenced by emit_move_insn_1().

◆ emit_move_resolve_push()

A subroutine of emit_move_insn_1. X is a push_operand in MODE. Return an equivalent MEM that does not use an auto-increment.
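As a rough illustration of what "an equivalent MEM that does not use an auto-increment" means, the sketch below models a downward-growing stack in plain C++. `ToyStack`, `resolve_push`, `store_u32` and `load_u32` are hypothetical names invented for this example. The real function works on rtx addresses and handles more addressing forms than the single pre-decrement case modelled here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Toy machine with a downward-growing stack (hypothetical model).
struct ToyStack {
  std::vector<unsigned char> memory;
  std::size_t sp;  // index of the current top of stack

  explicit ToyStack(std::size_t bytes) : memory(bytes, 0), sp(bytes) {}
};

// Step 1: adjust the stack pointer explicitly and return the plain address
// (no auto-increment) at which the pushed value will live.
std::size_t resolve_push(ToyStack &s, std::size_t size) {
  assert(size <= s.sp);
  s.sp -= size;
  return s.sp;
}

// Step 2: an ordinary store to the resolved address.
void store_u32(ToyStack &s, std::size_t addr, std::uint32_t value) {
  assert(addr + sizeof value <= s.memory.size());
  std::memcpy(&s.memory[addr], &value, sizeof value);
}

// Read the value back from a plain address.
std::uint32_t load_u32(const ToyStack &s, std::size_t addr) {
  assert(addr + sizeof(std::uint32_t) <= s.memory.size());
  std::uint32_t value;
  std::memcpy(&value, &s.memory[addr], sizeof value);
  return value;
}
```

Separating the pointer adjustment from the store lets the caller write the value in several pieces, since each piece can address a fixed offset from the already-adjusted stack pointer.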

References emit_move_insn(), expand_simple_binop(), gcc_assert, gcc_unreachable, gen_int_mode(), GET_CODE, GET_MODE_SIZE(), known_eq, expand_operand::mode, OPTAB_LIB_WIDEN, plus_constant(), replace_equiv_address(), rtx_to_poly_int64(), stack_pointer_rtx, and XEXP.

Referenced by emit_move_complex_push(), and emit_move_multi_word().

◆ emit_move_via_integer()

A subroutine of emit_move_insn_1. Generate a move from Y into X using an integer mode of the same size as MODE. Returns the instruction emitted, or NULL if such a move could not be generated.
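The idea of moving a value through an integer of the same size can be sketched in standalone C++17. This is an analogy rather than GCC code: `move_via_integer`, `copy_through` and `ThreeBytes` are hypothetical names, and the `false` return plays the role of the NULL result when no suitable integer carrier exists. Only 4- and 8-byte carriers are modelled.

```cpp
#include <cstdint>
#include <cstring>
#include <type_traits>

// Example type with no same-sized integer carrier in this model.
struct ThreeBytes { unsigned char b[3]; };

// Copy SRC to DST by way of an integer of exactly the same size.
template <typename Int, typename T>
void copy_through(T &dst, const T &src) {
  static_assert(sizeof(Int) == sizeof(T), "carrier must match the value size");
  Int bits;
  std::memcpy(&bits, &src, sizeof bits);  // reinterpret the value as an integer
  std::memcpy(&dst, &bits, sizeof bits);  // the actual "move" is an integer move
}

// Returns true if a same-sized integer carrier was found and the move was
// done, false otherwise (the caller must then use another strategy).
template <typename T>
bool move_via_integer(T &dst, const T &src) {
  static_assert(std::is_trivially_copyable<T>::value,
                "only bitwise-copyable values are modelled");
  if constexpr (sizeof(T) == 4) {
    copy_through<std::uint32_t>(dst, src);
    return true;
  } else if constexpr (sizeof(T) == 8) {
    copy_through<std::uint64_t>(dst, src);
    return true;
  } else {
    (void)dst;
    (void)src;
    return false;
  }
}
```

On a platform where `float` is 4 bytes, `move_via_integer` succeeds for `float`, while it reports failure for `ThreeBytes` so the caller can fall back to something else.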

References emit_insn(), emit_move_change_mode(), int_mode_for_mode(), expand_operand::mode, NULL, NULL_RTX, optab_handler(), and y.

Referenced by emit_move_ccmode(), emit_move_complex(), and emit_move_insn_1().

◆ emit_push_insn()

| bool emit_push_insn | ( | rtx | x, |

| machine_mode | mode, | ||

| tree | type, | ||

| rtx | size, | ||

| unsigned int | align, | ||

| int | partial, | ||

| rtx | reg, | ||

| poly_int64 | extra, | ||

| rtx | args_addr, | ||

| rtx | args_so_far, | ||

| int | reg_parm_stack_space, | ||

| rtx | alignment_pad, | ||

| bool | sibcall_p ) |

Generate code to push X onto the stack, assuming it has mode MODE and type TYPE. MODE is redundant except when X is a CONST_INT (since they don't carry mode info). SIZE is an rtx for the size of data to be copied (in bytes), needed only if X is BLKmode. Return true if successful. May return false if asked to push a partial argument during a sibcall optimization (as specified by SIBCALL_P) and the incoming and outgoing pointers cannot be shown not to overlap.

ALIGN (in bits) is the maximum alignment we can assume.

If PARTIAL and REG are both nonzero, then copy that many of the first bytes of X into registers starting with REG, and push the rest of X. The amount of space pushed is decreased by PARTIAL bytes. REG must be a hard register in this case. If REG is zero but PARTIAL is not, take all other actions for an argument partially in registers, but do not actually load any registers.

EXTRA is the amount in bytes of extra space to leave next to this arg. This is ignored if an argument block has already been allocated.

On a machine that lacks real push insns, ARGS_ADDR is the address of the bottom of the argument block for this call. We use indexing off there to store the arg. On machines with push insns, ARGS_ADDR is 0 when an argument block has not been preallocated.

ARGS_SO_FAR is the size of args previously pushed for this call.

REG_PARM_STACK_SPACE is nonzero if functions require stack space for arguments passed in registers. If nonzero, it will be the number of bytes required.
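The PARTIAL/REG rules can be summarised with a small bookkeeping model. The sketch below is a standalone illustration, not GCC code: `PushPlan` and `plan_partial_push` are hypothetical names, and it assumes for simplicity that PARTIAL is a whole number of words. It only computes how many bytes end up on the stack and how many registers are loaded.

```cpp
#include <cassert>
#include <cstddef>

// Result of splitting one argument between registers and the stack
// (hypothetical model).
struct PushPlan {
  std::size_t bytes_on_stack;  // bytes actually pushed
  std::size_t regs_loaded;     // word registers loaded with the leading bytes
};

// ARG_SIZE is the argument size in bytes, PARTIAL the number of leading
// bytes assigned to registers, HAVE_REG whether a starting register was
// supplied, and WORD_BYTES the register width in bytes.
PushPlan plan_partial_push(std::size_t arg_size, std::size_t partial,
                           bool have_reg, std::size_t word_bytes) {
  assert(word_bytes > 0);
  assert(partial <= arg_size);
  assert(partial % word_bytes == 0);  // simplifying assumption of this model

  PushPlan plan;
  // The pushed amount shrinks by PARTIAL bytes whether or not registers
  // are actually loaded.
  plan.bytes_on_stack = arg_size - partial;
  // Registers are loaded only when a starting register was given.
  plan.regs_loaded = have_reg ? partial / word_bytes : 0;
  return plan;
}
```

For example, with 8-byte words a 24-byte argument with PARTIAL equal to 8 and a register supplied pushes 16 bytes and loads one register. With PARTIAL equal to 16 and no register, 8 bytes are pushed and nothing is loaded.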

References ACCUMULATE_OUTGOING_ARGS, adjust_address, anti_adjust_stack(), assign_temp(), BLOCK_OP_CALL_PARM, can_move_by_pieces(), CONST_INT_P, CONSTANT_P, copy_to_reg(), DECL_INITIAL, emit_block_move(), emit_group_load(), emit_move_insn(), emit_push_insn(), expand_binop(), force_const_mem(), gcc_assert, GEN_INT, gen_int_mode(), gen_reg_rtx(), gen_rtx_MEM(), gen_rtx_REG(), GET_CODE, GET_MODE, GET_MODE_ALIGNMENT, GET_MODE_CLASS, GET_MODE_SIZE(), i, immediate_const_ctor_p(), int_expr_size(), INTVAL, known_eq, MEM_ALIGN, MEM_P, memory_address, memory_load_overlap(), expand_operand::mode, move_block_to_reg(), move_by_pieces(), NULL, NULL_RTX, NULL_TREE, operand_subword_force(), OPTAB_LIB_WIDEN, PAD_DOWNWARD, PAD_NONE, PAD_UPWARD, plus_constant(), poly_int_rtx_p(), push_block(), reg_mentioned_p(), REG_P, REGNO, RETURN_BEGIN, set_mem_align(), simplify_gen_binary(), STACK_GROWS_DOWNWARD, STACK_PUSH_CODE, store_constructor(), SYMBOL_REF_DECL, SYMBOL_REF_P, expand_operand::target, targetm, poly_int< N, C >::to_constant(), TREE_READONLY, TREE_SIDE_EFFECTS, lang_hooks_for_types::type_for_mode, lang_hooks::types, validize_mem(), VAR_P, virtual_outgoing_args_rtx, virtual_stack_dynamic_rtx, word_mode, and XEXP.

Referenced by emit_library_call_value_1(), emit_push_insn(), and store_one_arg().

◆ emit_storent_insn()

Emits a nontemporal store insn that moves FROM to TO. Returns true if this succeeded, false otherwise.
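The boolean result exists so that a caller can fall back to an ordinary store when no nontemporal pattern is available. A minimal standalone sketch of that try-then-fall-back shape follows. It is an assumption-laden model, not GCC code: `Mode`, `NontemporalTable`, `try_nontemporal_store` and `store_with_fallback` are hypothetical names, and a `std::map` stands in for the per-mode handler lookup.

```cpp
#include <functional>
#include <map>

// Hypothetical machine modes for the model.
enum class Mode { SI, DI, TI };

using StoreFn = std::function<void(long &to, long from)>;

// Hypothetical per-mode table standing in for a handler lookup.
struct NontemporalTable {
  std::map<Mode, StoreFn> handlers;
};

// Try the special store. Returns true on success, false if the mode has
// no handler, in which case nothing is stored.
bool try_nontemporal_store(const NontemporalTable &table, Mode mode,
                           long &to, long from) {
  const auto it = table.handlers.find(mode);
  if (it == table.handlers.end())
    return false;
  it->second(to, from);
  return true;
}

// Caller-side pattern: prefer the special store when requested, otherwise
// do a normal assignment. Returns true if the special path was used.
bool store_with_fallback(const NontemporalTable &table, Mode mode,
                         long &to, long from, bool nontemporal) {
  if (nontemporal && try_nontemporal_store(table, mode, to, from))
    return true;
  to = from;  // ordinary store
  return false;
}
```

Either way the destination ends up holding the value. The return value only reports which path was taken.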

References create_fixed_operand(), create_input_operand(), GET_MODE, maybe_expand_insn(), expand_operand::mode, and optab_handler().

Referenced by store_expr().

◆ expand_assignment()

Expand an assignment that stores the value of FROM into TO. If NONTEMPORAL is true, try generating a nontemporal store.

References ADDR_SPACE_GENERIC_P, adjust_address, aggregate_value_p(), as_a(), assign_stack_temp(), bits_to_bytes_round_down, BLOCK_OP_NORMAL, cfun, change_address(), COMPLETE_TYPE_P, COMPLEX_MODE_P, const0_rtx, convert_memory_address_addr_space(), convert_move(), convert_to_mode(), copy_blkmode_from_reg(), copy_blkmode_to_reg(), create_fixed_operand(), create_input_operand(), DECL_BIT_FIELD_TYPE, DECL_HARD_REGISTER, DECL_P, DECL_RTL, emit_block_move(), emit_block_move_via_libcall(), emit_group_load(), emit_group_move(), emit_group_store(), emit_move_insn(), expand_builtin_trap(), expand_expr(), expand_insn(), EXPAND_NORMAL, expand_normal(), EXPAND_SUM, EXPAND_WRITE, expr_size(), flip_storage_order(), force_not_mem(), force_operand(), force_subreg(), gcc_assert, gcc_checking_assert, gen_rtx_MEM(), get_address_mode(), get_alias_set(), get_bit_range(), GET_CODE, get_inner_reference(), GET_MODE, GET_MODE_ALIGNMENT, GET_MODE_BITSIZE(), GET_MODE_INNER, GET_MODE_PRECISION(), GET_MODE_SIZE(), GET_MODE_UNIT_BITSIZE, get_object_alignment(), handled_component_p(), highest_pow2_factor_for_target(), int_size_in_bytes(), known_eq, known_ge, known_le, lowpart_subreg(), maybe_emit_group_store(), maybe_gt, MEM_ALIGN, MEM_P, mem_ref_refers_to_non_mem_p(), MEM_VOLATILE_P, expand_operand::mode, NULL, NULL_RTX, NULL_TREE, num_trailing_bits, offset_address(), operand_equal_p(), optab_handler(), optimize_bitfield_assignment_op(), POINTER_TYPE_P, pop_temp_slots(), preserve_temp_slots(), push_temp_slots(), read_complex_part(), REF_REVERSE_STORAGE_ORDER, refs_may_alias_p(), REG_P, set_mem_attributes_minus_bitpos(), shallow_copy_rtx(), simplify_gen_unary(), size_int, store_bit_field(), store_expr(), store_field(), store_field_updates_msb_p(), SUBREG_P, subreg_promoted_mode(), SUBREG_PROMOTED_SIGN, SUBREG_PROMOTED_VAR_P, SUBREG_REG, subreg_unpromoted_mode(), targetm, TREE_CODE, TREE_OPERAND, TREE_TYPE, TYPE_ADDR_SPACE, TYPE_MODE, TYPE_SIZE, expand_operand::value, VAR_P, write_complex_part(), 
and XEXP.

Referenced by assign_parm_setup_reg(), expand_ACCESS_WITH_SIZE(), expand_asm_stmt(), expand_bitquery(), expand_call_stmt(), expand_DEFERRED_INIT(), expand_expr_real_1(), expand_gimple_stmt_1(), expand_LAUNDER(), store_constructor(), and ubsan_encode_value().

◆ expand_cmpstrn_or_cmpmem()

| rtx expand_cmpstrn_or_cmpmem | ( | insn_code | icode, |

| rtx | target, | ||

| rtx | arg1_rtx, | ||

| rtx | arg2_rtx, | ||

| tree | arg3_type, | ||

| rtx | arg3_rtx, | ||

| HOST_WIDE_INT | align ) |

Try to expand cmpstrn or cmpmem operation ICODE with the given operands. ARG3_TYPE is the type of ARG3_RTX. Return the result rtx on success, otherwise return null.

References create_convert_operand_from(), create_fixed_operand(), create_integer_operand(), create_output_operand(), HARD_REGISTER_P, insn_data, maybe_expand_insn(), NULL_RTX, REG_P, expand_operand::target, TYPE_MODE, TYPE_UNSIGNED, and expand_operand::value.

Referenced by emit_block_cmp_via_cmpmem(), expand_builtin_strcmp(), and expand_builtin_strncmp().

◆ expand_cond_expr_using_cmove()

Try to expand the conditional expression which is represented by TREEOP0 ? TREEOP1 : TREEOP2 using conditional moves. If it succeeds return the rtl reg which represents the result. Otherwise return NULL_RTX.
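To make the effect of a conditional move concrete, here is a small standalone sketch of a branch-free select for `cond ? a : b`. It is illustrative only: `select_branchless` is a hypothetical helper, and it says nothing about which instructions GCC actually emits on a given target.

```cpp
#include <cstdint>

// Branch-free select: returns IF_TRUE when COND is true, otherwise
// IF_FALSE. Both inputs are already computed before the select, which
// mirrors the fact that a conditional move picks between two values that
// both exist.
std::uint32_t select_branchless(bool cond, std::uint32_t if_true,
                                std::uint32_t if_false) {
  // All ones when COND is true, all zeros otherwise.
  const std::uint32_t mask = 0u - static_cast<std::uint32_t>(cond);
  return (if_true & mask) | (if_false & ~mask);
}
```

Because both operands are evaluated before the selection, this form is only a valid replacement for a branch when evaluating the unselected operand has no observable effect.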

References assign_temp(), can_conditionally_move_p(), COMPARISON_CLASS_P, const0_rtx, convert_modes(), convert_tree_comp_to_rtx(), emit_conditional_move(), emit_insn(), end_sequence(), EXPAND_NORMAL, expand_normal(), expand_operands(), gen_lowpart, get_def_for_expr_class(), GET_MODE, gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), expand_operand::mode, NULL_RTX, promote_mode(), start_sequence(), tcc_comparison, TREE_CODE, TREE_OPERAND, TREE_TYPE, TYPE_MODE, TYPE_UNSIGNED, and VECTOR_TYPE_P.

Referenced by expand_expr_real_2().

◆ expand_constructor()

|

static |

Generate code for computing CONSTRUCTOR EXP. An rtx for the computed value is returned. If AVOID_TEMP_MEM is true, NULL is returned instead of creating a temporary variable in memory, and the caller must handle that case itself.