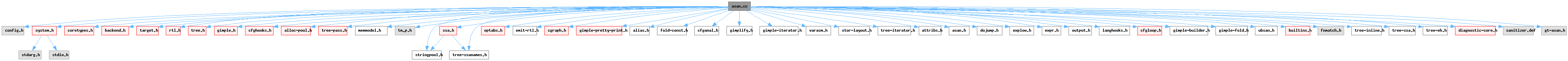

#include "config.h"
#include "system.h"
#include "coretypes.h"
#include "backend.h"
#include "target.h"
#include "rtl.h"
#include "tree.h"
#include "gimple.h"
#include "cfghooks.h"
#include "alloc-pool.h"
#include "tree-pass.h"
#include "memmodel.h"
#include "tm_p.h"
#include "ssa.h"
#include "stringpool.h"
#include "tree-ssanames.h"
#include "optabs.h"
#include "emit-rtl.h"
#include "cgraph.h"
#include "gimple-pretty-print.h"
#include "alias.h"
#include "fold-const.h"
#include "cfganal.h"
#include "gimplify.h"
#include "gimple-iterator.h"
#include "varasm.h"
#include "stor-layout.h"
#include "tree-iterator.h"
#include "attribs.h"
#include "asan.h"
#include "dojump.h"
#include "explow.h"
#include "expr.h"
#include "output.h"
#include "langhooks.h"
#include "cfgloop.h"
#include "gimple-builder.h"
#include "gimple-fold.h"
#include "ubsan.h"
#include "builtins.h"
#include "fnmatch.h"
#include "tree-inline.h"
#include "tree-ssa.h"
#include "tree-eh.h"
#include "diagnostic-core.h"
#include "sanitizer.def"
#include "gt-asan.h"

Data Structures

| Kind | Name |
| --- | --- |
| struct | hwasan_stack_var |
| struct | asan_mem_ref |
| struct | asan_mem_ref_hasher |
| class | asan_redzone_buffer |
| struct | asan_add_string_csts_data |

Variables

| Type | Name |
| --- | --- |
| static unsigned HOST_WIDE_INT | asan_shadow_offset_value |
| static bool | asan_shadow_offset_computed |
| static vec< char * > | sanitized_sections |
| static tree | last_alloca_addr |
| hash_set< tree > * | asan_handled_variables = NULL |
| hash_set< tree > * | asan_used_labels = NULL |
| static uint8_t | hwasan_frame_tag_offset = 0 |
| static rtx | hwasan_frame_base_ptr = NULL_RTX |
| static rtx_insn * | hwasan_frame_base_init_seq = NULL |
| static vec< hwasan_stack_var > | hwasan_tagged_stack_vars |
| static rtx | asan_memfn_rtls [3] |
| alias_set_type | asan_shadow_set = -1 |
| static tree | shadow_ptr_types [3] |
| static tree | asan_detect_stack_use_after_return |
| static tree | asan_shadow_memory_dynamic_address |
| static tree | asan_local_shadow_memory_dynamic_address |
| object_allocator< asan_mem_ref > | asan_mem_ref_pool ("asan_mem_ref") |
| static hash_table< asan_mem_ref_hasher > * | asan_mem_ref_ht |

Macro Definition Documentation

◆ ATTR_COLD_CONST_NORETURN_NOTHROW_LEAF_LIST

| #define ATTR_COLD_CONST_NORETURN_NOTHROW_LEAF_LIST /* ECF_COLD missing */ ATTR_CONST_NORETURN_NOTHROW_LEAF_LIST |

◆ ATTR_COLD_NORETURN_NOTHROW_LEAF_LIST

| #define ATTR_COLD_NORETURN_NOTHROW_LEAF_LIST /* ECF_COLD missing */ ATTR_NORETURN_NOTHROW_LEAF_LIST |

◆ ATTR_COLD_NOTHROW_LEAF_LIST

| #define ATTR_COLD_NOTHROW_LEAF_LIST /* ECF_COLD missing */ ATTR_NOTHROW_LEAF_LIST |

◆ ATTR_CONST_NORETURN_NOTHROW_LEAF_LIST

| #define ATTR_CONST_NORETURN_NOTHROW_LEAF_LIST ECF_CONST | ATTR_NORETURN_NOTHROW_LEAF_LIST |

◆ ATTR_NORETURN_NOTHROW_LEAF_LIST

| #define ATTR_NORETURN_NOTHROW_LEAF_LIST ECF_NORETURN | ATTR_NOTHROW_LEAF_LIST |

◆ ATTR_NOTHROW_LEAF_LIST

| #define ATTR_NOTHROW_LEAF_LIST ECF_NOTHROW | ECF_LEAF |

◆ ATTR_NOTHROW_LIST

| #define ATTR_NOTHROW_LIST ECF_NOTHROW |

◆ ATTR_PURE_NOTHROW_LEAF_LIST

| #define ATTR_PURE_NOTHROW_LEAF_LIST ECF_PURE | ATTR_NOTHROW_LEAF_LIST |

Referenced by initialize_sanitizer_builtins().

◆ ATTR_TMPURE_NORETURN_NOTHROW_LEAF_LIST

| #define ATTR_TMPURE_NORETURN_NOTHROW_LEAF_LIST ECF_TM_PURE | ATTR_NORETURN_NOTHROW_LEAF_LIST |

◆ ATTR_TMPURE_NOTHROW_LEAF_LIST

| #define ATTR_TMPURE_NOTHROW_LEAF_LIST ECF_TM_PURE | ATTR_NOTHROW_LEAF_LIST |
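The ATTR_* macros above are layered bitwise ORs of ECF_* flags. The following is a minimal sketch of that layering. The bit positions are hypothetical and chosen only for illustration; the real ECF_* values are defined elsewhere in GCC and may differ. The helper `has_flag` is likewise an illustrative name, not a GCC function.

```cpp
// Hypothetical bit assignments, for illustration only. The real ECF_*
// values are defined elsewhere in GCC and may differ.
enum : unsigned {
  ECF_CONST    = 1u << 0,
  ECF_PURE     = 1u << 1,
  ECF_NORETURN = 1u << 2,
  ECF_NOTHROW  = 1u << 3,
  ECF_LEAF     = 1u << 4,
  ECF_TM_PURE  = 1u << 5,
};

// Same layering as the macros, written as constants: each list is the
// previous list plus one more flag.
constexpr unsigned ATTR_NOTHROW_LEAF_LIST = ECF_NOTHROW | ECF_LEAF;
constexpr unsigned ATTR_NORETURN_NOTHROW_LEAF_LIST =
    ECF_NORETURN | ATTR_NOTHROW_LEAF_LIST;
constexpr unsigned ATTR_CONST_NORETURN_NOTHROW_LEAF_LIST =
    ECF_CONST | ATTR_NORETURN_NOTHROW_LEAF_LIST;

// Illustrative helper: true if every bit of FLAG is present in LIST.
constexpr bool has_flag(unsigned list, unsigned flag) {
  return (list & flag) == flag;
}
```

Because each list reuses the shorter one, a flag added to ATTR_NOTHROW_LEAF_LIST would propagate to every list built on top of it.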

◆ BT_FN_BOOL_VPTR_PTR_I16_INT_INT

| #define BT_FN_BOOL_VPTR_PTR_I16_INT_INT BT_FN_BOOL_VPTR_PTR_IX_INT_INT[4] |

◆ BT_FN_BOOL_VPTR_PTR_I1_INT_INT

| #define BT_FN_BOOL_VPTR_PTR_I1_INT_INT BT_FN_BOOL_VPTR_PTR_IX_INT_INT[0] |

◆ BT_FN_BOOL_VPTR_PTR_I2_INT_INT

| #define BT_FN_BOOL_VPTR_PTR_I2_INT_INT BT_FN_BOOL_VPTR_PTR_IX_INT_INT[1] |

◆ BT_FN_BOOL_VPTR_PTR_I4_INT_INT

| #define BT_FN_BOOL_VPTR_PTR_I4_INT_INT BT_FN_BOOL_VPTR_PTR_IX_INT_INT[2] |

◆ BT_FN_BOOL_VPTR_PTR_I8_INT_INT

| #define BT_FN_BOOL_VPTR_PTR_I8_INT_INT BT_FN_BOOL_VPTR_PTR_IX_INT_INT[3] |

◆ BT_FN_I16_CONST_VPTR_INT

| #define BT_FN_I16_CONST_VPTR_INT BT_FN_IX_CONST_VPTR_INT[4] |

◆ BT_FN_I16_VPTR_I16_INT

| #define BT_FN_I16_VPTR_I16_INT BT_FN_IX_VPTR_IX_INT[4] |

◆ BT_FN_I1_CONST_VPTR_INT

| #define BT_FN_I1_CONST_VPTR_INT BT_FN_IX_CONST_VPTR_INT[0] |

◆ BT_FN_I1_VPTR_I1_INT

| #define BT_FN_I1_VPTR_I1_INT BT_FN_IX_VPTR_IX_INT[0] |

◆ BT_FN_I2_CONST_VPTR_INT

| #define BT_FN_I2_CONST_VPTR_INT BT_FN_IX_CONST_VPTR_INT[1] |

◆ BT_FN_I2_VPTR_I2_INT

| #define BT_FN_I2_VPTR_I2_INT BT_FN_IX_VPTR_IX_INT[1] |

◆ BT_FN_I4_CONST_VPTR_INT

| #define BT_FN_I4_CONST_VPTR_INT BT_FN_IX_CONST_VPTR_INT[2] |

◆ BT_FN_I4_VPTR_I4_INT

| #define BT_FN_I4_VPTR_I4_INT BT_FN_IX_VPTR_IX_INT[2] |

◆ BT_FN_I8_CONST_VPTR_INT

| #define BT_FN_I8_CONST_VPTR_INT BT_FN_IX_CONST_VPTR_INT[3] |

◆ BT_FN_I8_VPTR_I8_INT

| #define BT_FN_I8_VPTR_I8_INT BT_FN_IX_VPTR_IX_INT[3] |

◆ BT_FN_VOID_VPTR_I16_INT

| #define BT_FN_VOID_VPTR_I16_INT BT_FN_VOID_VPTR_IX_INT[4] |

◆ BT_FN_VOID_VPTR_I1_INT

| #define BT_FN_VOID_VPTR_I1_INT BT_FN_VOID_VPTR_IX_INT[0] |

◆ BT_FN_VOID_VPTR_I2_INT

| #define BT_FN_VOID_VPTR_I2_INT BT_FN_VOID_VPTR_IX_INT[1] |

◆ BT_FN_VOID_VPTR_I4_INT

| #define BT_FN_VOID_VPTR_I4_INT BT_FN_VOID_VPTR_IX_INT[2] |

◆ BT_FN_VOID_VPTR_I8_INT

| #define BT_FN_VOID_VPTR_I8_INT BT_FN_VOID_VPTR_IX_INT[3] |
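The BT_FN_*_I1/I2/I4/I8/I16 macros above all select element 0 through 4 of a five-entry IX array, so the index is the base-2 logarithm of the access size in bytes. A small sketch of that mapping follows; the function name `ix_index` is an assumption made for this example and does not appear in GCC.

```cpp
#include <cstddef>

// Map an access size in bytes to the array index used by the I1..I16
// macros: 1 -> 0, 2 -> 1, 4 -> 2, 8 -> 3, 16 -> 4.
// Returns -1 for any other size (a choice made for this sketch).
constexpr int ix_index(std::size_t size) {
  switch (size) {
    case 1:  return 0;
    case 2:  return 1;
    case 4:  return 2;
    case 8:  return 3;
    case 16: return 4;
    default: return -1;
  }
}
```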

◆ DEF_BUILTIN_STUB

◆ DEF_SANITIZER_BUILTIN

◆ DEF_SANITIZER_BUILTIN_1

Referenced by initialize_sanitizer_builtins().

◆ RZ_BUFFER_SIZE

| #define RZ_BUFFER_SIZE 4 |

Always emit 4 bytes at a time.

Referenced by asan_redzone_buffer::asan_redzone_buffer(), asan_redzone_buffer::emit_redzone_byte(), asan_redzone_buffer::flush_if_full(), and asan_redzone_buffer::flush_redzone_payload().
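To illustrate what "4 bytes at a time" can look like, here is a hedged sketch of a buffer that collects shadow bytes and writes them out in groups of RZ_BUFFER_SIZE. The class `RedzoneBuffer` is a hypothetical stand-in, not the real asan_redzone_buffer; the little-endian packing and the zero padding on a partial flush are assumptions of the sketch.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::size_t RZ_BUFFER_SIZE = 4;

// Hypothetical stand-in for a redzone buffer. Shadow bytes are queued and
// written out as one 32-bit word per RZ_BUFFER_SIZE bytes.
class RedzoneBuffer {
public:
  // Queue one shadow byte and flush automatically once the buffer is full.
  void emit_byte(std::uint8_t b) {
    pending_.push_back(b);
    if (pending_.size() == RZ_BUFFER_SIZE)
      flush();
  }

  // Write out whatever is pending. Missing bytes are left as zero
  // (an assumption of this sketch).
  void flush() {
    if (pending_.empty())
      return;
    std::uint32_t word = 0;
    for (std::size_t i = 0; i < pending_.size(); ++i)
      word |= static_cast<std::uint32_t>(pending_[i]) << (8 * i);
    stores_.push_back(word);
    pending_.clear();
  }

  // The words "stored" so far, in emission order.
  const std::vector<std::uint32_t> &stores() const { return stores_; }

private:
  std::vector<std::uint8_t> pending_;
  std::vector<std::uint32_t> stores_;
};
```

Batching this way turns four single-byte shadow stores into one word-sized store.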

Function Documentation

◆ add_string_csts()

| int add_string_csts (constant_descriptor_tree **slot, asan_add_string_csts_data *aascd) |

Called via hash_table::traverse. Call asan_add_global on emitted STRING_CSTs from the constant hash table.

References asan_add_global(), asan_protect_global(), constant_descriptor_tree::rtl, SYMBOL_REF_DECL, TREE_ASM_WRITTEN, TREE_CODE, asan_add_string_csts_data::type, asan_add_string_csts_data::v, constant_descriptor_tree::value, and XEXP.

Referenced by asan_finish_file().

◆ asan_add_global()

static

Append description of a single global DECL into vector V. TYPE is __asan_global struct type as returned by asan_global_struct.

References asan_needs_local_alias(), asan_needs_odr_indicator_p(), asan_pp_string(), asan_red_zone_size(), assemble_alias(), build_constructor(), build_constructor_va(), build_decl(), build_fold_addr_expr, build_int_cst(), const_ptr_type_node, CONSTRUCTOR_APPEND_ELT, create_odr_indicator(), DECL_ARTIFICIAL, DECL_ASSEMBLER_NAME, DECL_CHAIN, DECL_IGNORED_P, DECL_INITIAL, DECL_NAME, DECL_NOT_GIMPLE_REG_P, DECL_SIZE_UNIT, DECL_SOURCE_LOCATION, varpool_node::dynamically_initialized, expand_location(), varpool_node::finalize_decl(), fold_convert, varpool_node::get(), get_identifier(), get_ultimate_context(), IDENTIFIER_POINTER, NULL, NULL_TREE, pp_string(), pp_tree_identifier(), TREE_ADDRESSABLE, TREE_CONSTANT, TREE_PUBLIC, TREE_READONLY, TREE_STATIC, TREE_THIS_VOLATILE, tree_to_uhwi(), TREE_TYPE, TREE_USED, TYPE_FIELDS, ubsan_get_source_location_type(), UNKNOWN_LOCATION, unsigned_type_node, and vec_safe_length().

Referenced by add_string_csts(), and asan_finish_file().

◆ asan_clear_shadow()

static

Clear LEN bytes of shadow memory at SHADOW_MEM. A library call cannot be used here.

References add_reg_br_prob_note(), adjust_automodify_address, apply_scale(), BLOCK_OP_NORMAL, CALL_P, clear_storage(), const0_rtx, copy_to_mode_reg(), emit_cmp_and_jump_insns(), emit_insn(), emit_label(), emit_move_insn(), end(), end_sequence(), expand_simple_binop(), force_reg(), gcc_assert, GEN_INT, gen_int_mode(), gen_label_rtx(), get_last_insn(), profile_probability::guessed_always(), insns, JUMP_P, NEXT_INSN(), NULL_RTX, OPTAB_LIB_WIDEN, plus_constant(), start_sequence(), and XEXP.

Referenced by asan_emit_stack_protection().

◆ asan_dynamic_init_call()

Build __asan_before_dynamic_init (module_name) or __asan_after_dynamic_init () call.

References asan_init_shadow_ptr_types(), asan_pp_string(), build_call_expr(), builtin_decl_implicit(), const_ptr_type_node, fold_convert, NULL_TREE, pp_string(), and shadow_ptr_types.

◆ asan_dynamic_shadow_offset_p()

static

References asan_shadow_offset_value, and targetm.

Referenced by asan_emit_stack_protection(), asan_maybe_insert_dynamic_shadow_at_function_entry(), and build_shadow_mem_access().

◆ asan_emit_allocas_unpoison()

Emit an __asan_allocas_unpoison (top, bot) call. The BASE parameter corresponds to the BOT argument; virtual_stack_dynamic_rtx is used for TOP. NEW_SEQUENCE indicates whether a new instruction sequence is being emitted.

References convert_memory_address, do_pending_stack_adjust(), emit_library_call(), end_sequence(), init_one_libfunc(), LCT_NORMAL, ptr_mode, push_to_sequence(), and start_sequence().

Referenced by expand_used_vars().

◆ asan_emit_stack_protection()

| rtx_insn * asan_emit_stack_protection (rtx base, rtx pbase, unsigned int alignb, HOST_WIDE_INT *offsets, tree *decls, int length) |

Insert code to protect stack vars. The prologue sequence is emitted directly; the epilogue sequence is returned. BASE is the register holding the stack base, to which the offsets in the OFFSETS array are relative. OFFSETS contains pairs of offsets in reverse order: always the end offset of some gap that needs protection, followed by its starting offset. DECLS is an array of representative decls for each var partition. LENGTH is the length of the OFFSETS array; the DECLS array is LENGTH / 2 - 1 elements long (OFFSETS includes the gap before the first variable as well as the gaps after each stack variable). PBASE is, if non-NULL, some pseudo register on which the DECL_RTLs of stack vars are based. Either BASE should be assigned to PBASE, when not doing use-after-return protection, or the corresponding address based on the __asan_stack_malloc* return value.

References adjust_address, asan_clear_shadow(), asan_detect_stack_use_after_return, asan_dynamic_shadow_offset_p(), asan_handled_variables, asan_init_shadow_ptr_types(), ASAN_MIN_RED_ZONE_SIZE, asan_pp_string(), ASAN_RED_ZONE_SIZE, ASAN_SHADOW_GRANULARITY, asan_shadow_offset(), asan_shadow_set, ASAN_SHADOW_SHIFT, ASAN_STACK_FRAME_MAGIC, ASAN_STACK_MAGIC_LEFT, ASAN_STACK_MAGIC_MIDDLE, ASAN_STACK_MAGIC_RIGHT, ASAN_STACK_MAGIC_USE_AFTER_RET, ASAN_STACK_RETIRED_MAGIC, asan_used_labels, build_decl(), build_fold_addr_expr, builtin_memset_read_str(), BUILTINS_LOCATION, can_store_by_pieces(), char_type_node, const0_rtx, convert_memory_address, crtl, current_function_decl, current_function_funcdef_no, DECL_ALIGN_RAW, DECL_ARTIFICIAL, DECL_EXTERNAL, DECL_IGNORED_P, DECL_INITIAL, DECL_NAME, DECL_P, DECL_SOURCE_LOCATION, decls, do_pending_stack_adjust(), dump_file, dump_flags, emit_cmp_and_jump_insns(), emit_jump(), emit_label(), emit_library_call(), emit_library_call_value(), emit_move_insn(), asan_redzone_buffer::emit_redzone_byte(), end_sequence(), expand_binop(), expand_location(), expand_normal(), expand_simple_binop(), floor_log2(), gcc_assert, gcc_checking_assert, GEN_INT, gen_int_mode(), gen_int_shift_amount(), gen_label_rtx(), gen_reg_rtx(), gen_rtx_MEM(), get_asan_shadow_memory_dynamic_address_decl(), get_identifier(), GET_MODE_ALIGNMENT, GET_MODE_SIZE(), HOST_WIDE_INT_1, IDENTIFIER_LENGTH, IDENTIFIER_POINTER, init_one_libfunc(), insns, integer_type_node, LCT_NORMAL, asan_redzone_buffer::m_shadow_bytes, NULL, NULL_RTX, NULL_TREE, OPTAB_DIRECT, OPTAB_WIDEN, plus_constant(), pointer_sized_int_node, pp_decimal_int, pp_space, pp_string(), pp_tree_identifier(), pp_wide_integer(), PRId64, ptr_mode, RETURN_BEGIN, seen_error(), SET_DECL_ASSEMBLER_NAME, set_mem_alias_set(), set_mem_align(), set_storage_via_setmem(), shadow_ptr_types, start_sequence(), store_by_pieces(), TDF_DETAILS, TREE_ADDRESSABLE, TREE_ASM_WRITTEN, TREE_PUBLIC, TREE_READONLY, TREE_STATIC, TREE_USED, 
TYPE_MODE, profile_probability::very_likely(), and profile_probability::very_unlikely().

Referenced by expand_used_vars().

◆ asan_expand_check_ifn()

| bool asan_expand_check_ifn (gimple_stmt_iterator *iter, bool use_calls) |

Expand the ASAN_{LOAD,STORE} builtins.

References as_a(), ASAN_CHECK_LAST, ASAN_CHECK_NON_ZERO_LEN, ASAN_CHECK_SCALAR_ACCESS, ASAN_CHECK_STORE, build_assign(), build_int_cst(), build_shadow_mem_access(), build_type_cast(), check_func(), create_cond_insert_point(), g, gcc_assert, gimple_assign_lhs(), gimple_build_assign(), gimple_build_call(), gimple_build_cond(), gimple_call_arg(), gimple_location(), gimple_seq_add_stmt(), gimple_seq_last(), gimple_seq_last_stmt(), gimple_seq_set_location(), gimple_set_location(), GSI_CONTINUE_LINKING, gsi_insert_after(), gsi_insert_before(), gsi_insert_seq_after(), gsi_last_bb(), GSI_NEW_STMT, gsi_remove(), gsi_replace(), GSI_SAME_STMT, gsi_start_bb(), gsi_stmt(), hwassist_sanitize_p(), insert_if_then_before_iter(), last, make_ssa_name(), NULL, NULL_TREE, pointer_sized_int_node, report_error_func(), SANITIZE_KERNEL_ADDRESS, SANITIZE_USER_ADDRESS, shadow_ptr_types, size_in_bytes(), tree_fits_shwi_p(), tree_to_shwi(), and TREE_TYPE.
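This page does not spell out what the expansion checks. As background, the sketch below shows the shadow-byte test as it is commonly described for AddressSanitizer, assuming 8 application bytes per shadow byte. It is not a transcription of GCC's expansion, and `access_is_bad` is a hypothetical name.

```cpp
#include <cstdint>

// Decide whether an access of SIZE bytes (1, 2, 4 or 8) at ADDR should be
// reported, given SHADOW, the signed shadow byte that covers ADDR.
// Assumed encoding: 0 means the whole 8-byte granule is addressable,
// 1..7 means only that many leading bytes are, negative means poisoned.
constexpr bool access_is_bad(std::uint64_t addr, unsigned size,
                             std::int8_t shadow) {
  if (shadow == 0)
    return false;  // whole granule addressable, nothing to report
  // Offset of the last byte touched within the 8-byte granule.
  int last = static_cast<int>(addr & 7) + static_cast<int>(size) - 1;
  // A negative (poison) shadow value makes this comparison always true.
  return last >= shadow;
}
```

The fast path is the `shadow == 0` test; the comparison only runs for partially addressable or poisoned granules.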

◆ asan_expand_mark_ifn()

| bool asan_expand_mark_ifn | ( | gimple_stmt_iterator * | iter | ) |

Expand the ASAN_MARK builtins.

References asan_handled_variables, ASAN_SHADOW_GRANULARITY, ASAN_SHADOW_SHIFT, asan_store_shadow_bytes(), build_shadow_mem_access(), builtin_decl_implicit(), DECL_NONLOCAL_FRAME, g, gcc_assert, gcc_checking_assert, get_pointer_alignment(), gimple_assign_lhs(), gimple_build(), gimple_build_assign(), gimple_build_call(), gimple_build_round_up(), gimple_call_arg(), gimple_location(), gimple_set_location(), gsi_insert_after(), GSI_NEW_STMT, gsi_replace(), gsi_replace_with_seq(), gsi_safe_insert_before(), gsi_stmt(), HWASAN_TAG_GRANULE_SIZE, hwassist_sanitize_p(), make_ssa_name(), NULL, pointer_sized_int_node, poly_int_tree_p(), shadow_mem_size(), shadow_ptr_types, size_in_bytes(), size_type_node, TREE_CODE, tree_fits_uhwi_p(), TREE_OPERAND, tree_to_shwi(), tree_to_uhwi(), and void_type_node.

◆ asan_expand_poison_ifn()

| bool asan_expand_poison_ifn (gimple_stmt_iterator *iter, bool *need_commit_edge_insert, hash_map< tree, tree > &shadow_vars_mapping) |

Expand ASAN_POISON ifn.

References build_fold_addr_expr, build_int_cst(), builtin_decl_implicit(), create_asan_shadow_var(), create_tmp_var, DECL_SIZE_UNIT, dyn_cast(), FOR_EACH_IMM_USE_STMT, g, gimple_build_call(), gimple_build_call_internal(), gimple_build_nop(), gimple_call_internal_p(), gimple_call_lhs(), gimple_copy(), gimple_location(), gimple_phi_arg_def(), gimple_phi_arg_edge(), gimple_phi_num_args(), gimple_set_location(), gsi_for_stmt(), gsi_insert_before(), gsi_insert_seq_on_edge(), GSI_NEW_STMT, gsi_remove(), gsi_replace(), gsi_stmt(), has_zero_uses(), hwassist_sanitize_p(), i, integer_type_node, is_a(), is_gimple_debug(), NULL, NULL_TREE, pointer_sized_int_node, report_error_func(), SANITIZE_KERNEL_ADDRESS, SANITIZE_USER_ADDRESS, SET_SSA_NAME_VAR_OR_IDENTIFIER, SSA_NAME_DEF_STMT, SSA_NAME_IS_DEFAULT_DEF, SSA_NAME_VAR, tree_to_uhwi(), and TREE_TYPE.

◆ asan_finish_file()

| void asan_finish_file | ( | void | ) |

Module-level instrumentation.

- Insert __asan_init_vN() into the list of CTORs.
- TODO: insert redzones around globals.

References add_string_csts(), append_to_statement_list(), asan_add_global(), asan_finish_file(), asan_global_struct(), asan_init_shadow_ptr_types(), asan_protect_global(), ASAN_SHADOW_GRANULARITY, build_array_type_nelts(), build_call_expr(), build_constructor(), build_decl(), build_fold_addr_expr, build_int_cst(), builtin_decl_implicit(), cgraph_build_static_cdtor(), const_desc_htab, constant_pool_htab(), count_string_csts(), symtab_node::decl, DECL_ALIGN, DECL_ARTIFICIAL, DECL_IGNORED_P, DECL_INITIAL, DEFAULT_INIT_PRIORITY, varpool_node::finalize_decl(), FOR_EACH_DEFINED_VARIABLE, get_identifier(), MAX, MAX_RESERVED_INIT_PRIORITY, NULL_TREE, pointer_sized_int_node, SANITIZE_ADDRESS, SANITIZE_USER_ADDRESS, SET_DECL_ALIGN, shadow_ptr_types, TREE_ASM_WRITTEN, TREE_CONSTANT, TREE_PUBLIC, TREE_STATIC, TREE_TYPE, asan_add_string_csts_data::type, UNKNOWN_LOCATION, asan_add_string_csts_data::v, and vec_alloc().

Referenced by asan_finish_file(), and compile_file().

◆ asan_function_start()

| void asan_function_start | ( | void | ) |

AddressSanitizer, a fast memory error detector. Copyright (C) 2011-2026 Free Software Foundation, Inc. Contributed by Kostya Serebryany <kcc@google.com> This file is part of GCC. GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version. GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

References asm_out_file, ASM_OUTPUT_DEBUG_LABEL, and current_function_funcdef_no.

Referenced by assemble_function_label_final().

◆ asan_global_struct()

static

Build the type

    struct __asan_global
    {
      const void *__beg;
      uptr __size;
      uptr __size_with_redzone;
      const void *__name;
      const void *__module_name;
      uptr __has_dynamic_init;
      __asan_global_source_location *__location;
      char *__odr_indicator;
    }

References build_decl(), const_ptr_type_node, DECL_ARTIFICIAL, DECL_CHAIN, DECL_CONTEXT, DECL_IGNORED_P, fields, get_identifier(), i, input_location, layout_type(), make_node(), pointer_sized_int_node, TYPE_ARTIFICIAL, TYPE_FIELDS, TYPE_NAME, TYPE_STUB_DECL, and UNKNOWN_LOCATION.

Referenced by asan_finish_file().
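For readers who want to experiment with the __asan_global layout, here is a hedged C++ mirror of it. The names `AsanGlobalSketch`, `SourceLocationSketch` and `describe_global` are hypothetical, the leading underscores are dropped because such identifiers are reserved in user code, and `uptr` is assumed to be a pointer-sized unsigned integer.

```cpp
#include <cstdint>

using uptr = std::uintptr_t;  // assumption: pointer-sized unsigned integer

struct SourceLocationSketch;  // opaque in this sketch

// Field-for-field mirror of the layout, with the underscores removed.
struct AsanGlobalSketch {
  const void *beg;
  uptr size;
  uptr size_with_redzone;
  const void *name;
  const void *module_name;
  uptr has_dynamic_init;
  SourceLocationSketch *location;
  char *odr_indicator;
};

// Eight pointer-sized fields, so no padding is expected on common targets.
static_assert(sizeof(AsanGlobalSketch) == 8 * sizeof(void *),
              "unexpected padding in AsanGlobalSketch");

// Illustrative helper: describe a global with no dynamic initializer,
// no source location and no ODR indicator.
inline AsanGlobalSketch describe_global(const void *addr, uptr size,
                                        uptr redzone, const char *name,
                                        const char *module) {
  return AsanGlobalSketch{addr, size, size + redzone, name,
                          module, 0, nullptr, nullptr};
}
```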

◆ asan_init_shadow_ptr_types()

static

Initialize shadow_ptr_types array.

References asan_shadow_set, build_distinct_type_copy(), build_pointer_type(), i, initialize_sanitizer_builtins(), integer_type_node, new_alias_set(), shadow_ptr_types, short_integer_type_node, signed_char_type_node, and TYPE_ALIAS_SET.

Referenced by asan_dynamic_init_call(), asan_emit_stack_protection(), asan_finish_file(), and asan_instrument().

◆ asan_instrument()

static

Instrument the current function.

References asan_init_shadow_ptr_types(), hwassist_sanitize_p(), initialize_sanitizer_builtins(), last_alloca_addr, NULL_TREE, shadow_ptr_types, and transform_statements().

◆ asan_instrument_reads()

| bool asan_instrument_reads | ( | void | ) |

References SANITIZE_ADDRESS, and sanitize_flags_p().

Referenced by instrument_derefs().

◆ asan_instrument_writes()

| bool asan_instrument_writes | ( | void | ) |

References SANITIZE_ADDRESS, and sanitize_flags_p().

Referenced by instrument_derefs().

◆ asan_mark_p()

| bool asan_mark_p (gimple *stmt, enum asan_mark_flags flag) |

Return true if STMT is ASAN_MARK with FLAG as first argument.

References gimple_call_arg(), gimple_call_internal_p(), and tree_to_uhwi().

Referenced by empty_eh_cleanup(), execute_update_addresses_taken(), find_tail_calls(), and transform_statements().

◆ asan_maybe_insert_dynamic_shadow_at_function_entry()

| void asan_maybe_insert_dynamic_shadow_at_function_entry | ( | function * | fun | ) |

References asan_dynamic_shadow_offset_p(), asan_local_shadow_memory_dynamic_address, cfun, create_tmp_var, ENTRY_BLOCK_PTR_FOR_FN, function::function_start_locus, g, get_asan_shadow_memory_dynamic_address_decl(), gimple_build_assign(), gimple_set_location(), gsi_insert_on_edge_immediate(), NULL_TREE, pointer_sized_int_node, and single_succ_edge().

◆ asan_mem_ref_get_end() [1/2]

| tree asan_mem_ref_get_end (const asan_mem_ref *ref, tree len) |

Return a tree expression that represents the end of the referenced memory region. Beware that this function can actually build a new tree expression.

References asan_mem_ref_get_end(), and asan_mem_ref::start.

◆ asan_mem_ref_get_end() [2/2]

This builds and returns a pointer to the end of the memory region that starts at START and has length LEN.

References convert_to_ptrofftype, fold_build2, integer_zerop(), NULL_TREE, ptrofftype_p(), and TREE_TYPE.

Referenced by asan_mem_ref_get_end().

◆ asan_mem_ref_init()

static

Initializes an instance of asan_mem_ref.

References asan_mem_ref::access_size, and asan_mem_ref::start.

Referenced by asan_mem_ref_new(), has_mem_ref_been_instrumented(), has_stmt_been_instrumented_p(), instrument_builtin_call(), and update_mem_ref_hash_table().

◆ asan_mem_ref_new()

static

Allocates memory for an instance of asan_mem_ref from the memory pool returned by asan_mem_ref_get_alloc_pool and initializes it. START is the address of (or the expression pointing to) the beginning of the memory reference. ACCESS_SIZE is the size of the access to the referenced memory.

References asan_mem_ref_init(), and asan_mem_ref_pool.

Referenced by update_mem_ref_hash_table().

◆ asan_memfn_rtl()

References asan_memfn_rtl(), asan_memfn_rtls, DECL_ASSEMBLER_NAME, DECL_ASSEMBLER_NAME_RAW, DECL_FUNCTION_CODE(), DECL_NAME, DECL_RTL, gcc_unreachable, get_identifier(), i, NULL_RTX, NULL_TREE, SANITIZE_KERNEL_HWADDRESS, and SET_DECL_RTL.

Referenced by asan_memfn_rtl(), and expand_builtin().

◆ asan_memintrin()

| bool asan_memintrin | ( | void | ) |

References SANITIZE_ADDRESS, and sanitize_flags_p().

Referenced by instrument_builtin_call().

◆ asan_needs_local_alias()

Return true if DECL, a global var, might be overridden and therefore needs a local alias.

References DECL_WEAK, and targetm.

Referenced by asan_add_global(), and asan_protect_global().

◆ asan_needs_odr_indicator_p()

Return true if DECL, a global var, might be overridden and needs an additional odr indicator symbol.

References DECL_ARTIFICIAL, DECL_WEAK, SANITIZE_KERNEL_ADDRESS, and TREE_PUBLIC.

Referenced by asan_add_global().

◆ asan_pp_string()

static

Create ADDR_EXPR of STRING_CST with the PP pretty printer text.

References build1(), build_array_type(), build_index_type(), build_string(), pp_formatted_text(), shadow_ptr_types, size_int, TREE_READONLY, TREE_STATIC, and TREE_TYPE.

Referenced by asan_add_global(), asan_dynamic_init_call(), and asan_emit_stack_protection().

◆ asan_protect_global()

Return true if DECL is a VAR_DECL that should be protected by Address Sanitizer, by appending a red zone with protected shadow memory after it and aligning it to at least ASAN_RED_ZONE_SIZE bytes.

References ADDR_SPACE_GENERIC_P, asan_needs_local_alias(), ASAN_RED_ZONE_SIZE, CONSTANT_POOL_ADDRESS_P, DECL_ALIGN_UNIT, DECL_ATTRIBUTES, DECL_COMMON, DECL_EXTERNAL, DECL_ONE_ONLY, DECL_RTL, DECL_RTL_SET_P, DECL_SECTION_NAME, DECL_SIZE, DECL_SIZE_UNIT, DECL_THREAD_LOCAL_P, symtab_node::get(), GET_CODE, is_odr_indicator(), lookup_attribute(), MAX_OFILE_ALIGNMENT, MEM_P, NULL, NULL_TREE, section_sanitized_p(), shadow_ptr_types, TARGET_SUPPORTS_ALIASES, TREE_CODE, TREE_CONSTANT_POOL_ADDRESS_P, TREE_PUBLIC, TREE_TYPE, TYPE_ADDR_SPACE, ubsan_get_source_location_type(), valid_constant_size_p(), VAR_P, and XEXP.

Referenced by add_string_csts(), asan_finish_file(), assemble_noswitch_variable(), assemble_variable(), categorize_decl_for_section(), count_string_csts(), get_variable_section(), output_constant_def_contents(), output_object_block(), and place_block_symbol().
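The description above says a protected global gets a red zone appended and is aligned to at least ASAN_RED_ZONE_SIZE bytes. The sketch below shows one way such padding can be computed so that the padded size is a multiple of the red zone size. The value 32 and the exact padding rule are assumptions of this example, and both function names are illustrative.

```cpp
// Assumed red zone size for this sketch.
constexpr unsigned long long kRedZoneSize = 32;

// Red zone to append after an object of SIZE bytes: at least one full red
// zone, plus whatever is needed to round the total up to a multiple of
// kRedZoneSize.
constexpr unsigned long long red_zone_size(unsigned long long size) {
  unsigned long long c = size & (kRedZoneSize - 1);
  return c ? 2 * kRedZoneSize - c : kRedZoneSize;
}

// Total footprint of the object plus its trailing red zone.
constexpr unsigned long long size_with_redzone(unsigned long long size) {
  return size + red_zone_size(size);
}
```

With this rule the next object in the section can start at an aligned address without any extra padding.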

◆ asan_sanitize_allocas_p()

| bool asan_sanitize_allocas_p | ( | void | ) |

References asan_sanitize_stack_p().

Referenced by expand_used_vars(), handle_builtin_alloca(), and handle_builtin_stack_restore().

◆ asan_sanitize_stack_p()

| bool asan_sanitize_stack_p | ( | void | ) |

References SANITIZE_ADDRESS, and sanitize_flags_p().

Referenced by asan_sanitize_allocas_p(), asan_sanitize_use_after_scope(), defer_stack_allocation(), expand_stack_vars(), expand_used_vars(), and partition_stack_vars().

◆ asan_shadow_offset()

static

Returns Asan shadow offset.

References asan_shadow_offset_computed, asan_shadow_offset_value, and targetm.

Referenced by asan_emit_stack_protection(), and build_shadow_mem_access().

◆ asan_shadow_offset_set_p()

| bool asan_shadow_offset_set_p | ( | ) |

Returns true if the Asan shadow offset has been set.

References asan_shadow_offset_computed.

Referenced by process_options().

◆ asan_store_shadow_bytes()

static

Poison or unpoison (depending on the IS_CLOBBER variable) shadow memory based on the SHADOW address. Newly added statements are added to ITER with the given location LOC. SIZE bytes of shadow memory are marked; LAST_CHUNK_SIZE is greater than zero when we are at the end of a variable.

References ASAN_STACK_MAGIC_USE_AFTER_SCOPE, build2(), build_int_cst(), g, gcc_unreachable, gimple_build_assign(), gimple_set_location(), gsi_insert_after(), GSI_NEW_STMT, i, shadow_ptr_types, and TREE_TYPE.

Referenced by asan_expand_mark_ifn().
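A hedged sketch of the byte values such a store could write, based on the description above: poisoning fills every shadow byte with a use-after-scope marker, while unpoisoning writes zeros except for a final partial chunk, whose shadow byte records how many bytes are valid. The marker value 0xf8 and the function name `shadow_bytes` are assumptions of this example.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed marker value for "use after scope" in this sketch.
constexpr std::uint8_t kUseAfterScopeMagic = 0xf8;

// Compute the SIZE shadow bytes to store.
// IS_CLOBBER true: poison every byte.
// IS_CLOBBER false: unpoison; if LAST_CHUNK_SIZE is non-zero, the final
// shadow byte holds the number of addressable bytes in the last granule.
std::vector<std::uint8_t> shadow_bytes(bool is_clobber, std::size_t size,
                                       std::uint8_t last_chunk_size) {
  std::vector<std::uint8_t> bytes(size, is_clobber ? kUseAfterScopeMagic : 0);
  if (!is_clobber && size > 0 && last_chunk_size > 0)
    bytes[size - 1] = last_chunk_size;
  return bytes;
}
```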

◆ build_check_stmt()

static

Instrument the memory access instruction BASE. Insert new statements before or after ITER. Note that the memory access represented by BASE can be either an SSA_NAME, or a non-SSA expression. LOCATION is the source code location. IS_STORE is TRUE for a store, FALSE for a load. BEFORE_P is TRUE for inserting the instrumentation code before ITER, FALSE for inserting it after ITER. IS_SCALAR_ACCESS is TRUE for a scalar memory access and FALSE for memory region access. NON_ZERO_P is TRUE if memory region is guaranteed to have non-zero length. ALIGN tells alignment of accessed memory object. START_INSTRUMENTED and END_INSTRUMENTED are TRUE if start/end of memory region have already been instrumented. If BEFORE_P is TRUE, *ITER is arranged to still point to the statement it was pointing to prior to calling this function, otherwise, it points to the statement logically following it.

References ASAN_CHECK_NON_ZERO_LEN, ASAN_CHECK_SCALAR_ACCESS, ASAN_CHECK_STORE, build_int_cst(), g, gcc_assert, gimple_build_call_internal(), gimple_set_location(), gsi_insert_after(), GSI_NEW_STMT, gsi_next(), gsi_safe_insert_before(), hwassist_sanitize_p(), integer_type_node, maybe_cast_to_ptrmode(), maybe_create_ssa_name(), pointer_sized_int_node, size_in_bytes(), and unshare_expr().

Referenced by instrument_derefs(), and instrument_mem_region_access().

◆ build_shadow_mem_access()

static

Build (base_addr >> ASAN_SHADOW_SHIFT) + asan_shadow_offset (). If RETURN_ADDRESS is set to true, return the memory location instead of a value in the shadow memory.

References asan_dynamic_shadow_offset_p(), asan_local_shadow_memory_dynamic_address, asan_shadow_offset(), ASAN_SHADOW_SHIFT, build2(), build_int_cst(), g, gimple_assign_lhs(), gimple_build_assign(), gimple_set_location(), gsi_insert_after(), GSI_NEW_STMT, make_ssa_name(), and TREE_TYPE.

Referenced by asan_expand_check_ifn(), and asan_expand_mark_ifn().
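The formula (base_addr >> ASAN_SHADOW_SHIFT) + asan_shadow_offset () can be tried out with plain integers. In the sketch below the shift of 3 and the offset 0x7fff8000 are example values assumed for illustration; the real values depend on the target and on options, and `shadow_address` is a hypothetical name.

```cpp
#include <cstdint>

// Assumed example values; the real ones are target-dependent.
constexpr unsigned kShadowShift = 3;  // 8 application bytes per shadow byte
constexpr std::uint64_t kShadowOffset = 0x7fff8000ULL;

// Address of the shadow byte that describes application address ADDR.
constexpr std::uint64_t shadow_address(std::uint64_t addr,
                                       std::uint64_t offset = kShadowOffset) {
  return (addr >> kShadowShift) + offset;
}
```

All eight addresses in one aligned granule map to the same shadow byte, and the next granule maps to the next shadow byte.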

◆ check_func()

Construct a function tree for __asan_{load,store}{1,2,4,8,16,_n}.

IS_STORE is either 1 (for a store) or 0 (for a load).

References builtin_decl_implicit(), exact_log2(), and size_in_bytes().

Referenced by asan_expand_check_ifn().
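The naming scheme __asan_{load,store}{1,2,4,8,16,_n} can be sketched as a small string builder. This only illustrates the pattern; the real function returns a builtin declaration rather than a string, and `check_func_name` is a hypothetical helper.

```cpp
#include <string>

// Build the runtime check name for a load or store of SIZE_IN_BYTES bytes.
// Sizes other than 1, 2, 4, 8 and 16 (for example -1 for "unknown") select
// the generic "_n" variant.
std::string check_func_name(bool is_store, int size_in_bytes) {
  std::string name = is_store ? "__asan_store" : "__asan_load";
  switch (size_in_bytes) {
    case 1:
    case 2:
    case 4:
    case 8:
    case 16:
      return name + std::to_string(size_in_bytes);
    default:
      return name + "_n";
  }
}
```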

◆ count_string_csts()

| int count_string_csts (constant_descriptor_tree **slot, unsigned HOST_WIDE_INT *data) |

Called via htab_traverse. Count number of emitted STRING_CSTs in the constant hash table.

References asan_protect_global(), TREE_ASM_WRITTEN, TREE_CODE, and constant_descriptor_tree::value.

Referenced by asan_finish_file().
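count_string_csts() and add_string_csts() are both traversal callbacks over the same table: one pass counts matching entries, a second pass collects them. The sketch below shows that two-pass callback pattern with standard containers. `Entry`, `traverse`, `count_written` and `collect_written` are hypothetical names, and the convention that a callback returning 0 stops the traversal is an assumption of the sketch.

```cpp
#include <string>
#include <vector>

// Hypothetical table entry: a constant and whether it was emitted.
struct Entry {
  std::string value;
  bool written;
};

// Call CB on every slot, passing DATA through. A return value of 0 stops
// the traversal early (assumed convention).
template <typename Data>
void traverse(std::vector<Entry> &table, int (*cb)(Entry *, Data *),
              Data *data) {
  for (Entry &e : table)
    if (cb(&e, data) == 0)
      break;
}

// First pass: count the emitted entries.
int count_written(Entry *slot, unsigned long *count) {
  if (slot->written)
    ++*count;
  return 1;
}

// Second pass: append the emitted entries to the output vector.
int collect_written(Entry *slot, std::vector<std::string> *out) {
  if (slot->written)
    out->push_back(slot->value);
  return 1;
}
```

Counting first lets the caller size the destination exactly before the second pass fills it.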

◆ create_asan_shadow_var()

Create ASAN shadow variable for a VAR_DECL which has been rewritten into SSA. Already seen VAR_DECLs are stored in SHADOW_VARS_MAPPING.

References copy_decl_for_dup_finish(), copy_node(), current_function_decl, DECL_ARTIFICIAL, DECL_IGNORED_P, DECL_SEEN_IN_BIND_EXPR_P, hash_map< KeyId, Value, Traits >::get(), gimple_add_tmp_var(), NULL, and hash_map< KeyId, Value, Traits >::put().

Referenced by asan_expand_poison_ifn().

◆ create_cond_insert_point()

| gimple_stmt_iterator create_cond_insert_point (gimple_stmt_iterator *iter, bool before_p, bool then_more_likely_p, bool create_then_fallthru_edge, basic_block *then_block, basic_block *fallthrough_block) |

Split the current basic block and create a condition statement insertion point right before or after the statement pointed to by ITER. Return an iterator to the point at which the caller might safely insert the condition statement. THEN_BLOCK must be set to the address of an uninitialized instance of basic_block. The function will then set *THEN_BLOCK to the 'then block' of the condition statement to be inserted by the caller. If CREATE_THEN_FALLTHRU_EDGE is false, no edge will be created from *THEN_BLOCK to *FALLTHROUGH_BLOCK. Similarly, the function will set *FALLTHROUGH_BLOCK to the 'else block' of the condition statement to be inserted by the caller. Note that *FALLTHROUGH_BLOCK is a new block that contains the statements starting from *ITER, and *THEN_BLOCK is a new empty block. *ITER is adjusted to always point to the first statement of the basic block *FALLTHROUGH_BLOCK. That statement is the same as what ITER was pointing to prior to calling this function, if BEFORE_P is true; otherwise, it is its following statement.

References add_bb_to_loop(), CDI_DOMINATORS, basic_block_def::count, create_empty_bb(), current_loops, dom_info_available_p(), find_edge(), gsi_bb(), gsi_end_p(), gsi_last_bb(), gsi_prev(), gsi_start_bb(), gsi_stmt(), profile_probability::invert(), basic_block_def::loop_father, LOOPS_NEED_FIXUP, loops_state_set(), make_edge(), make_single_succ_edge(), set_immediate_dominator(), split_block(), profile_probability::very_likely(), and profile_probability::very_unlikely().

Referenced by asan_expand_check_ifn(), insert_if_then_before_iter(), instrument_bool_enum_load(), instrument_builtin(), instrument_nonnull_arg(), instrument_nonnull_return(), ubsan_expand_bounds_ifn(), ubsan_expand_objsize_ifn(), and ubsan_expand_vptr_ifn().
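The block split described above can be modeled with plain vectors. This toy sketch ignores edges, probabilities, dominators and loops; it only shows where the statements end up for each value of BEFORE_P. `Block`, `CondInsertPoint` and `split_for_condition` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

using Block = std::vector<std::string>;  // a basic block as a list of statements

// Result of the split: the condition goes at the end of cond_block,
// then_block starts empty, fallthrough_block holds the remaining statements.
struct CondInsertPoint {
  Block cond_block;
  Block then_block;
  Block fallthrough_block;
  bool then_falls_through;  // whether a then -> fallthrough edge exists
};

// Split BB at statement index ITER. With BEFORE_P true the statement at
// ITER starts the fallthrough block; otherwise the statement after it does.
CondInsertPoint split_for_condition(const Block &bb, std::size_t iter,
                                    bool before_p,
                                    bool create_then_fallthru_edge) {
  assert(iter < bb.size());
  auto cut = static_cast<std::ptrdiff_t>(before_p ? iter : iter + 1);
  CondInsertPoint p;
  p.cond_block.assign(bb.begin(), bb.begin() + cut);
  p.fallthrough_block.assign(bb.begin() + cut, bb.end());
  p.then_falls_through = create_then_fallthru_edge;
  return p;
}
```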

◆ create_odr_indicator()

Create and return odr indicator symbol for DECL. TYPE is __asan_global struct type as returned by asan_global_struct.

References build_constructor_va(), build_decl(), build_fold_addr_expr, build_int_cst(), char_type_node, DECL_ARTIFICIAL, DECL_ASSEMBLER_NAME, DECL_ATTRIBUTES, DECL_CHAIN, DECL_IGNORED_P, DECL_INITIAL, DECL_NAME, DECL_VISIBILITY, DECL_VISIBILITY_SPECIFIED, varpool_node::finalize_decl(), fold_convert, get_identifier(), HAS_DECL_ASSEMBLER_NAME_P, IDENTIFIER_POINTER, make_decl_rtl(), NULL, NULL_TREE, targetm, TREE_ADDRESSABLE, tree_cons(), TREE_CONSTANT, TREE_PUBLIC, TREE_READONLY, TREE_STATIC, TREE_THIS_VOLATILE, TREE_TYPE, TREE_USED, TYPE_FIELDS, UNKNOWN_LOCATION, and unsigned_type_node.

Referenced by asan_add_global().

◆ empty_mem_ref_hash_table()

static

Clear all entries from the memory references hash table.

References asan_mem_ref_ht.

Referenced by transform_statements().

◆ free_mem_ref_resources()

static

Free the memory references hash table.

References asan_mem_ref_ht, asan_mem_ref_pool, and NULL.

Referenced by transform_statements().

◆ gate_asan()

static

References SANITIZE_ADDRESS, and sanitize_flags_p().

◆ gate_hwasan()

| bool gate_hwasan | ( | void | ) |

Referenced by compile_file().

◆ gate_memtag()

| bool gate_memtag | ( | void | ) |

◆ get_asan_shadow_memory_dynamic_address_decl()

static

References asan_shadow_memory_dynamic_address, build_decl(), BUILTINS_LOCATION, DECL_ARTIFICIAL, DECL_EXTERNAL, DECL_IGNORED_P, get_asan_shadow_memory_dynamic_address_decl(), get_identifier(), NULL_TREE, pointer_sized_int_node, SET_DECL_ASSEMBLER_NAME, TREE_ADDRESSABLE, TREE_PUBLIC, TREE_STATIC, and TREE_USED.

Referenced by asan_emit_stack_protection(), asan_maybe_insert_dynamic_shadow_at_function_entry(), and get_asan_shadow_memory_dynamic_address_decl().

◆ get_last_alloca_addr()

static

Return the address of the last allocated dynamic alloca.

References cfun, create_tmp_reg(), ENTRY_BLOCK_PTR_FOR_FN, g, gimple_build_assign(), gsi_insert_on_edge_immediate(), last_alloca_addr, null_pointer_node, ptr_type_node, and single_succ_edge().

Referenced by handle_builtin_alloca(), and handle_builtin_stack_restore().

◆ get_mem_ref_hash_table()

static

Returns a reference to the hash table containing memory references. This function ensures that the hash table is created. Note that this hash table is updated by the function update_mem_ref_hash_table.

References asan_mem_ref_ht.

Referenced by has_mem_ref_been_instrumented(), and update_mem_ref_hash_table().

◆ get_mem_ref_of_assignment()

static

Set REF to the memory reference present in a gimple assignment ASSIGNMENT. Return true upon successful completion, false otherwise.

References asan_mem_ref::access_size, gcc_assert, gimple_assign_lhs(), gimple_assign_load_p(), gimple_assign_rhs1(), gimple_assign_single_p(), gimple_clobber_p(), gimple_store_p(), int_size_in_bytes(), asan_mem_ref::start, and TREE_TYPE.

Referenced by has_stmt_been_instrumented_p().

◆ get_mem_refs_of_builtin_call()

static

Return the memory references contained in a gimple statement representing a builtin call that has to do with memory access.

References asan_mem_ref::access_size, asan_intercepted_p(), build2(), build_int_cst(), build_nonstandard_integer_type(), build_pointer_type(), BUILT_IN_NORMAL, CASE_BUILT_IN_ALLOCA, char_type_node, DECL_FUNCTION_CODE(), gcc_checking_assert, gimple_call_arg(), gimple_call_builtin_p(), gimple_call_fndecl(), gimple_call_lhs(), handle_builtin_alloca(), handle_builtin_stack_restore(), hwassist_sanitize_p(), NULL, NULL_TREE, and asan_mem_ref::start.

Referenced by has_stmt_been_instrumented_p(), and instrument_builtin_call().

◆ handle_builtin_alloca()

static

Deploy and poison redzones around __builtin_alloca call. To do this, we should replace this call with another one with changed parameters and replace all its uses with new address, so

    addr = __builtin_alloca (old_size, align);

is replaced by

    left_redzone_size = max (align, ASAN_RED_ZONE_SIZE);

Following two statements are optimized out if we know that old_size & (ASAN_RED_ZONE_SIZE - 1) == 0, i.e. alloca doesn't need partial redzone.

    misalign = old_size & (ASAN_RED_ZONE_SIZE - 1);
    partial_redzone_size = ASAN_RED_ZONE_SIZE - misalign;

    right_redzone_size = ASAN_RED_ZONE_SIZE;
    additional_size = left_redzone_size + partial_redzone_size +
                      right_redzone_size;
    new_size = old_size + additional_size;
    new_alloca = __builtin_alloca (new_size, max (align, 32))
    __asan_alloca_poison (new_alloca, old_size)
    addr = new_alloca + max (align, ASAN_RED_ZONE_SIZE);
    last_alloca_addr = new_alloca;

ADDITIONAL_SIZE is added to make new memory allocation contain not only requested memory, but also left, partial and right redzones as well as some additional space, required by alignment.
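The size arithmetic in the pseudocode above can be sketched as plain C. This is only an illustration, not the GIMPLE that the pass emits. The names `RED_ZONE_SIZE`, `struct alloca_layout` and `asan_alloca_layout` are hypothetical. The value 32 is an assumption taken from the `max (align, 32)` in the pseudocode, and `align` is treated as a byte count. The compile-time shortcut that drops the partial redzone is not modelled; the sketch applies the two statements literally.

```c
#include <assert.h>
#include <stddef.h>

/* Assumption: mirrors the `max (align, 32)` in the pseudocode. */
#define RED_ZONE_SIZE 32u

/* Hypothetical record of the sizes computed for one alloca call. */
struct alloca_layout {
  size_t left_redzone_size;
  size_t partial_redzone_size;
  size_t right_redzone_size;
  size_t new_size;     /* size passed to the replacement alloca */
  size_t addr_offset;  /* addr - new_alloca */
};

static size_t max_size(size_t a, size_t b) { return a > b ? a : b; }

/* Apply the pseudocode literally; ALIGN is assumed to be in bytes. */
static struct alloca_layout asan_alloca_layout(size_t old_size, size_t align)
{
  struct alloca_layout l;
  size_t misalign;

  /* The mask trick below only works for a power-of-two redzone size. */
  assert((RED_ZONE_SIZE & (RED_ZONE_SIZE - 1)) == 0);

  l.left_redzone_size = max_size(align, RED_ZONE_SIZE);
  misalign = old_size & (RED_ZONE_SIZE - 1);
  l.partial_redzone_size = RED_ZONE_SIZE - misalign;
  l.right_redzone_size = RED_ZONE_SIZE;
  l.new_size = old_size + l.left_redzone_size + l.partial_redzone_size
               + l.right_redzone_size;
  l.addr_offset = max_size(align, RED_ZONE_SIZE);
  return l;
}
```

For a 100-byte request with 16-byte alignment, this gives a 32-byte left redzone, a 28-byte partial redzone and a 32-byte right redzone, so the replacement allocation is 192 bytes and the user address starts 32 bytes in.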

References as_combined_fn(), ASAN_RED_ZONE_SIZE, asan_sanitize_allocas_p(), wi::bit_and(), build_int_cst(), builtin_decl_implicit(), cfun, DECL_FUNCTION_CODE(), find_fallthru_edge(), g, get_last_alloca_addr(), get_nonzero_bits(), wi::get_precision(), gimple_assign_lhs(), gimple_build(), gimple_build_assign(), gimple_build_call(), gimple_build_round_up(), gimple_call_arg(), gimple_call_fndecl(), gimple_call_lhs(), gimple_call_set_lhs(), gimple_location(), gsi_bb(), gsi_for_stmt(), gsi_insert_after(), gsi_insert_before(), gsi_insert_on_edge_immediate(), gsi_insert_seq_before(), GSI_NEW_STMT, gsi_none(), gsi_replace(), GSI_SAME_STMT, gsi_stmt(), hwasan_sanitize_allocas_p(), HWASAN_TAG_GRANULE_SIZE, make_ssa_name(), MAX, memtag_sanitize_allocas_p(), memtag_sanitize_p(), wi::ne_p(), NULL, NULL_TREE, ptr_type_node, replace_call_with_value(), size_type_node, stmt_can_throw_internal(), tree_to_uhwi(), TREE_TYPE, wi::uhwi(), unsigned_char_type_node, and void_type_node.

Referenced by get_mem_refs_of_builtin_call().

◆ handle_builtin_stack_restore()

static

Insert __asan_allocas_unpoison (top, bottom) call before __builtin_stack_restore (new_sp) call. The pseudocode of this routine should look like this:

    top = last_alloca_addr;
    bot = new_sp;
    __asan_allocas_unpoison (top, bot);
    last_alloca_addr = new_sp;
    __builtin_stack_restore (new_sp);

In general, we can't use new_sp as the bot parameter because on some architectures SP has a non-zero offset from the dynamic stack area. Moreover, on some architectures this offset (STACK_DYNAMIC_OFFSET) becomes known for each particular function only after all callees were expanded to RTL. The most noticeable example is PowerPC{,64}, see http://refspecs.linuxfoundation.org/ELF/ppc64/PPC-elf64abi.html#DYNAM-STACK.

To overcome the issue we use the following trick: pass new_sp as the second parameter to __asan_allocas_unpoison and rewrite it during expansion with new_sp + (virtual_stack_dynamic_rtx - sp) later in the expand_asan_emit_allocas_unpoison function.

HWASAN needs to do something very similar; the eventual pseudocode should be:

    __hwasan_tag_memory (virtual_stack_dynamic_rtx,
                         0,
                         new_sp - sp);
    __builtin_stack_restore (new_sp)

We need to use the same trick to handle STACK_DYNAMIC_OFFSET as described above.

References asan_sanitize_allocas_p(), builtin_decl_implicit(), g, get_last_alloca_addr(), gimple_build_assign(), gimple_build_call(), gimple_build_call_internal(), gimple_call_arg(), gsi_insert_before(), GSI_SAME_STMT, hwasan_sanitize_allocas_p(), and memtag_sanitize_allocas_p().

Referenced by get_mem_refs_of_builtin_call().

◆ has_mem_ref_been_instrumented() [1/3]

static

Return true iff the memory reference REF has been instrumented.

References asan_mem_ref::access_size, has_mem_ref_been_instrumented(), and asan_mem_ref::start.

◆ has_mem_ref_been_instrumented() [2/3]

static

Return true iff access to memory region starting at REF and of length LEN has been instrumented.

References has_mem_ref_been_instrumented(), size_in_bytes(), asan_mem_ref::start, tree_fits_shwi_p(), and tree_to_shwi().

◆ has_mem_ref_been_instrumented() [3/3]

Return true iff the memory reference REF has been instrumented.

References asan_mem_ref::access_size, asan_mem_ref_init(), get_mem_ref_hash_table(), and r.

Referenced by has_mem_ref_been_instrumented(), has_mem_ref_been_instrumented(), has_stmt_been_instrumented_p(), instrument_derefs(), and instrument_mem_region_access().

◆ has_stmt_been_instrumented_p()

Return true iff a given gimple statement has been instrumented. Note that the statement is "defined" by the memory references it contains.

References asan_mem_ref::access_size, aggregate_value_p(), as_a(), asan_mem_ref_init(), BUILT_IN_NORMAL, get_mem_ref_of_assignment(), get_mem_refs_of_builtin_call(), gimple_assign_load_p(), gimple_assign_rhs1(), gimple_assign_single_p(), gimple_call_builtin_p(), gimple_call_fntype(), gimple_call_internal_p(), gimple_call_lhs(), gimple_store_p(), has_mem_ref_been_instrumented(), int_size_in_bytes(), is_gimple_call(), NULL, NULL_TREE, r, asan_mem_ref::start, and TREE_TYPE.

Referenced by transform_statements().

◆ hwasan_check_func()

static

Construct a function tree for __hwasan_{load,store}{1,2,4,8,16,_n}.

IS_STORE is either 1 (for a store) or 0 (for a load).

References as_combined_fn(), exact_log2(), gcc_assert, and size_in_bytes().

◆ hwasan_current_frame_tag()

| uint8_t hwasan_current_frame_tag | ( | ) |

HWASAN

For stack tagging: Return the offset from the frame base tag that the "next" expanded object should have.

References hwasan_frame_tag_offset.

Referenced by expand_HWASAN_CHOOSE_TAG(), expand_one_stack_var_at(), and hwasan_record_stack_var().

◆ hwasan_emit_prologue()

| void hwasan_emit_prologue | ( | ) |

For stack tagging: (Emits HWASAN equivalent of what is emitted by `asan_emit_stack_protection`). Emits the extra prologue code to set the shadow stack as required for HWASAN stack instrumentation. Uses the vector of recorded stack variables hwasan_tagged_stack_vars. When this function has completed hwasan_tagged_stack_vars is empty and all objects it had pointed to are deallocated.

References convert_memory_address, create_input_operand(), create_integer_operand(), create_output_operand(), emit_insn(), emit_library_call(), expand_insn(), gcc_assert, gen_int_mode(), gen_reg_rtx(), HWASAN_TAG_GRANULE_SIZE, hwasan_tagged_stack_vars, hwasan_truncate_to_tag_size(), init_one_libfunc(), known_ge, known_le, LCT_NORMAL, memtag_sanitize_p(), NULL_RTX, plus_constant(), ptr_mode, and targetm.

Referenced by expand_used_vars().

◆ hwasan_emit_untag_frame()

For stack tagging: Return RTL insns to clear the tags between DYNAMIC and VARS pointers into the stack. These instructions should be emitted at the end of every function. If `dynamic` is NULL_RTX then no insns are returned.

References CONST_INT_P, convert_memory_address, do_pending_stack_adjust(), emit_insn(), emit_library_call(), end_sequence(), force_reg(), FRAME_GROWS_DOWNWARD, HWASAN_STACK_BACKGROUND, init_one_libfunc(), LCT_NORMAL, memtag_sanitize_p(), NULL, ptr_mode, simplify_gen_binary(), start_sequence(), and targetm.

Referenced by expand_used_vars().

◆ hwasan_expand_check_ifn()

| bool hwasan_expand_check_ifn | ( | gimple_stmt_iterator * | iter, |

| bool | ) |

Expand the HWASAN_{LOAD,STORE} builtins.

◆ hwasan_expand_mark_ifn()

| bool hwasan_expand_mark_ifn | ( | gimple_stmt_iterator * | ) |

For stack tagging: Dummy: the HWASAN_MARK internal function should only ever be in the code after the sanopt pass.

◆ hwasan_finish_file()

| void hwasan_finish_file | ( | void | ) |

Insert module initialization into this TU. This initialization calls the initialization code for libhwasan.

References append_to_statement_list(), build_call_expr(), builtin_decl_implicit(), cgraph_build_static_cdtor(), hwasan_finish_file(), initialize_sanitizer_builtins(), MAX_RESERVED_INIT_PRIORITY, SANITIZE_HWADDRESS, and SANITIZE_KERNEL_HWADDRESS.

Referenced by compile_file(), and hwasan_finish_file().

◆ hwasan_frame_base()

| rtx hwasan_frame_base | ( | ) |

For stack tagging: Return the 'base pointer' for this function. If that base pointer has not yet been created then we create a register to hold it and record the insns to initialize the register in `hwasan_frame_base_init_seq` for later emission.

References end_sequence(), force_reg(), hwasan_frame_base_init_seq, hwasan_frame_base_ptr, NULL_RTX, start_sequence(), targetm, and virtual_stack_vars_rtx.

Referenced by expand_HWASAN_CHOOSE_TAG(), expand_one_stack_var_1(), and expand_stack_vars().

◆ hwasan_get_frame_extent()

| rtx hwasan_get_frame_extent | ( | ) |

Return the RTX representing the farthest extent of the statically allocated stack objects for this frame. If hwasan_frame_base_ptr has not been initialized then we are not storing any static variables on the stack in this frame. In this case we return NULL_RTX to represent that. Otherwise simply return virtual_stack_vars_rtx + frame_offset.

References frame_offset, hwasan_frame_base_ptr, NULL_RTX, plus_constant(), and virtual_stack_vars_rtx.

Referenced by expand_used_vars().

◆ hwasan_increment_frame_tag()

| void hwasan_increment_frame_tag | ( | ) |

For stack tagging: Increment the frame tag offset modulo the size a tag can represent.
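The modular increment described above can be sketched as follows. The helper name `next_frame_tag_offset` and the `tag_bits` parameter are hypothetical and stand in for `hwasan_frame_tag_offset` and `HWASAN_TAG_SIZE`. The sketch shows only the wrap-around and does not claim to reproduce any further adjustment the real function may make.

```c
#include <assert.h>
#include <limits.h>
#include <stdint.h>

/* Return the offset that follows OFFSET, wrapping at 2^TAG_BITS.
   TAG_BITS is a hypothetical stand-in for HWASAN_TAG_SIZE. */
static uint8_t next_frame_tag_offset(uint8_t offset, unsigned tag_bits)
{
  /* The tag must fit in the uint8_t that stores the offset. */
  assert(tag_bits >= 1 && tag_bits <= sizeof(uint8_t) * CHAR_BIT);
  return (uint8_t)((offset + 1u) % (1u << tag_bits));
}
```

With an 8-bit tag, offset 255 is followed by 0. With a 4-bit tag the wrap happens after offset 15.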

References CHAR_BIT, gcc_assert, hwasan_frame_tag_offset, HWASAN_TAG_SIZE, sanitize_flags_p(), and SANITIZE_KERNEL_HWADDRESS.

Referenced by expand_HWASAN_ALLOCA_POISON(), expand_HWASAN_CHOOSE_TAG(), expand_one_stack_var_1(), and expand_stack_vars().

◆ hwasan_instrument_reads()

| bool hwasan_instrument_reads | ( | void | ) |

◆ hwasan_instrument_writes()

| bool hwasan_instrument_writes | ( | void | ) |

◆ hwasan_maybe_emit_frame_base_init()

| void hwasan_maybe_emit_frame_base_init | ( | void | ) |

For stack tagging: Emit frame base initialisation. If hwasan_frame_base has been used before here then hwasan_frame_base_init_seq contains the sequence of instructions to initialize it. This must be put just before the hwasan prologue, so we emit the insns before parm_birth_insn (which will point to the first instruction of the hwasan prologue if it exists).

We update `parm_birth_insn` to point to the start of this initialisation since that represents the end of the initialisation done by expand_function_{start,end} functions and we want to maintain that.

References emit_insn_before(), hwasan_frame_base_init_seq, and parm_birth_insn.

◆ hwasan_memintrin()

| bool hwasan_memintrin | ( | void | ) |

Should we instrument builtin calls?

References hwasan_sanitize_p().

Referenced by instrument_builtin_call().

◆ hwasan_record_frame_init()

| void hwasan_record_frame_init | ( | ) |

Clear internal state for the next function. This function is called before variables on the stack get expanded, in `init_vars_expansion`.

References asan_used_labels, gcc_assert, hwasan_frame_base_init_seq, hwasan_frame_base_ptr, hwasan_frame_tag_offset, hwasan_tagged_stack_vars, NULL, NULL_RTX, sanitize_flags_p(), and SANITIZE_KERNEL_HWADDRESS.

Referenced by init_vars_expansion().

◆ hwasan_record_stack_var()

| void hwasan_record_stack_var | ( | rtx | untagged_base, |

| rtx | tagged_base, | ||

| poly_int64 | nearest_offset, | ||

| poly_int64 | farthest_offset ) |

Record a compile-time constant size stack variable that HWASAN will need to tag. This record of the range of a stack variable will be used by `hwasan_emit_prologue` to emit the RTL at the start of each frame which will set tags in the shadow memory according to the assigned tag for each object. The range that the object spans in stack space should be described by the bounds `untagged_base + nearest_offset` and `untagged_base + farthest_offset`. `tagged_base` is the base address which contains the "base frame tag" for this frame, and from which the value to address this object with will be calculated. We record the `untagged_base` since the functions in the hwasan library we use to tag memory take pointers without a tag.

References hwasan_stack_var::farthest_offset, hwasan_current_frame_tag(), hwasan_tagged_stack_vars, hwasan_stack_var::nearest_offset, hwasan_stack_var::tag_offset, hwasan_stack_var::tagged_base, and hwasan_stack_var::untagged_base.

Referenced by expand_one_stack_var_1(), and expand_stack_vars().

◆ hwasan_sanitize_allocas_p()

| bool hwasan_sanitize_allocas_p | ( | void | ) |

Are we tagging alloca objects?

References hwasan_sanitize_stack_p().

Referenced by expand_used_vars(), handle_builtin_alloca(), and handle_builtin_stack_restore().

◆ hwasan_sanitize_p()

| bool hwasan_sanitize_p | ( | void | ) |

HWAddressSanitizer (hwasan) is a probabilistic method for detecting out-of-bounds and use-after-free bugs. Read more: http://code.google.com/p/address-sanitizer/

Similar to AddressSanitizer (asan) it consists of two parts: the instrumentation module in this file, and a run-time library.

The instrumentation module adds a run-time check before every memory insn in the same manner as asan (see the block comment for AddressSanitizer above). Currently, hwasan only adds out-of-line instrumentation, where each check is implemented as a function call to the run-time library. Hence a check for a load of N bytes from address X would be implemented with a function call to __hwasan_loadN(X), and checking a store of N bytes to address X would be implemented with a function call to __hwasan_storeN(X).

The main difference between hwasan and asan is in the information stored to help this checking. Both sanitizers use a shadow memory area which stores data recording the state of main memory at a corresponding address.

For hwasan, each 16 byte granule in main memory has a corresponding 1 byte in shadow memory. This shadow address can be calculated with the equation:

    (addr >> log_2(HWASAN_TAG_GRANULE_SIZE))
        + __hwasan_shadow_memory_dynamic_address;
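As an illustration of the equation above, a minimal C sketch follows. `TAG_GRANULE_SIZE`, `log2_pow2` and `shadow_address` are hypothetical names. The granule size of 16 comes from the text, the shadow base is passed in as an ordinary parameter, and the address is assumed to be already untagged.

```c
#include <assert.h>
#include <stdint.h>

/* From the text: one shadow byte per 16-byte granule. */
#define TAG_GRANULE_SIZE 16u

/* Integer log2 of a power of two. */
static unsigned log2_pow2(uint64_t x)
{
  unsigned n = 0;
  assert(x != 0 && (x & (x - 1)) == 0);
  while (x > 1) {
    x >>= 1;
    ++n;
  }
  return n;
}

/* shadow = (addr >> log2(granule size)) + shadow base.
   ADDR is assumed to carry no tag in its top byte. */
static uint64_t shadow_address(uint64_t addr, uint64_t shadow_base)
{
  return (addr >> log2_pow2(TAG_GRANULE_SIZE)) + shadow_base;
}
```

All addresses within one granule map to the same shadow byte. For example, 0x1000 and 0x100F share a shadow address, while 0x1010 maps to the next one.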

The conversion between real and shadow memory for asan is given in the block comment at the top of this file. The description of how this shadow memory is laid out for asan is in the block comment at the top of this file, here we describe how this shadow memory is used for hwasan.

For hwasan, each variable is assigned a byte-sized 'tag'. The extent of the shadow memory for that variable is filled with the assigned tag, and every pointer referencing that variable has its top byte set to the same tag. The run-time library redefines malloc so that every allocation returns a tagged pointer and tags the corresponding shadow memory with the same tag.

On each pointer dereference the tag found in the pointer is compared to the tag found in the shadow memory corresponding to the accessed memory address. If these tags are found to differ then this memory access is judged to be invalid and a report is generated.

This method of bug detection is not perfect -- it can not catch every bad access -- but catches them probabilistically instead. There is always the possibility that an invalid memory access will happen to access memory tagged with the same tag as the pointer that this access used. The chances of this are approx. 0.4% for any two uncorrelated objects.

Random tag generation can mitigate this problem by decreasing the probability that an invalid access will be missed in the same manner over multiple runs. i.e. if two objects are tagged the same in one run of the binary they are unlikely to be tagged the same in the next run. Both heap and stack allocated objects have random tags by default.
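The 0.4% figure quoted above is consistent with a simple model in which each tag is drawn independently and uniformly from all values of a byte-sized tag. That model is an assumption made here for illustration; any reserved tag values would shift the number slightly. Under it, the chance that two uncorrelated objects share a tag, and the chance that the same bad access is missed in each of k independent runs, are:

```latex
P(\text{same tag}) = \frac{1}{2^{8}} = \frac{1}{256} \approx 0.39\%
\qquad
P(\text{missed in all } k \text{ runs}) = \left(\frac{1}{256}\right)^{k}
```

For k = 2 this is 1/65536, which is why re-running with fresh random tags makes a repeated miss unlikely.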

[16 byte granule implications]

Since the shadow memory only has a resolution on real memory of 16 bytes, invalid accesses that are within the same 16 byte granule as a valid address will not be caught.

There is a "short-granule" feature in the runtime library which does catch such accesses, but this feature is not implemented for stack objects (since stack objects are allocated and tagged by compiler instrumentation, and this feature has not yet been implemented in GCC instrumentation).

Another outcome of this 16 byte resolution is that each tagged object must be 16 byte aligned. If two objects were to share any 16 byte granule in memory, then they both would have to be given the same tag, and invalid accesses to one using a pointer to the other would be undetectable.

[Compiler instrumentation]

Compiler instrumentation ensures that two adjacent buffers on the stack are given different tags; this means an access to one buffer using a pointer generated from the other (e.g. through buffer overrun) will have mismatched tags and be caught by hwasan.

We don't randomly tag every object on the stack, since that would require keeping many registers to record each tag. Instead we randomly generate a tag for each function frame, and each new stack object uses a tag offset from that frame tag. i.e. each object is tagged as RFT + offset, where RFT is the "random frame tag" generated for this frame.

This means that randomisation does not perturb the difference between tags on tagged stack objects within a frame, but this is mitigated by the fact that objects with the same tag within a frame are very far apart (approx. 2^HWASAN_TAG_SIZE objects apart).

As a demonstration, using the same example program as in the asan block comment above:

    int
    foo ()
    {
      char a[24] = {0};
      int b[2] = {0};

      a[5] = 1;
      b[1] = 2;

      return a[5] + b[1];
    }

On AArch64 the stack will be ordered as follows for the above function:

    Slot 1/ [24 bytes for variable 'a']
    Slot 2/ [8 bytes padding for alignment]
    Slot 3/ [8 bytes for variable 'b']
    Slot 4/ [8 bytes padding for alignment]

(The padding is there to ensure 16 byte alignment as described in the 16 byte granule implications).

While the shadow memory will be ordered as follows:

    - 2 bytes (representing 32 bytes in real memory) tagged with RFT + 1.
    - 1 byte (representing 16 bytes in real memory) tagged with RFT + 2.

And any pointer to "a" will have the tag RFT + 1, and any pointer to "b" will have the tag RFT + 2.
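The slot sizes and shadow byte counts in this demonstration follow from rounding each object up to a whole number of granules. A small sketch reproduces the arithmetic. The helper names are hypothetical, the 16-byte granule and byte-sized tag are taken from the text, and the wrap-around of RFT + offset to one byte is an assumption of the sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* From the text: tags have a resolution of 16 bytes of real memory. */
#define TAG_GRANULE_SIZE 16u

/* Round SIZE up to a whole number of granules. */
static size_t granule_round_up(size_t size)
{
  return (size + TAG_GRANULE_SIZE - 1) / TAG_GRANULE_SIZE * TAG_GRANULE_SIZE;
}

/* Number of shadow bytes (one per granule) covering SIZE bytes. */
static size_t shadow_bytes_for(size_t size)
{
  size_t rounded = granule_round_up(size);
  assert(rounded % TAG_GRANULE_SIZE == 0);
  return rounded / TAG_GRANULE_SIZE;
}

/* Tag for the object at OFFSET from the random frame tag RFT.
   Assumption: the sum wraps to a byte-sized tag. */
static uint8_t object_tag(uint8_t rft, uint8_t offset)
{
  return (uint8_t)(rft + offset);
}
```

For `a` (24 bytes) this yields a 32-byte slot and 2 shadow bytes; for `b` (8 bytes) it yields a 16-byte slot and 1 shadow byte, matching the layout above.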

[Top Byte Ignore requirements]

Hwasan requires the ability to store an 8 bit tag in every pointer. There is no instrumentation done to remove this tag from pointers before dereferencing, which means the hardware must ignore this tag during memory accesses.

Architectures where this feature is available should indicate this using the TARGET_MEMTAG_CAN_TAG_ADDRESSES hook.
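To make the pointer tagging concrete, here is a sketch that models a tagged pointer as a 64-bit integer with the tag in its top byte. The 64-bit width, the shift of 56 and all helper names are assumptions made for illustration. On real hardware with Top Byte Ignore no stripping is needed before a dereference, so `strip_tag` only models what the hardware does implicitly.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: 64-bit addresses with the 8-bit tag in bits 56-63. */
#define TAG_SHIFT 56
#define TAG_MASK  ((uint64_t)0xFF << TAG_SHIFT)

/* Extract the tag stored in the top byte. */
static uint8_t tag_of(uint64_t addr)
{
  return (uint8_t)(addr >> TAG_SHIFT);
}

/* Address with the top byte cleared, as the hardware would see it. */
static uint64_t strip_tag(uint64_t addr)
{
  return addr & ~TAG_MASK;
}

/* Replace the top byte of ADDR with TAG. */
static uint64_t with_tag(uint64_t addr, uint8_t tag)
{
  uint64_t tagged = (addr & ~TAG_MASK) | ((uint64_t)tag << TAG_SHIFT);
  assert(strip_tag(tagged) == strip_tag(addr)); /* low 56 bits unchanged */
  return tagged;
}

/* Model of the check: valid only if pointer tag equals shadow tag. */
static int tags_match(uint64_t tagged_addr, uint8_t shadow_tag)
{
  return tag_of(tagged_addr) == shadow_tag;
}
```

A pointer tagged 0xAB passes the check against shadow tag 0xAB and fails against any other value.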

[Stack requires cleanup on unwinding]

During normal operation of a hwasan sanitized program more space in the shadow memory becomes tagged as the stack grows. As the stack shrinks this shadow memory space must become untagged. If it is not untagged then when the stack grows again (during other function calls later on in the program) objects on the stack that are usually not tagged (e.g. parameters passed on the stack) can be placed in memory whose shadow space is tagged with something else, and accesses can cause false positive reports.

Hence we place untagging code on every epilogue of functions which tag some stack objects.

Moreover, the run-time library intercepts longjmp & setjmp to untag when the stack is unwound this way.

C++ exceptions are not yet handled, which means this sanitizer can not handle C++ code that throws exceptions -- it will give false positives after an exception has been thrown. The implementation that the hwasan library has for handling these relies on the frame pointer being after any local variables. This is not generally the case for GCC.

Returns whether we are tagging pointers and checking those tags on memory access.

References sanitize_flags_p(), and SANITIZE_HWADDRESS.

Referenced by asan_intercepted_p(), hwasan_instrument_reads(), hwasan_instrument_writes(), hwasan_memintrin(), hwasan_sanitize_stack_p(), hwassist_sanitize_p(), and sanopt_optimize_walker().

◆ hwasan_sanitize_stack_p()

| bool hwasan_sanitize_stack_p | ( | void | ) |

Are we tagging the stack?

References hwasan_sanitize_p().

Referenced by hwasan_sanitize_allocas_p(), and hwassist_sanitize_stack_p().

◆ hwasan_truncate_to_tag_size()

For stack tagging: Truncate `tag` to the number of bits that a tag uses (i.e. to HWASAN_TAG_SIZE). Store the result in `target` if it's convenient.

References expand_simple_binop(), gcc_assert, gen_int_mode(), GET_MODE, GET_MODE_PRECISION(), HOST_WIDE_INT_1U, HWASAN_TAG_SIZE, and OPTAB_WIDEN.

Referenced by expand_HWASAN_CHOOSE_TAG(), and hwasan_emit_prologue().

◆ hwassist_sanitize_p()

| bool hwassist_sanitize_p | ( | void | ) |

Returns whether we are tagging pointers and checking those tags on memory access.

References hwasan_sanitize_p(), and memtag_sanitize_p().

Referenced by asan_expand_check_ifn(), asan_expand_mark_ifn(), asan_expand_poison_ifn(), asan_instrument(), asan_poison_variable(), build_check_stmt(), get_mem_refs_of_builtin_call(), instrument_derefs(), maybe_instrument_call(), and report_error_func().

◆ hwassist_sanitize_stack_p()

| bool hwassist_sanitize_stack_p | ( | void | ) |

Are we tagging stack objects for hwasan or memtag?

References hwasan_sanitize_stack_p(), and memtag_sanitize_stack_p().

Referenced by align_local_variable(), asan_poison_variable(), asan_sanitize_use_after_scope(), expand_one_stack_var_1(), expand_one_stack_var_at(), expand_stack_vars(), expand_used_vars(), and init_vars_expansion().

◆ initialize_sanitizer_builtins()

| void initialize_sanitizer_builtins | ( | void | ) |

Initialize sanitizer.def builtins if the FE hasn't initialized them.

This file contains the definitions and documentation for the Address Sanitizer and Thread Sanitizer builtins used in the GNU compiler. Copyright (C) 2012-2026 Free Software Foundation, Inc. This file is part of GCC. GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version. GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

Before including this file, you should define a macro:

DEF_BUILTIN_STUB(ENUM, NAME)
DEF_SANITIZER_BUILTIN (ENUM, NAME, TYPE, ATTRS)

See builtins.def for details. The builtins are created by the C-family of FEs in c-family/c-common.cc, for other FEs by asan.cc.

This has to come before all the sanitizer builtins.

Address Sanitizer

Do not reorder the BUILT_IN_ASAN_{REPORT,CHECK}* builtins, e.g. cfgcleanup.cc relies on this order.

Hardware Address Sanitizer.

Thread Sanitizer

Undefined Behavior Sanitizer

Sanitizer coverage

This has to come after all the sanitizer builtins.

References ATTR_PURE_NOTHROW_LEAF_LIST, BOOL_TYPE_SIZE, build_function_type_list(), build_nonstandard_integer_type(), build_pointer_type(), build_qualified_type(), builtin_decl_implicit_p(), const_ptr_type_node, DEF_SANITIZER_BUILTIN_1, double_type_node, float_type_node, i, integer_type_node, NULL_TREE, pointer_sized_int_node, ptr_type_node, SANITIZE_OBJECT_SIZE, size_type_node, lang_hooks_for_types::type_for_size, TYPE_QUAL_CONST, TYPE_QUAL_VOLATILE, lang_hooks::types, uint16_type_node, uint32_type_node, uint64_type_node, unsigned_char_type_node, and void_type_node.

Referenced by asan_init_shadow_ptr_types(), asan_instrument(), hwasan_finish_file(), tsan_finish_file(), tsan_pass(), and ubsan_create_data().

◆ insert_if_then_before_iter()

static

Insert an if condition followed by a 'then block' right before the statement pointed to by ITER. The fallthrough block -- which is the else block of the condition as well as the destination of the outgoing edge of the 'then block' -- starts with the statement pointed to by ITER. COND is the condition of the if. If THEN_MORE_LIKELY_P is true, the probability of the edge to the 'then block' is higher than the probability of the edge to the fallthrough block. Upon completion of the function, *THEN_BB is set to the newly inserted 'then block' and similarly, *FALLTHROUGH_BB is set to the fallthrough block. *ITER is adjusted to still point to the same statement it was pointing to initially.

References create_cond_insert_point(), gsi_insert_after(), and GSI_NEW_STMT.

Referenced by asan_expand_check_ifn().

◆ instrument_builtin_call()

static

Instrument the call to a built-in memory access function that is pointed to by the iterator ITER. Upon completion, return TRUE iff *ITER has been advanced to the statement following the one it was originally pointing to.

References as_a(), asan_mem_ref_init(), asan_memintrin(), BUILT_IN_NORMAL, gcc_checking_assert, get_mem_refs_of_builtin_call(), gimple_call_builtin_p(), gimple_location(), gsi_for_stmt(), gsi_next(), gsi_stmt(), hwasan_memintrin(), instrument_derefs(), instrument_mem_region_access(), maybe_update_mem_ref_hash_table(), memtag_memintrin(), NULL, NULL_TREE, and asan_mem_ref::start.

Referenced by maybe_instrument_call().

◆ instrument_derefs()

static

If T represents a memory access, add instrumentation code before ITER. LOCATION is source code location. IS_STORE is either TRUE (for a store) or FALSE (for a load).

References ADDR_SPACE_GENERIC_P, aggregate_value_p(), asan_instrument_reads(), asan_instrument_writes(), asan_sanitize_use_after_scope(), build3(), build_check_stmt(), build_fold_addr_expr, current_function_decl, DECL_BIT_FIELD_REPRESENTATIVE, DECL_EXTERNAL, decl_function_context(), DECL_HARD_REGISTER, DECL_P, DECL_SIZE, DECL_THREAD_LOCAL_P, varpool_node::dynamically_initialized, EXPR_LOCATION, varpool_node::get(), get_inner_reference(), get_object_alignment(), has_mem_ref_been_instrumented(), hwasan_instrument_reads(), hwasan_instrument_writes(), hwassist_sanitize_p(), instrument_derefs(), int_size_in_bytes(), is_global_var(), mark_addressable(), NULL_TREE, poly_int_tree_p(), size_in_bytes(), TREE_ADDRESSABLE, TREE_CODE, TREE_OPERAND, TREE_STATIC, TREE_TYPE, TYPE_ADDR_SPACE, UNKNOWN_LOCATION, update_mem_ref_hash_table(), and VAR_P.

Referenced by instrument_builtin_call(), instrument_derefs(), maybe_instrument_assignment(), and maybe_instrument_call().

◆ instrument_mem_region_access()

static

Instrument an access to a contiguous memory region that starts at the address pointed to by BASE, over a length of LEN (expressed in units of sizeof (*BASE) bytes). ITER points to the instruction before which the instrumentation instructions must be inserted. LOCATION is the source location that the instrumentation instructions must have. If IS_STORE is true, then the memory access is a store; otherwise, it's a load.

References build_check_stmt(), gsi_for_stmt(), gsi_stmt(), has_mem_ref_been_instrumented(), integer_zerop(), INTEGRAL_TYPE_P, maybe_update_mem_ref_hash_table(), POINTER_TYPE_P, size_in_bytes(), tree_fits_shwi_p(), tree_to_shwi(), and TREE_TYPE.

Referenced by instrument_builtin_call().

◆ is_odr_indicator()

Return true if DECL, a global var, is an artificial ODR indicator symbol and therefore doesn't need protection.

References DECL_ARTIFICIAL, DECL_ATTRIBUTES, and lookup_attribute().

Referenced by asan_protect_global().

◆ make_pass_asan()

| gimple_opt_pass * make_pass_asan | ( | gcc::context * | ctxt | ) |

◆ make_pass_asan_O0()

| gimple_opt_pass * make_pass_asan_O0 | ( | gcc::context * | ctxt | ) |

◆ maybe_cast_to_ptrmode()

| tree maybe_cast_to_ptrmode | ( | location_t | loc, |

| tree | len, | ||

| gimple_stmt_iterator * | iter, | ||

| bool | before_p ) |

LEN can already have necessary size and precision; in that case, do not create a new variable.

References g, gimple_assign_lhs(), gimple_build_assign(), gimple_set_location(), gsi_insert_after(), GSI_NEW_STMT, gsi_safe_insert_before(), make_ssa_name(), pointer_sized_int_node, and ptrofftype_p().

Referenced by build_check_stmt().

◆ maybe_create_ssa_name()

static

BASE can already be an SSA_NAME; in that case, do not create a new SSA_NAME for it.

References g, gimple_assign_lhs(), gimple_build_assign(), gimple_set_location(), gsi_insert_after(), GSI_NEW_STMT, gsi_safe_insert_before(), make_ssa_name(), STRIP_USELESS_TYPE_CONVERSION, TREE_CODE, and TREE_TYPE.

Referenced by build_check_stmt().

◆ maybe_instrument_assignment()

static

Instrument the assignment statement ITER if it is subject to instrumentation. Return TRUE iff instrumentation actually happened. In that case, the iterator ITER is advanced to the next logical expression following the one initially pointed to by ITER, and the relevant memory reference whose access has been instrumented is added to the memory references hash table.

References gcc_assert, gimple_assign_lhs(), gimple_assign_load_p(), gimple_assign_rhs1(), gimple_assign_single_p(), gimple_location(), gimple_store_p(), gsi_next(), gsi_stmt(), instrument_derefs(), and NULL_TREE.

Referenced by transform_statements().

◆ maybe_instrument_call()

static

Instrument the function call pointed to by the iterator ITER, if it is subject to instrumentation. At the moment, the only function calls that are instrumented are some built-in functions that access memory. Look at instrument_builtin_call to learn more. Upon completion return TRUE iff *ITER was advanced to the statement following the one it was originally pointing to.

References aggregate_value_p(), BUILT_IN_NORMAL, builtin_decl_implicit(), DECL_FUNCTION_CODE(), g, gimple_build_call(), gimple_call_arg(), gimple_call_builtin_p(), gimple_call_fndecl(), gimple_call_fntype(), gimple_call_internal_p(), gimple_call_lhs(), gimple_call_noreturn_p(), gimple_call_num_args(), gimple_location(), gimple_set_location(), gimple_store_p(), gsi_next(), gsi_safe_insert_before(), gsi_stmt(), hwassist_sanitize_p(), i, instrument_builtin_call(), instrument_derefs(), is_gimple_min_invariant(), is_gimple_reg(), and TREE_TYPE.

Referenced by transform_statements().

◆ maybe_update_mem_ref_hash_table()

Insert a memory reference into the hash table if the access length can be determined at compile time.

References INTEGRAL_TYPE_P, POINTER_TYPE_P, size_in_bytes(), tree_fits_shwi_p(), tree_to_shwi(), TREE_TYPE, and update_mem_ref_hash_table().

Referenced by instrument_builtin_call(), and instrument_mem_region_access().

◆ memtag_memintrin()

| bool memtag_memintrin | ( | void | ) |

Are we tagging mem intrinsics?

References memtag_sanitize_p().

Referenced by instrument_builtin_call().

◆ memtag_sanitize_allocas_p()

| bool memtag_sanitize_allocas_p | ( | void | ) |

Are we tagging alloca objects?

References memtag_sanitize_stack_p().

Referenced by handle_builtin_alloca(), and handle_builtin_stack_restore().

◆ memtag_sanitize_p()

| bool memtag_sanitize_p | ( | void | ) |

MEMoryTAGging sanitizer (MEMTAG) uses a hardware based capability known as

memory tagging to detect memory safety vulnerabilities. Similar to HWASAN,

it is also a probabilistic method.

MEMTAG relies on the optional extension in armv8.5a known as MTE (Memory

Tagging Extension). The extension is available in AArch64 only and

introduces two types of tags:

- Logical Address Tag - bits 56-59 (TARGET_MEMTAG_TAG_BITSIZE) of the

virtual address.

- Allocation Tag - 4 bits for each tag granule (TARGET_MEMTAG_GRANULE_SIZE

set to 16 bytes), stored separately.

Load / store instructions raise an exception if tags differ, thereby

providing a faster way (than HWASAN) to detect memory safety issues.

Further, new instructions are available in MTE to manipulate (generate,

update address with) tags. Load / store instructions with SP base register

and immediate offset do not check tags.

PS: Currently, MEMTAG sanitizer is capable of stack (variable / memory)

tagging only.

In general, detecting stack-related memory bugs requires the compiler to:

- ensure that each tag granule is only used by one variable at a time.

This includes alloca.

- Tag/Color: put tags into each stack variable pointer.

- Untag: the function epilogue will retag the memory.

MEMTAG sanitizer is based off the HWASAN sanitizer implementation

internally. Similar to HWASAN:

- Assigning an independently random tag to each variable is carried out by

keeping a tagged base pointer. A tagged base pointer allows addressing

variables with (addr offset, tag offset).

Returns whether we are tagging pointers and checking those tags on memory access.
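
The tag comparison described above can be modelled in plain C. This is only a toy sketch, not how GCC or the hardware implements it: the `toy_*` names and the small tag array are hypothetical, the 4-bit logical tag is assumed to sit at bits 56-59 with a 16-byte granule as stated in the comment, and the wrap-around of the tag offset in `toy_variable_tag` is an assumption.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical constants mirroring the description: a 4-bit logical tag
   starting at bit 56, and one allocation tag per 16-byte granule.  */
enum { TOY_TAG_SHIFT = 56, TOY_GRANULE_SIZE = 16, TOY_GRANULES = 8 };
#define TOY_TAG_MASK ((uint64_t) 0xf << TOY_TAG_SHIFT)

/* Toy stand-in for the separately stored allocation tags.  */
static uint8_t toy_allocation_tags[TOY_GRANULES];

/* Return ADDR with its logical address tag replaced by TAG.  */
static uint64_t
toy_with_tag (uint64_t addr, unsigned tag)
{
  return (addr & ~TOY_TAG_MASK) | ((uint64_t) (tag & 0xf) << TOY_TAG_SHIFT);
}

/* Extract the logical address tag from ADDR.  */
static unsigned
toy_logical_tag (uint64_t addr)
{
  return (unsigned) ((addr >> TOY_TAG_SHIFT) & 0xf);
}

/* Tag of a variable addressed as (base tag, tag offset).  Wrapping within
   4 bits is an assumption of this sketch.  */
static unsigned
toy_variable_tag (unsigned base_tag, unsigned tag_offset)
{
  return (base_tag + tag_offset) & 0xf;
}

/* Return true if a load or store through TAGGED_ADDR would raise an
   exception, i.e. the logical tag differs from the granule's allocation
   tag.  The untagged address must lie inside the toy region.  */
static bool
toy_access_faults (uint64_t tagged_addr)
{
  uint64_t untagged = tagged_addr & ~TOY_TAG_MASK;
  return toy_logical_tag (tagged_addr)
	 != toy_allocation_tags[untagged / TOY_GRANULE_SIZE];
}
```

For example, after setting `toy_allocation_tags[2]` to 9, an access through `toy_with_tag (40, 9)` matches, while the same address carrying tag 10 is flagged.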

References sanitize_flags_p(), and SANITIZE_MEMTAG.

Referenced by expand_HWASAN_ALLOCA_POISON(), expand_HWASAN_ALLOCA_UNPOISON(), expand_HWASAN_MARK(), expand_used_vars(), handle_builtin_alloca(), hwasan_emit_prologue(), hwasan_emit_untag_frame(), hwassist_sanitize_p(), and memtag_memintrin().

◆ memtag_sanitize_stack_p()

| bool memtag_sanitize_stack_p | ( | void | ) |

Are we tagging the stack?

References sanitize_flags_p(), and SANITIZE_MEMTAG_STACK.

Referenced by hwassist_sanitize_stack_p(), and memtag_sanitize_allocas_p().

◆ report_error_func()

|

static |

Construct a function tree for __asan_report_{load,store}{1,2,4,8,16,_n}.

IS_STORE is either 1 (for a store) or 0 (for a load).

References builtin_decl_implicit(), exact_log2(), gcc_assert, hwassist_sanitize_p(), and size_in_bytes().

Referenced by asan_expand_check_ifn(), and asan_expand_poison_ifn().

◆ section_sanitized_p()

|

static |

Checks whether section SEC should be sanitized.

References FOR_EACH_VEC_ELT, i, and sanitized_sections.

Referenced by asan_protect_global().

◆ set_asan_shadow_offset()

| bool set_asan_shadow_offset | ( | const char * | val | ) |

Sets shadow offset to value in string VAL.

References asan_shadow_offset_computed, asan_shadow_offset_value, and errno.

Referenced by handle_common_deferred_options().
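
A minimal sketch of this kind of string-to-offset parsing, assuming the whole string must be a valid number and that a range error (reported through `errno`) counts as failure. The `toy_*` names are hypothetical stand-ins for the two variables listed above, and `strtoull` is this sketch's choice of conversion routine.

```c
#include <errno.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical stand-ins for asan_shadow_offset_value and
   asan_shadow_offset_computed.  */
static unsigned long long toy_shadow_offset_value;
static bool toy_shadow_offset_computed;

/* Parse VAL (decimal, octal or 0x-prefixed hex) and record it as the
   shadow offset.  Return false, leaving the state untouched, if VAL is
   empty, has trailing junk, or is out of range.  */
static bool
toy_set_shadow_offset (const char *val)
{
  char *endp;
  unsigned long long parsed;

  errno = 0;
  parsed = strtoull (val, &endp, 0);
  if (*val == '\0' || *endp != '\0' || errno != 0)
    return false;

  toy_shadow_offset_value = parsed;
  toy_shadow_offset_computed = true;
  return true;
}
```

Under these assumptions, `toy_set_shadow_offset ("0x7fff8000")` succeeds, while an empty string or `"12abc"` is rejected.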

◆ set_sanitized_sections()

| void set_sanitized_sections | ( | const char * | sections | ) |

Set list of user-defined sections that need to be sanitized.

References end(), FOR_EACH_VEC_ELT, free(), i, and sanitized_sections.

Referenced by handle_common_deferred_options().

◆ shadow_mem_size()

|

static |

Return number of shadow bytes that are occupied by a local variable of SIZE bytes.

References ASAN_SHADOW_GRANULARITY, gcc_assert, MAX_SUPPORTED_STACK_ALIGNMENT, and ROUND_UP.

Referenced by asan_expand_mark_ifn().
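
The computation suggested by the references above (ROUND_UP and ASAN_SHADOW_GRANULARITY) can be sketched as follows. The granularity of 8 bytes is an assumption that matches the `X >> 3` shadow mapping, and the `toy_*` names are hypothetical.

```c
#include <stdint.h>

/* Assumed granularity: one shadow byte covers 8 application bytes.  */
#define TOY_SHADOW_GRANULARITY 8u

/* Round X up to a multiple of ALIGN, which must be a power of two.  */
static uint64_t
toy_round_up (uint64_t x, uint64_t align)
{
  return (x + align - 1) & ~(align - 1);
}

/* Number of shadow bytes occupied by a local variable of SIZE bytes.  */
static uint64_t
toy_shadow_mem_size (uint64_t size)
{
  return toy_round_up (size, TOY_SHADOW_GRANULARITY) / TOY_SHADOW_GRANULARITY;
}
```

With this granularity a 24-byte variable occupies 3 shadow bytes and a 25-byte variable occupies 4.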

◆ stack_vars_base_reg_p()

For stack tagging: Check whether this RTX is a standard pointer addressing the base of the stack variables for this frame. Returns true if the RTX is either virtual_stack_vars_rtx or hwasan_frame_base_ptr.

References hwasan_frame_base_ptr, and virtual_stack_vars_rtx.

Referenced by expand_one_stack_var_at().

◆ transform_statements()

|

static |

Walk each instruction of every basic block and instrument those that represent memory references: loads, stores, or function calls. In a given basic block, this function avoids instrumenting memory references that have already been instrumented.

References asan_mark_p(), cfun, empty_mem_ref_hash_table(), FOR_EACH_BB_FN, free_mem_ref_resources(), gimple_assign_single_p(), gimple_clobber_p(), gsi_end_p(), gsi_next(), gsi_start_bb(), gsi_stmt(), has_stmt_been_instrumented_p(), i, basic_block_def::index, is_gimple_call(), last_basic_block_for_fn, maybe_instrument_assignment(), maybe_instrument_call(), nonfreeing_call_p(), NULL, single_pred(), and single_pred_p().

Referenced by asan_instrument().

◆ update_mem_ref_hash_table()

|

static |

Insert a memory reference into the hash table.

References asan_mem_ref_init(), asan_mem_ref_new(), hash_table< Descriptor, Lazy, Allocator >::find_slot(), get_mem_ref_hash_table(), NULL, and r.

Referenced by instrument_derefs(), and maybe_update_mem_ref_hash_table().

Variable Documentation

◆ asan_detect_stack_use_after_return

|

static |

Decl for __asan_option_detect_stack_use_after_return.

Referenced by asan_emit_stack_protection().

◆ asan_handled_variables

Set of variable declarations that are going to be guarded by use-after-scope sanitizer.

Referenced by asan_emit_stack_protection(), asan_expand_mark_ifn(), and expand_stack_vars().

◆ asan_local_shadow_memory_dynamic_address

|

static |

Local copy for the asan_shadow_memory_dynamic_address within the function.

Referenced by asan_maybe_insert_dynamic_shadow_at_function_entry(), and build_shadow_mem_access().

◆ asan_mem_ref_ht

|

static |

Referenced by empty_mem_ref_hash_table(), free_mem_ref_resources(), and get_mem_ref_hash_table().

◆ asan_mem_ref_pool

| object_allocator< asan_mem_ref > asan_mem_ref_pool("asan_mem_ref") |

Referenced by asan_mem_ref_new(), and free_mem_ref_resources().

◆ asan_memfn_rtls

|

static |

Support for --param asan-kernel-mem-intrinsic-prefix=1.

Referenced by asan_memfn_rtl().

◆ asan_shadow_memory_dynamic_address

|

static |

Referenced by get_asan_shadow_memory_dynamic_address_decl().

◆ asan_shadow_offset_computed

|

static |

Referenced by asan_shadow_offset(), asan_shadow_offset_set_p(), and set_asan_shadow_offset().

◆ asan_shadow_offset_value

|

static |

AddressSanitizer, a fast memory error detector. Copyright (C) 2012-2026 Free Software Foundation, Inc. Contributed by Kostya Serebryany <kcc@google.com> This file is part of GCC. GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version. GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

AddressSanitizer finds out-of-bounds and use-after-free bugs

with <2x slowdown on average.

The tool consists of two parts:

instrumentation module (this file) and a run-time library.

The instrumentation module adds a run-time check before every memory insn.

For an 8- or 16-byte load accessing address X:

ShadowAddr = (X >> 3) + Offset

ShadowValue = *(char*)ShadowAddr; // *(short*) for 16-byte access.

if (ShadowValue)

__asan_report_load8(X);

For a load of N bytes (N=1, 2 or 4) from address X:

ShadowAddr = (X >> 3) + Offset

ShadowValue = *(char*)ShadowAddr;

if (ShadowValue)

if ((X & 7) + N - 1 >= ShadowValue)

__asan_report_loadN(X);

Stores are instrumented similarly, but using __asan_report_storeN functions.
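
The two checks above can be modelled with a toy shadow array. This is a hedged sketch rather than the code GCC emits: `toy_shadow` and `toy_access_is_bad` are hypothetical names, the array is indexed by `X >> 3` directly instead of adding Offset, and 16-byte accesses are assumed to be 8-byte aligned. The partial-granule test uses `>=`, on the reading that a non-zero shadow value k means only the first k bytes of the granule are addressable.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical toy shadow region, indexed directly by (X >> 3).  The real
   mapping adds Offset; this array only models it.  */
enum { TOY_SHADOW_BYTES = 64 };
static int8_t toy_shadow[TOY_SHADOW_BYTES];

/* Return true if an N-byte access (N = 1, 2, 4, 8 or 16) at toy address X
   would be reported.  X must lie inside the toy region.  */
static bool
toy_access_is_bad (uintptr_t x, size_t n)
{
  int shadow_value = toy_shadow[x >> 3];

  if (n == 16)
    /* A 16-byte access covers two granules: both shadow bytes must be 0.  */
    return shadow_value != 0 || toy_shadow[(x >> 3) + 1] != 0;
  if (shadow_value == 0)
    return false;		/* The whole 8-byte granule is addressable.  */
  if (n == 8)
    return true;		/* An 8-byte access needs a zero shadow byte.  */

  /* Partial granule: the last accessed byte must fall within the first
     shadow_value bytes.  Negative (red zone) values always fail.  */
  return (int) ((x & 7) + n - 1) >= shadow_value;
}
```

For instance, with `toy_shadow[1] = 5` (bytes 8 to 12 addressable), a 4-byte access at address 8 passes while a 4-byte access at address 10 is reported.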

A call to __asan_init_vN() is inserted to the list of module CTORs.

N is the version number of the AddressSanitizer API. The changes between the

API versions are listed in libsanitizer/asan/asan_interface_internal.h.

The run-time library redefines malloc (so that redzones are inserted around

the allocated memory) and free (so that reuse of free-ed memory is delayed),

provides __asan_report* and __asan_init_vN functions.

Read more:

http://code.google.com/p/address-sanitizer/wiki/AddressSanitizerAlgorithm

The current implementation supports detection of out-of-bounds and

use-after-free in the heap, on the stack and for global variables.

[Protection of stack variables]

To understand how detection of out-of-bounds and use-after-free works

for stack variables, let's look at this example on x86_64 where the

stack grows downward:

int

foo ()

{

char a[24] = {0};

int b[2] = {0};

a[5] = 1;

b[1] = 2;

return a[5] + b[1];

}

For this function, the stack protected by asan will be organized as

follows, from the top of the stack to the bottom:

Slot 1/ [red zone of 32 bytes called 'RIGHT RedZone']

Slot 2/ [8 bytes of red zone, that adds up to the space of 'a' to make

the next slot be 32 bytes aligned; this one is called Partial

Redzone; this 32 bytes alignment is an asan constraint]

Slot 3/ [24 bytes for variable 'a']

Slot 4/ [red zone of 32 bytes called 'Middle RedZone']

Slot 5/ [24 bytes of Partial Red Zone (similar to slot 2)]

Slot 6/ [8 bytes for variable 'b']

Slot 7/ [32 bytes of Red Zone at the bottom of the stack, called

'LEFT RedZone']
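
The slot arithmetic in this layout can be reproduced with a short sketch. It assumes, as the example suggests, that every variable is padded up to a multiple of 32 bytes and separated from its neighbours by a 32-byte red zone; offsets are measured from the bottom of the frame (the start of the LEFT red zone), and the `toy_*` names are hypothetical.

```c
#include <stddef.h>

/* Assumed red zone size and slot alignment, both 32 bytes.  */
enum { TOY_REDZONE = 32 };

/* Round X up to a multiple of ALIGN.  */
static size_t
toy_round_up (size_t x, size_t align)
{
  return (x + align - 1) / align * align;
}

/* SIZES lists the N variables from the bottom of the frame to the top.
   Store each variable's offset from the frame bottom in OFFSETS and
   return the total size of the protected frame.  */
static size_t
toy_layout (const size_t *sizes, size_t n, size_t *offsets)
{
  size_t cur = TOY_REDZONE;	/* LEFT red zone.  */

  for (size_t i = 0; i < n; i++)
    {
      offsets[i] = cur;
      /* The variable plus its partial red zone.  */
      cur += toy_round_up (sizes[i], TOY_REDZONE);
      /* Middle red zone, or the RIGHT red zone after the last one.  */
      cur += TOY_REDZONE;
    }
  return cur;
}
```

For the function foo above, passing sizes 8 (for 'b') and 24 (for 'a') places 'b' at offset 32 and 'a' at offset 96, for a total of 160 bytes, which matches the seven slots listed.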

The 32 bytes of LEFT red zone at the bottom of the stack can be

decomposed as such:

1/ The first 8 bytes contain a magical asan number that is always

0x41B58AB3.

2/ The following 8 bytes contain a pointer to a string (to be

parsed at runtime by the runtime asan library), whose format is

the following:

"<function-name> <space> <num-of-variables-on-the-stack>

(<32-bytes-aligned-offset-in-bytes-of-variable> <space>

<length-of-var-in-bytes> ){n} "

where '(...){n}' means the content inside the parenthesis occurs 'n'

times, with 'n' being the number of variables on the stack.

3/ The following 8 bytes contain the PC of the current function which

will be used by the run-time library to print an error message.

4/ The following 8 bytes are reserved for internal use by the run-time.

The shadow memory for that stack layout is going to look like this: