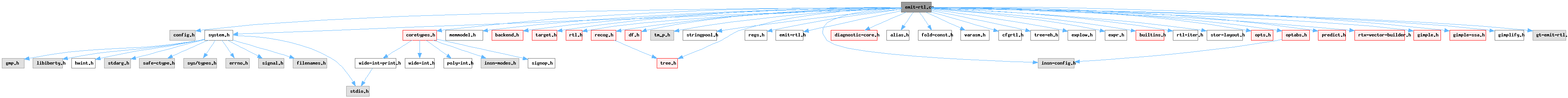

#include "config.h"
#include "system.h"
#include "coretypes.h"
#include "memmodel.h"
#include "backend.h"
#include "target.h"
#include "rtl.h"
#include "tree.h"
#include "df.h"
#include "tm_p.h"
#include "stringpool.h"
#include "insn-config.h"
#include "regs.h"
#include "emit-rtl.h"
#include "recog.h"
#include "diagnostic-core.h"
#include "alias.h"
#include "fold-const.h"
#include "varasm.h"
#include "cfgrtl.h"
#include "tree-eh.h"
#include "explow.h"
#include "expr.h"
#include "builtins.h"
#include "rtl-iter.h"
#include "stor-layout.h"
#include "opts.h"
#include "optabs.h"
#include "predict.h"
#include "rtx-vector-builder.h"
#include "gimple.h"
#include "gimple-ssa.h"
#include "gimplify.h"
#include "bbitmap.h"
#include "gt-emit-rtl.h"

Data Structures

| struct | const_int_hasher |

| struct | const_wide_int_hasher |

| struct | const_poly_int_hasher |

| struct | reg_attr_hasher |

| struct | const_double_hasher |

| struct | const_fixed_hasher |

Macros

| #define | initial_regno_reg_rtx (this_target_rtl->x_initial_regno_reg_rtx) |

| #define | cur_insn_uid (crtl->emit.x_cur_insn_uid) |

| #define | cur_debug_insn_uid (crtl->emit.x_cur_debug_insn_uid) |

| #define | first_label_num (crtl->emit.x_first_label_num) |

Macro Definition Documentation

◆ cur_debug_insn_uid

| #define cur_debug_insn_uid (crtl->emit.x_cur_debug_insn_uid) |

Referenced by get_max_insn_count(), init_emit(), make_debug_insn_raw(), and set_new_first_and_last_insn().

◆ cur_insn_uid

| #define cur_insn_uid (crtl->emit.x_cur_insn_uid) |

Referenced by copy_delay_slot_insn(), emit_barrier(), emit_barrier_after(), emit_barrier_before(), emit_jump_table_data(), emit_label(), emit_label_after(), emit_label_before(), get_max_insn_count(), init_emit(), make_call_insn_raw(), make_debug_insn_raw(), make_insn_raw(), make_jump_insn_raw(), make_note_raw(), and set_new_first_and_last_insn().

◆ first_label_num

| #define first_label_num (crtl->emit.x_first_label_num) |

◆ initial_regno_reg_rtx

| #define initial_regno_reg_rtx (this_target_rtl->x_initial_regno_reg_rtx) |

Referenced by init_emit(), and init_emit_regs().

Function Documentation

◆ active_insn_p()

Return true if INSN is an active insn.

References CALL_P, GET_CODE, JUMP_P, JUMP_TABLE_DATA_P, NONJUMP_INSN_P, PATTERN(), and reload_completed.

Referenced by add_cfis_to_fde(), bb_ok_for_noce_convert_multiple_sets(), bb_valid_for_noce_process_p(), bbs_ok_for_cmove_arith(), can_fallthru(), count_bb_insns(), emit_pattern_after_setloc(), emit_pattern_before_setloc(), expensive_function_p(), flow_active_insn_p(), flow_find_cross_jump(), flow_find_head_matching_sequence(), init_noce_multiple_sets_info(), loop_exit_at_end_p(), maybe_add_nop_after_section_switch(), next_active_insn(), next_active_insn_bb(), noce_convert_multiple_sets_1(), noce_emit_all_but_last(), peep2_attempt(), prev_active_insn(), prev_active_insn_bb(), and reemit_insn_block_notes().
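The predicates in the References line suggest the shape of the test. The sketch below models it with hypothetical toy enums (not GCC's rtx codes): calls, jumps and jump-table data are always active, while an ordinary insn stops counting as active once reload has completed if its pattern is a bare USE or CLOBBER. The USE/CLOBBER detail is our reading of GCC's source, so treat this as an illustration rather than the definitive logic.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical, heavily simplified stand-ins for GCC's insn codes.  */
enum insn_kind { K_INSN, K_JUMP_INSN, K_CALL_INSN, K_JUMP_TABLE_DATA,
                 K_DEBUG_INSN, K_NOTE, K_BARRIER, K_CODE_LABEL };

/* Hypothetical stand-ins for GET_CODE (PATTERN (insn)).  */
enum pat_code { P_SET, P_USE, P_CLOBBER, P_OTHER };

struct toy_insn {
  enum insn_kind kind;
  enum pat_code pattern;
};

/* Calls, jumps and jump tables are always active.  An ordinary insn is
   active unless reload has completed and its pattern is USE or CLOBBER.
   Notes, labels, barriers and debug insns are never active.  */
static bool toy_active_insn_p (const struct toy_insn *insn,
                               bool reload_completed)
{
  assert (insn != 0);
  if (insn->kind == K_CALL_INSN || insn->kind == K_JUMP_INSN
      || insn->kind == K_JUMP_TABLE_DATA)
    return true;
  if (insn->kind == K_INSN)
    return !reload_completed
           || (insn->pattern != P_USE && insn->pattern != P_CLOBBER);
  return false;
}
```

Passing reload_completed as a parameter (GCC reads a global) keeps the sketch self-contained and easy to exercise.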

◆ add_function_usage_to()

Append CALL_FUSAGE to the CALL_INSN_FUNCTION_USAGE for CALL_INSN.

References CALL_INSN_FUNCTION_USAGE, CALL_P, gcc_assert, and XEXP.

Referenced by emit_call_1(), and expand_builtin_apply().

◆ add_insn()

| void add_insn (rtx_insn *insn) |

Add INSN to the end of the doubly-linked list. INSN may be an INSN, JUMP_INSN, CALL_INSN, CODE_LABEL, BARRIER or NOTE.

References get_insns(), get_last_insn(), link_insn_into_chain(), NULL, set_first_insn(), and set_last_insn().

Referenced by address_reload_context::emit_autoinc(), emit_barrier(), emit_call_insn(), emit_debug_insn(), emit_insn(), emit_jump_insn(), emit_jump_table_data(), emit_label(), emit_libcall_block_1(), emit_note(), emit_note_copy(), and inc_for_reload().
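As a rough model of what "add to the end of the doubly-linked list" means, the sketch below appends a node to a toy chain that tracks its first and last elements, which loosely corresponds to get_insns()/get_last_insn() and set_first_insn()/set_last_insn(). The chain_insn and insn_chain types are hypothetical; real insns are rtx_insn objects linked through NEXT_INSN/PREV_INSN.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical toy node, not GCC's rtx_insn.  */
struct chain_insn {
  int uid;
  struct chain_insn *prev, *next;
};

/* Hypothetical chain endpoints (first/last insn of the sequence).  */
struct insn_chain {
  struct chain_insn *first, *last;
};

/* Append INSN after the current last insn and update the endpoints.  */
static void toy_add_insn (struct insn_chain *chain, struct chain_insn *insn)
{
  assert (insn->prev == NULL && insn->next == NULL);
  insn->prev = chain->last;
  if (chain->last)
    chain->last->next = insn;
  else
    chain->first = insn;   /* empty chain: INSN is also the first insn */
  chain->last = insn;
}
```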

◆ add_insn_after()

| void add_insn_after (rtx_insn *insn, rtx_insn *after, basic_block bb) |

Like add_insn_after_nobb, but try to set BLOCK_FOR_INSN. If BB is NULL, an attempt is made to infer the bb from AFTER. This and the next function should be the only functions called to insert an insn once delay slots have been filled since only they know how to update a SEQUENCE.

References add_insn_after_nobb(), BARRIER_P, BB_END, BLOCK_FOR_INSN(), df_insn_rescan(), INSN_P, NOTE_INSN_BASIC_BLOCK_P, and set_block_for_insn().

Referenced by delete_from_delay_slot(), emit_barrier_after(), emit_delay_sequence(), emit_label_after(), emit_note_after(), emit_pattern_after_noloc(), make_return_insns(), and relax_delay_slots().

◆ add_insn_after_nobb()

Add INSN into the doubly-linked list after insn AFTER.

References rtx_insn::deleted(), gcc_assert, get_current_sequence(), sequence_stack::last, link_insn_into_chain(), sequence_stack::next, NEXT_INSN(), and NULL.

Referenced by add_insn_after(), and emit_note_after().

◆ add_insn_before()

| void add_insn_before (rtx_insn *insn, rtx_insn *before, basic_block bb) |

Like add_insn_before_nobb, but try to set BLOCK_FOR_INSN. If BB is NULL, an attempt is made to infer the bb from BEFORE. This and the previous function should be the only functions called to insert an insn once delay slots have been filled since only they know how to update a SEQUENCE.

References add_insn_before_nobb(), BARRIER_P, BB_HEAD, BLOCK_FOR_INSN(), df_insn_rescan(), gcc_assert, INSN_P, NOTE_INSN_BASIC_BLOCK_P, and set_block_for_insn().

Referenced by emit_barrier_before(), emit_label_before(), emit_note_before(), and emit_pattern_before_noloc().

◆ add_insn_before_nobb()

Add INSN into the doubly-linked list before insn BEFORE.

References rtx_insn::deleted(), sequence_stack::first, gcc_assert, get_current_sequence(), link_insn_into_chain(), sequence_stack::next, NULL, and PREV_INSN().

Referenced by add_insn_before(), and emit_note_before().
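Both add_insn_after_nobb and add_insn_before_nobb come down to splicing a node between two neighbours, and their References lines both point at link_insn_into_chain. The sketch below uses a hypothetical link_between helper and toy types to show that splice, including the endpoint update when the new insn becomes first or last. It does not model the sequence stack that the real functions also consult (get_current_sequence, sequence_stack::next).

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical toy node and chain endpoints.  */
struct link_insn {
  int uid;
  struct link_insn *prev, *next;
};

struct link_chain {
  struct link_insn *first, *last;
};

/* Hypothetical helper: splice INSN between PREV and NEXT (either may be
   NULL), updating the endpoints when INSN becomes first or last.  */
static void link_between (struct link_chain *c, struct link_insn *insn,
                          struct link_insn *prev, struct link_insn *next)
{
  insn->prev = prev;
  insn->next = next;
  if (prev) prev->next = insn; else c->first = insn;
  if (next) next->prev = insn; else c->last = insn;
}

/* Insert INSN immediately after AFTER.  */
static void toy_add_insn_after (struct link_chain *c,
                                struct link_insn *insn,
                                struct link_insn *after)
{
  assert (after != NULL);
  link_between (c, insn, after, after->next);
}

/* Insert INSN immediately before BEFORE.  */
static void toy_add_insn_before (struct link_chain *c,
                                 struct link_insn *insn,
                                 struct link_insn *before)
{
  assert (before != NULL);
  link_between (c, insn, before->prev, before);
}
```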

◆ adjust_address_1()

| rtx adjust_address_1 (rtx memref, machine_mode mode, poly_int64 offset, int validate, int adjust_address, int adjust_object, poly_int64 size) |

Return a memory reference like MEMREF, but with its mode changed to MODE and its address offset by OFFSET bytes. If VALIDATE is nonzero, the memory address is forced to be valid. If ADJUST_ADDRESS is zero, OFFSET is only used to update MEM_ATTRS and the caller is responsible for adjusting MEMREF's base register. If ADJUST_OBJECT is zero, the underlying object associated with the memory reference is left unchanged and the caller is responsible for dealing with it. Otherwise, if the new memory reference is outside the underlying object, even partially, then the object is dropped. SIZE, if nonzero, is the size of an access in cases where MODE has no inherent size.

References adjust_address, change_address_1(), copy_rtx(), gcc_assert, get_address_mode(), GET_CODE, get_mem_attrs(), GET_MODE, GET_MODE_ALIGNMENT, known_eq, maybe_gt, memory_address_addr_space_p(), MIN, mode_mem_attrs, NULL_TREE, attrs::offset, mem_attrs::offset, plus_constant(), set_mem_attrs(), mem_attrs::size, targetm, trunc_int_for_mode(), and XEXP.

Referenced by adjust_automodify_address_1(), store_expr(), and widen_memory_access().
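The ADJUST_OBJECT rule can be illustrated with plain integers. In the sketch below, toy_mem_attrs and its object_size field are hypothetical simplifications (real MEM_ATTRS use poly_int64 values, "known" flags and an alias set, and GCC's bounds test is not literally this): after shifting the offset, the object is dropped if the access starts before it or ends past it.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical, simplified memory attributes.  */
struct toy_mem_attrs {
  bool has_object;   /* stands in for a non-null MEM_EXPR */
  long offset;       /* byte offset of the access within the object */
  long size;         /* size of the access in bytes */
  long object_size;  /* hypothetical: size of the underlying object */
};

/* Shift ATTRS by DELTA bytes and give the access NEW_SIZE bytes.  When
   ADJUST_OBJECT is true, drop the object if the new access is not
   entirely inside it; otherwise leave the object to the caller.  */
static struct toy_mem_attrs
toy_adjust_attrs (struct toy_mem_attrs attrs, long delta, long new_size,
                  bool adjust_object)
{
  assert (new_size > 0);
  attrs.offset += delta;
  attrs.size = new_size;
  if (adjust_object && attrs.has_object
      && (attrs.offset < 0 || attrs.offset + attrs.size > attrs.object_size))
    attrs.has_object = false;
  return attrs;
}
```

For a 16-byte object, moving an 8-byte access by 8 bytes keeps the object, while moving it by 12 bytes makes the access run 4 bytes past the end, so the object is dropped unless ADJUST_OBJECT is false.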

◆ adjust_automodify_address_1()

| rtx adjust_automodify_address_1 (rtx memref, machine_mode mode, rtx addr, poly_int64 offset, int validate) |

Return a memory reference like MEMREF, but with its mode changed to MODE and its address changed to ADDR, which is assumed to be MEMREF offset by OFFSET bytes. If VALIDATE is nonzero, the memory address is forced to be valid.

References adjust_address_1(), change_address_1(), and mem_attrs::offset.

◆ adjust_reg_mode()

| void adjust_reg_mode (rtx reg, machine_mode mode) |

Adjust REG in-place so that it has mode MODE. It is assumed that the new register is a (possibly paradoxical) lowpart of the old one.

References byte_lowpart_offset(), GET_MODE, PUT_MODE(), and update_reg_offset().

Referenced by subst_mode(), try_combine(), and undo_to_marker().

◆ byte_lowpart_offset()

| poly_int64 byte_lowpart_offset (machine_mode outer_mode, machine_mode inner_mode) |

Return the number of bytes between the start of an OUTER_MODE in-memory value and the start of an INNER_MODE in-memory value, given that the former is a lowpart of the latter. It may be a paradoxical lowpart, in which case the offset will be negative on big-endian targets.

References paradoxical_subreg_p(), and subreg_lowpart_offset().

Referenced by adjust_reg_mode(), alter_subreg(), gen_lowpart_for_combine(), gen_lowpart_general(), gen_lowpart_if_possible(), replace_reg_with_saved_mem(), rtl_for_decl_location(), rtx_equal_for_field_assignment_p(), set_reg_attrs_for_decl_rtl(), set_reg_attrs_from_value(), simplify_immed_subreg(), track_loc_p(), var_lowpart(), and vt_add_function_parameter().
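The arithmetic is easiest to see with byte sizes. The sketch below assumes a target whose byte and word endianness agree (GCC also handles mixed-endian targets, which this omits) and uses hypothetical helpers that take sizes instead of machine modes. For a narrower lowpart on a big-endian target the offset is inner minus outer; for a paradoxical lowpart the roles swap and the result is negated, which is why it goes negative on big-endian targets.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical helper: offset of the lowpart OUTER_BYTES value inside
   an INNER_BYTES value.  Paradoxical cases (outer wider than inner)
   give 0.  */
static long toy_subreg_lowpart_offset (long outer_bytes, long inner_bytes,
                                       bool big_endian)
{
  assert (outer_bytes > 0 && inner_bytes > 0);
  if (outer_bytes > inner_bytes)
    return 0;
  return big_endian ? inner_bytes - outer_bytes : 0;
}

/* For a paradoxical lowpart, negate the offset of the inner value
   within the outer one; otherwise use the plain lowpart offset.  */
static long toy_byte_lowpart_offset (long outer_bytes, long inner_bytes,
                                     bool big_endian)
{
  if (outer_bytes > inner_bytes)
    return -toy_subreg_lowpart_offset (inner_bytes, outer_bytes, big_endian);
  return toy_subreg_lowpart_offset (outer_bytes, inner_bytes, big_endian);
}
```

For example, the low 4 bytes of an 8-byte value start 4 bytes in on a big-endian target and at byte 0 on a little-endian one; viewing a 4-byte value as the lowpart of an 8-byte one gives -4 on big-endian.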

◆ change_address()

Like change_address_1 with VALIDATE nonzero, but we are not saying in what way we are changing MEMREF, so we only preserve the alias set.

References mem_attrs::align, attrs, change_address_1(), gen_rtx_MEM(), get_mem_attrs(), GET_MODE, mem_attrs_eq_p(), MEM_COPY_ATTRIBUTES, mode_mem_attrs, NULL_TREE, set_mem_attrs(), mem_attrs::size, mem_attrs::size_known_p, and XEXP.

Referenced by assign_parm_setup_block(), drop_writeback(), emit_block_cmp_via_loop(), emit_block_move_via_loop(), expand_assignment(), expand_builtin_init_trampoline(), expand_expr_real_1(), extract_writebacks(), store_expr(), and try_store_by_multiple_pieces().

◆ change_address_1()

Return a memory reference like MEMREF, but with its mode changed to MODE and its address changed to ADDR. (VOIDmode means don't change the mode. NULL for ADDR means don't change the address.) VALIDATE is nonzero if the returned memory location is required to be valid. INPLACE is true if any changes can be made directly to MEMREF or false if MEMREF must be treated as immutable. The memory attributes are not changed.

References gcc_assert, gen_rtx_MEM(), GET_MODE, lra_in_progress, MEM_ADDR_SPACE, MEM_COPY_ATTRIBUTES, MEM_P, memory_address_addr_space(), memory_address_addr_space_p(), reload_completed, reload_in_progress, rtx_equal_p(), and XEXP.

Referenced by adjust_address_1(), adjust_automodify_address_1(), change_address(), offset_address(), replace_equiv_address(), and replace_equiv_address_nv().

◆ clear_mem_offset()

| void clear_mem_offset (rtx mem) |

Clear the offset of MEM.

References attrs, get_mem_attrs(), and set_mem_attrs().

Referenced by expand_partial_load_optab_fn(), expand_partial_store_optab_fn(), get_memory_rtx(), merge_memattrs(), and try_store_by_multiple_pieces().

◆ clear_mem_size()

| void clear_mem_size (rtx mem) |

Clear the size of MEM.

References attrs, get_mem_attrs(), and set_mem_attrs().

Referenced by merge_memattrs().

◆ complete_seq()

Read and emit a sequence of instructions from the bytecode in SEQ, which was generated by genemit.cc. Replace operand placeholders with the values given in OPERANDS.

References end_sequence().

◆ const_double_from_real_value()

| rtx const_double_from_real_value (REAL_VALUE_TYPE value, machine_mode mode) |

Return a CONST_DOUBLE rtx for a floating-point value specified by VALUE in mode MODE.

References lookup_const_double(), PUT_MODE(), REAL_VALUE_TYPE, rtx_alloc(), rtx_def::u::rv, and rtx_def::u.

Referenced by avoid_constant_pool_reference(), compress_float_constant(), const_vector_from_tree(), expand_expr_real_1(), expand_fix(), expand_float(), fold_rtx(), init_emit_once(), native_decode_rtx(), relational_result(), simplify_context::simplify_binary_operation_1(), simplify_const_binary_operation(), and simplify_const_unary_operation().
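Judging from its References line, const_double_from_real_value builds a candidate CONST_DOUBLE and hands it to lookup_const_double, which backs onto the const_double_hasher table listed under Data Structures. That is hash-consing: equal (mode, value) constants share one object, so they can be compared by pointer. The sketch below shows the idea with hypothetical names and a fixed-size pool, using a linear scan in place of a real hash table.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical toy constant node: a (mode, value) pair.  */
struct toy_const {
  int mode;
  double value;
};

#define TOY_POOL_SIZE 64

static struct toy_const toy_pool[TOY_POOL_SIZE];
static size_t toy_pool_used;

/* Return the unique node for (MODE, VALUE), creating it on first use.
   Values are compared bit for bit, so 0.0 and -0.0 stay distinct.  */
static const struct toy_const *toy_lookup_const (int mode, double value)
{
  for (size_t i = 0; i < toy_pool_used; i++)
    if (toy_pool[i].mode == mode
        && memcmp (&toy_pool[i].value, &value, sizeof value) == 0)
      return &toy_pool[i];
  assert (toy_pool_used < TOY_POOL_SIZE);
  toy_pool[toy_pool_used].mode = mode;
  toy_pool[toy_pool_used].value = value;
  return &toy_pool[toy_pool_used++];
}
```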

◆ const_fixed_from_fixed_value()

| rtx const_fixed_from_fixed_value (FIXED_VALUE_TYPE value, machine_mode mode) |

Return a CONST_FIXED rtx for a fixed-point value specified by VALUE in mode MODE.

References FIXED_VALUE_TYPE, rtx_def::u::fv, lookup_const_fixed(), PUT_MODE(), rtx_alloc(), and rtx_def::u.

Referenced by const_fixed_from_double_int().

◆ const_vec_series_p_1()

A subroutine of const_vec_series_p that handles the case in which: (GET_CODE (X) == CONST_VECTOR && CONST_VECTOR_NPATTERNS (X) == 1 && !CONST_VECTOR_DUPLICATE_P (X)) is known to hold.

References CONST0_RTX, CONST_VECTOR_ELT, CONST_VECTOR_ENCODED_ELT, CONST_VECTOR_NUNITS, CONST_VECTOR_STEPPED_P, GET_MODE, GET_MODE_CLASS, GET_MODE_INNER, rtx_equal_p(), and simplify_binary_operation().

Referenced by const_vec_series_p().

◆ const_vector_elt()

Return the value of element I of CONST_VECTOR X.

References CONST_VECTOR_ENCODED_ELT, const_vector_encoded_nelts(), const_vector_int_elt(), CONST_VECTOR_NPATTERNS, CONST_VECTOR_STEPPED_P, GET_MODE, GET_MODE_INNER, i, immed_wide_int_const(), and XVECLEN.

◆ const_vector_int_elt()

Return the value of element I of CONST_VECTOR X as a wide_int.

References wi::add(), CONST_VECTOR_ENCODED_ELT, const_vector_encoded_nelts(), CONST_VECTOR_NPATTERNS, CONST_VECTOR_STEPPED_P, count, GET_MODE, GET_MODE_INNER, i, wi::sub(), and XVECLEN.

Referenced by const_vector_elt().
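A CONST_VECTOR stores only NPATTERNS interleaved patterns of up to three encoded elements each. Its References line (CONST_VECTOR_NPATTERNS, CONST_VECTOR_STEPPED_P, wi::sub, wi::add) points at how the remaining elements are recovered. The sketch below models that with a hypothetical flattened struct and plain long values instead of wide_int: directly encoded elements are returned as-is; otherwise a non-stepped pattern repeats its final value, and a stepped pattern extends the series using the difference of its last two encoded values.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical flattened model of a CONST_VECTOR encoding.  */
struct toy_const_vector {
  const long *encoded;          /* npatterns * nelts_per_pattern values */
  unsigned npatterns;           /* number of interleaved patterns */
  unsigned nelts_per_pattern;   /* 1, 2 or 3 */
  bool stepped;                 /* final elements form a linear series */
};

/* Return element I of X.  */
static long toy_const_vector_int_elt (const struct toy_const_vector *x,
                                      unsigned i)
{
  unsigned encoded_nelts = x->npatterns * x->nelts_per_pattern;
  if (i < encoded_nelts)
    return x->encoded[i];                 /* directly encoded */

  unsigned count = i / x->npatterns;      /* position within its pattern */
  unsigned pattern = i % x->npatterns;    /* which pattern I belongs to */
  unsigned final_i = encoded_nelts - x->npatterns + pattern;

  if (!x->stepped)
    return x->encoded[final_i];           /* final value repeats */

  /* Stepped patterns have three encoded elements, so COUNT >= 3 here.  */
  assert (x->nelts_per_pattern == 3);
  long v1 = x->encoded[final_i - x->npatterns];
  long v2 = x->encoded[final_i];
  return v2 + (long) (count - 2) * (v2 - v1);
}
```

For example, with two stepped patterns encoded as { 0, 10, 1, 11, 2, 12 }, element 7 belongs to the second pattern (10, 11, 12, ...) at position 3, so its value is 13.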

◆ copy_delay_slot_insn()

Return a copy of INSN that can be used in a SEQUENCE delay slot, on the assumption that INSN itself remains in its original place.

References as_a(), copy_rtx(), cur_insn_uid, and INSN_UID().

Referenced by fill_simple_delay_slots(), fill_slots_from_thread(), and steal_delay_list_from_target().

◆ copy_insn()

Create a new copy of an rtx. This function differs from copy_rtx in that it handles SCRATCHes and ASM_OPERANDs properly. INSN doesn't really have to be a full INSN; it could be just the pattern.

References copy_asm_constraints_vector, copy_asm_operands_vector, copy_insn_1(), copy_insn_n_scratches, orig_asm_constraints_vector, and orig_asm_operands_vector.

Referenced by bypass_block(), compare_and_jump_seq(), do_remat(), eliminate_partially_redundant_load(), eliminate_regs_in_insn(), emit_copy_of_insn_after(), lra_process_new_insns(), and try_optimize_cfg().

◆ copy_insn_1()

Recursively create a new copy of an rtx for copy_insn. This function differs from copy_rtx in that it handles SCRATCHes and ASM_OPERANDs properly. Normally, this function is not used directly; use copy_insn as front end. However, you could first copy an insn pattern with copy_insn and then use this function afterwards to properly copy any REG_NOTEs containing SCRATCHes.

References ASM_OPERANDS_INPUT_CONSTRAINT_VEC, ASM_OPERANDS_INPUT_VEC, CASE_CONST_ANY, copy_asm_constraints_vector, copy_asm_operands_vector, copy_insn_1(), copy_insn_n_scratches, copy_insn_scratch_in, copy_insn_scratch_out, gcc_assert, gcc_unreachable, GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, HARD_REGISTER_NUM_P, i, INSN_P, NULL, orig_asm_constraints_vector, orig_asm_operands_vector, ORIGINAL_REGNO, REG_P, REGNO, rtvec_alloc(), RTX_CODE, RTX_FLAG, shallow_copy_rtx(), shared_const_p(), XEXP, XVEC, XVECEXP, and XVECLEN.

Referenced by copy_insn(), copy_insn_1(), duplicate_reg_note(), eliminate_regs_in_insn(), and gcse_emit_move_after().

◆ copy_rtx_if_shared()

Mark ORIG as in use, and return a copy of it if it was already in use. Recursively does the same for subexpressions. Uses copy_rtx_if_shared_1 to reduce stack space.

References copy_rtx_if_shared_1().

Referenced by emit_notes_in_bb(), try_combine(), unshare_all_rtl(), unshare_all_rtl_1(), and unshare_all_rtl_in_chain().
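The mark-and-copy scheme can be sketched on a toy expression tree. In the hypothetical model below, each node carries a used flag standing in for RTX_FLAG (used). The first visit to a node marks it, and any later visit (a shared reference) replaces that reference with a shallow copy before recursing, so subexpressions reachable through the copy are unshared too. Unlike GCC, the sketch copies every node kind; the real code leaves shareable objects such as hard registers and constants alone (note REG_P, CASE_CONST_ANY and shared_const_p in the References) and expects the used flags to have been cleared beforehand.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical binary expression node with a "used" mark.  */
struct toy_rtx {
  int code;
  bool used;
  struct toy_rtx *op[2];
};

/* Mark *LOC as in use; if it was already in use, replace *LOC with a
   shallow copy.  Then recurse into the operands of the node now at
   *LOC.  */
static void toy_copy_if_shared (struct toy_rtx **loc)
{
  struct toy_rtx *x = *loc;
  if (x == NULL)
    return;
  if (x->used)
    {
      struct toy_rtx *copy = malloc (sizeof *copy);
      assert (copy != NULL);
      *copy = *x;          /* shallow copy, like shallow_copy_rtx */
      *loc = x = copy;
    }
  x->used = true;
  for (int i = 0; i < 2; i++)
    toy_copy_if_shared (&x->op[i]);
}
```

Walking two expressions that both point at the same leaf leaves the first reference intact and gives the second its own copy.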

◆ copy_rtx_if_shared_1()

static

Mark *ORIG1 as in use, and set it to a copy of it if it was already in use. Recursively does the same for subexpressions.

References CASE_CONST_ANY, copy_rtx_if_shared_1(), gen_rtvec_v(), GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, HARD_REGISTER_NUM_P, i, NULL, ORIGINAL_REGNO, REG_P, REGNO, RTX_FLAG, shallow_copy_rtx(), shared_const_p(), XEXP, XVEC, XVECEXP, and XVECLEN.

Referenced by copy_rtx_if_shared(), and copy_rtx_if_shared_1().

◆ curr_insn_location()

| location_t curr_insn_location (void) |

Get current location.

References curr_location.

Referenced by expand_expr_real_1(), expand_expr_real_gassign(), expand_gimple_basic_block(), expand_gimple_cond(), expand_thunk(), make_call_insn_raw(), make_debug_insn_raw(), make_insn_raw(), make_jump_insn_raw(), and store_expr().

◆ delete_insns_since()

| void delete_insns_since (rtx_insn *from) |

Delete all insns made since FROM. FROM becomes the new last instruction.

References set_first_insn(), set_last_insn(), and SET_NEXT_INSN().

Referenced by base_to_reg(), can_widen_mult_without_libcall(), default_zero_call_used_regs(), emit_add3_insn(), address_reload_context::emit_autoinc(), emit_conditional_add(), emit_conditional_move(), emit_conditional_neg_or_complement(), emit_cstore(), emit_insn_if_valid_for_reload_1(), emit_store_flag(), emit_store_flag_int(), expand_abs_nojump(), expand_addsub_overflow(), expand_atomic_load(), expand_atomic_store(), expand_binop(), expand_binop_directly(), expand_builtin_interclass_mathfn(), expand_builtin_issignaling(), expand_builtin_signbit(), expand_ccmp_expr(), expand_DIVMOD(), expand_divmod(), expand_doubleword_shift(), expand_fix(), expand_mul_overflow(), expand_neg_overflow(), expand_one_cmpl_abs_nojump(), expand_parity(), expand_sfix_optab(), expand_twoval_binop(), expand_twoval_unop(), expand_unop(), expand_unop_direct(), expand_vec_perm_const(), extract_integral_bit_field(), force_subreg(), gen_reload_chain_without_interm_reg_p(), inc_for_reload(), lra_emit_add(), maybe_emit_sync_lock_test_and_set(), maybe_legitimize_operand_same_code(), maybe_legitimize_operands(), move_block_from_reg(), move_block_to_reg(), prepare_cmp_insn(), process_address_1(), store_bit_field_using_insv(), store_integral_bit_field(), widen_bswap(), and widen_leading().
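Many of the callers above follow the same idiom: remember get_last_insn () before trying an expansion, and call delete_insns_since on that marker if the attempt fails. On a toy chain (hypothetical types; node storage is owned elsewhere, so "deleting" just unlinks the tail), the operation cuts the chain after FROM and makes FROM the last insn, and a NULL FROM empties the chain. The References line (set_first_insn, set_last_insn, SET_NEXT_INSN) matches this shape.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical toy node and chain endpoints.  */
struct tail_insn {
  int uid;
  struct tail_insn *prev, *next;
};

struct tail_chain {
  struct tail_insn *first, *last;
};

/* Drop every insn after FROM, so FROM becomes the last insn.
   A NULL FROM empties the chain.  */
static void toy_delete_insns_since (struct tail_chain *c,
                                    struct tail_insn *from)
{
  if (from == NULL)
    c->first = NULL;
  else
    from->next = NULL;
  c->last = from;
}
```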

◆ emit()

Emit the rtl pattern X as an appropriate kind of insn. Also emit a following barrier if the instruction needs one and if ALLOW_BARRIER_P is true. If X is a label, it is simply added into the insn chain.

References any_uncondjump_p(), classify_insn(), emit_barrier(), emit_call_insn(), emit_debug_insn(), emit_insn(), emit_jump_insn(), emit_label(), gcc_unreachable, and GET_CODE.

Referenced by ensure_regno(), and gen_reg_rtx().
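The dispatch described above can be modelled as a switch over the class that classify_insn reports. In the sketch below, the toy_class enum, the toy_emitted record and the uncond_jump flag (standing in for any_uncondjump_p) are all hypothetical; the comments name the real emitter each branch corresponds to. Only jumps can be followed by a barrier, and only when ALLOW_BARRIER_P is true.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical classification results, standing in for rtx codes.  */
enum toy_class { T_CODE_LABEL, T_INSN, T_JUMP_INSN, T_CALL_INSN,
                 T_DEBUG_INSN };

/* Hypothetical record of what was emitted, to make the sketch
   observable.  */
struct toy_emitted {
  enum toy_class last_kind;
  int barriers;
};

static void toy_emit (struct toy_emitted *out, enum toy_class cls,
                      bool uncond_jump, bool allow_barrier_p)
{
  switch (cls)
    {
    case T_CODE_LABEL:   /* emit_label */
    case T_INSN:         /* emit_insn */
    case T_CALL_INSN:    /* emit_call_insn */
    case T_DEBUG_INSN:   /* emit_debug_insn */
      out->last_kind = cls;
      break;
    case T_JUMP_INSN:    /* emit_jump_insn */
      out->last_kind = cls;
      if (allow_barrier_p && uncond_jump)
        out->barriers++; /* emit_barrier */
      break;
    default:
      assert (0 && "unreachable");  /* gcc_unreachable */
    }
}
```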

◆ emit_barrier()

| rtx_barrier *emit_barrier (void) |

Make an insn of code BARRIER and add it to the end of the doubly-linked list.

References add_insn(), as_a(), cur_insn_uid, INSN_UID(), and rtx_alloc().

Referenced by allocate_dynamic_stack_space(), compare_by_pieces(), do_tablejump(), duplicate_insn_chain(), emit(), emit_case_dispatch_table(), emit_indirect_jump(), emit_jump(), expand_builtin_return(), expand_builtin_trap(), expand_builtin_unreachable(), expand_divmod(), expand_doubleword_clz_ctz_ffs(), expand_doubleword_shift(), expand_expr_real_2(), expand_fix(), expand_float(), expand_gimple_tailcall(), find_end_label(), and store_expr().

◆ emit_barrier_after()

| rtx_barrier *emit_barrier_after (rtx_insn *after) |

Make an insn of code BARRIER and output it after the insn AFTER.

References add_insn_after(), as_a(), cur_insn_uid, INSN_UID(), NULL, and rtx_alloc().

Referenced by commit_one_edge_insertion(), delete_from_delay_slot(), emit_barrier_after_bb(), emit_library_call_value_1(), expand_call(), find_cond_trap(), fixup_reorder_chain(), make_return_insns(), try_optimize_cfg(), try_redirect_by_replacing_jump(), and update_cfg_for_uncondjump().

◆ emit_barrier_before()

| rtx_barrier *emit_barrier_before (rtx_insn *before) |

Make an insn of code BARRIER and output it before the insn BEFORE.

References add_insn_before(), as_a(), cur_insn_uid, INSN_UID(), NULL, and rtx_alloc().

◆ emit_call_insn()

Make an insn of code CALL_INSN with pattern X and add it to the end of the doubly-linked list.

References add_insn(), emit_insn(), gcc_unreachable, GET_CODE, and make_call_insn_raw().

Referenced by emit().

◆ emit_call_insn_after()

Like emit_call_insn_after_noloc, but set INSN_LOCATION according to AFTER.

References emit_pattern_after(), and make_call_insn_raw().

Referenced by emit_copy_of_insn_after().

◆ emit_call_insn_after_noloc()

Make an instruction with body X and code CALL_INSN and output it after the instruction AFTER.

References emit_pattern_after_noloc(), make_call_insn_raw(), and NULL.

◆ emit_call_insn_after_setloc()

Like emit_call_insn_after_noloc, but set INSN_LOCATION according to LOC.

References emit_pattern_after_setloc(), and make_call_insn_raw().

◆ emit_call_insn_before()

Like emit_call_insn_before_noloc, but set INSN_LOCATION according to BEFORE.

References emit_pattern_before(), and make_call_insn_raw().

◆ emit_call_insn_before_noloc()

Make an instruction with body X and code CALL_INSN and output it before the instruction BEFORE.

References emit_pattern_before_noloc(), make_call_insn_raw(), and NULL.

◆ emit_call_insn_before_setloc()

Like emit_call_insn_before_noloc, but set INSN_LOCATION according to LOC.

References emit_pattern_before_setloc(), and make_call_insn_raw().

◆ emit_clobber()

Emit a clobber of lvalue X.

References emit_clobber(), emit_insn(), GET_CODE, and XEXP.

Referenced by convert_mode_scalar(), do_clobber_return_reg(), emit_clobber(), emit_move_complex_parts(), emit_move_multi_word(), emit_stack_restore(), expand_binop(), expand_builtin_longjmp(), expand_builtin_nonlocal_goto(), expand_builtin_setjmp_receiver(), expand_clobber(), expand_doubleword_bswap(), expand_eh_return(), extract_integral_bit_field(), gen_clobber(), initialize_uninitialized_regs(), match_reload(), resolve_simple_move(), store_constructor(), and widen_operand().

◆ emit_copy_of_insn_after()

Produce an exact duplicate of INSN after AFTER, taking care to update libcall regions if present.

References CALL_INSN_FUNCTION_USAGE, copy_insn(), CROSSING_JUMP_P, duplicate_reg_note(), emit_call_insn_after(), emit_debug_insn_after(), emit_insn_after(), emit_jump_insn_after(), gcc_unreachable, GET_CODE, INSN_CODE, INSN_LOCATION(), mark_jump_label(), NONDEBUG_INSN_P, NULL_RTX, PATTERN(), REG_NOTE_KIND, REG_NOTES, RTL_CONST_CALL_P, RTL_LOOPING_CONST_OR_PURE_CALL_P, RTL_PURE_CALL_P, RTX_FRAME_RELATED_P, SIBLING_CALL_P, and XEXP.

Referenced by duplicate_insn_chain(), expand_gimple_tailcall(), make_return_insns(), and relax_delay_slots().

◆ emit_debug_insn()

Make an insn of code DEBUG_INSN with pattern X and add it to the end of the doubly-linked list.

References add_insn(), as_a(), gcc_unreachable, GET_CODE, get_last_insn(), last, make_debug_insn_raw(), sequence_stack::next, NEXT_INSN(), and NULL_RTX.

Referenced by emit(), and expand_gimple_basic_block().

◆ emit_debug_insn_after()

Like emit_debug_insn_after_noloc, but set INSN_LOCATION according to AFTER.

References emit_pattern_after(), and make_debug_insn_raw().

Referenced by dead_debug_insert_temp(), and emit_copy_of_insn_after().

◆ emit_debug_insn_after_noloc()

Make an instruction with body X and code DEBUG_INSN and output it after the instruction AFTER.

References emit_pattern_after_noloc(), make_debug_insn_raw(), and NULL.

◆ emit_debug_insn_after_setloc()

Like emit_debug_insn_after_noloc, but set INSN_LOCATION according to LOC.

References emit_pattern_after_setloc(), and make_debug_insn_raw().

◆ emit_debug_insn_before()

Like emit_debug_insn_before_noloc, but set INSN_LOCATION according to BEFORE.

References emit_pattern_before(), and make_debug_insn_raw().

Referenced by avoid_complex_debug_insns(), dead_debug_insert_temp(), dead_debug_promote_uses(), delete_trivially_dead_insns(), and propagate_for_debug_subst().

◆ emit_debug_insn_before_noloc()

Make an instruction with body X and code DEBUG_INSN and output it before the instruction BEFORE.

References emit_pattern_before_noloc(), make_debug_insn_raw(), and NULL.

◆ emit_debug_insn_before_setloc()

Like emit_debug_insn_before_noloc, but set INSN_LOCATION according to LOC.

References emit_pattern_before_setloc(), and make_debug_insn_raw().

◆ emit_insn()

Take X and emit it at the end of the doubly-linked INSN list. Returns the last insn emitted.

References add_insn(), as_a(), gcc_unreachable, GET_CODE, get_last_insn(), last, make_insn_raw(), sequence_stack::next, NEXT_INSN(), and NULL_RTX.

Referenced by allocate_dynamic_stack_space(), anti_adjust_stack_and_probe_stack_clash(), asan_clear_shadow(), assign_parm_setup_reg(), assign_parms(), base_to_reg(), check_and_process_move(), compute_can_copy(), convert_mode_scalar(), curr_insn_transform(), default_speculation_safe_value(), do_compare_and_jump(), do_remat(), emit(), emit_add2_insn(), emit_add3_insn(), address_reload_context::emit_autoinc(), emit_call_1(), emit_call_insn(), emit_clobber(), emit_cmp_and_jump_insns(), emit_delay_sequence(), emit_inc_dec_insn_before(), emit_input_reload_insns(), emit_insn_if_valid_for_reload_1(), emit_move_ccmode(), emit_move_insn_1(), emit_move_list(), emit_move_multi_word(), emit_move_via_integer(), emit_output_reload_insns(), emit_stack_probe(), emit_stack_restore(), emit_stack_save(), emit_use(), expand_absneg_bit(), expand_asm_loc(), expand_asm_memory_blockage(), expand_asm_reg_clobber_mem_blockage(), expand_asm_stmt(), expand_atomic_fetch_op(), expand_binop(), expand_binop_directly(), expand_builtin_apply(), expand_builtin_feclear_feraise_except(), expand_builtin_fegetround(), expand_builtin_goacc_parlevel_id_size(), expand_builtin_int_roundingfn(), expand_builtin_int_roundingfn_2(), expand_builtin_longjmp(), expand_builtin_mathfn_3(), expand_builtin_mathfn_ternary(), expand_builtin_nonlocal_goto(), expand_builtin_prefetch(), expand_builtin_return(), expand_builtin_setjmp_receiver(), expand_builtin_setjmp_setup(), expand_builtin_trap(), expand_call(), expand_ccmp_expr(), expand_clrsb_using_clz(), expand_compare_and_swap_loop(), expand_cond_expr_using_cmove(), expand_copysign_bit(), expand_ctz(), expand_DIVMOD(), expand_doubleword_clz_ctz_ffs(), expand_doubleword_popcount(), expand_dw2_landing_pad_for_region(), expand_eh_return(), expand_expr_divmod(), expand_expr_real_1(), expand_expr_real_2(), expand_ffs(), expand_function_end(), expand_GOACC_DIM_POS(), expand_GOACC_DIM_SIZE(), expand_GOMP_SIMT_LANE(), expand_HWASAN_ALLOCA_POISON(), 
expand_HWASAN_ALLOCA_UNPOISON(), expand_HWASAN_MARK(), expand_mem_thread_fence(), expand_memory_blockage(), expand_POPCOUNT(), expand_sdiv_pow2(), expand_UNIQUE(), expand_unop(), expand_unop_direct(), expand_vector_broadcast(), expmed_mult_highpart_optab(), find_shift_sequence(), gen_cond_trap(), gen_reload(), gen_reload_chain_without_interm_reg_p(), move_by_pieces_d::generate(), store_by_pieces_d::generate(), hwasan_emit_prologue(), hwasan_emit_untag_frame(), inc_for_reload(), pieces_addr::increment_address(), inline_string_cmp(), insert_insn_on_edge(), insert_move_for_subreg(), ira(), lra_emit_move(), lra_process_new_insns(), make_prologue_seq(), make_split_prologue_seq(), match_reload(), maybe_emit_unop_insn(), maybe_expand_insn(), maybe_optimize_mod_cmp(), maybe_optimize_pow2p_mod_cmp(), move_block_from_reg(), move_block_to_reg(), noce_convert_multiple_sets_1(), noce_emit_all_but_last(), noce_emit_cmove(), noce_emit_insn(), noce_emit_move_insn(), noce_emit_store_flag(), prepare_call_address(), prepare_cmp_insn(), prepare_copy_insn(), prepend_insn_to_edge(), probe_stack_range(), process_addr_reg(), process_address_1(), remove_inheritance_pseudos(), stack_protect_epilogue(), stack_protect_prologue(), store_constructor(), and thread_prologue_and_epilogue_insns().

◆ emit_insn_after()

Like emit_insn_after_noloc, but set INSN_LOCATION according to AFTER.

References emit_pattern_after(), and make_insn_raw().

Referenced by combine_var_copies_in_loop_exit(), doloop_modify(), eliminate_regs_in_insn(), emit_common_heads_for_components(), emit_common_tails_for_components(), emit_copy_of_insn_after(), emit_moves(), emit_reload_insns(), expand_builtin_saveregs(), expand_builtin_strlen(), expand_function_end(), ext_dce_try_optimize_rshift(), fill_slots_from_thread(), find_and_remove_re(), find_moveable_pseudos(), find_reloads(), gcse_emit_move_after(), get_arg_pointer_save_area(), insert_one_insn(), insert_prologue_epilogue_for_components(), insert_var_expansion_initialization(), lra_process_new_insns(), maybe_add_nop_after_section_switch(), move_insn_for_shrink_wrap(), move_invariant_reg(), move_unallocated_pseudos(), pre_insert_copy_insn(), replace_store_insn(), resolve_clobber(), rtl_lv_add_condition_to_bb(), sjlj_emit_function_enter(), sjlj_emit_function_exit(), split_edge_and_insert(), split_live_ranges_for_shrink_wrap(), and try_merge_compare().

◆ emit_insn_after_1()

static

Helper for emit_insn_after, handles lists of instructions efficiently.

References BARRIER_P, BB_END, BLOCK_FOR_INSN(), df_insn_rescan(), df_set_bb_dirty(), sequence_stack::first, get_last_insn(), last, NEXT_INSN(), set_block_for_insn(), set_last_insn(), SET_NEXT_INSN(), and SET_PREV_INSN().

Referenced by emit_pattern_after_noloc().

◆ emit_insn_after_noloc()

| rtx_insn *emit_insn_after_noloc (rtx x, rtx_insn *after, basic_block bb) |

Make X be output after the insn AFTER and set the BB of insn. If BB is NULL, an attempt is made to infer the BB from AFTER.

References emit_pattern_after_noloc(), and make_insn_raw().

Referenced by cfg_layout_merge_blocks(), commit_one_edge_insertion(), emit_nop_for_unique_locus_between(), expand_gimple_basic_block(), fixup_reorder_chain(), insert_insn_end_basic_block(), insert_insn_start_basic_block(), insert_insn_start_basic_block(), and lra_process_new_insns().

◆ emit_insn_after_setloc()

Like emit_insn_after_noloc, but set INSN_LOCATION according to LOC.

References emit_pattern_after_setloc(), and make_insn_raw().

Referenced by peep2_attempt(), and try_split().

◆ emit_insn_before()

Like emit_insn_before_noloc, but set INSN_LOCATION according to BEFORE.

References emit_pattern_before(), and make_insn_raw().

Referenced by attempt_change(), combine_and_move_insns(), emit_common_tails_for_components(), emit_inc_dec_insn_before(), emit_moves(), emit_reload_insns(), emit_to_new_bb_before(), expand_builtin_apply_args(), expand_builtin_strlen(), expand_function_end(), expand_gimple_tailcall(), find_moveable_pseudos(), find_reloads(), find_reloads_address(), find_reloads_subreg_address(), find_reloads_toplev(), fix_crossing_unconditional_branches(), force_move_args_size_note(), gen_call_used_regs_seq(), hwasan_maybe_emit_frame_base_init(), initialize_uninitialized_regs(), insert_base_initialization(), insert_one_insn(), insert_prologue_epilogue_for_components(), instantiate_virtual_regs_in_insn(), lra_process_new_insns(), make_more_copies(), match_asm_constraints_1(), match_asm_constraints_2(), pre_insert_copy_insn(), replace_read(), resolve_shift_zext(), resolve_simple_move(), sjlj_emit_function_enter(), sjlj_mark_call_sites(), split_iv(), subst_reg_equivs(), thread_prologue_and_epilogue_insns(), try_optimize_cfg(), update_block(), and update_ld_motion_stores().

◆ emit_insn_before_noloc()

| rtx_insn *emit_insn_before_noloc (rtx x, rtx_insn *before, basic_block bb) |

Make X be output before the instruction BEFORE.

References emit_pattern_before_noloc(), and make_insn_raw().

Referenced by commit_one_edge_insertion(), insert_insn_end_basic_block(), and lra_process_new_insns().

◆ emit_insn_before_setloc()

Like emit_insn_before_noloc, but set INSN_LOCATION according to LOC.

References emit_pattern_before_setloc(), and make_insn_raw().

Referenced by cond_move_process_if_block(), emit_to_new_bb_before(), find_cond_trap(), noce_convert_multiple_sets(), noce_process_if_block(), noce_try_abs(), noce_try_addcc(), noce_try_bitop(), noce_try_cmove(), noce_try_cmove_arith(), noce_try_cond_arith(), noce_try_ifelse_collapse(), noce_try_inverse_constants(), noce_try_minmax(), noce_try_move(), noce_try_sign_bit_splat(), noce_try_sign_mask(), noce_try_store_flag(), noce_try_store_flag_constants(), and noce_try_store_flag_mask().

◆ emit_jump_insn()

Make an insn of code JUMP_INSN with pattern X and add it to the end of the doubly-linked list.

References add_insn(), as_a(), gcc_unreachable, GET_CODE, last, make_jump_insn_raw(), sequence_stack::next, NEXT_INSN(), and NULL.

Referenced by compare_and_jump_seq(), create_eh_forwarder_block(), do_tablejump(), emit(), emit_cmp_and_jump_insn_1(), emit_jump(), emit_likely_jump_insn(), emit_unlikely_jump_insn(), expand_asm_stmt(), expand_builtin_return(), expand_divmod(), expand_doubleword_clz_ctz_ffs(), expand_doubleword_shift(), expand_expr_real_2(), expand_fix(), expand_float(), find_end_label(), fix_crossing_conditional_branches(), get_uncond_jump_length(), make_epilogue_seq(), maybe_expand_jump_insn(), and store_expr().

◆ emit_jump_insn_after()

rtx_jump_insn * emit_jump_insn_after (rtx pattern, rtx_insn * after)

Like emit_jump_insn_after_noloc, but set INSN_LOCATION according to AFTER.

References as_a(), emit_pattern_after(), and make_jump_insn_raw().

Referenced by add_labels_and_missing_jumps(), doloop_modify(), emit_copy_of_insn_after(), find_cond_trap(), and handle_simple_exit().

◆ emit_jump_insn_after_noloc()

rtx_jump_insn * emit_jump_insn_after_noloc (rtx x, rtx_insn * after)

Make an insn of code JUMP_INSN with body X and output it after the insn AFTER.

References as_a(), emit_pattern_after_noloc(), make_jump_insn_raw(), and NULL.

Referenced by try_redirect_by_replacing_jump().

◆ emit_jump_insn_after_setloc()

rtx_jump_insn * emit_jump_insn_after_setloc (rtx pattern, rtx_insn * after, location_t loc)

Like emit_jump_insn_after_noloc, but set INSN_LOCATION according to LOC.

References as_a(), emit_pattern_after_setloc(), and make_jump_insn_raw().

Referenced by force_nonfallthru_and_redirect().

◆ emit_jump_insn_before()

rtx_jump_insn * emit_jump_insn_before (rtx pattern, rtx_insn * before)

Like emit_jump_insn_before_noloc, but set INSN_LOCATION according to BEFORE.

References as_a(), emit_pattern_before(), and make_jump_insn_raw().

Referenced by cse_insn().

◆ emit_jump_insn_before_noloc()

rtx_jump_insn * emit_jump_insn_before_noloc (rtx x, rtx_insn * before)

Make an instruction with body X and code JUMP_INSN and output it before the instruction BEFORE.

References as_a(), emit_pattern_before_noloc(), make_jump_insn_raw(), and NULL.

◆ emit_jump_insn_before_setloc()

rtx_jump_insn * emit_jump_insn_before_setloc (rtx pattern, rtx_insn * before, location_t loc)

Like emit_jump_insn_before_noloc, but set INSN_LOCATION according to LOC.

References as_a(), emit_pattern_before_setloc(), and make_jump_insn_raw().

◆ emit_jump_table_data()

rtx_jump_table_data * emit_jump_table_data (rtx table)

Make an insn of code JUMP_TABLE_DATA and add it to the end of the doubly-linked list.

References add_insn(), as_a(), BLOCK_FOR_INSN(), cur_insn_uid, INSN_UID(), NULL, PATTERN(), rtx_alloc(), and table.

Referenced by emit_case_dispatch_table().

◆ emit_label()

rtx_code_label * emit_label (rtx uncast_label)

Add the label LABEL to the end of the doubly-linked list.

References add_insn(), as_a(), cur_insn_uid, gcc_checking_assert, and INSN_UID().

Referenced by allocate_dynamic_stack_space(), anti_adjust_stack_and_probe(), anti_adjust_stack_and_probe_stack_clash(), asan_clear_shadow(), asan_emit_stack_protection(), compare_by_pieces(), create_eh_forwarder_block(), do_compare_rtx_and_jump(), do_jump(), do_jump_1(), do_jump_by_parts_equality_rtx(), do_jump_by_parts_greater_rtx(), do_jump_by_parts_zero_rtx(), dw2_build_landing_pads(), emit(), emit_block_cmp_via_loop(), emit_block_move_via_loop(), emit_block_move_via_oriented_loop(), emit_case_dispatch_table(), emit_stack_clash_protection_probe_loop_end(), emit_stack_clash_protection_probe_loop_start(), emit_store_flag_force(), expand_abs(), expand_addsub_overflow(), expand_arith_overflow_result_store(), expand_asm_stmt(), expand_builtin_atomic_compare_exchange(), expand_builtin_strub_leave(), expand_builtin_strub_update(), expand_compare_and_swap_loop(), expand_copysign_absneg(), expand_divmod(), expand_doubleword_clz_ctz_ffs(), expand_doubleword_shift(), expand_eh_return(), expand_expr_real_1(), expand_expr_real_2(), expand_ffs(), expand_fix(), expand_float(), expand_function_end(), expand_gimple_basic_block(), expand_gimple_tailcall(), expand_label(), expand_mul_overflow(), expand_neg_overflow(), expand_sdiv_pow2(), expand_sjlj_dispatch_table(), expand_smod_pow2(), expand_vector_ubsan_overflow(), find_end_label(), fix_crossing_conditional_branches(), get_uncond_jump_length(), inline_string_cmp(), prepare_call_address(), probe_stack_range(), sjlj_emit_dispatch_table(), stack_protect_epilogue(), store_constructor(), store_expr(), and try_store_by_multiple_pieces().

◆ emit_label_after()

Emit the label LABEL after the insn AFTER.

References add_insn_after(), cur_insn_uid, gcc_checking_assert, INSN_UID(), and NULL.

Referenced by find_end_label(), and get_label_before().

◆ emit_label_before()

rtx_code_label * emit_label_before (rtx_code_label * label, rtx_insn * before)

Emit the label LABEL before the insn BEFORE.

References add_insn_before(), cur_insn_uid, gcc_checking_assert, INSN_UID(), and NULL.

Referenced by block_label(), and label_rtx_for_bb().

◆ emit_likely_jump_insn()

Make an insn of code JUMP_INSN with pattern X, add a REG_BR_PROB note indicating a very likely branch probability, and add it to the end of the doubly-linked list.

References add_reg_br_prob_note(), emit_jump_insn(), and profile_probability::very_likely().

◆ emit_note()

Make an insn of code NOTE with note kind KIND and add it to the end of the doubly-linked list.

References add_insn(), and make_note_raw().

Referenced by duplicate_insn_chain(), expand_function_start(), expand_gimple_basic_block(), expand_gimple_tailcall(), lra(), make_epilogue_seq(), make_prologue_seq(), reemit_insn_block_notes(), reload(), reload_as_needed(), thread_prologue_and_epilogue_insns(), and update_sjlj_context().

◆ emit_note_after()

Emit a note of subtype SUBTYPE after the insn AFTER.

References add_insn_after(), add_insn_after_nobb(), BARRIER_P, BB_END, BLOCK_FOR_INSN(), make_note_raw(), note_outside_basic_block_p(), and NULL.

Referenced by add_cfi(), create_basic_block_structure(), emit_note_eh_region_end(), emit_note_insn_var_location(), expand_gimple_basic_block(), handle_simple_exit(), reemit_insn_block_notes(), rtl_split_block(), and thread_prologue_and_epilogue_insns().

◆ emit_note_before()

Emit a note of subtype SUBTYPE before the insn BEFORE.

References add_insn_before(), add_insn_before_nobb(), BARRIER_P, BB_HEAD, BLOCK_FOR_INSN(), make_note_raw(), note_outside_basic_block_p(), and NULL.

Referenced by add_cfis_to_fde(), change_scope(), convert_to_eh_region_ranges(), create_basic_block_structure(), emit_note_insn_var_location(), insert_section_boundary_note(), reemit_insn_block_notes(), and reemit_marker_as_note().

◆ emit_note_copy()

Emit a copy of note ORIG.

References add_insn(), make_note_raw(), NOTE_DATA, and NOTE_KIND.

Referenced by duplicate_insn_chain().

◆ emit_pattern_after()

static

Insert PATTERN after AFTER. MAKE_RAW indicates how to turn PATTERN into a real insn. SKIP_DEBUG_INSNS indicates whether to insert after any DEBUG_INSNs.

References DEBUG_INSN_P, emit_pattern_after_noloc(), emit_pattern_after_setloc(), INSN_LOCATION(), INSN_P, NULL, and PREV_INSN().

Referenced by emit_call_insn_after(), emit_debug_insn_after(), emit_insn_after(), and emit_jump_insn_after().

◆ emit_pattern_after_noloc()

static

References add_insn_after(), as_a(), emit_insn_after_1(), gcc_assert, gcc_unreachable, GET_CODE, last, and NULL_RTX.

Referenced by emit_call_insn_after_noloc(), emit_debug_insn_after_noloc(), emit_insn_after_noloc(), emit_jump_insn_after_noloc(), emit_pattern_after(), and emit_pattern_after_setloc().

◆ emit_pattern_after_setloc()

static

Insert PATTERN after AFTER, setting its INSN_LOCATION to LOC. MAKE_RAW indicates how to turn PATTERN into a real insn.

References active_insn_p(), emit_pattern_after_noloc(), INSN_LOCATION(), JUMP_TABLE_DATA_P, last, NEXT_INSN(), NULL, and NULL_RTX.

Referenced by emit_call_insn_after_setloc(), emit_debug_insn_after_setloc(), emit_insn_after_setloc(), emit_jump_insn_after_setloc(), and emit_pattern_after().

◆ emit_pattern_before()

static

Insert PATTERN before BEFORE. MAKE_RAW indicates how to turn PATTERN into a real insn. SKIP_DEBUG_INSNS indicates whether to insert before any DEBUG_INSNs. INSNP indicates if PATTERN is meant for an INSN as opposed to a JUMP_INSN, CALL_INSN, etc.

References DEBUG_INSN_P, emit_pattern_before_noloc(), emit_pattern_before_setloc(), INSN_LOCATION(), INSN_P, sequence_stack::next, NULL, and PREV_INSN().

Referenced by emit_call_insn_before(), emit_debug_insn_before(), emit_insn_before(), and emit_jump_insn_before().

◆ emit_pattern_before_noloc()

static

Emit insn(s) of a given code and pattern at a specified place within the doubly-linked list.

All of the emit_foo global entry points accept an object X, which is either an insn list or the PATTERN of a single instruction.

There are thus a few canonical ways to generate code and emit it at a specific place in the instruction stream. For example, suppose we want to emit some instructions before an instruction named SPOT. We might do it like this:

    start_sequence ();
    ... emit the new instructions ...
    insns_head = end_sequence ();
    emit_insn_before (insns_head, SPOT);

It used to be common to generate SEQUENCE rtl instead, but that practice is a relic of the past and no longer occurs: the SEQUENCE generated would almost certainly die right after it was created, leaving RTL memory heavily fragmented.

References add_insn_before(), as_a(), gcc_assert, gcc_unreachable, GET_CODE, last, sequence_stack::next, NEXT_INSN(), and NULL_RTX.

Referenced by emit_call_insn_before_noloc(), emit_debug_insn_before_noloc(), emit_insn_before_noloc(), emit_jump_insn_before_noloc(), emit_pattern_before(), and emit_pattern_before_setloc().
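The canonical start_sequence / end_sequence / emit_insn_before pattern described above can be modeled as a stack of insn chains. In this hypothetical sketch, ToyEmitter, Chain and their methods are illustrative stand-ins, strings play the role of insns, and the real functions' handling of basic blocks, locations and the sequence_stack structure is not modeled.

```cpp
#include <cassert>
#include <list>
#include <string>
#include <utility>
#include <vector>

// Hypothetical toy model: strings stand in for insns, and a Chain stands in
// for one doubly-linked insn list.
using Chain = std::list<std::string>;

// Hypothetical toy emitter holding a stack of chains. The bottom entry plays
// the role of the function's main insn stream; the top entry is where
// emit () currently appends.
class ToyEmitter {
 public:
  ToyEmitter() : stack_(1) {}

  // Like emit_insn (x): append to the chain that is currently open.
  void emit(const std::string &insn) { stack_.back().push_back(insn); }

  // Like start_sequence (): save the current chain and open an empty one.
  void start_sequence() { stack_.emplace_back(); }

  // Like end_sequence (): close the current chain, restore the saved one,
  // and return the completed chain to the caller.
  Chain end_sequence() {
    assert(stack_.size() > 1 && "end_sequence without start_sequence");
    Chain done = std::move(stack_.back());
    stack_.pop_back();
    return done;
  }

  // Like emit_insn_before (seq, SPOT): splice the whole chain SEQ in front
  // of the first insn named SPOT in the currently open chain.
  void emit_before(Chain seq, const std::string &spot) {
    Chain &cur = stack_.back();
    for (auto it = cur.begin(); it != cur.end(); ++it) {
      if (*it == spot) {
        cur.splice(it, seq);
        return;
      }
    }
    assert(false && "SPOT not found in the current chain");
  }

  const Chain &current() const { return stack_.back(); }

 private:
  std::vector<Chain> stack_;
};
```

Because each start_sequence-style call pushes a fresh chain, sequences nest: the innermost end_sequence returns only the insns emitted since its matching start, and emission then resumes in the enclosing chain.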

◆ emit_pattern_before_setloc()

static

Insert PATTERN before BEFORE, setting its INSN_LOCATION to LOC. MAKE_RAW indicates how to turn PATTERN into a real insn. INSNP indicates if PATTERN is meant for an INSN as opposed to a JUMP_INSN, CALL_INSN, etc.

References active_insn_p(), emit_pattern_before_noloc(), sequence_stack::first, get_insns(), INSN_LOCATION(), JUMP_TABLE_DATA_P, last, NEXT_INSN(), NULL, NULL_RTX, and PREV_INSN().

Referenced by emit_call_insn_before_setloc(), emit_debug_insn_before_setloc(), emit_insn_before_setloc(), emit_jump_insn_before_setloc(), and emit_pattern_before().

◆ emit_unlikely_jump_insn()

Make an insn of code JUMP_INSN with pattern X, add a REG_BR_PROB note indicating a very unlikely branch probability, and add it to the end of the doubly-linked list.

References add_reg_br_prob_note(), emit_jump_insn(), and profile_probability::very_unlikely().

◆ emit_use()

Emit a use of rvalue X.

References emit_insn(), emit_use(), GET_CODE, and XEXP.

Referenced by do_use_return_reg(), emit_use(), expand_builtin_longjmp(), expand_builtin_nonlocal_goto(), expand_builtin_return(), expand_builtin_setjmp_receiver(), gen_use(), and make_prologue_seq().

◆ end_sequence()

rtx_insn * end_sequence (void)

After emitting to a sequence, restore the previous saved state and return the start of the completed sequence. If the compiler might have deferred popping arguments while generating this sequence, and this sequence will not be immediately inserted into the instruction stream, use do_pending_stack_adjust before calling this function. That will ensure that the deferred pops are inserted into this sequence, and not into some random location in the instruction stream. See INHIBIT_DEFER_POP for more information about deferred popping of arguments.

References sequence_stack::first, free_sequence_stack, get_current_sequence(), get_insns(), insns, sequence_stack::last, sequence_stack::next, set_first_insn(), and set_last_insn().

Referenced by add_test(), asan_clear_shadow(), asan_emit_allocas_unpoison(), asan_emit_stack_protection(), assign_parm_setup_block(), assign_parm_setup_reg(), assign_parm_setup_stack(), assign_parms_unsplit_complex(), attempt_change(), check_and_process_move(), combine_reaching_defs(), combine_var_copies_in_loop_exit(), compare_and_jump_seq(), complete_seq(), computation_cost(), compute_can_copy(), cond_move_process_if_block(), convert_mode_scalar(), curr_insn_transform(), do_remat(), doloop_modify(), dw2_build_landing_pads(), emit_cmp_and_jump_insns(), emit_common_heads_for_components(), emit_common_tails_for_components(), emit_delay_sequence(), emit_inc_dec_insn_before(), emit_initial_value_sets(), emit_input_reload_insns(), emit_move_list(), emit_move_multi_word(), emit_output_reload_insns(), emit_partition_copy(), end_ifcvt_sequence(), expand_absneg_bit(), expand_asm_stmt(), expand_atomic_fetch_op(), expand_binop(), expand_builtin_apply_args(), expand_builtin_int_roundingfn(), expand_builtin_int_roundingfn_2(), expand_builtin_mathfn_3(), expand_builtin_mathfn_ternary(), expand_builtin_return(), expand_builtin_saveregs(), expand_builtin_strlen(), expand_call(), expand_clrsb_using_clz(), expand_cond_expr_using_cmove(), expand_copysign_bit(), expand_ctz(), expand_DIVMOD(), expand_doubleword_clz_ctz_ffs(), expand_doubleword_popcount(), expand_dummy_function_end(), expand_expr_divmod(), expand_expr_real_2(), expand_ffs(), expand_fix(), expand_fixed_convert(), expand_float(), expand_function_end(), expand_gimple_tailcall(), expand_POPCOUNT(), expand_sdiv_pow2(), expand_twoval_binop_libfunc(), expand_unop(), expmed_mult_highpart_optab(), ext_dce_try_optimize_rshift(), find_shift_sequence(), fix_crossing_unconditional_branches(), gen_call_used_regs_seq(), gen_clobber(), gen_cond_trap(), gen_move_insn(), gen_use(), get_arg_pointer_save_area(), get_uncond_jump_length(), hwasan_emit_untag_frame(), hwasan_frame_base(), inherit_in_ebb(), inherit_reload_reg(), 
init_set_costs(), initialize_uninitialized_regs(), inline_string_cmp(), insert_base_initialization(), insert_insn_on_edge(), insert_move_for_subreg(), insert_prologue_epilogue_for_components(), insert_value_copy_on_edge(), insert_var_expansion_initialization(), instantiate_virtual_regs_in_insn(), ira(), lra_process_new_insns(), make_epilogue_seq(), make_prologue_seq(), make_split_prologue_seq(), match_asm_constraints_1(), match_asm_constraints_2(), match_reload(), maybe_optimize_mod_cmp(), maybe_optimize_pow2p_mod_cmp(), noce_convert_multiple_sets(), noce_convert_multiple_sets_1(), noce_emit_cmove(), noce_emit_move_insn(), noce_emit_store_flag(), noce_process_if_block(), noce_try_abs(), noce_try_addcc(), noce_try_cmove(), noce_try_cmove_arith(), noce_try_cond_arith(), noce_try_inverse_constants(), noce_try_minmax(), noce_try_sign_mask(), noce_try_store_flag(), noce_try_store_flag_constants(), noce_try_store_flag_mask(), pop_topmost_sequence(), prepare_copy_insn(), prepare_float_lib_cmp(), prepend_insn_to_edge(), process_addr_reg(), process_address_1(), process_invariant_for_inheritance(), record_store(), remove_inheritance_pseudos(), replace_read(), resolve_shift_zext(), resolve_simple_move(), rtl_lv_add_condition_to_bb(), sjlj_emit_dispatch_table(), sjlj_emit_function_enter(), sjlj_emit_function_exit(), sjlj_mark_call_sites(), split_iv(), thread_prologue_and_epilogue_insns(), try_emit_cmove_seq(), and unroll_loop_runtime_iterations().

◆ expand_rtx()

Read an rtx from the bytecode in SEQ, which was generated by genemit.cc. Replace operand placeholders with the values given in OPERANDS.

◆ find_auto_inc()

Find an RTX_AUTOINC class rtx that matches DATA.

References FOR_EACH_SUBRTX, GET_CODE, GET_RTX_CLASS, RTX_AUTOINC, rtx_equal_p(), and XEXP.

Referenced by try_split().

◆ force_reload_address()

Return a memory reference like MEM, but with the address reloaded into a pseudo register.

References address_reload_context::emit_autoinc(), force_reg(), GET_CODE, GET_MODE, GET_MODE_SIZE(), GET_RTX_CLASS, replace_equiv_address(), RTX_AUTOINC, mem_attrs::size, and XEXP.

◆ gen_blockage()

rtx gen_blockage (void)

Generate an empty ASM_INPUT, which blocks scheduling across this insn and prevents register equivalences from being seen across it.

References gen_rtx_ASM_INPUT, and MEM_VOLATILE_P.

Referenced by anti_adjust_stack_and_probe_stack_clash(), expand_builtin_longjmp(), expand_builtin_nonlocal_goto(), expand_builtin_setjmp_receiver(), expand_function_end(), make_prologue_seq(), and probe_stack_range().

◆ gen_clobber()

Return a sequence of insns to clobber lvalue X.

References emit_clobber(), end_sequence(), and start_sequence().

Referenced by eliminate_regs_in_insn(), and find_reloads().

◆ gen_const_mem()

Generate a MEM referring to non-trapping constant memory.

References gen_rtx_MEM(), MEM_NOTRAP_P, and MEM_READONLY_P.

Referenced by assemble_trampoline_template(), build_constant_desc(), do_tablejump(), and force_const_mem().

◆ gen_const_vec_duplicate()

Generate a vector constant of mode MODE in which every element has value ELT.

References rtx_vector_builder::build().

Referenced by builtin_memset_read_str(), expand_absneg_bit(), expand_vector_broadcast(), gen_const_vector(), gen_rtx_CONST_VECTOR(), gen_vec_duplicate(), relational_result(), and simplify_const_unary_operation().

◆ gen_const_vec_series()

Generate a vector constant of mode MODE in which element I has the value BASE + I * STEP.

References rtx_vector_builder::build(), gcc_assert, GET_MODE_INNER, i, simplify_gen_binary(), and valid_for_const_vector_p().

Referenced by gen_vec_series(), and simplify_context::simplify_binary_operation_1().
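Element by element, gen_const_vec_duplicate and gen_const_vec_series describe simple patterns: every element equal to ELT, or element I equal to BASE + I * STEP. The sketch below expands both with plain integers. The names toy_vec_duplicate and toy_vec_series and the NUNITS count are hypothetical stand-ins for the real rtx constants and vector mode, and the element-mode arithmetic that the real code performs with simplify_gen_binary is replaced here by 64-bit integer arithmetic.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical: like gen_const_vec_duplicate, every one of the NUNITS
// elements has the value ELT.
std::vector<int64_t> toy_vec_duplicate(int64_t elt, unsigned nunits) {
  return std::vector<int64_t>(nunits, elt);
}

// Hypothetical: like gen_const_vec_series, element I has the value
// BASE + I * STEP.
std::vector<int64_t> toy_vec_series(int64_t base, int64_t step,
                                    unsigned nunits) {
  std::vector<int64_t> v;
  v.reserve(nunits);
  for (unsigned i = 0; i < nunits; ++i)
    v.push_back(base + static_cast<int64_t>(i) * step);
  return v;
}
```

The real functions go through rtx_vector_builder rather than materializing every element as this sketch does.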

◆ gen_const_vector()

static

Generate a new vector constant for mode MODE and constant value CONSTANT.

References const_tiny_rtx, DECIMAL_FLOAT_MODE_P, gcc_assert, gen_const_vec_duplicate(), and GET_MODE_INNER.

Referenced by init_emit_once().

◆ gen_frame_mem()

Generate a MEM referring to fixed portions of the frame, e.g., register save areas.

References gen_rtx_MEM(), get_frame_alias_set(), MEM_NOTRAP_P, and set_mem_alias_set().

Referenced by expand_builtin_return_addr().

◆ gen_hard_reg_clobber()

rtx gen_hard_reg_clobber (machine_mode mode, unsigned int regno)

References gen_rtx_REG(), and hard_reg_clobbers.

Referenced by emit_insn_if_valid_for_reload().

◆ gen_highpart()

Like gen_lowpart, but refer to the most significant part of X in mode MODE.

References adjust_address, gcc_assert, GET_MODE, GET_MODE_SIZE(), GET_MODE_UNIT_SIZE, known_eq, known_le, MEM_P, simplify_gen_subreg(), and subreg_highpart_offset().

Referenced by expand_doubleword_clz_ctz_ffs(), expand_expr_real_2(), extract_high_half(), and gen_highpart_mode().

◆ gen_highpart_mode()

Like gen_highpart, but also accept the mode of the EXP operand, in case EXP is a VOIDmode constant.

References exp(), gcc_assert, gen_highpart(), GET_MODE, simplify_gen_subreg(), and subreg_highpart_offset().

◆ gen_int_mode()

rtx gen_int_mode (poly_int64 c, machine_mode mode)

References as_a(), poly_int< NUM_POLY_INT_COEFFS, wide_int >::from(), GEN_INT, GET_MODE_PRECISION(), immed_wide_int_const(), SIGNED, and trunc_int_for_mode().

Referenced by add_args_size_note(), addr_offset_valid_p(), align_dynamic_address(), allocate_dynamic_stack_space(), anti_adjust_stack_and_probe(), asan_clear_shadow(), asan_emit_stack_protection(), assemble_real(), assign_parm_setup_block(), assign_parms(), autoinc_split(), builtin_memset_read_str(), calculate_table_based_CRC(), canonicalize_address_mult(), canonicalize_condition(), cleanup_auto_inc_dec(), combine_set_extension(), combine_simplify_rtx(), convert_mode_scalar(), create_integer_operand(), cselib_hash_rtx(), default_memtag_untagged_pointer(), do_pending_stack_adjust(), do_tablejump(), dw2_asm_output_offset(), address_reload_context::emit_autoinc(), emit_call_1(), emit_library_call_value_1(), emit_move_complex(), emit_move_resolve_push(), emit_push_insn(), emit_store_flag(), emit_store_flag_int(), expand_atomic_test_and_set(), expand_binop(), expand_builtin_memset_args(), expand_call(), expand_ctz(), expand_debug_expr(), expand_divmod(), expand_doubleword_clz_ctz_ffs(), expand_doubleword_mod(), expand_expr_real_1(), expand_expr_real_2(), expand_ffs(), expand_field_assignment(), expand_fix(), expand_HWASAN_CHOOSE_TAG(), expand_sdiv_pow2(), expand_SET_EDOM(), expand_shift_1(), expand_smod_pow2(), expand_stack_vars(), expand_unop(), expand_vector_ubsan_overflow(), expmed_mult_highpart(), extract_left_shift(), find_split_point(), asan_redzone_buffer::flush_redzone_payload(), for_each_inc_dec_find_inc_dec(), force_int_to_mode(), force_to_mode(), gen_common_operation_to_reflect(), gen_int_shift_amount(), get_call_args(), get_dynamic_stack_base(), get_mode_bounds(), get_stored_val(), hwasan_emit_prologue(), hwasan_truncate_to_tag_size(), if_then_else_cond(), immed_double_const(), immed_wide_int_const_1(), inc_for_reload(), pieces_addr::increment_address(), init_caller_save(), init_one_dwarf_reg_size(), init_reload(), init_return_column_size(), insert_const_anchor(), instantiate_virtual_regs_in_insn(), iv_number_of_iterations(), make_compound_operation_int(), 
make_extraction(), make_field_assignment(), maybe_legitimize_operand(), mem_loc_descriptor(), move2add_use_add2_insn(), move2add_use_add3_insn(), multiplier_allowed_in_address_p(), native_decode_vector_rtx(), noce_try_bitop(), noce_try_cmove(), noce_try_store_flag_constants(), optimize_bitfield_assignment_op(), output_constant_pool_2(), plus_constant(), probe_stack_range(), push_block(), reload_cse_move2add(), round_trampoline_addr(), simplify_and_const_int(), simplify_and_const_int_1(), simplify_context::simplify_binary_operation_1(), simplify_compare_const(), simplify_comparison(), simplify_if_then_else(), simplify_set(), simplify_shift_const_1(), simplify_context::simplify_ternary_operation(), simplify_context::simplify_unary_operation_1(), sjlj_mark_call_sites(), split_iv(), store_bit_field_using_insv(), store_constructor(), store_field(), store_one_arg(), try_store_by_multiple_pieces(), unroll_loop_constant_iterations(), unroll_loop_runtime_iterations(), update_reg_equal_equiv_notes(), validate_test_and_branch(), vec_perm_indices_to_rtx(), and widen_leading().
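gen_int_mode carries no description on this page, but its references include trunc_int_for_mode() and GET_MODE_PRECISION(). The sketch below illustrates the general RTL convention behind that pairing, namely that CONST_INT values are kept sign-extended from the mode's precision, so 255 in an 8-bit mode becomes -1. The function name and the PRECISION parameter are hypothetical stand-ins, and special cases handled by the real trunc_int_for_mode (such as BImode) are ignored.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical model of truncating C to a mode of PRECISION bits: keep the
// low PRECISION bits, then sign-extend them back to 64 bits.
int64_t toy_trunc_int_for_precision(int64_t c, unsigned precision) {
  assert(precision >= 1 && precision <= 64);
  if (precision == 64)
    return c;
  uint64_t mask = (uint64_t{1} << precision) - 1;
  uint64_t low = static_cast<uint64_t>(c) & mask;
  uint64_t sign_bit = uint64_t{1} << (precision - 1);
  // XOR-then-subtract sign extension avoids shifting negative values.
  return static_cast<int64_t>((low ^ sign_bit) - sign_bit);
}
```

Keeping a single canonical (sign-extended) form is what lets equal constants in the same mode compare equal.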

◆ gen_int_shift_amount()

rtx gen_int_shift_amount (machine_mode, poly_int64 value)

Return a constant shift amount for shifting a value of mode MODE by VALUE bits.

References gen_int_mode(), int_mode_for_size(), and opt_mode< T >::require().

Referenced by asan_emit_stack_protection(), change_zero_ext(), expand_binop(), expand_doubleword_mult(), expand_shift(), expand_shift_1(), expand_smod_pow2(), expand_superword_shift(), expand_unop(), expand_vec_perm_var(), find_shift_sequence(), find_split_point(), fold_rtx(), force_int_to_mode(), init_expmed_one_mode(), shift_amt_for_vec_perm_mask(), shift_cost(), shift_return_value(), simplify_context::simplify_binary_operation_1(), simplify_shift_const(), simplify_shift_const_1(), simplify_context::simplify_unary_operation_1(), and try_combine().

◆ gen_label_rtx()

rtx_code_label * gen_label_rtx (void)

Return a newly created CODE_LABEL rtx with a unique label number.

References as_a(), label_num, NULL, and NULL_RTX.

Referenced by allocate_dynamic_stack_space(), anti_adjust_stack_and_probe(), anti_adjust_stack_and_probe_stack_clash(), asan_clear_shadow(), asan_emit_stack_protection(), block_label(), compare_by_pieces(), do_compare_rtx_and_jump(), do_jump(), do_jump_1(), do_jump_by_parts_equality_rtx(), do_jump_by_parts_greater_rtx(), do_jump_by_parts_zero_rtx(), dw2_build_landing_pads(), dw2_fix_up_crossing_landing_pad(), emit_block_cmp_via_loop(), emit_block_move_via_loop(), emit_block_move_via_oriented_loop(), emit_case_dispatch_table(), emit_stack_clash_protection_probe_loop_start(), emit_store_flag_force(), expand_abs(), expand_addsub_overflow(), expand_arith_overflow_result_store(), expand_asm_stmt(), expand_builtin_atomic_compare_exchange(), expand_builtin_eh_return(), expand_builtin_strub_leave(), expand_builtin_strub_update(), expand_compare_and_swap_loop(), expand_copysign_absneg(), expand_divmod(), expand_doubleword_clz_ctz_ffs(), expand_doubleword_shift(), expand_eh_return(), expand_expr_real_1(), expand_expr_real_2(), expand_ffs(), expand_fix(), expand_float(), expand_function_start(), expand_gimple_tailcall(), expand_mul_overflow(), expand_naked_return(), expand_neg_overflow(), expand_sdiv_pow2(), expand_sjlj_dispatch_table(), expand_smod_pow2(), expand_vector_ubsan_overflow(), find_end_label(), fix_crossing_conditional_branches(), get_label_before(), get_uncond_jump_length(), inline_string_cmp(), label_rtx(), label_rtx_for_bb(), prepare_call_address(), probe_stack_range(), sjlj_build_landing_pads(), sjlj_emit_dispatch_table(), sjlj_fix_up_crossing_landing_pad(), stack_protect_epilogue(), store_constructor(), store_expr(), and try_store_by_multiple_pieces().

◆ gen_lowpart_common()

Return a value representing some low-order bits of X, where the number of low-order bits is given by MODE. Note that no conversion is done between floating-point and fixed-point values, rather, the bit representation is returned. This function handles the cases in common between gen_lowpart, below, and two variants in cse.cc and combine.cc. These are the cases that can be safely handled at all points in the compilation. If this is not a case we can handle, return 0.

References CONST_DOUBLE_AS_FLOAT_P, CONST_INT_P, CONST_POLY_INT_P, CONST_SCALAR_INT_P, gcc_assert, gen_lowpart_common(), GET_CODE, GET_MODE, GET_MODE_SIZE(), HOST_BITS_PER_DOUBLE_INT, HOST_BITS_PER_WIDE_INT, int_mode_for_size(), is_a(), known_le, lowpart_subreg(), maybe_gt, REG_P, REGMODE_NATURAL_SIZE, opt_mode< T >::require(), SCALAR_FLOAT_MODE_P, and XEXP.

Referenced by combine_simplify_rtx(), do_output_reload(), expand_expr_real_1(), extract_low_bits(), force_to_mode(), gen_lowpart_common(), gen_lowpart_for_combine(), gen_lowpart_general(), gen_lowpart_if_possible(), move2add_use_add2_insn(), store_split_bit_field(), and strip_paradoxical_subreg().
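The "no conversion" rule for gen_lowpart_common means that a low part is a slice of the bit pattern, never a numeric conversion. This C++ sketch shows the two situations with ordinary integers and doubles. The toy_ function names are hypothetical, and the floating-point case assumes IEEE 754 binary64, where 1.0 is encoded as 0x3FF0000000000000.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Hypothetical: the low part of a 64-bit value in a 32-bit mode keeps its
// least-significant 32 bits.
uint32_t toy_lowpart_32_of_64(uint64_t x) {
  return static_cast<uint32_t>(x);
}

// Hypothetical: viewing a double in a 64-bit integer mode yields its bit
// pattern, not its numeric value (1.0 does not become 1).
uint64_t toy_bits_of_double(double d) {
  static_assert(sizeof(double) == sizeof(uint64_t), "expects 64-bit double");
  uint64_t bits;
  std::memcpy(&bits, &d, sizeof bits);  // reinterpret the bytes, no conversion
  return bits;
}
```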

◆ gen_lowpart_SUBREG()

Generate a SUBREG representing the least-significant part of REG if MODE is smaller than the mode of REG; otherwise, generate a paradoxical SUBREG.

References gen_rtx_SUBREG(), GET_MODE, and subreg_lowpart_offset().

Referenced by assign_parm_setup_block(), assign_parms_unsplit_complex(), change_zero_ext(), convert_wider_int_to_float(), emit_input_reload_insns(), emit_spill_move(), expand_call(), expand_expr_real_1(), extract_bit_field_using_extv(), gen_lowpart_if_possible(), precompute_arguments(), reverse_op(), simplify_operand_subreg(), and store_bit_field_using_insv().
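gen_lowpart_SUBREG relies on subreg_lowpart_offset(), and gen_highpart on subreg_highpart_offset(), to choose the SUBREG byte offset. The sketch below encodes our understanding of those offsets for a target whose byte and word endianness agree (both big or both little); the function names are hypothetical, sizes are in bytes, and mixed-endian targets are not modeled.

```cpp
#include <cassert>

// Hypothetical: byte offset of the least-significant OUTER bytes within an
// INNER-byte value. Paradoxical (OUTER > INNER) subregs use offset 0.
unsigned toy_lowpart_offset(unsigned outer, unsigned inner, bool big_endian) {
  if (outer >= inner)
    return 0;
  return big_endian ? inner - outer : 0;
}

// Hypothetical: byte offset of the most-significant OUTER bytes within an
// INNER-byte value.
unsigned toy_highpart_offset(unsigned outer, unsigned inner, bool big_endian) {
  assert(outer <= inner);
  return big_endian ? 0 : inner - outer;
}
```

For example, the low 4 bytes of an 8-byte register sit at offset 0 on a little-endian target but at offset 4 on a big-endian one, and the high part is the mirror image.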

◆ gen_raw_REG()

rtx gen_raw_REG (machine_mode mode, unsigned int regno)

Generate a new REG rtx. Make sure ORIGINAL_REGNO is set properly, and don't attempt to share with the various global pieces of rtl (such as frame_pointer_rtx).

References init_raw_REG(), MEM_STAT_INFO, and rtx_alloc().

Referenced by addr_for_mem_ref(), addr_offset_valid_p(), adjust_insn(), assign_spill_hard_regs(), can_widen_mult_without_libcall(), tree_switch_conversion::bit_test_cluster::emit(), expand_debug_parm_decl(), expand_expr_real_1(), expand_mult(), gen_reg_rtx(), gen_rtx_REG(), gen_rtx_REG_offset(), get_address_cost_ainc(), init_emit_regs(), init_expmed(), init_expr_target(), init_set_costs(), lshift_cheap_p(), make_decl_rtl(), maybe_mode_change(), mult_by_coeff_cost(), multiplier_allowed_in_address_p(), optimize_range_tests_to_bit_test(), prepare_decl_rtl(), produce_memory_decl_rtl(), regrename_do_replace(), try_combine(), and vt_add_function_parameter().

◆ gen_reg_rtx()

rtx gen_reg_rtx (machine_mode mode)

Generate a REG rtx for a new pseudo register of mode MODE. This pseudo is assigned the next sequential register number.

References can_create_pseudo_p, crtl, emit(), gcc_assert, gen_raw_REG(), gen_reg_rtx(), generating_concat_p, GET_MODE_ALIGNMENT, GET_MODE_CLASS, GET_MODE_INNER, min_align(), MINIMUM_ALIGNMENT, NULL, reg_rtx_no, regno_reg_rtx, and SUPPORTS_STACK_ALIGNMENT.

Referenced by allocate_basic_variable(), allocate_dynamic_stack_space(), asan_emit_stack_protection(), assign_parm_remove_parallels(), assign_parm_setup_block(), assign_parm_setup_reg(), assign_parm_setup_stack(), assign_temp(), avoid_likely_spilled_reg(), builtin_memset_gen_str(), builtin_memset_read_str(), calculate_table_based_CRC(), compare_by_pieces(), compress_float_constant(), convert_mode_scalar(), convert_modes(), copy_blkmode_to_reg(), copy_to_mode_reg(), copy_to_reg(), copy_to_suggested_reg(), do_compare_and_jump(), do_jump_by_parts_zero_rtx(), do_store_flag(), do_tablejump(), doloop_optimize(), emit_block_cmp_via_loop(), emit_block_move_via_loop(), emit_conditional_add(), emit_conditional_move(), emit_conditional_move_1(), emit_conditional_neg_or_complement(), emit_cstore(), emit_group_load_1(), emit_group_store(), emit_libcall_block_1(), emit_library_call_value_1(), emit_push_insn(), emit_stack_save(), emit_store_flag_1(), emit_store_flag_force(), entry_register(), expand_abs(), expand_absneg_bit(), expand_addsub_overflow(), expand_asm_stmt(), expand_atomic_compare_and_swap(), expand_atomic_fetch_op(), expand_atomic_load(), expand_atomic_test_and_set(), expand_binop(), expand_builtin(), expand_builtin_apply(), expand_builtin_cexpi(), expand_builtin_crc_table_based(), expand_builtin_eh_copy_values(), expand_builtin_eh_filter(), expand_builtin_eh_pointer(), expand_builtin_feclear_feraise_except(), expand_builtin_fegetround(), expand_builtin_goacc_parlevel_id_size(), expand_builtin_int_roundingfn(), expand_builtin_int_roundingfn_2(), expand_builtin_issignaling(), expand_builtin_mathfn_3(), expand_builtin_mathfn_ternary(), expand_builtin_powi(), expand_builtin_signbit(), expand_builtin_sincos(), expand_builtin_stpcpy_1(), expand_builtin_strlen(), expand_builtin_thread_pointer(), expand_call(), expand_ccmp_expr(), expand_compare_and_swap_loop(), expand_copysign_absneg(), expand_copysign_bit(), expand_crc_optab_fn(), expand_crc_table_based(), 
expand_DIVMOD(), expand_divmod(), expand_doubleword_bswap(), expand_doubleword_clz_ctz_ffs(), expand_doubleword_mod(), expand_doubleword_popcount(), expand_doubleword_shift_condmove(), expand_expr_real_1(), expand_expr_real_2(), expand_fix(), expand_float(), expand_function_end(), expand_function_start(), expand_gimple_basic_block(), expand_GOMP_SIMT_ENTER_ALLOC(), expand_ifn_atomic_bit_test_and(), expand_ifn_atomic_op_fetch_cmp_0(), expand_mul_overflow(), expand_mult_highpart(), expand_neg_overflow(), expand_one_error_var(), expand_one_register_var(), expand_one_ssa_partition(), expand_parity(), expand_reversed_crc_table_based(), expand_sdiv_pow2(), expand_sfix_optab(), expand_single_bit_test(), expand_smod_pow2(), expand_speculation_safe_value(), expand_stack_vars(), expand_twoval_binop(), expand_twoval_unop(), expand_UADDC(), expand_ubsan_result_store(), expand_unop(), expand_used_vars(), expand_var_during_unrolling(), expand_vec_perm_const(), expand_vec_perm_var(), expand_vec_set_optab_fn(), expand_vector_broadcast(), expand_vector_ubsan_overflow(), extract_bit_field_1(), extract_bit_field_using_extv(), extract_integral_bit_field(), find_shift_sequence(), fix_crossing_unconditional_branches(), force_not_mem(), force_operand(), force_reg(), gen_group_rtx(), gen_reg_rtx(), gen_reg_rtx_and_attrs(), gen_reg_rtx_offset(), get_dynamic_stack_base(), get_hard_reg_initial_val(), address_reload_context::get_reload_reg(), get_scratch_reg(), get_temp_reg(), hwasan_emit_prologue(), inline_string_cmp(), ira_create_new_reg(), load_register_parameters(), lra(), lra_create_new_reg_with_unique_value(), make_more_copies(), make_safe_from(), match_asm_constraints_2(), maybe_emit_compare_and_swap_exchange_loop(), maybe_emit_group_store(), maybe_legitimize_operand(), maybe_optimize_fetch_op(), noce_convert_multiple_sets_1(), noce_emit_cmove(), noce_process_if_block(), noce_try_addcc(), noce_try_cmove_arith(), noce_try_cond_arith(), noce_try_inverse_constants(), noce_try_sign_mask(), 
noce_try_store_flag_constants(), noce_try_store_flag_mask(), prepare_cmp_insn(), prepare_float_lib_cmp(), resolve_simple_move(), round_trampoline_addr(), split_iv(), store_bit_field_using_insv(), store_constructor(), store_field(), store_integral_bit_field(), store_one_arg(), store_unaligned_arguments_into_pseudos(), unroll_loop_runtime_iterations(), widen_bswap(), widen_leading(), and widen_operand().

◆ gen_reg_rtx_and_attrs()

Generate a REG rtx for a new pseudo register, copying the mode and attributes from X.

References gen_reg_rtx(), GET_MODE, and set_reg_attrs_from_value().

Referenced by build_store_vectors(), delete_store(), hoist_code(), move_invariant_reg(), and pre_delete().

◆ gen_reg_rtx_offset()

Generate a new pseudo-register with the same attributes as REG, but with OFFSET added to the REG_OFFSET.

References gen_reg_rtx(), and update_reg_offset().

Referenced by decompose_register().
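A minimal sketch of the gen_reg_rtx_offset() contract follows. Everything in it is hypothetical. ToyReg and ToyRegAttrs are simplified stand-ins for a REG rtx and its reg_attrs, the mode is a plain int, and toy_next_pseudo replaces GCC's pseudo allocator. The real function goes through update_reg_offset(), which shares interned reg_attrs instead of copying them by value.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for GCC's reg_attrs: the variable a register
// belongs to and the register's byte offset within that variable.
struct ToyRegAttrs {
  const char *decl;     // plays the role of REG_EXPR
  std::int64_t offset;  // plays the role of REG_OFFSET
};

// Hypothetical stand-in for a REG rtx.
struct ToyReg {
  int mode;             // plays the role of machine_mode
  unsigned regno;       // register number
  ToyRegAttrs attrs;
};

static unsigned toy_next_pseudo = 100;  // arbitrary first pseudo number

// Create a fresh pseudo of MODE that copies REG's attributes, then add
// OFFSET to the recorded offset. REG itself is left unchanged.
ToyReg toy_gen_reg_rtx_offset(const ToyReg &reg, int mode, std::int64_t offset) {
  ToyReg fresh{mode, toy_next_pseudo++, reg.attrs};
  fresh.attrs.offset += offset;
  return fresh;
}
```

For a register whose attributes record offset 8, passing OFFSET 4 yields a new pseudo whose recorded offset is 12, while the original register keeps offset 8.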

◆ gen_rtvec()

| rtvec gen_rtvec (int n, ...) |

Create an rtvec and store within it the RTXen passed as arguments.

References rtvec_def::elem, i, NULL_RTVEC, and rtvec_alloc().

Referenced by combine_reg_notes(), convert_memory_address_addr_space_1(), emit_insn_if_valid_for_reload(), expand_asm_memory_blockage(), simplify_context::simplify_gen_vec_select(), try_combine(), and try_validate_parallel().
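The variadic pattern behind gen_rtvec() can be sketched with `<cstdarg>`. ToyRtx and ToyRtvec are hypothetical stand-ins: GCC allocates rtvecs with rtvec_alloc() on its garbage-collected heap, whereas this sketch uses `new` and leaves freeing to the caller. `nullptr` plays the role of NULL_RTVEC for the N == 0 case.

```cpp
#include <cassert>
#include <cstdarg>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins: an "rtx" is an opaque object, and an rtvec is a
// heap-allocated vector of pointers to them.
struct ToyRtx { int code; };
using ToyRtvec = std::vector<ToyRtx *>;

// Collect N ToyRtx * arguments into a new vector. As with NULL_RTVEC,
// an empty request returns the null vector instead of allocating.
ToyRtvec *toy_gen_rtvec(int n, ...) {
  assert(n >= 0);
  if (n == 0)
    return nullptr;

  ToyRtvec *vec = new ToyRtvec(static_cast<std::size_t>(n));
  va_list p;
  va_start(p, n);
  for (int i = 0; i < n; i++)
    (*vec)[i] = va_arg(p, ToyRtx *);  // each argument must really be a ToyRtx *
  va_end(p);
  return vec;
}
```

Because the arguments travel through `va_arg`, nothing checks their count or type against N; the caller is responsible for passing exactly N pointers.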

◆ gen_rtvec_v() [1/2]

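Create an rtvec holding the N elements of an array of RTXen, returning NULL_RTVEC rather than allocating an empty rtvec when N is zero.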

References rtvec_def::elem, i, NULL_RTVEC, and rtvec_alloc().

Referenced by copy_rtx_if_shared_1(), eliminate_regs_1(), emit_case_dispatch_table(), emit_note_insn_var_location(), gen_group_rtx(), lra_eliminate_regs_1(), and result_vector().

◆ gen_rtvec_v() [2/2]

References rtvec_def::elem, i, NULL_RTVEC, and rtvec_alloc().

◆ gen_rtx_CONST_INT()

| rtx gen_rtx_CONST_INT (machine_mode mode, HOST_WIDE_INT arg) |

References const_int_htab, const_int_rtx, const_true_rtx, MAX_SAVED_CONST_INT, and STORE_FLAG_VALUE.

Referenced by anti_adjust_stack_and_probe_stack_clash(), function_reader::consolidate_singletons(), and init_emit_once().

◆ gen_rtx_CONST_VECTOR()

Generate a vector like gen_rtx_raw_CONST_VECTOR, but use the shared zero vector when all elements are zero and the shared one vector when all elements are one.

References rtx_vector_builder::build(), gcc_assert, gen_const_vec_duplicate(), GET_MODE_NUNITS(), GET_NUM_ELEM, i, known_eq, rtvec_all_equal_p(), and RTVEC_ELT.

Referenced by function_reader::consolidate_singletons(), simplify_context::simplify_binary_operation_1(), simplify_const_binary_operation(), and simplify_context::simplify_ternary_operation().
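A toy model of the canonicalization described above, assuming a single hypothetical 4-element integer mode. All-zero and all-one requests return pointers to shared singletons, so in the toy such vectors can be compared by identity; anything else gets a fresh allocation. In GCC the all-equal case is delegated to gen_const_vec_duplicate() (see References), and the shared constants exist per mode.

```cpp
#include <cassert>
#include <vector>

// Hypothetical constant vector: just a list of integer elements.
struct ToyConstVector { std::vector<long> elts; };

// Hypothetical shared singletons for the one 4-element mode modelled here.
static ToyConstVector toy_zero_v4{{0, 0, 0, 0}};
static ToyConstVector toy_one_v4{{1, 1, 1, 1}};

// Return the shared zero/one vector when every element is 0 (or every
// element is 1); otherwise allocate a new vector holding ELTS.
const ToyConstVector *toy_gen_const_vector(const std::vector<long> &elts) {
  assert(elts.size() == toy_zero_v4.elts.size());  // count must match the mode
  bool all_zero = true, all_one = true;
  for (long e : elts) {
    all_zero = all_zero && e == 0;
    all_one = all_one && e == 1;
  }
  if (all_zero)
    return &toy_zero_v4;
  if (all_one)
    return &toy_one_v4;
  return new ToyConstVector{elts};
}
```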

◆ gen_rtx_EXPR_LIST()

| rtx_expr_list * gen_rtx_EXPR_LIST (machine_mode mode, rtx expr, rtx expr_list) |

There are some RTL codes that require special attention; the generation functions do the raw handling. If you add to this list, modify special_rtx in gengenrtl.cc as well.

References as_a().

Referenced by alloc_EXPR_LIST(), assemble_external_libcall(), clobber_reg_mode(), emit_call_1(), emit_library_call_value_1(), emit_note_insn_var_location(), expand_call(), gen_group_rtx(), prepare_call_arguments(), use_reg_mode(), and vt_add_function_parameter().

◆ gen_rtx_INSN()

| rtx_insn * gen_rtx_INSN (machine_mode mode, rtx_insn *prev_insn, rtx_insn *next_insn, basic_block bb, rtx pattern, location_t location, int code, rtx reg_notes) |

References as_a(), and next_insn().

Referenced by init_caller_save(), init_emit_once(), setup_prohibited_mode_move_regs(), and try_combine().

◆ gen_rtx_INSN_LIST()

| rtx_insn_list * gen_rtx_INSN_LIST (machine_mode mode, rtx insn, rtx insn_list) |

References as_a().

Referenced by add_next_usage_insn(), add_store_equivs(), alloc_INSN_LIST(), combine_and_move_insns(), emit_move_list(), expand_builtin(), expand_label(), ira_update_equiv_info_by_shuffle_insn(), no_equiv(), and update_equiv_regs().

◆ gen_rtx_MEM()

References MEM_ATTRS.

Referenced by asan_emit_stack_protection(), assemble_asm(), assign_parm_find_stack_rtl(), assign_parm_setup_block(), assign_parm_setup_reg(), assign_parms(), assign_stack_local_1(), assign_stack_temp_for_type(), calculate_table_based_CRC(), change_address(), change_address_1(), compute_argument_addresses(), cselib_init(), decode_addr_const(), default_static_chain(), emit_call_1(), emit_library_call_value_1(), emit_move_change_mode(), emit_move_complex_push(), emit_push_insn(), emit_stack_probe(), emit_stack_restore(), expand_asm_loc(), expand_asm_memory_blockage(), expand_asm_reg_clobber_mem_blockage(), expand_asm_stmt(), expand_assignment(), expand_builtin_apply(), expand_builtin_atomic_compare_exchange(), expand_builtin_init_descriptor(), expand_builtin_init_dwarf_reg_sizes(), expand_builtin_init_trampoline(), expand_builtin_longjmp(), expand_builtin_nonlocal_goto(), expand_builtin_return(), expand_builtin_setjmp_setup(), expand_builtin_strlen(), expand_builtin_strub_leave(), expand_builtin_update_setjmp_buf(), expand_builtin_va_copy(), expand_call(), expand_debug_expr(), expand_expr_real_1(), expand_function_start(), expand_one_error_var(), expand_one_stack_var_at(), expand_SET_EDOM(), gen_const_mem(), gen_frame_mem(), gen_tmp_stack_mem(), gen_tombstone(), get_builtin_sync_mem(), get_group_info(), get_memory_rtx(), get_spill_slot_decl(), init_caller_save(), init_expr_target(), init_fake_stack_mems(), init_reload(), init_set_costs(), initialize_argument_information(), make_decl_rtl(), noce_try_cmove_arith(), prepare_call_address(), prepare_call_arguments(), produce_memory_decl_rtl(), replace_pseudos_in(), rtl_for_decl_init(), rtl_for_decl_location(), scan_insn(), store_one_arg(), and vt_add_function_parameter().

◆ gen_rtx_REG()

| rtx gen_rtx_REG (machine_mode mode, unsigned int regno) |

References arg_pointer_rtx, cfun, fixed_regs, frame_pointer_needed, frame_pointer_rtx, gen_raw_REG(), HARD_FRAME_POINTER_IS_FRAME_POINTER, HARD_FRAME_POINTER_REGNUM, hard_frame_pointer_rtx, INVALID_REGNUM, lra_in_progress, PIC_OFFSET_TABLE_REGNUM, pic_offset_table_rtx, reg_raw_mode, regno_reg_rtx, reload_completed, reload_in_progress, return_address_pointer_rtx, and stack_pointer_rtx.

Referenced by assign_parm_setup_block(), can_assign_to_reg_without_clobbers_p(), can_eliminate_compare(), can_reload_into(), canon_reg(), change_zero_ext(), choose_reload_regs(), combine_reaching_defs(), combine_reloads(), combine_set_extension(), compute_can_copy(), cse_cc_succs(), cse_condition_code_reg(), cse_insn(), default_static_chain(), default_zero_call_used_regs(), do_output_reload(), do_reload(), emit_library_call_value_1(), emit_push_insn(), expand_asm_stmt(), expand_builtin_apply(), expand_builtin_apply_args_1(), expand_builtin_init_dwarf_reg_sizes(), expand_builtin_return(), expand_call(), expand_dw2_landing_pad_for_region(), expand_function_end(), expand_vector_mult(), find_and_remove_re(), find_dummy_reload(), find_reloads_address_1(), gen_hard_reg_clobber(), gen_reload(), gen_reload_chain_without_interm_reg_p(), get_hard_reg_initial_val(), init_caller_save(), init_elim_table(), init_elim_table(), init_emit_regs(), init_expr_target(), init_lower_subreg(), init_reload(), insert_restore(), insert_save(), invalidate_from_sets_and_clobbers(), load_register_parameters(), maybe_memory_address_addr_space_p(), maybe_select_cc_mode(), move2add_use_add3_insn(), move_block_from_reg(), move_block_to_reg(), peep2_find_free_register(), prefer_and_bit_test(), push_reload(), reload_adjust_reg_for_mode(), reload_combine_recognize_pattern(), reload_cse_regs_1(), reload_cse_simplify_operands(), reload_cse_simplify_set(), replace_reg_with_saved_mem(), result_vector(), set_reload_reg(), setup_prohibited_mode_move_regs(), simplify_set(), split_reg(), transform_ifelse(), try_combine(), try_eliminate_compare(), vt_add_function_parameter(), and zcur_select_mode_rtx().

◆ gen_rtx_REG_offset()

| rtx gen_rtx_REG_offset (rtx reg, machine_mode mode, unsigned int regno, poly_int64 offset) |

Generate a register of mode MODE and number REGNO with the same attributes as REG, but with OFFSET added to the REG_OFFSET.

References gen_raw_REG(), and update_reg_offset().

Referenced by alter_subreg(), expand_debug_parm_decl(), simplify_context::simplify_subreg(), var_lowpart(), and vt_add_function_parameter().

◆ gen_rtx_SUBREG()

| rtx gen_rtx_SUBREG (machine_mode mode, rtx reg, poly_uint64 offset) |

References gcc_assert, GET_MODE, and validate_subreg().

Referenced by curr_insn_transform(), eliminate_regs_1(), equiv_constant(), expand_debug_expr(), extract_low_bits(), find_reloads(), gen_lowpart_for_combine(), gen_lowpart_SUBREG(), get_matching_reload_reg_subreg(), lra_substitute_pseudo(), make_extraction(), noce_emit_cmove(), simplify_context::simplify_gen_subreg(), simplify_context::simplify_subreg(), store_bit_field_using_insv(), and store_integral_bit_field().

◆ gen_rtx_VAR_LOCATION()

| rtx gen_rtx_VAR_LOCATION (machine_mode mode, tree decl, rtx loc, enum var_init_status status) |

◆ gen_tmp_stack_mem()

Generate a MEM referring to a temporary use of the stack that is not part of the fixed stack frame, for example a value pushed by a target splitter.

References cfun, gen_rtx_MEM(), get_frame_alias_set(), MEM_NOTRAP_P, and set_mem_alias_set().

◆ gen_use()

Return a sequence of insns to use rvalue X.

References emit_use(), end_sequence(), and start_sequence().

Referenced by rtl_flow_call_edges_add().
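Per its References, gen_use() brackets a single emit_use() between start_sequence() and end_sequence(), so the new insn lands in a detached sequence rather than in the insn stream being built. The sketch below models that bracket with a hypothetical stack of string lists; the toy_* names and the textual insn form are illustrative only.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical model: an insn is its printed text and a sequence is a list
// of insns. Element 0 of the stack is the function body; starting a
// sequence pushes a fresh, empty list that later emits go to.
using ToySeq = std::vector<std::string>;
static std::vector<ToySeq> toy_seq_stack(1);

void toy_start_sequence() { toy_seq_stack.emplace_back(); }

void toy_emit(const std::string &insn) { toy_seq_stack.back().push_back(insn); }

// Pop the innermost sequence and return its insns; emission resumes in the
// enclosing sequence.
ToySeq toy_end_sequence() {
  assert(toy_seq_stack.size() > 1);  // the function body is never popped
  ToySeq seq = std::move(toy_seq_stack.back());
  toy_seq_stack.pop_back();
  return seq;
}

// Emit a USE of X into a detached sequence and return that sequence,
// leaving the enclosing insn stream untouched.
ToySeq toy_gen_use(const std::string &x) {
  toy_start_sequence();
  toy_emit("(use " + x + ")");
  return toy_end_sequence();
}
```

The caller can then insert the returned sequence wherever it needs it, rather than at the current end of the insn stream.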

◆ gen_vec_duplicate()

Return a vector rtx of mode MODE in which every element has value X. The result will be a constant if X is constant.

References gen_const_vec_duplicate(), and valid_for_const_vector_p().

Referenced by gen_vec_series(), simplify_context::simplify_binary_operation_1(), simplify_context::simplify_subreg(), and simplify_context::simplify_unary_operation_1().
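A toy illustration of the two outcomes described above. A constant X produces a constant vector, and anything else produces a VEC_DUPLICATE expression. ToyOperand and the printed forms are hypothetical and do not reproduce GCC's RTL dump syntax; the is_constant flag stands in for the valid_for_const_vector_p() check listed in References.

```cpp
#include <cassert>
#include <string>

// Hypothetical operand: TEXT is its printed form, IS_CONSTANT says whether
// it may appear as an element of a constant vector.
struct ToyOperand {
  std::string text;
  bool is_constant;
};

// Broadcast X across a vector of mode MODE: a constant X yields a constant
// vector, anything else yields a vec_duplicate expression.
std::string toy_gen_vec_duplicate(const std::string &mode, const ToyOperand &x) {
  if (x.is_constant)
    return "(const_vector:" + mode + " [" + x.text + " repeated])";
  return "(vec_duplicate:" + mode + " " + x.text + ")";
}
```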

◆ gen_vec_series()

Generate a vector of mode MODE in which element I has the value BASE + I * STEP. The result will be a constant if BASE and STEP are both constants.

References const0_rtx, gen_const_vec_series(), gen_vec_duplicate(), and valid_for_const_vector_p().

Referenced by simplify_context::simplify_binary_operation_series(), and simplify_context::simplify_unary_operation_1().
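The element rule BASE + I * STEP can be checked with plain integers. This sketch is hypothetical: the mode is reduced to an element count and the operands to `long`, so it shows only the arithmetic, not the constant/non-constant split or the rtx construction. The References list (const0_rtx, gen_vec_duplicate()) suggests a zero STEP is treated as a duplicate of BASE, which the arithmetic reproduces here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical model: NUNITS stands in for the vector mode's element count,
// and BASE/STEP are plain integers rather than rtx operands.
std::vector<long> toy_vec_series(std::size_t nunits, long base, long step) {
  std::vector<long> v(nunits);
  for (std::size_t i = 0; i < nunits; i++)
    v[i] = base + static_cast<long>(i) * step;  // element I = BASE + I * STEP
  return v;
}
```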

◆ get_first_label_num()

| int get_first_label_num (void) |

Return the first label number used in this function (if any were used).

References first_label_num.

Referenced by compute_alignments(), init_eliminable_invariants(), and reload_combine().

◆ get_first_nonnote_insn()

| rtx_insn * get_first_nonnote_insn (void) |

Return the first nonnote insn emitted in the current sequence or the current function. This routine looks inside SEQUENCEs.

References as_a(), GET_CODE, get_insns(), next_insn(), NONJUMP_INSN_P, NOTE_P, and PATTERN().
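A sketch of the walk under hypothetical types. ToyInsn models a chain entry that is a NOTE, an ordinary insn, or a SEQUENCE, and the chain is a `std::vector` instead of GCC's doubly-linked list. Landing on a SEQUENCE continues with its first member, which is what "looks inside SEQUENCEs" refers to.

```cpp
#include <cassert>
#include <vector>

// Hypothetical chain entry: a NOTE, an ordinary insn, or a SEQUENCE that
// bundles several inner insns (GCC uses SEQUENCEs for filled delay slots).
struct ToyInsn {
  enum Kind { NOTE, INSN, SEQUENCE } kind;
  int uid;
  std::vector<ToyInsn *> inner;  // members of a SEQUENCE; empty otherwise
};

// When the walk lands on a SEQUENCE, continue with its first member,
// much as GCC's next_insn() does.
static ToyInsn *toy_enter_sequence(ToyInsn *insn) {
  if (insn->kind == ToyInsn::SEQUENCE)
    return insn->inner.at(0);
  return insn;
}

// Return the first insn in CHAIN that is not a NOTE, looking inside
// SEQUENCEs, or nullptr if the chain holds only notes.
ToyInsn *toy_get_first_nonnote_insn(const std::vector<ToyInsn *> &chain) {
  for (ToyInsn *insn : chain) {
    insn = toy_enter_sequence(insn);
    if (insn->kind != ToyInsn::NOTE)
      return insn;
  }
  return nullptr;
}
```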

◆ get_last_insn_anywhere()

| rtx_insn * get_last_insn_anywhere (void) |

Emission of insns (adding them to the doubly-linked list).

Return the last insn emitted, even if it belongs to an outer sequence that is currently pushed on the sequence stack.

References get_current_sequence(), sequence_stack::last, and sequence_stack::next.
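The outward search through pushed sequences can be sketched with a hypothetical linked stack whose fields mirror the sequence_stack::last and sequence_stack::next members listed above. ToyInsn and ToySequenceStack are illustrative names only.

```cpp
#include <cassert>

// Hypothetical insn: only its identity matters here.
struct ToyInsn { int uid; };

// Hypothetical sequence_stack entry: the last insn of one sequence and a
// link to the enclosing (pushed) sequence.
struct ToySequenceStack {
  ToyInsn *last;           // last insn emitted in this sequence, or nullptr
  ToySequenceStack *next;  // enclosing sequence, or nullptr
};

// Walk from the innermost sequence outwards and return the first non-null
// LAST, so an insn is found even if it lives in an outer, pushed sequence.
ToyInsn *toy_get_last_insn_anywhere(ToySequenceStack *current) {
  for (ToySequenceStack *seq = current; seq; seq = seq->next)
    if (seq->last)
      return seq->last;
  return nullptr;
}
```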

◆ get_last_nonnote_insn()

| rtx_insn * get_last_nonnote_insn (void) |

Return the last nonnote insn emitted in the current sequence or the current function. This routine looks inside SEQUENCEs.

References dyn_cast(), get_last_insn(), NONJUMP_INSN_P, NOTE_P, PATTERN(), and previous_insn().

◆ get_max_insn_count()

| int get_max_insn_count (void) |

Return the number of actual (non-debug) insns emitted in this function.

References cur_debug_insn_uid, and cur_insn_uid.

Referenced by alloc_hash_table(), and alloc_hash_table().

◆ get_mem_align_offset()

| int get_mem_align_offset (rtx mem, unsigned int align) |

Return OFFSET, where 0 <= OFFSET < ALIGN / BITS_PER_UNIT, such that XEXP (MEM, 0) - OFFSET is known to be aligned to ALIGN bits; return -1 if no such OFFSET is known.

References component_ref_field_offset(), DECL_ALIGN, DECL_FIELD_BIT_OFFSET, DECL_FIELD_CONTEXT, DECL_P, gcc_assert, INDIRECT_REF_P, MEM_EXPR, MEM_OFFSET, MEM_OFFSET_KNOWN_P, MEM_P, NULL_TREE, poly_int_tree_p(), TREE_CODE, tree_fits_uhwi_p(), TREE_OPERAND, tree_to_uhwi(), TREE_TYPE, and TYPE_ALIGN.
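Written as a congruence, with U the alignment in bytes, the contract reads as follows. The numbers in the second line are a hypothetical example: a MEM 12 bytes into a decl whose alignment is known to be at least 64 bits, on a target with 8-bit units.

```latex
U = \frac{\mathit{ALIGN}}{\mathit{BITS\_PER\_UNIT}}, \qquad
0 \le \mathit{OFFSET} < U, \qquad
\bigl(\mathrm{XEXP}(\mathit{MEM}, 0) - \mathit{OFFSET}\bigr) \bmod U = 0

\mathit{ALIGN} = 64,\ \mathit{BITS\_PER\_UNIT} = 8 \;\Rightarrow\; U = 8; \qquad
\mathrm{XEXP}(\mathit{MEM}, 0) = b + 12,\ b \equiv 0 \pmod{8} \;\Rightarrow\; \mathit{OFFSET} = 12 \bmod 8 = 4
```

As a check, b + 12 - 4 = b + 8, which is 8-byte aligned because b is.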

◆ get_reg_attrs()

| static |

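Return the reg_attrs structure for a given decl and offset, allocating a new one and inserting it into the hash table only if an identical structure is not already there. When both are at their defaults (no decl and a zero offset), no structure is allocated and null is returned.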

References ggc_alloc(), known_eq, attrs::offset, and reg_attrs_htab.

Referenced by set_reg_attrs_for_decl_rtl(), set_reg_attrs_for_parm(), set_reg_attrs_from_value(), and update_reg_offset().
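The find-or-insert scheme is hash-consing: identical attribute pairs are stored once and shared, so in the toy below equal attributes are also equal as pointers. A minimal sketch, assuming a hypothetical ToyRegAttrs pair and a `std::set` in place of reg_attrs_htab (a set rather than a hash table, chosen because its nodes never move, so returned pointers stay valid). The early null return for the all-default case mirrors the known_eq() test hinted at by References.

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <tuple>

// Hypothetical reg_attrs: DECL is an opaque id (0 meaning "no decl"),
// OFFSET a plain byte offset.
struct ToyRegAttrs {
  int decl;
  std::int64_t offset;
  bool operator<(const ToyRegAttrs &o) const {
    return std::tie(decl, offset) < std::tie(o.decl, o.offset);
  }
};

// Interning table standing in for reg_attrs_htab.
static std::set<ToyRegAttrs> toy_reg_attrs_table;

// Return the unique shared entry equal to (DECL, OFFSET), inserting it only
// if no identical entry exists. The all-default pair needs no entry at all.
const ToyRegAttrs *toy_get_reg_attrs(int decl, std::int64_t offset) {
  if (decl == 0 && offset == 0)
    return nullptr;
  return &*toy_reg_attrs_table.insert(ToyRegAttrs{decl, offset}).first;
}
```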

◆ get_spill_slot_decl()