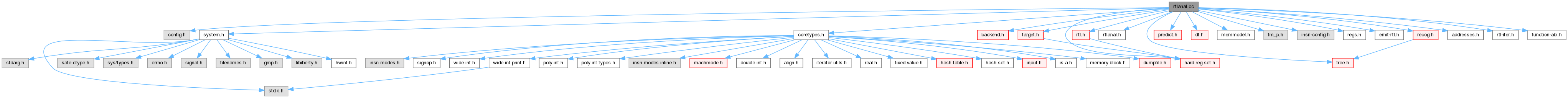

#include "config.h"

#include "system.h"

#include "coretypes.h"

#include "backend.h"

#include "target.h"

#include "rtl.h"

#include "rtlanal.h"

#include "tree.h"

#include "predict.h"

#include "df.h"

#include "memmodel.h"

#include "tm_p.h"

#include "insn-config.h"

#include "regs.h"

#include "emit-rtl.h"

#include "recog.h"

#include "addresses.h"

#include "rtl-iter.h"

#include "hard-reg-set.h"

#include "function-abi.h"

Data Structures

| struct | set_of_data |

| struct | parms_set_data |

Macros

| #define | cached_num_sign_bit_copies sorry_i_am_preventing_exponential_behavior |

Variables

| rtx_subrtx_bound_info | rtx_all_subrtx_bounds [NUM_RTX_CODE] |

| rtx_subrtx_bound_info | rtx_nonconst_subrtx_bounds [NUM_RTX_CODE] |

| static unsigned int | num_sign_bit_copies_in_rep [MAX_MODE_INT+1][MAX_MODE_INT+1] |

| template<typename T> | |

| const size_t | generic_subrtx_iterator< T >::LOCAL_ELEMS |

Macro Definition Documentation

◆ cached_num_sign_bit_copies

| #define cached_num_sign_bit_copies sorry_i_am_preventing_exponential_behavior |

We let num_sign_bit_copies recur into nonzero_bits as that is useful. We don't let nonzero_bits recur into num_sign_bit_copies, because that is less useful. We can't allow both, because that results in exponential run time recursion. There is a nullstone testcase that triggered this. This macro avoids accidental uses of num_sign_bit_copies.

Referenced by cached_num_sign_bit_copies(), num_sign_bit_copies(), and num_sign_bit_copies1().

Function Documentation

◆ add_args_size_note()

| void add_args_size_note (rtx_insn *insn, poly_int64 value) |

Add a REG_ARGS_SIZE note to INSN with value VALUE.

References add_reg_note(), find_reg_note(), gcc_checking_assert, gen_int_mode(), and NULL_RTX.

Referenced by adjust_stack_1(), emit_call_1(), expand_builtin_trap(), and fixup_args_size_notes().

◆ add_auto_inc_notes()

Process recursively X of INSN and add REG_INC notes if necessary.

References add_auto_inc_notes(), add_auto_inc_notes(), add_reg_note(), auto_inc_p(), GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, XEXP, XVECEXP, and XVECLEN.

Referenced by add_auto_inc_notes(), peep2_attempt(), reload(), and update_inc_notes().

◆ add_int_reg_note()

Add an integer register note with kind KIND and datum DATUM to INSN.

References gcc_checking_assert, int_reg_note_p(), and REG_NOTES.

Referenced by add_reg_br_prob_note(), and add_shallow_copy_of_reg_note().

◆ add_reg_note()

Add register note with kind KIND and datum DATUM to INSN.

References alloc_reg_note(), and REG_NOTES.

Referenced by add_args_size_note(), add_auto_inc_notes(), add_label_notes(), add_shallow_copy_of_reg_note(), attempt_change(), copy_frame_info_to_split_insn(), copy_reg_eh_region_note_backward(), copy_reg_eh_region_note_forward(), delete_prior_computation(), df_set_note(), distribute_notes(), doloop_modify(), emit_call_1(), emit_library_call_value_1(), emit_notes_in_bb(), expand_builtin_apply(), expand_builtin_longjmp(), expand_builtin_nonlocal_goto(), expand_call(), expand_call_stmt(), ext_dce_try_optimize_rshift(), find_reloads(), force_move_args_size_note(), force_nonfallthru_and_redirect(), ira_register_new_scratch_op(), make_reg_eh_region_note(), make_reg_eh_region_note_nothrow_nononlocal(), mark_jump_label_1(), mark_transaction_restart_calls(), maybe_merge_cfa_adjust(), maybe_move_args_size_note(), move_deaths(), patch_jump_insn(), peep2_attempt(), predict_insn(), process_bb_lives(), process_bb_node_lives(), reload_as_needed(), resolve_simple_move(), set_unique_reg_note(), and try_split().

◆ add_shallow_copy_of_reg_note()

Add a register note like NOTE to INSN.

References add_int_reg_note(), add_reg_note(), GET_CODE, REG_NOTE_KIND, XEXP, and XINT.

Referenced by distribute_notes().

◆ address_cost()

| int address_cost (rtx x, machine_mode mode, addr_space_t as, bool speed) |

Return the cost of address expression X. X is expected to be a properly formed address reference. The SPEED parameter specifies whether to return costs optimized for speed or for size.

References memory_address_addr_space_p(), and targetm.

Referenced by computation_cost(), create_new_invariant(), force_expr_to_var_cost(), get_address_cost(), get_address_cost_ainc(), preferred_mem_scale_factor(), should_replace_address(), and try_replace_in_use().

◆ alloc_reg_note()

Allocate a register note with kind KIND and datum DATUM. LIST is stored as the pointer to the next register note.

References alloc_EXPR_LIST(), alloc_INSN_LIST(), gcc_checking_assert, int_reg_note_p(), and PUT_REG_NOTE_KIND.

Referenced by add_reg_note(), combine_reg_notes(), distribute_notes(), duplicate_reg_note(), eliminate_regs_1(), filter_notes(), lra_eliminate_regs_1(), move_deaths(), recog_for_combine_1(), and try_combine().

◆ auto_inc_p()

Return true if X is an autoincrement side effect and the register is not the stack pointer.

References GET_CODE, stack_pointer_rtx, and XEXP.

Referenced by add_auto_inc_notes().

◆ baseness()

| static |

Evaluate the likelihood of X being a base or index value, returning positive if it is likely to be a base, negative if it is likely to be an index, and 0 if we can't tell. Make the magnitude of the return value reflect the amount of confidence we have in the answer. MODE, AS, OUTER_CODE and INDEX_CODE are as for ok_for_base_p_1.

References HARD_REGISTER_P, MEM_P, MEM_POINTER, ok_for_base_p_1(), REG_P, REG_POINTER, and REGNO.

Referenced by decompose_normal_address().

◆ binary_scale_code_p()

Return true if CODE applies some kind of scale. The scaled value is the first operand and the scale is the second.

Referenced by get_index_term().

◆ cached_nonzero_bits()

| static |

The function cached_nonzero_bits is a wrapper around nonzero_bits1. It avoids exponential behavior in nonzero_bits1 when X has identical subexpressions on the first or the second level.

References cached_nonzero_bits(), nonzero_bits1(), nonzero_bits_binary_arith_p(), and XEXP.

Referenced by cached_nonzero_bits(), nonzero_bits(), and nonzero_bits1().
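The shortcut for identical subexpressions can be illustrated with a toy model. The sketch below is not the GCC implementation: `Expr`, `bits_of_sum`, `naive_bits`, `cached_bits`, `bits1`, and `build_doubling_chain` are hypothetical names, and only the first-level case (both operands are the same object) is modeled. It shows why passing a known operand and its result down one level turns an exponential walk into a linear one.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical toy expression: a leaf with known bits, or a sum of two operands.
struct Expr {
    const Expr *op0 = nullptr;
    const Expr *op1 = nullptr;
    std::uint64_t leaf_bits = 0;  // only meaningful when op0 is null
};

long visits = 0;  // counts how many nodes the worker functions process

// Conservative mask for a sum: every bit up to one position above the
// highest possibly-set operand bit may be nonzero because of carries.
std::uint64_t bits_of_sum(std::uint64_t a, std::uint64_t b) {
    std::uint64_t fill = a | b;
    for (int shift = 1; shift < 64; shift *= 2)
        fill |= fill >> shift;
    return fill | (fill << 1);
}

// Naive recursion: a shared operand is walked once per use.
std::uint64_t naive_bits(const Expr *x) {
    ++visits;
    if (!x->op0)
        return x->leaf_bits;
    return bits_of_sum(naive_bits(x->op0), naive_bits(x->op1));
}

std::uint64_t bits1(const Expr *x, const Expr *known_x, std::uint64_t known_ret);

// Wrapper: reuse a known result, and when both operands are the same
// object compute that operand once and hand the result to the worker.
std::uint64_t cached_bits(const Expr *x, const Expr *known_x, std::uint64_t known_ret) {
    if (x == known_x)
        return known_ret;
    if (x->op0 && x->op0 == x->op1) {
        std::uint64_t r0 = cached_bits(x->op0, known_x, known_ret);
        return bits1(x, x->op0, r0);
    }
    return bits1(x, known_x, known_ret);
}

// Worker: recurses through the wrapper so the known result is picked up.
std::uint64_t bits1(const Expr *x, const Expr *known_x, std::uint64_t known_ret) {
    ++visits;
    if (!x->op0)
        return x->leaf_bits;
    return bits_of_sum(cached_bits(x->op0, known_x, known_ret),
                       cached_bits(x->op1, known_x, known_ret));
}

// Build x0 = leaf, x1 = x0 + x0, x2 = x1 + x1, ... with shared operands.
std::vector<Expr> build_doubling_chain(int depth) {
    std::vector<Expr> nodes(depth + 1);
    nodes[0].leaf_bits = 1;
    for (int i = 1; i <= depth; ++i) {
        nodes[i].op0 = &nodes[i - 1];
        nodes[i].op1 = &nodes[i - 1];
    }
    return nodes;
}
```

On a chain of depth 16, the naive walk processes 2^17 - 1 nodes while the cached walk processes 17, and both return the same mask.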

◆ cached_num_sign_bit_copies()

| static |

The function cached_num_sign_bit_copies is a wrapper around num_sign_bit_copies1. It avoids exponential behavior in num_sign_bit_copies1 when X has identical subexpressions on the first or the second level.

References cached_num_sign_bit_copies, num_sign_bit_copies1(), num_sign_bit_copies_binary_arith_p(), and XEXP.

◆ canonicalize_condition()

| rtx canonicalize_condition (rtx_insn *insn, rtx cond, int reverse, rtx_insn **earliest, rtx want_reg, int allow_cc_mode, int valid_at_insn_p) |

Given an insn INSN and condition COND, return the condition in a canonical form to simplify testing by callers. Specifically:

(1) The code will always be a comparison operation (EQ, NE, GT, etc.).

(2) Both operands will be machine operands.

(3) If an operand is a constant, it will be the second operand.

(4) (LE x const) will be replaced with (LT x <const+1>) and similarly for GE, GEU, and LEU.

If the condition cannot be understood, or is an inequality floating-point comparison which needs to be reversed, 0 will be returned.

If REVERSE is nonzero, then reverse the condition prior to canonicalizing it.

If EARLIEST is nonzero, it is a pointer to a place where the earliest insn used in locating the condition was found. If a replacement test of the condition is desired, it should be placed in front of that insn and we will be sure that the inputs are still valid.

If WANT_REG is nonzero, we wish the condition to be relative to that register, if possible. Therefore, do not canonicalize the condition further. If ALLOW_CC_MODE is nonzero, allow the condition returned to be a compare to a CC mode register.

If VALID_AT_INSN_P, the condition must be valid at both *EARLIEST and at INSN.

References BLOCK_FOR_INSN(), COMPARISON_P, CONST0_RTX, CONST_INT_P, const_val, CONSTANT_P, FIND_REG_INC_NOTE, gen_int_mode(), GET_CODE, GET_MODE, GET_MODE_CLASS, GET_MODE_MASK, GET_MODE_PRECISION(), GET_RTX_CLASS, HOST_BITS_PER_WIDE_INT, HOST_WIDE_INT_1U, INTVAL, is_a(), modified_between_p(), modified_in_p(), NONJUMP_INSN_P, NULL_RTX, prev_nonnote_nondebug_insn(), REAL_VALUE_NEGATIVE, REAL_VALUE_TYPE, REG_P, reg_set_p(), reversed_comparison_code(), RTX_COMM_COMPARE, RTX_COMPARE, rtx_equal_p(), SCALAR_FLOAT_MODE_P, SET, SET_DEST, set_of(), SET_SRC, STORE_FLAG_VALUE, swap_condition(), val_signbit_known_set_p(), and XEXP.

Referenced by get_condition(), noce_get_alt_condition(), and noce_get_condition().
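Rule (4) for canonicalize_condition() can be sketched on plain integers. This is a toy model, not GCC code: `Cmp`, `ConstCompare`, `canonicalize_const_compare`, and `holds` are hypothetical names, only the signed LE and GE cases are covered, and the overflow guard is an assumption about how a rewrite of this kind has to behave when the adjusted constant is not representable.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// Hypothetical stand-ins for the signed comparison codes.
enum class Cmp { LT, LE, GT, GE };

// A comparison of some value x against the constant rhs.
struct ConstCompare {
    Cmp code;
    std::int64_t rhs;
};

// Rewrite (LE x c) as (LT x c+1) and (GE x c) as (GT x c-1).
// The rewrite is skipped when c+1 or c-1 would overflow.
ConstCompare canonicalize_const_compare(ConstCompare c) {
    constexpr std::int64_t kMax = std::numeric_limits<std::int64_t>::max();
    constexpr std::int64_t kMin = std::numeric_limits<std::int64_t>::min();
    if (c.code == Cmp::LE && c.rhs != kMax)
        return {Cmp::LT, c.rhs + 1};
    if (c.code == Cmp::GE && c.rhs != kMin)
        return {Cmp::GT, c.rhs - 1};
    return c;
}

// Evaluate the comparison for a concrete x; used to check equivalence.
bool holds(ConstCompare c, std::int64_t x) {
    switch (c.code) {
    case Cmp::LT: return x < c.rhs;
    case Cmp::LE: return x <= c.rhs;
    case Cmp::GT: return x > c.rhs;
    case Cmp::GE: return x >= c.rhs;
    }
    return false;
}
```

For example, (LE x 5) becomes (LT x 6), and both forms accept exactly the same values of x.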

◆ commutative_operand_precedence()

| int commutative_operand_precedence (rtx op) |

Return a value indicating whether OP, an operand of a commutative operation, is preferred as the first or second operand. The more positive the value, the stronger the preference for being the first operand.

References avoid_constant_pool_reference(), GET_CODE, GET_RTX_CLASS, MEM_P, MEM_POINTER, OBJECT_P, REG_P, REG_POINTER, RTX_BIN_ARITH, RTX_COMM_ARITH, RTX_CONST_OBJ, RTX_EXTRA, RTX_OBJ, RTX_UNARY, and SUBREG_REG.

Referenced by compare_address_parts(), simplify_plus_minus_op_data_cmp(), swap_commutative_operands_p(), and swap_commutative_operands_with_target().
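How a caller might use such a precedence value can be sketched as follows. The operand kinds and the numeric ranks here are illustrative assumptions, not the values GCC uses; `Kind`, `operand_precedence`, `should_swap`, and `order_operands` are hypothetical names. The only property taken from the description above is that a more positive value means a stronger preference for being the first operand.

```cpp
#include <cassert>
#include <utility>

// Hypothetical operand kinds; real RTL distinguishes many more classes.
enum class Kind { Constant, Register, PointerRegister, Unary, BinaryArith, CommutativeArith };

// Illustrative ranks only: larger means "prefer as the first operand".
int operand_precedence(Kind k) {
    switch (k) {
    case Kind::Constant:         return -8;
    case Kind::Register:         return -2;
    case Kind::PointerRegister:  return -1;
    case Kind::Unary:            return 1;
    case Kind::BinaryArith:      return 2;
    case Kind::CommutativeArith: return 4;
    }
    return 0;
}

// Swap when the second operand has the stronger claim on the first slot.
bool should_swap(Kind first, Kind second) {
    return operand_precedence(first) < operand_precedence(second);
}

// Return the operands of a commutative operation in preferred order.
std::pair<Kind, Kind> order_operands(Kind a, Kind b) {
    if (should_swap(a, b))
        std::swap(a, b);
    return {a, b};
}
```

With these ranks, a constant paired with a register ends up as the second operand, and operands of equal rank keep their original order.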

◆ computed_jump_p()

Return true if INSN is an indirect jump (aka computed jump). Tablejumps and casesi insns are not considered indirect jumps; we can recognize them by a (use (label_ref)).

References computed_jump_p_1(), GET_CODE, i, JUMP_LABEL, JUMP_P, NULL, set_of_data::pat, PATTERN(), pc_rtx, SET, SET_DEST, SET_SRC, XEXP, XVECEXP, and XVECLEN.

Referenced by bypass_conditional_jumps(), create_trace_edges(), default_invalid_within_doloop(), duplicate_computed_gotos(), fix_crossing_unconditional_branches(), make_edges(), patch_jump_insn(), reorder_basic_blocks_simple(), try_crossjump_bb(), and try_head_merge_bb().

◆ computed_jump_p_1()

A subroutine of computed_jump_p. Return true if X contains a REG or MEM or constant that is not in the constant pool and not in the condition of an IF_THEN_ELSE.

References CASE_CONST_ANY, computed_jump_p_1(), CONSTANT_POOL_ADDRESS_P, GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, XEXP, XVECEXP, and XVECLEN.

Referenced by computed_jump_p(), and computed_jump_p_1().

◆ constant_pool_constant_p()

Check whether this is a constant pool constant.

References avoid_constant_pool_reference(), and CONST_DOUBLE_P.

Referenced by get_inv_cost().

◆ contains_constant_pool_address_p()

Return true if RTL X contains a constant pool address.

References ALL, CONSTANT_POOL_ADDRESS_P, FOR_EACH_SUBRTX, and SYMBOL_REF_P.

◆ contains_mem_rtx_p()

Return true if X contains a MEM subrtx.

References ALL, FOR_EACH_SUBRTX, and MEM_P.

Referenced by bb_ok_for_noce_convert_multiple_sets(), bb_valid_for_noce_process_p(), prune_expressions(), and try_fwprop_subst_pattern().

◆ contains_paradoxical_subreg_p()

Return true if X contains a paradoxical subreg.

References FOR_EACH_SUBRTX_VAR, paradoxical_subreg_p(), and SUBREG_P.

Referenced by forward_propagate_and_simplify(), try_fwprop_subst_pattern(), and try_replace_reg().

◆ contains_symbol_ref_p()

Return true if RTL X contains a SYMBOL_REF.

References ALL, FOR_EACH_SUBRTX, and SYMBOL_REF_P.

Referenced by lra_constraints(), scan_one_insn(), and track_expr_p().

◆ contains_symbolic_reference_p()

Return true if RTL X contains a SYMBOL_REF or LABEL_REF.

References ALL, FOR_EACH_SUBRTX, GET_CODE, and SYMBOL_REF_P.

Referenced by simplify_context::simplify_binary_operation_1().

◆ count_occurrences()

Return the number of places FIND appears within X. If COUNT_DEST is zero, we do not count occurrences inside the destination of a SET.

References CASE_CONST_ANY, count, count_occurrences(), count_occurrences(), find(), GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, MEM_P, rtx_equal_p(), SET, SET_DEST, SET_SRC, XEXP, XVECEXP, and XVECLEN.

Referenced by count_occurrences(), delete_output_reload(), emit_input_reload_insns(), find_inc(), record_value_for_reg(), and reload_as_needed().

◆ covers_regno_no_parallel_p()

Return TRUE iff DEST is a register or subreg of a register, is a complete rather than read-modify-write destination, and contains register TEST_REGNO.

References END_REGNO(), GET_CODE, read_modify_subreg_p(), REG_P, REGNO, and SUBREG_REG.

Referenced by covers_regno_p(), and simple_regno_set().

◆ covers_regno_p()

Like covers_regno_no_parallel_p, but also handles PARALLELs where any member matches the covers_regno_no_parallel_p criteria.

References covers_regno_no_parallel_p(), GET_CODE, i, NULL_RTX, XEXP, XVECEXP, and XVECLEN.

Referenced by dead_or_set_regno_p().

◆ dead_or_set_p()

Return true if X's old contents don't survive after INSN. This will be true if X is a register and X dies in INSN or because INSN entirely sets X. "Entirely set" means set directly and not through a SUBREG, or ZERO_EXTRACT, so no trace of the old contents remains. Likewise, REG_INC does not count. REG may be a hard or pseudo reg. Renumbering is not taken into account, but for this use that makes no difference, since regs don't overlap during their lifetimes. Therefore, this function may be used at any time after deaths have been computed. If REG is a hard reg that occupies multiple machine registers, this function will only return true if each of those registers will be replaced by INSN.

References dead_or_set_regno_p(), END_REGNO(), gcc_assert, i, REG_P, and REGNO.

Referenced by decrease_live_ranges_number(), distribute_notes(), do_input_reload(), emit_input_reload_insns(), find_single_use(), set_nonzero_bits_and_sign_copies(), and try_combine().
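The multi-register rule for dead_or_set_p() can be sketched with a toy model. The function name and the use of a `std::set` are assumptions for illustration; the set stands in for whatever per-register check decides that a single hard register dies in, or is entirely set by, the instruction.

```cpp
#include <cassert>
#include <set>

// Hypothetical model: dead_or_set holds every hard register number that
// dies in, or is entirely set by, the instruction under consideration.
// A value occupying nregs consecutive registers starting at regno
// qualifies only if each of those registers is in the set.
bool all_regs_dead_or_set(unsigned regno, unsigned nregs,
                          const std::set<unsigned> &dead_or_set) {
    assert(nregs > 0);
    for (unsigned r = regno; r < regno + nregs; ++r)
        if (dead_or_set.count(r) == 0)
            return false;
    return true;
}
```

If registers 4 and 5 are in the set, a two-register value starting at 4 qualifies, but a three-register value starting at 4 does not.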

◆ dead_or_set_regno_p()

Utility function for dead_or_set_p to check an individual register.

References CALL_P, COND_EXEC_CODE, covers_regno_p(), find_regno_fusage(), find_regno_note(), GET_CODE, i, PATTERN(), SET, SET_DEST, XVECEXP, and XVECLEN.

Referenced by dead_or_set_p(), distribute_notes(), and move_deaths().

◆ decompose_address()

| void decompose_address (struct address_info *info, rtx *loc, machine_mode mode, addr_space_t as, enum rtx_code outer_code) |

Describe address *LOC in *INFO. MODE is the mode of the addressed value, or VOIDmode if not known. AS is the address space associated with LOC. OUTER_CODE is MEM if *LOC is a MEM address and ADDRESS otherwise.

References decompose_automod_address(), decompose_incdec_address(), decompose_normal_address(), GET_CODE, and strip_address_mutations().

Referenced by decompose_lea_address(), decompose_mem_address(), and update_address().

◆ decompose_automod_address()

| static |

INFO->INNER describes a {PRE,POST}_MODIFY address. Set up the rest of INFO accordingly.

References CONSTANT_P, gcc_assert, gcc_checking_assert, GET_CODE, rtx_equal_p(), set_address_base(), set_address_disp(), set_address_index(), strip_address_mutations(), and XEXP.

Referenced by decompose_address().

◆ decompose_incdec_address()

| static |

INFO->INNER describes a {PRE,POST}_{INC,DEC} address. Set up the rest of INFO accordingly.

References gcc_checking_assert, set_address_base(), and XEXP.

Referenced by decompose_address().

◆ decompose_lea_address()

| void decompose_lea_address (struct address_info *info, rtx *loc) |

Describe address operand LOC in INFO.

References ADDR_SPACE_GENERIC, and decompose_address().

Referenced by process_address_1(), and satisfies_address_constraint_p().

◆ decompose_mem_address()

| void decompose_mem_address (struct address_info *info, rtx x) |

Describe the address of MEM X in INFO.

References decompose_address(), gcc_assert, GET_MODE, MEM_ADDR_SPACE, MEM_P, and XEXP.

Referenced by process_address_1(), and satisfies_memory_constraint_p().

◆ decompose_normal_address()

| static |

INFO->INNER describes a normal, non-automodified address. Fill in the rest of INFO accordingly.

References baseness(), CONSTANT_P, extract_plus_operands(), gcc_assert, get_base_term(), GET_CODE, get_index_term(), GET_MODE, set_address_base(), set_address_disp(), set_address_index(), set_address_segment(), strip_address_mutations(), and targetm.

Referenced by decompose_address().

◆ default_address_cost()

| int default_address_cost (rtx x, machine_mode, addr_space_t, bool speed) |

If the target doesn't override, compute the cost as with arithmetic.

References rtx_cost().

◆ duplicate_reg_note()

Duplicate NOTE and return the copy.

References alloc_reg_note(), copy_insn_1(), GET_CODE, NULL_RTX, REG_NOTE_KIND, XEXP, and XINT.

Referenced by emit_copy_of_insn_after().

◆ extract_plus_operands()

Treat *LOC as a tree of PLUS operands and store pointers to the summed values in [PTR, END). Return a pointer to the end of the used array.

References end(), extract_plus_operands(), gcc_assert, GET_CODE, and XEXP.

Referenced by decompose_normal_address(), and extract_plus_operands().
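The flattening of a PLUS tree into an array of operand locations can be sketched on a toy expression type. `Term` and `extract_plus_terms` are hypothetical names and the representation is an assumption. What the sketch keeps from the description is that the array stores pointers to the summed values' locations, filled into the half-open range [PTR, END), and that the return value points one past the last slot used.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical toy expression: either a PLUS of two operands or a leaf value.
struct Term {
    bool is_plus;
    Term *op0;
    Term *op1;
    long value;  // only meaningful for leaves
};

// Walk the PLUS tree rooted at *loc and store the location of every
// non-PLUS operand in [ptr, end). Returns one past the last slot used.
Term ***extract_plus_terms(Term **loc, Term ***ptr, Term ***end) {
    Term *x = *loc;
    if (x->is_plus) {
        ptr = extract_plus_terms(&x->op0, ptr, end);
        ptr = extract_plus_terms(&x->op1, ptr, end);
    } else {
        assert(ptr != end && "output array too small");
        *ptr++ = loc;  // record where the operand lives, not just its value
    }
    return ptr;
}
```

Because each slot holds the address of an operand field, a caller can replace an operand in place by writing through the slot.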

◆ find_all_hard_reg_sets()

| void find_all_hard_reg_sets (const rtx_insn *insn, HARD_REG_SET *pset, bool implicit) |

Examine INSN, and compute the set of hard registers written by it. Store it in *PSET. Should only be called after reload. IMPLICIT is true if we should include registers that are fully-clobbered by calls. This should be used with caution, since it doesn't include partially-clobbered registers.

References CALL_P, CLEAR_HARD_REG_SET, function_abi::full_reg_clobbers(), insn_callee_abi(), note_stores(), NULL, record_hard_reg_sets(), record_hard_reg_sets(), REG_NOTE_KIND, REG_NOTES, and XEXP.

Referenced by collect_fn_hard_reg_usage().

◆ find_all_hard_regs()

| void find_all_hard_regs (const_rtx x, HARD_REG_SET *pset) |

Add all hard registers in X to *PSET.

References add_to_hard_reg_set(), FOR_EACH_SUBRTX, GET_MODE, REG_P, and REGNO.

Referenced by record_hard_reg_uses().

◆ find_constant_src()

Check whether INSN is a single_set whose source is known to be equivalent to a constant. Return that constant if so, otherwise return null.

References avoid_constant_pool_reference(), CONSTANT_P, find_reg_equal_equiv_note(), NULL_RTX, SET_SRC, single_set(), and XEXP.

◆ find_first_parameter_load()

Look backward for first parameter to be loaded. Note that loads of all parameters will not necessarily be found if CSE has eliminated some of them (e.g., an argument to the outer function is passed down as a parameter). Do not skip BOUNDARY.

References CALL_INSN_FUNCTION_USAGE, CALL_P, CLEAR_HARD_REG_SET, gcc_assert, GET_CODE, INSN_P, LABEL_P, note_stores(), parms_set_data::nregs, parms_set(), PREV_INSN(), REG_P, REGNO, parms_set_data::regs, SET_HARD_REG_BIT, STATIC_CHAIN_REG_P, and XEXP.

Referenced by insert_insn_end_basic_block(), and sjlj_mark_call_sites().

◆ find_reg_equal_equiv_note()

Return a REG_EQUIV or REG_EQUAL note if insn has only a single set and has such a note.

References GET_CODE, INSN_P, multiple_sets(), NULL, PATTERN(), REG_NOTE_KIND, REG_NOTES, and XEXP.

Referenced by adjust_insn(), allocate_dynamic_stack_space(), bypass_block(), can_replace_by(), combine_instructions(), compute_ld_motion_mems(), count_reg_usage(), cprop_insn(), cprop_jump(), cselib_record_sets(), df_find_single_def_src(), find_constant_src(), gcse_emit_move_after(), get_biv_step_1(), hash_scan_set(), hash_scan_set(), init_alias_analysis(), iv_analyze_def(), local_cprop_pass(), maybe_strip_eq_note_for_split_iv(), merge_notes(), move2add_note_store(), noce_get_alt_condition(), noce_try_abs(), purge_dead_edges(), remove_reachable_equiv_notes(), remove_reg_equal_equiv_notes_for_regno(), replace_store_insn(), resolve_reg_notes(), split_insn(), store_killed_in_insn(), suitable_set_for_replacement(), try_replace_reg(), and update_rsp_from_reg_equal().

◆ find_reg_fusage()

Return true if DATUM, or any overlap of DATUM, of kind CODE is found in the CALL_INSN_FUNCTION_USAGE information of INSN.

References CALL_INSN_FUNCTION_USAGE, CALL_P, END_REGNO(), find_regno_fusage(), gcc_assert, GET_CODE, i, REG_P, REGNO, rtx_equal_p(), and XEXP.

Referenced by can_combine_p(), decrease_live_ranges_number(), distribute_links(), distribute_notes(), no_conflict_move_test(), push_reload(), reg_set_p(), and reg_used_between_p().

◆ find_reg_note()

Return the reg-note of kind KIND in insn INSN, if there is one. If DATUM is nonzero, look for one whose datum is DATUM.

References gcc_checking_assert, INSN_P, REG_NOTE_KIND, REG_NOTES, and XEXP.

Referenced by add_args_size_note(), add_insn_allocno_copies(), add_reg_br_prob_note(), add_store_equivs(), adjust_insn(), any_uncondjump_p(), attempt_change(), find_comparison_dom_walker::before_dom_children(), can_combine_p(), can_nonlocal_goto(), canonicalize_insn(), check_for_inc_dec(), check_for_inc_dec_1(), check_for_label_ref(), combine_and_move_insns(), combine_predictions_for_insn(), combine_stack_adjustments_for_block(), compute_outgoing_frequencies(), cond_exec_process_if_block(), control_flow_insn_p(), copy_reg_eh_region_note_backward(), copy_reg_eh_region_note_forward(), copyprop_hardreg_forward_1(), create_trace_edges(), cse_insn(), cselib_process_insn(), curr_insn_transform(), dead_or_predicable(), decrease_live_ranges_number(), deletable_insn_p(), delete_insn(), delete_unmarked_insns(), distribute_notes(), do_local_cprop(), do_output_reload(), dw2_fix_up_crossing_landing_pad(), emit_cmp_and_jump_insn_1(), emit_input_reload_insns(), emit_libcall_block_1(), expand_addsub_overflow(), expand_gimple_stmt(), expand_loc(), expand_mul_overflow(), expand_neg_overflow(), ext_dce_process_bb(), find_dummy_reload(), find_equiv_reg(), find_moveable_store(), find_reloads(), fixup_args_size_notes(), fixup_eh_region_note(), fixup_reorder_chain(), fixup_tail_calls(), force_move_args_size_note(), force_nonfallthru_and_redirect(), forward_propagate_and_simplify(), forward_propagate_into(), fp_setter_insn(), get_call_fndecl(), get_eh_region_and_lp_from_rtx(), hash_scan_set(), indirect_jump_optimize(), inherit_in_ebb(), init_alias_analysis(), init_eliminable_invariants(), init_elimination(), insn_stack_adjust_offset_pre_post(), ira_update_equiv_info_by_shuffle_insn(), label_is_jump_target_p(), latest_hazard_before(), lra_process_new_insns(), make_edges(), make_reg_eh_region_note_nothrow_nononlocal(), mark_jump_label_1(), mark_referenced_resources(), mark_set_resources(), match_reload(), maybe_merge_cfa_adjust(), maybe_move_args_size_note(), maybe_propagate_label_ref(), 
merge_if_block(), mostly_true_jump(), move_invariant_reg(), need_fake_edge_p(), no_equiv(), noce_process_if_block(), notice_args_size(), old_insns_match_p(), outgoing_edges_match(), patch_jump_insn(), peep2_attempt(), process_alt_operands(), process_bb_lives(), process_bb_node_lives(), purge_dead_edges(), record_reg_classes(), record_set_data(), record_store(), redirect_jump_2(), redundant_insn(), reg_scan_mark_refs(), regstat_bb_compute_ri(), reload(), reload_as_needed(), resolve_simple_move(), rest_of_clean_state(), rtl_verify_edges(), save_call_clobbered_regs(), scan_insn(), scan_one_insn(), scan_trace(), set_unique_reg_note(), setup_reg_equiv(), setup_save_areas(), single_set_2(), sjlj_fix_up_crossing_landing_pad(), split_all_insns(), subst_reloads(), try_back_substitute_reg(), try_combine(), try_eliminate_compare(), pair_fusion_bb_info::try_fuse_pair(), try_fwprop_subst_pattern(), try_head_merge_bb(), try_split(), rtx_properties::try_to_add_insn(), update_br_prob_note(), update_equiv_regs(), and validate_equiv_mem().

◆ find_regno_fusage()

Return true if REGNO, or any overlap of REGNO, of kind CODE is found in the CALL_INSN_FUNCTION_USAGE information of INSN.

References CALL_INSN_FUNCTION_USAGE, CALL_P, END_REGNO(), GET_CODE, REG_P, REGNO, and XEXP.

Referenced by dead_or_set_regno_p(), distribute_notes(), and find_reg_fusage().

◆ find_regno_note()

Return the reg-note of kind KIND in insn INSN which applies to register number REGNO, if any. Return 0 if there is no such reg-note. Note that the REGNO of this NOTE need not be REGNO if REGNO is a hard register; it might be the case that the note overlaps REGNO.

References END_REGNO(), INSN_P, REG_NOTE_KIND, REG_NOTES, REG_P, REGNO, and XEXP.

Referenced by build_def_use(), calculate_gen_cands(), calculate_loop_reg_pressure(), calculate_spill_cost(), dead_or_set_regno_p(), dead_pseudo_p(), decrease_live_ranges_number(), delete_dead_insn(), delete_output_reload(), delete_prior_computation(), distribute_notes(), do_remat(), emit_output_reload_insns(), emit_reload_insns(), ext_dce_try_optimize_rshift(), fill_simple_delay_slots(), fill_slots_from_thread(), lra_delete_dead_insn(), match_reload(), move_deaths(), operand_to_remat(), process_address_1(), process_alt_operands(), record_operand_costs(), reg_dead_at_p(), remove_death(), set_bb_regs(), try_eliminate_compare(), try_merge(), and update_reg_unused_notes().
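The overlap rule for find_regno_note() can be sketched as a range test. `RegNote` and `find_overlapping_note` are hypothetical names and the flat vector of notes is an assumption. The sketch models a note on a hard register that spans several consecutive register numbers: it matches any register number inside that span, not only the first.

```cpp
#include <cassert>
#include <vector>

// Hypothetical note: a kind plus the register range [regno, regno + nregs).
struct RegNote {
    int kind;
    unsigned regno;
    unsigned nregs;
};

// Return the first note of the given kind whose register range covers
// regno, or nullptr if there is none.
const RegNote *find_overlapping_note(const std::vector<RegNote> &notes,
                                     int kind, unsigned regno) {
    for (const RegNote &n : notes)
        if (n.kind == kind && n.regno <= regno && regno < n.regno + n.nregs)
            return &n;
    return nullptr;
}
```

A note on a two-register value starting at register 8 is therefore found when asking about register 9, even though the note itself names register 8.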

◆ for_each_inc_dec()

| int for_each_inc_dec (rtx x, for_each_inc_dec_fn fn, void *data) |

Traverse X looking for MEMs that have autoinc addresses. For each such autoinc operation found, call FN, passing it the innermost enclosing MEM, the operation itself, the RTX modified by the operation, two RTXs (the second may be NULL) that, once added, represent the value to be held by the modified RTX afterwards, and DATA. FN is to return 0 to continue the traversal or any other value to have it returned to the caller of for_each_inc_dec.

References for_each_inc_dec_find_inc_dec(), FOR_EACH_SUBRTX_VAR, GET_CODE, GET_RTX_CLASS, MEM_P, RTX_AUTOINC, and XEXP.

Referenced by check_for_inc_dec(), check_for_inc_dec_1(), cselib_record_sets(), stack_adjust_offset_pre_post(), and try_combine().

◆ for_each_inc_dec_find_inc_dec()

| static |

MEM has a PRE/POST-INC/DEC/MODIFY address X. Extract the operands of the equivalent add insn and pass the result to FN, using DATA as the final argument.

References gcc_unreachable, gen_int_mode(), GET_CODE, GET_MODE, GET_MODE_SIZE(), NULL, and XEXP.

Referenced by for_each_inc_dec().
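The "equivalent add" for the increment and decrement forms can be sketched with plain integers. This is a toy model under stated assumptions: `AutoInc`, `autoinc_offset`, `value_after`, and `address_used` are hypothetical names, the step is taken to be the size in bytes of the memory access, and the MODIFY forms (which carry their own expression) are left out.

```cpp
#include <cassert>

// Hypothetical stand-ins for the four increment/decrement address forms.
enum class AutoInc { PreInc, PostInc, PreDec, PostDec };

// Offset that the side effect adds to the address register, for a
// memory access of mem_size bytes.
long autoinc_offset(AutoInc kind, long mem_size) {
    switch (kind) {
    case AutoInc::PreInc:
    case AutoInc::PostInc:
        return mem_size;
    case AutoInc::PreDec:
    case AutoInc::PostDec:
        return -mem_size;
    }
    return 0;
}

// Register value after the access: the equivalent add is reg + offset.
long value_after(AutoInc kind, long reg_value, long mem_size) {
    return reg_value + autoinc_offset(kind, mem_size);
}

// Address used by the access itself: the pre forms update the register
// first, the post forms use the old value.
long address_used(AutoInc kind, long reg_value, long mem_size) {
    bool is_pre = kind == AutoInc::PreInc || kind == AutoInc::PreDec;
    return is_pre ? value_after(kind, reg_value, mem_size) : reg_value;
}
```

For a 4-byte access through a register holding 100, a pre-decrement accesses address 96 and leaves 96 in the register, while a post-increment accesses address 100 and leaves 104.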

◆ get_address_mode()

| scalar_int_mode get_address_mode (rtx mem) |

Return the mode of MEM's address.

References as_a(), gcc_assert, GET_MODE, MEM_ADDR_SPACE, MEM_P, targetm, and XEXP.

Referenced by add_stores(), add_uses(), adjust_address_1(), canon_address(), check_mem_read_rtx(), cselib_record_sets(), cst_pool_loc_descr(), pieces_addr::decide_autoinc(), dw_loc_list_1(), dwarf2out_frame_debug_cfa_expression(), emit_block_cmp_via_loop(), emit_block_move_via_loop(), expand_assignment(), expand_expr_real_1(), find_reloads(), find_split_point(), loc_descriptor(), loc_list_from_tree_1(), maybe_legitimize_operand_same_code(), mem_loc_descriptor(), noce_try_cmove_arith(), offset_address(), record_store(), replace_expr_with_values(), rtl_for_decl_location(), store_expr(), and use_type().

◆ get_args_size()

| poly_int64 get_args_size (const_rtx x) |

Return the argument size in REG_ARGS_SIZE note X.

References gcc_checking_assert, REG_NOTE_KIND, rtx_to_poly_int64(), and XEXP.

Referenced by distribute_notes(), fixup_args_size_notes(), lra_process_new_insns(), notice_args_size(), peep2_attempt(), reload_as_needed(), and try_split().

◆ get_base_term()

If *INNER can be interpreted as a base, return a pointer to the inner term (see address_info). Return null otherwise.

References GET_CODE, strip_address_mutations(), valid_base_or_index_term_p(), and XEXP.

Referenced by decompose_normal_address().

◆ get_call_fndecl()

Get the declaration of the function called by INSN.

References find_reg_note(), NULL_RTX, NULL_TREE, SYMBOL_REF_DECL, and XEXP.

Referenced by insn_callee_abi(), and self_recursive_call_p().

◆ get_condition()

Given a jump insn JUMP, return the condition that will cause it to branch to its JUMP_LABEL. If the condition cannot be understood, or is an inequality floating-point comparison which needs to be reversed, 0 will be returned. If EARLIEST is nonzero, it is a pointer to a place where the earliest insn used in locating the condition was found. If a replacement test of the condition is desired, it should be placed in front of that insn and we will be sure that the inputs are still valid. If EARLIEST is null, the returned condition will be valid at INSN. If ALLOW_CC_MODE is nonzero, allow the condition returned to be a compare to a CC mode register. VALID_AT_INSN_P is the same as for canonicalize_condition.

References any_condjump_p(), canonicalize_condition(), GET_CODE, JUMP_LABEL, JUMP_P, label_ref_label(), NULL_RTX, pc_set(), SET_SRC, and XEXP.

Referenced by bb_estimate_probability_locally(), check_simple_exit(), fis_get_condition(), simplify_using_initial_values(), and try_head_merge_bb().

◆ get_full_rtx_cost()

| void get_full_rtx_cost (rtx x, machine_mode mode, enum rtx_code outer, int opno, struct full_rtx_costs *c) |

Fill in the structure C with information about both speed and size rtx costs for X, which is operand OPNO in an expression with code OUTER.

References rtx_cost().

Referenced by get_full_set_rtx_cost(), and get_full_set_src_cost().

◆ get_index_code()

| enum rtx_code get_index_code (const struct address_info *info) |

Return the "index code" of INFO, in the form required by ok_for_base_p_1.

References GET_CODE.

Referenced by base_plus_disp_to_reg(), base_to_reg(), and process_address_1().

◆ get_index_scale()

| HOST_WIDE_INT get_index_scale (const struct address_info *info) |

Return the scale applied to *INFO->INDEX_TERM, or 0 if the index is more complicated than that.

References CONST_INT_P, GET_CODE, HOST_WIDE_INT_1, INTVAL, and XEXP.

Referenced by equiv_address_substitution(), index_part_to_reg(), and process_address_1().
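The idea of reading a scale off an index expression can be sketched as below. The classification into four forms, and the choice that a bare index term has scale 1 and a shift by n has scale 2^n, are assumptions made for this toy model. `IndexForm`, `IndexExpr`, and `index_scale` are hypothetical names. The only behavior taken from the description is that 0 signals an index that is more complicated than a simple scaling.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical classification of an index expression.
enum class IndexForm { Plain, MultByConst, ShiftByConst, Other };

struct IndexExpr {
    IndexForm form;
    std::int64_t constant;  // multiplier or shift count; unused otherwise
};

// Return the scale applied to the index term, or 0 if the expression is
// not a simple scaling of it.
std::int64_t index_scale(const IndexExpr &index) {
    switch (index.form) {
    case IndexForm::Plain:
        return 1;  // the index is the term itself
    case IndexForm::MultByConst:
        return index.constant;
    case IndexForm::ShiftByConst:
        assert(index.constant >= 0 && index.constant < 63);
        return std::int64_t{1} << index.constant;  // shift by n scales by 2^n
    case IndexForm::Other:
        return 0;
    }
    return 0;
}
```

Under these assumptions, an index multiplied by 8 and an index shifted left by 3 both report a scale of 8.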

◆ get_index_term()

If *INNER can be interpreted as an index, return a pointer to the inner term (see address_info). Return null otherwise.

References binary_scale_code_p(), CONSTANT_P, GET_CODE, strip_address_mutations(), valid_base_or_index_term_p(), and XEXP.

Referenced by decompose_normal_address().

◆ get_initial_register_offset()

| static |

Compute an approximation for the offset between the register FROM and TO for the current function, as it was at the start of the routine.

References crtl, epilogue_completed, get_frame_size(), get_initial_register_offset(), HARD_FRAME_POINTER_REGNUM, i, and table.

Referenced by get_initial_register_offset(), and rtx_addr_can_trap_p_1().

◆ get_integer_term()

| HOST_WIDE_INT get_integer_term (const_rtx x) |

Return the value of the integer term in X, if one is apparent; otherwise return 0. Only obvious integer terms are detected. This is used in cse.cc with the 'related_value' field.

References CONST_INT_P, GET_CODE, INTVAL, and XEXP.

Referenced by use_related_value().

◆ get_related_value()

If X is a constant, return the value sans apparent integer term; otherwise return 0. Only obvious integer terms are detected.

References CONST_INT_P, GET_CODE, and XEXP.

Referenced by insert_with_costs(), and use_related_value().

◆ in_insn_list_p()

| bool in_insn_list_p (const rtx_insn_list *listp, const rtx_insn *node) |

Search LISTP (an EXPR_LIST) for an entry whose first operand is NODE and return 1 if it is found. A simple equality test is used to determine if NODE matches.

References XEXP.

Referenced by remove_node_from_insn_list().

◆ init_num_sign_bit_copies_in_rep()

| static |

Initialize the table NUM_SIGN_BIT_COPIES_IN_REP based on TARGET_MODE_REP_EXTENDED. Note that we assume that the property of TARGET_MODE_REP_EXTENDED(B, C) is sticky to the integral modes narrower than mode B. I.e., if A is a mode narrower than B then in order to be able to operate on it in mode B, mode A needs to satisfy the requirements set by the representation of mode B.

References FOR_EACH_MODE, FOR_EACH_MODE_IN_CLASS, FOR_EACH_MODE_UNTIL, gcc_assert, GET_MODE_PRECISION(), GET_MODE_WIDER_MODE(), i, num_sign_bit_copies_in_rep, opt_mode< T >::require(), require(), and targetm.

Referenced by init_rtlanal().

◆ init_rtlanal()

| void init_rtlanal | ( | void | ) |

Initialize rtx_all_subrtx_bounds.

References GET_RTX_CLASS, i, init_num_sign_bit_copies_in_rep(), NUM_RTX_CODE, rtx_all_subrtx_bounds, RTX_CONST_OBJ, rtx_nonconst_subrtx_bounds, setup_reg_subrtx_bounds(), and UCHAR_MAX.

Referenced by backend_init().

◆ insn_cost()

Calculate the cost of a single instruction. A return value of zero indicates an instruction pattern without a known cost.

References PATTERN(), pattern_cost(), and targetm.

Referenced by bb_ok_for_noce_convert_multiple_sets(), cheap_bb_rtx_cost_p(), combine_instructions(), combine_validate_cost(), find_shift_sequence(), noce_convert_multiple_sets(), noce_find_if_block(), output_asm_name(), rtl_account_profile_record(), and seq_cost().

◆ int_reg_note_p()

Return true if KIND is an integer REG_NOTE.

Referenced by add_int_reg_note(), and alloc_reg_note().

◆ keep_with_call_p()

Return true if we should avoid inserting code between INSN and the preceding call instruction.

References fixed_regs, general_operand(), i2, INSN_P, keep_with_call_p(), next_nonnote_insn(), NULL, REG_P, REGNO, SET_DEST, SET_SRC, single_set(), stack_pointer_rtx, and targetm.

Referenced by keep_with_call_p(), rtl_block_ends_with_call_p(), and rtl_flow_call_edges_add().

◆ label_is_jump_target_p()

Return true if LABEL is a target of JUMP_INSN. This applies only to non-complex jumps. That is, direct unconditional, conditional, and tablejumps, but not computed jumps or returns. It also does not apply to the fallthru case of a conditional jump.

References find_reg_note(), GET_NUM_ELEM, i, JUMP_LABEL, NULL, RTVEC_ELT, table, tablejump_p(), and XEXP.

Referenced by cfg_layout_redirect_edge_and_branch(), check_for_label_ref(), find_reloads(), subst_reloads(), and try_optimize_cfg().

◆ loc_mentioned_in_p()

Return true if IN contains a piece of rtl that has the address LOC.

References GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, loc_mentioned_in_p(), XEXP, XVECEXP, and XVECLEN.

Referenced by df_remove_dead_eq_notes(), loc_mentioned_in_p(), and remove_address_replacements().

◆ low_bitmask_len()

| int low_bitmask_len | ( | machine_mode | mode, |

| unsigned HOST_WIDE_INT | m ) |

If M is a bitmask that selects a field of low-order bits within an item but not the entire word, return the length of the field. Return -1 otherwise. M is used in machine mode MODE.

References exact_log2(), GET_MODE_MASK, and HWI_COMPUTABLE_MODE_P().

Referenced by try_widen_shift_mode().
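A minimal sketch of the low-order bitmask check, assuming the machine mode can be modeled by its precision in bits and that a host-wide integer is 64 bits wide. The names `exact_log2_sketch` and `low_bitmask_len_sketch` are hypothetical; the sketch treats "the entire word" as all 64 bits being set, which is an assumption about the intended meaning.

```cpp
#include <cstdint>

// Return log2(x) when x is an exact power of two, otherwise -1.
int exact_log2_sketch(std::uint64_t x) {
  if (x == 0 || (x & (x - 1)) != 0)
    return -1;
  int n = 0;
  while ((x >>= 1) != 0)
    ++n;
  return n;
}

// PRECISION (1..64) is a hypothetical stand-in for the machine mode.
int low_bitmask_len_sketch(unsigned precision, std::uint64_t m) {
  std::uint64_t mode_mask = precision >= 64
                                ? ~std::uint64_t{0}
                                : (std::uint64_t{1} << precision) - 1;
  m &= mode_mask;                   // ignore bits outside the mode
  // A mask of k low-order ones satisfies m + 1 == 2^k. When all 64 bits
  // are set, m + 1 wraps to 0 and the result is -1.
  return exact_log2_sketch(m + 1);
}
```

For example, `0xFF` yields 8, while `0xF0` is not a low-order field and yields -1.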

◆ lowpart_subreg_regno()

| int lowpart_subreg_regno | ( | unsigned int | regno, |

| machine_mode | xmode, | ||

| machine_mode | ymode ) |

A wrapper around simplify_subreg_regno that uses subreg_lowpart_offset (xmode, ymode) as the offset.

References subreg_info::offset, simplify_subreg_regno(), and subreg_lowpart_offset().

Referenced by gen_memset_value_from_prev().

◆ lsb_bitfield_op_p()

Return true if X is a sign_extract or zero_extract from the least significant bit.

References GET_CODE, GET_MODE, GET_MODE_PRECISION(), GET_RTX_CLASS, INTVAL, known_eq, RTX_BITFIELD_OPS, and XEXP.

Referenced by strip_address_mutations().

◆ may_trap_or_fault_p()

Same as may_trap_p, but additionally return true if evaluating rtx X might cause a fault. We define a fault for the purpose of this function as an erroneous execution condition that cannot be encountered during the normal execution of a valid program; the typical example is an unaligned memory access on a strict alignment machine. The compiler guarantees that it doesn't generate code that will fault from a valid program, but this guarantee doesn't mean anything for individual instructions. Consider the following example:

    struct S { int d; union { char *cp; int *ip; }; };

    int foo(struct S *s)
    {
      if (s->d == 1)
        return *s->ip;
      else
        return *s->cp;
    }

on a strict alignment machine. In a valid program, foo will never be invoked on a structure for which d is equal to 1 and the underlying unique field of the union not aligned on a 4-byte boundary, but the expression *s->ip might cause a fault if considered individually.

At the RTL level, potentially problematic expressions will almost always verify may_trap_p; for example, the above dereference can be emitted as (mem:SI (reg:P)) and this expression is may_trap_p for a generic register. However, suppose that foo is inlined in a caller that causes s->cp to point to a local character variable and guarantees that s->d is not set to 1; foo may have been effectively translated into pseudo-RTL as:

    if ((reg:SI) == 1)
      (set (reg:SI) (mem:SI (%fp - 7)))
    else
      (set (reg:QI) (mem:QI (%fp - 7)))

Now (mem:SI (%fp - 7)) is considered as not may_trap_p since it is a memory reference to a stack slot, but it will certainly cause a fault on a strict alignment machine.

References may_trap_p_1().

Referenced by can_move_insns_across(), fill_simple_delay_slots(), fill_slots_from_thread(), find_invariant_insn(), noce_try_cmove_arith(), noce_try_sign_mask(), steal_delay_list_from_fallthrough(), steal_delay_list_from_target(), and update_equiv_regs().

◆ may_trap_p()

Return true if evaluating rtx X might cause a trap.

References may_trap_p_1().

Referenced by can_move_insns_across(), check_cond_move_block(), copyprop_hardreg_forward_1(), distribute_notes(), eliminate_partially_redundant_loads(), emit_libcall_block_1(), find_moveable_store(), insn_could_throw_p(), noce_operand_ok(), prepare_cmp_insn(), process_bb_lives(), prune_expressions(), purge_dead_edges(), simple_mem(), simplify_context::simplify_ternary_operation(), and try_combine().

◆ may_trap_p_1()

Return true if evaluating rtx X might cause a trap. FLAGS controls how to consider MEMs. A true means the context of the access may have changed from the original, such that the address may have become invalid.

References CASE_CONST_ANY, const0_rtx, CONST_VECTOR_DUPLICATE_P, CONST_VECTOR_ELT, CONST_VECTOR_ENCODED_ELT, CONSTANT_P, FLOAT_MODE_P, GET_CODE, GET_MODE, GET_MODE_NUNITS(), GET_RTX_FORMAT, GET_RTX_LENGTH, HONOR_NANS(), HONOR_SNANS(), i, may_trap_p_1(), MEM_NOTRAP_P, MEM_SIZE, MEM_SIZE_KNOWN_P, MEM_VOLATILE_P, rtx_addr_can_trap_p_1(), stack_pointer_rtx, targetm, XEXP, XVECEXP, and XVECLEN.

Referenced by default_unspec_may_trap_p(), may_trap_or_fault_p(), may_trap_p(), and may_trap_p_1().

◆ modified_between_p()

Similar to reg_set_between_p, but check all registers in X. Return false only if none of them are modified between START and END. If X contains a MEM, memory aliasing is used to decide whether it may be modified.

References CASE_CONST_ANY, end(), GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, MEM_READONLY_P, memory_modified_in_insn_p(), modified_between_p(), NEXT_INSN(), reg_set_between_p(), XEXP, XVECEXP, and XVECLEN.

Referenced by can_combine_p(), canonicalize_condition(), check_cond_move_block(), cprop_jump(), cse_condition_code_reg(), find_call_crossed_cheap_reg(), find_moveable_pseudos(), modified_between_p(), no_conflict_move_test(), noce_process_if_block(), and try_combine().

◆ modified_in_p()

Similar to reg_set_p, but check all registers in X. Return false only if none of them are modified in INSN. If X contains a MEM, memory aliasing is used to decide whether it may be modified.

References CASE_CONST_ANY, GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, MEM_READONLY_P, memory_modified_in_insn_p(), modified_in_p(), reg_set_p(), XEXP, XVECEXP, and XVECLEN.

Referenced by canonicalize_condition(), clobbers_queued_reg_save(), cond_exec_process_if_block(), cond_exec_process_insns(), cprop_jump(), cse_cc_succs(), fill_slots_from_thread(), find_moveable_pseudos(), fp_setter_insn(), init_alias_analysis(), modified_in_p(), no_conflict_move_test(), noce_convert_multiple_sets_1(), noce_get_alt_condition(), noce_process_if_block(), noce_try_cmove_arith(), and thread_jump().

◆ multiple_sets()

Given an INSN, return true if it has more than one SET, else return false.

References set_of_data::found, GET_CODE, i, INSN_P, PATTERN(), SET, XVECEXP, and XVECLEN.

Referenced by combine_and_move_insns(), cse_extended_basic_block(), find_reg_equal_equiv_note(), forward_propagate_into(), hash_scan_set(), process_bb_node_lives(), set_for_reg_notes(), and simple_move_p().

◆ no_labels_between_p()

Return true if in between BEG and END, exclusive of BEG and END, there is no CODE_LABEL insn.

References end(), LABEL_P, and NEXT_INSN().

Referenced by fill_simple_delay_slots(), and relax_delay_slots().

◆ nonzero_address_p()

Return true if X is an address that is known to not be zero.

References arg_pointer_rtx, CONST_INT_P, CONSTANT_P, fixed_regs, frame_pointer_rtx, GET_CODE, hard_frame_pointer_rtx, INTVAL, nonzero_address_p(), pic_offset_table_rtx, stack_pointer_rtx, SYMBOL_REF_WEAK, VIRTUAL_REGISTER_P, and XEXP.

Referenced by nonzero_address_p(), and simplify_const_relational_operation().

◆ nonzero_bits()

| unsigned HOST_WIDE_INT nonzero_bits | ( | const_rtx | x, |

| machine_mode | mode ) |

References cached_nonzero_bits(), GET_MODE, GET_MODE_MASK, is_a(), and NULL_RTX.

Referenced by combine_simplify_rtx(), evaluate_stmt(), exact_int_to_float_conversion_p(), expand_compound_operation(), extend_mask(), extended_count(), find_split_point(), force_int_to_mode(), force_to_mode(), get_default_value(), if_then_else_cond(), intersect_range_with_nonzero_bits(), make_compound_operation_int(), make_extraction(), make_field_assignment(), num_sign_bit_copies1(), record_value_for_reg(), simplify_and_const_int_1(), simplify_context::simplify_binary_operation_1(), simplify_compare_const(), simplify_comparison(), simplify_const_relational_operation(), simplify_if_then_else(), simplify_context::simplify_relational_operation_1(), simplify_set(), simplify_shift_const_1(), simplify_context::simplify_unary_operation_1(), try_combine(), try_widen_shift_mode(), and update_rsp_from_reg_equal().

◆ nonzero_bits1()

| static |

Given an expression, X, compute which bits in X can be nonzero. We don't care about bits outside of those defined in MODE. For most X this is simply GET_MODE_MASK (GET_MODE (X)), but if X is an arithmetic operation, we can do better.

References arg_pointer_rtx, BITS_PER_WORD, cached_nonzero_bits(), CLZ_DEFINED_VALUE_AT_ZERO, CONST_INT_P, count, ctz_or_zero(), floor_log2(), frame_pointer_rtx, gcc_unreachable, GET_CODE, GET_MODE, GET_MODE_CLASS, GET_MODE_MASK, GET_MODE_PRECISION(), HOST_BITS_PER_WIDE_INT, HOST_WIDE_INT_1U, HOST_WIDE_INT_M1U, HOST_WIDE_INT_UC, INTVAL, is_a(), load_extend_op(), MAX, MEM_P, MIN, num_sign_bit_copies(), ptr_mode, rtl_hooks::reg_nonzero_bits, REG_POINTER, REGNO, REGNO_POINTER_ALIGN, SHORT_IMMEDIATES_SIGN_EXTEND, stack_pointer_rtx, STORE_FLAG_VALUE, SUBREG_PROMOTED_UNSIGNED_P, SUBREG_PROMOTED_VAR_P, SUBREG_REG, target_default_pointer_address_modes_p(), targetm, poly_int< N, C >::to_constant(), UINTVAL, UNARY_P, val_signbit_known_set_p(), word_register_operation_p(), WORD_REGISTER_OPERATIONS, and XEXP.

Referenced by cached_nonzero_bits().
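To illustrate how an arithmetic operation allows a tighter answer than the full mode mask, here is a small sketch over a toy expression tree. `Node`, `Op`, and `nonzero_bits_sketch` are hypothetical names, only a handful of operations are modeled, and shift counts are assumed to be less than 64; the real routine handles many more cases.

```cpp
#include <cstdint>

enum class Op { Const, Reg, And, Ior, ShiftLeft };

struct Node {
  Op op;
  std::uint64_t value;  // constant value, or shift count for ShiftLeft
  const Node *lhs;
  const Node *rhs;
};

// Return a mask of the bits of X that may be nonzero within MODE_MASK.
std::uint64_t nonzero_bits_sketch(const Node *x, std::uint64_t mode_mask) {
  switch (x->op) {
    case Op::Const:
      return x->value & mode_mask;
    case Op::And:   // a bit can be set only if it can be set in both operands
      return nonzero_bits_sketch(x->lhs, mode_mask)
             & nonzero_bits_sketch(x->rhs, mode_mask);
    case Op::Ior:   // a bit can be set if it can be set in either operand
      return nonzero_bits_sketch(x->lhs, mode_mask)
             | nonzero_bits_sketch(x->rhs, mode_mask);
    case Op::ShiftLeft:  // possible bits move up by the shift count
      return (nonzero_bits_sketch(x->lhs, mode_mask) << x->value) & mode_mask;
    case Op::Reg:   // nothing is known about an arbitrary register
      break;
  }
  return mode_mask;
}
```

An unknown register masked with `0xFF` is thus known to have at most its low eight bits set, even though the register alone gives no information.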

◆ nonzero_bits_binary_arith_p()

Return true if nonzero_bits1 might recurse into both operands of X.

References ARITHMETIC_P, and GET_CODE.

Referenced by cached_nonzero_bits().

◆ noop_move_p()

Return true if an insn consists only of SETs, each of which only sets a value to itself.

References COND_EXEC_CODE, GET_CODE, i, INSN_CODE, NOOP_MOVE_INSN_CODE, set_of_data::pat, PATTERN(), SET, set_noop_p(), XVECEXP, and XVECLEN.

Referenced by copyprop_hardreg_forward_1(), delete_noop_moves(), delete_unmarked_insns(), distribute_notes(), and find_rename_reg().

◆ note_pattern_stores()

Call FUN on each register or MEM that is stored into or clobbered by X. (X would be the pattern of an insn.) DATA is an arbitrary pointer, ignored by note_pattern_stores, but passed to FUN. FUN receives three arguments:

1. the REG, MEM or PC being stored in or clobbered,
2. the SET or CLOBBER rtx that does the store,
3. the pointer DATA provided to note_pattern_stores.

If the item being stored in or clobbered is a SUBREG of a hard register, the SUBREG will be passed.

References COND_EXEC_CODE, GET_CODE, i, note_pattern_stores(), REG_P, REGNO, SET, SET_DEST, SUBREG_REG, XEXP, XVECEXP, and XVECLEN.

Referenced by cselib_record_sets(), note_pattern_stores(), note_stores(), reload(), set_of(), single_set_gcse(), try_combine(), and update_equiv_regs().
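The callback convention (destination, storing item, opaque DATA pointer) can be sketched on a toy pattern as follows. `Item`, `Kind`, `for_each_store`, and `collect_dest` are hypothetical names; a flat vector stands in for a PARALLEL and a plain integer for the destination rtx, which is a simplification.

```cpp
#include <vector>

enum class Kind { Set, Clobber, Use };

struct Item {
  Kind kind;
  int dest;  // hypothetical register number being stored into or used
};

// Callback type: destination, the item doing the store, caller's data.
using StoreFn = void (*)(int dest, const Item &setter, void *data);

// Call FUN for every store or clobber in PATTERN; DATA is passed through
// untouched, and items that do not store anything are skipped.
void for_each_store(const std::vector<Item> &pattern, StoreFn fun, void *data) {
  for (const Item &item : pattern)
    if (item.kind == Kind::Set || item.kind == Kind::Clobber)
      fun(item.dest, item, data);
}

// Example callback: DATA points at a vector that collects destinations.
void collect_dest(int dest, const Item &, void *data) {
  static_cast<std::vector<int> *>(data)->push_back(dest);
}
```

Passing state through an untyped pointer keeps the walker independent of what each caller wants to record.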

◆ note_stores()

Same as note_pattern_stores, but for an instruction. If the instruction is a call, include any CLOBBERs in its CALL_INSN_FUNCTION_USAGE.

References CALL_INSN_FUNCTION_USAGE, CALL_P, GET_CODE, note_pattern_stores(), PATTERN(), and XEXP.

Referenced by add_with_sets(), adjust_insn(), assign_parm_setup_reg(), build_def_use(), calculate_loop_reg_pressure(), can_move_insns_across(), combine_instructions(), compute_hash_table_work(), copyprop_hardreg_forward_1(), delete_trivially_dead_insns(), doloop_optimize(), emit_inc_dec_insn_before(), emit_libcall_block_1(), emit_output_reload_insns(), expand_atomic_compare_and_swap(), find_all_hard_reg_sets(), find_first_parameter_load(), init_alias_analysis(), insert_one_insn(), insn_invalid_p(), kill_clobbered_values(), likely_spilled_retval_p(), load_killed_in_block_p(), load_killed_in_block_p(), mark_nonreg_stores(), mark_target_live_regs(), memory_modified_in_insn_p(), record_dead_and_set_regs(), record_last_mem_set_info_common(), record_opr_changes(), reg_dead_at_p(), reload_as_needed(), reload_combine(), reload_cse_move2add_invalidate(), replace_read(), save_call_clobbered_regs(), setup_save_areas(), simplify_using_initial_values(), try_shrink_wrapping(), and validate_equiv_mem().

◆ note_uses()

Like note_stores, but call FUN for each expression that is being referenced in PBODY, a pointer to the PATTERN of an insn. We only call FUN for each expression, not any interior subexpressions. FUN receives a pointer to the expression and the DATA passed to this function. Note that this is not quite the same test as that done in reg_referenced_p since that considers something as being referenced if it is being partially set, while we do not.

References ASM_OPERANDS_INPUT, ASM_OPERANDS_INPUT_LENGTH, COND_EXEC_CODE, COND_EXEC_TEST, GET_CODE, i, MEM_P, note_uses(), note_uses(), PATTERN(), SET, SET_DEST, SET_SRC, TRAP_CONDITION, XEXP, XVECEXP, and XVECLEN.

Referenced by add_with_sets(), adjust_insn(), bypass_block(), combine_instructions(), copyprop_hardreg_forward_1(), cprop_insn(), find_call_stack_args(), insert_one_insn(), local_cprop_pass(), note_uses(), scan_insn(), try_shrink_wrapping(), and validate_replace_src_group().

◆ num_sign_bit_copies()

| unsigned int num_sign_bit_copies | ( | const_rtx | x, |

| machine_mode | mode ) |

References cached_num_sign_bit_copies, GET_MODE, is_a(), and NULL_RTX.

Referenced by combine_simplify_rtx(), exact_int_to_float_conversion_p(), extended_count(), force_int_to_mode(), if_then_else_cond(), nonzero_bits1(), record_value_for_reg(), simplify_compare_const(), simplify_comparison(), simplify_const_relational_operation(), simplify_if_then_else(), simplify_set(), simplify_shift_const_1(), simplify_context::simplify_unary_operation_1(), truncated_to_mode(), try_widen_shift_mode(), and update_rsp_from_reg_equal().

◆ num_sign_bit_copies1()

| static |

Return the number of bits at the high-order end of X that are known to be equal to the sign bit. X will be used in mode MODE. The returned value will always be between 1 and the number of bits in MODE.

References as_a(), BITS_PER_WORD, cached_num_sign_bit_copies, CONST_INT_P, constm1_rtx, floor_log2(), GET_CODE, GET_MODE, GET_MODE_MASK, GET_MODE_PRECISION(), HOST_BITS_PER_WIDE_INT, HOST_WIDE_INT_1U, INTVAL, is_a(), load_extend_op(), MAX, MEM_P, MIN, nonzero_bits(), nonzero_bits(), paradoxical_subreg_p(), ptr_mode, rtl_hooks::reg_num_sign_bit_copies, REG_POINTER, STORE_FLAG_VALUE, SUBREG_PROMOTED_SIGNED_P, SUBREG_PROMOTED_VAR_P, SUBREG_REG, target_default_pointer_address_modes_p(), targetm, UINTVAL, word_register_operation_p(), WORD_REGISTER_OPERATIONS, and XEXP.

Referenced by cached_num_sign_bit_copies().
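For a known constant, the quantity described above can be computed directly. The following sketch counts the high-order bits that equal the sign bit; `sign_bit_copies_sketch` is a hypothetical name, and PRECISION (assumed to be between 1 and 64) stands in for the number of bits in MODE.

```cpp
#include <cstdint>

// Count the high-order bits of VALUE, viewed as a PRECISION-bit quantity,
// that are equal to its sign bit. The sign bit itself counts, so the
// result is always between 1 and PRECISION.
unsigned sign_bit_copies_sketch(std::uint64_t value, unsigned precision) {
  std::uint64_t sign = (value >> (precision - 1)) & 1;
  unsigned copies = 1;
  for (int bit = static_cast<int>(precision) - 2; bit >= 0; --bit) {
    if (((value >> bit) & 1) != sign)
      break;  // first bit that differs from the sign bit ends the run
    ++copies;
  }
  return copies;
}
```

A result of N means the value survives truncation to PRECISION - N + 1 bits followed by sign extension, which is why callers use it to drop redundant extensions.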

◆ num_sign_bit_copies_binary_arith_p()

See the macro definition above.

Return true if num_sign_bit_copies1 might recurse into both operands of X.

References ARITHMETIC_P, and GET_CODE.

Referenced by cached_num_sign_bit_copies().

◆ offset_within_block_p()

Return true if SYMBOL is a SYMBOL_REF and OFFSET + SYMBOL points to somewhere in the same object or object_block as SYMBOL.

References CONSTANT_POOL_ADDRESS_P, GET_CODE, GET_MODE_SIZE(), get_pool_mode(), int_size_in_bytes(), SYMBOL_REF_BLOCK, SYMBOL_REF_BLOCK_OFFSET, SYMBOL_REF_DECL, SYMBOL_REF_HAS_BLOCK_INFO_P, and TREE_TYPE.

◆ parms_set()

Helper function for noticing stores to parameter registers.

References CLEAR_HARD_REG_BIT, parms_set_data::nregs, REG_P, REGNO, parms_set_data::regs, and TEST_HARD_REG_BIT.

Referenced by find_first_parameter_load().

◆ pattern_cost()

Calculate the rtx_cost of a single instruction pattern. A return value of zero indicates an instruction pattern without a known cost.

References COSTS_N_INSNS, GET_CODE, GET_MODE, GET_MODE_CLASS, i, MAX, NULL_RTX, SET, SET_DEST, SET_SRC, set_src_cost(), XVECEXP, and XVECLEN.

Referenced by bb_valid_for_noce_process_p(), and insn_cost().

◆ read_modify_subreg_p()

Return true if X is a SUBREG and if storing a value to X would preserve some of its SUBREG_REG. For example, on a normal 32-bit target, using a SUBREG to store to one half of a DImode REG would preserve the other half.

References gcc_checking_assert, GET_CODE, GET_MODE, GET_MODE_SIZE(), maybe_gt, REGMODE_NATURAL_SIZE, and SUBREG_REG.

Referenced by add_regs_to_insn_regno_info(), insn_propagation::apply_to_lvalue_1(), collect_non_operand_hard_regs(), covers_regno_no_parallel_p(), curr_insn_transform(), df_def_record_1(), df_uses_record(), df_word_lr_mark_ref(), expand_field_assignment(), find_single_use_1(), init_subregs_of_mode(), local_cprop_find_used_regs(), mark_pseudo_reg_dead(), mark_pseudo_reg_live(), mark_ref_dead(), mark_referenced_regs(), move_deaths(), reg_referenced_p(), simplify_operand_subreg(), and rtx_properties::try_to_add_dest().
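The 32-bit example can be restated as a size comparison. This is a sketch under the assumption that all sizes are fixed byte counts and that a store is partial when the inner register is larger than both the SUBREG and the target's natural register size; `read_modify_subreg_sketch` and its parameters are hypothetical names.

```cpp
// INNER_SIZE: bytes in the mode of the SUBREG_REG.
// OUTER_SIZE: bytes in the mode of the SUBREG itself.
// NATURAL_REG_SIZE: bytes in one natural register of the target.
bool read_modify_subreg_sketch(unsigned inner_size, unsigned outer_size,
                               unsigned natural_reg_size) {
  // Part of the inner register survives only if the inner value is wider
  // than what is stored and spans more than one natural register.
  return inner_size > outer_size && inner_size > natural_reg_size;
}
```

With 4-byte registers, storing a 4-byte value into an 8-byte register pair leaves the other half intact, so the check returns true; storing into a SUBREG of a single 4-byte register does not.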

◆ record_hard_reg_sets()

This function, called through note_stores, collects sets and clobbers of hard registers in a HARD_REG_SET, which is pointed to by DATA.

References add_to_hard_reg_set(), GET_MODE, HARD_REGISTER_P, REG_P, and REGNO.

Referenced by assign_parm_setup_reg(), find_all_hard_reg_sets(), and try_shrink_wrapping().

◆ record_hard_reg_uses()

| void record_hard_reg_uses | ( | rtx * | px, |

| void * | data ) |

Like record_hard_reg_sets, but called through note_uses.

References find_all_hard_regs().

Referenced by try_shrink_wrapping().

◆ refers_to_regno_p()

Return true if a register in the range [REGNO, ENDREGNO) appears either explicitly or implicitly in X other than being stored into. References contained within the substructure at LOC do not count. LOC may be zero, meaning don't ignore anything.

References END_REGNO(), GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, refers_to_regno_p(), REG_P, REGNO, RTX_CODE, SET, SET_DEST, SET_SRC, subreg_nregs(), SUBREG_REG, subreg_regno(), VIRTUAL_REGISTER_NUM_P, XEXP, XVECEXP, and XVECLEN.

Referenced by delete_output_reload(), df_get_call_refs(), distribute_notes(), first_hazard_after(), pair_fusion_bb_info::fuse_pair(), latest_hazard_before(), move_insn_for_shrink_wrap(), refers_to_regno_p(), refers_to_regno_p(), reg_overlap_mentioned_p(), remove_invalid_refs(), remove_invalid_subreg_refs(), and pair_fusion_bb_info::try_fuse_pair().
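The half-open range [REGNO, ENDREGNO) makes the overlap test against a multi-register reference a two-comparison check. The sketch below shows only that comparison; `overlaps_regno_range` and its parameters are hypothetical names, and the traversal of X is left out.

```cpp
// A reference occupies hard registers [ref_regno, ref_regno + ref_nregs).
// Return true if it overlaps the queried range [regno, endregno).
bool overlaps_regno_range(unsigned ref_regno, unsigned ref_nregs,
                          unsigned regno, unsigned endregno) {
  unsigned ref_end = ref_regno + ref_nregs;
  // Two half-open ranges overlap when each starts before the other ends.
  return endregno > ref_regno && regno < ref_end;
}
```

Because the end bound is exclusive, a query that starts exactly where the reference ends does not count as an overlap.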

◆ reg_mentioned_p()

Return true if register REG appears somewhere within IN. Also works if REG is not a register; in this case it checks for a subexpression of IN that is Lisp "equal" to REG.

References CASE_CONST_ANY, GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, label_ref_label(), reg_mentioned_p(), REG_P, REGNO, rtx_equal_p(), XEXP, XVECEXP, and XVECLEN.

Referenced by assign_parm_setup_reg(), combine_stack_adjustments_for_block(), decrease_live_ranges_number(), delete_dead_insn(), delete_output_reload(), distribute_notes(), emit_output_reload_insns(), emit_push_insn(), emit_store_flag_force(), expand_call(), expand_divmod(), expand_function_start(), extract_integral_bit_field(), find_reloads(), find_split_point(), fold_rtx(), gcse_emit_move_after(), gen_reload_chain_without_interm_reg_p(), if_test_bypass_p(), init_label_info(), init_noce_multiple_sets_info(), lra_delete_dead_insn(), make_safe_from(), maybe_strip_eq_note_for_split_iv(), noce_get_alt_condition(), push_reload(), record_stack_refs(), reg_mentioned_p(), reg_overlap_mentioned_for_reload_p(), reg_overlap_mentioned_p(), rehash_using_reg(), reloads_unique_chain_p(), scan_loop(), simple_mem(), simplify_if_then_else(), store_data_bypass_p_1(), try_back_substitute_reg(), try_combine(), try_replace_reg(), and unmentioned_reg_p().

◆ reg_overlap_mentioned_p()

Return true if modifying X will affect IN. If X is a register or a SUBREG, we check if any register number in X conflicts with the relevant register numbers. If X is a constant, return false. If X is a MEM, return true iff IN contains a MEM (we don't bother checking for memory addresses that can't conflict because we expect this to be a rare case).

References CONSTANT_P, END_REGNO(), gcc_assert, GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, MEM_P, refers_to_regno_p(), reg_mentioned_p(), reg_overlap_mentioned_p(), REGNO, subreg_nregs(), SUBREG_REG, subreg_regno(), XEXP, XVECEXP, and XVECLEN.

Referenced by add_equal_note(), add_removable_extension(), insn_propagation::apply_to_lvalue_1(), insn_propagation::apply_to_rvalue_1(), bb_valid_for_noce_process_p(), can_combine_p(), check_cond_move_block(), check_invalid_inc_dec(), combinable_i3pat(), combine_reaching_defs(), conflicts_with_override(), convert_mode_scalar(), copyprop_hardreg_forward_1(), decrease_live_ranges_number(), delete_prior_computation(), distribute_links(), distribute_notes(), emit_move_complex_parts(), expand_absneg_bit(), expand_asm_stmt(), expand_atomic_compare_and_swap(), expand_binop(), expand_copysign_bit(), expand_expr_real_2(), expand_unop(), fill_slots_from_thread(), find_inc(), gen_reload(), init_alias_analysis(), match_asm_constraints_1(), no_conflict_move_test(), noce_convert_multiple_sets_1(), noce_get_alt_condition(), noce_process_if_block(), noce_try_store_flag_constants(), process_bb_node_lives(), record_value_for_reg(), reg_overlap_mentioned_p(), reg_referenced_p(), reg_used_between_p(), reg_used_on_edge(), resolve_simple_move(), set_of_1(), subst(), try_combine(), pair_fusion_bb_info::try_fuse_pair(), validate_equiv_mem(), and validate_equiv_mem_from_store().

◆ reg_referenced_p()

Return true if the old value of X, a register, is referenced in BODY. If X is entirely replaced by a new value and the only use is as a SET_DEST, we do not consider it a reference.

References ASM_OPERANDS_INPUT, ASM_OPERANDS_INPUT_LENGTH, COND_EXEC_CODE, COND_EXEC_TEST, GET_CODE, i, MEM_P, read_modify_subreg_p(), reg_overlap_mentioned_p(), reg_overlap_mentioned_p(), REG_P, reg_referenced_p(), reg_referenced_p(), SET, SET_DEST, SET_SRC, SUBREG_REG, TRAP_CONDITION, XEXP, XVECEXP, and XVECLEN.

Referenced by can_split_parallel_of_n_reg_sets(), combinable_i3pat(), cse_condition_code_reg(), delete_address_reloads_1(), distribute_links(), distribute_notes(), fill_slots_from_thread(), move_deaths(), note_add_store(), reg_referenced_p(), set_nonzero_bits_and_sign_copies(), try_combine(), and update_reg_dead_notes().

◆ reg_set_between_p()

Return true if register REG is set or clobbered in an insn between FROM_INSN and TO_INSN (exclusive of those two).

References INSN_P, NEXT_INSN(), and reg_set_p().

Referenced by bb_valid_for_noce_process_p(), can_combine_p(), combine_reaching_defs(), distribute_notes(), eliminate_partially_redundant_load(), get_bb_avail_insn(), modified_between_p(), and try_combine().
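The "exclusive of those two" wording describes a walk that starts after FROM_INSN and stops before TO_INSN. A minimal sketch on a toy instruction chain follows; `Insn`, `sets_reg`, and `reg_set_between_sketch` are hypothetical names, and each instruction simply lists the register numbers it sets.

```cpp
#include <vector>

struct Insn {
  bool is_real;           // false for notes and other non-instructions
  std::vector<int> sets;  // register numbers set or clobbered
  const Insn *next;
};

bool sets_reg(const Insn *insn, int reg) {
  for (int r : insn->sets)
    if (r == reg)
      return true;
  return false;
}

// Return true if REG is set strictly between FROM and TO.
bool reg_set_between_sketch(int reg, const Insn *from, const Insn *to) {
  if (from == to)
    return false;  // empty range
  for (const Insn *insn = from->next; insn && insn != to; insn = insn->next)
    if (insn->is_real && sets_reg(insn, reg))
      return true;
  return false;
}
```

Sets performed by the two endpoint instructions themselves are ignored, so callers that care about them must test the endpoints separately.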

◆ reg_set_p()

Return true if REG is set or clobbered inside INSN.

References as_a(), CALL_P, find_reg_fusage(), FIND_REG_INC_NOTE, FOR_EACH_SUBRTX_VAR, GET_CODE, GET_MODE, GET_RTX_CLASS, i, insn_callee_abi(), INSN_P, MEM_P, NULL_RTX, PATTERN(), REG_P, reg_set_p(), REGNO, RTX_AUTOINC, set_of(), stack_pointer_rtx, XEXP, XVECEXP, and XVECLEN.

Referenced by canonicalize_condition(), cse_cc_succs(), cse_change_cc_mode_insns(), cse_condition_code_reg(), decrease_live_ranges_number(), delete_address_reloads_1(), delete_prior_computation(), distribute_links(), distribute_notes(), emit_reload_insns(), fill_slots_from_thread(), find_call_crossed_cheap_reg(), fix_reg_dead_note(), modified_in_p(), move_deaths(), reg_killed_on_edge(), reg_killed_on_edge(), reg_set_between_p(), reg_set_p(), reload_as_needed(), reload_cse_move2add_invalidate(), reload_cse_regs_1(), remove_init_insns(), and try_combine().

◆ reg_used_between_p()

Return true if register REG is used in an insn between FROM_INSN and TO_INSN (exclusive of those two).

References CALL_P, find_reg_fusage(), NEXT_INSN(), NONDEBUG_INSN_P, PATTERN(), and reg_overlap_mentioned_p().

Referenced by can_combine_p(), combine_reaching_defs(), eliminate_partially_redundant_load(), no_conflict_move_test(), and try_combine().

◆ register_asm_p()

Return true if X is register asm.

References DECL_ASSEMBLER_NAME_SET_P, DECL_REGISTER, HAS_DECL_ASSEMBLER_NAME_P, NULL_TREE, REG_EXPR, and REG_P.

Referenced by insn_propagation::apply_to_rvalue_1(), and verify_changes().

◆ regno_use_in()

Searches X for any reference to REGNO, returning the rtx of the reference found if any. Otherwise, returns NULL_RTX.

References GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, NULL_RTX, REG_P, REGNO, regno_use_in(), XEXP, XVECEXP, and XVECLEN.

Referenced by regno_use_in().

◆ remove_node_from_insn_list()

| void remove_node_from_insn_list | ( | const rtx_insn * | node, |

| rtx_insn_list ** | listp ) |

Search LISTP (an INSN_LIST) for an entry whose first operand is NODE and remove that entry from the list if it is found. A simple equality test is used to determine if NODE matches.

References gcc_checking_assert, in_insn_list_p(), rtx_insn_list::insn(), rtx_insn_list::next(), NULL, and XEXP.

Referenced by delete_insn().
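Search and removal by pointer equality can be sketched on a plain singly linked list. `ListNode`, `in_list`, and `remove_from_list` are hypothetical names, and removing only the first matching entry is an assumption of this sketch.

```cpp
struct ListNode {
  const void *insn;  // the entry's first operand
  ListNode *next;
};

// Return true if NODE appears in LIST, comparing by pointer equality.
bool in_list(const ListNode *list, const void *node) {
  for (; list; list = list->next)
    if (list->insn == node)
      return true;
  return false;
}

// Unlink the first entry of *LISTP whose operand is NODE, if any.
void remove_from_list(const void *node, ListNode **listp) {
  for (ListNode **link = listp; *link; link = &(*link)->next)
    if ((*link)->insn == node) {
      *link = (*link)->next;  // bypass the matching entry
      return;
    }
}
```

Walking a pointer to the link, rather than a pointer to the node, lets the head and interior entries be removed by the same code path.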

◆ remove_note()

Remove register note NOTE from the REG_NOTES of INSN.

References df_notes_rescan(), NULL_RTX, REG_NOTE_KIND, REG_NOTES, and XEXP.

Referenced by canonicalize_insn(), cprop_jump(), cse_insn(), dead_or_predicable(), decrease_live_ranges_number(), delete_insn(), emit_delay_sequence(), emit_libcall_block_1(), ext_dce_try_optimize_rshift(), fix_reg_dead_note(), fixup_eh_region_note(), fixup_tail_calls(), force_nonfallthru_and_redirect(), init_label_info(), lra_process_new_insns(), maybe_strip_eq_note_for_split_iv(), merge_notes(), move_invariant_reg(), no_equiv(), noce_process_if_block(), patch_jump_insn(), purge_dead_edges(), redirect_jump_2(), reload_as_needed(), remove_death(), remove_reachable_equiv_notes(), remove_reg_equal_equiv_notes_for_regno(), replace_store_insn(), resolve_reg_notes(), rest_of_clean_state(), try_back_substitute_reg(), try_combine(), try_eliminate_compare(), try_replace_reg(), update_equiv_regs(), update_reg_dead_notes(), and update_reg_unused_notes().

◆ remove_reg_equal_equiv_notes()

Remove REG_EQUAL and/or REG_EQUIV notes if INSN has such notes. If NO_RESCAN is false and any notes were removed, call df_notes_rescan. Return true if any note has been removed.

References df_notes_rescan(), REG_NOTE_KIND, REG_NOTES, and XEXP.

Referenced by adjust_for_new_dest(), ext_dce_try_optimize_extension(), reload_combine_recognize_pattern(), and try_apply_stack_adjustment().

◆ remove_reg_equal_equiv_notes_for_regno()

| void remove_reg_equal_equiv_notes_for_regno | ( | unsigned int | regno | ) |

Remove all REG_EQUAL and REG_EQUIV notes referring to REGNO.

References df, DF_REF_INSN, DF_REG_EQ_USE_CHAIN, find_reg_equal_equiv_note(), gcc_assert, NULL, and remove_note().

Referenced by dead_or_predicable(), and remove_reg_equal_equiv_notes_for_defs().

◆ replace_label()

Replace occurrences of the OLD_LABEL in *LOC with NEW_LABEL. Also track the change in LABEL_NUSES if UPDATE_LABEL_NUSES.

References ALL, CONSTANT_POOL_ADDRESS_P, copy_rtx(), FOR_EACH_SUBRTX_PTR, force_const_mem(), GET_CODE, GET_NUM_ELEM, get_pool_constant(), get_pool_mode(), i, JUMP_LABEL, JUMP_P, JUMP_TABLE_DATA_P, LABEL_NUSES, PATTERN(), replace_label(), replace_rtx(), RTVEC_ELT, rtx_referenced_p(), XEXP, and XVEC.

Referenced by replace_label(), and replace_label_in_insn().

◆ replace_label_in_insn()

| void replace_label_in_insn | ( | rtx_insn * | insn, |

| rtx_insn * | old_label, | ||

| rtx_insn * | new_label, | ||

| bool | update_label_nuses ) |

References gcc_checking_assert, and replace_label().

Referenced by outgoing_edges_match(), and try_crossjump_to_edge().

◆ replace_rtx()

Replace any occurrence of FROM in X with TO. The function does not enter into CONST_DOUBLE for the replace. Note that copying is not done so X must not be shared unless all copies are to be modified. ALL_REGS is true if we want to replace all REGs equal to FROM, not just those pointer-equal ones.

References CONST_SCALAR_INT_P, gcc_assert, GET_CODE, GET_MODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, REG_P, REGNO, replace_rtx(), simplify_subreg(), simplify_unary_operation(), SUBREG_BYTE, SUBREG_REG, XEXP, XVECEXP, and XVECLEN.

Referenced by find_split_point(), match_asm_constraints_1(), record_value_for_reg(), replace_label(), and replace_rtx().

◆ rtx_addr_can_trap_p()

Return true if the use of X as an address in a MEM can cause a trap.

References rtx_addr_can_trap_p_1().

Referenced by find_comparison_args().

◆ rtx_addr_can_trap_p_1()

| static |

Return true if the use of X+OFFSET as an address in a MEM with SIZE bytes can cause a trap. MODE is the mode of the MEM (not that of X) and UNALIGNED_MEMS controls whether true is returned for unaligned memory references on strict alignment machines.

References arg_pointer_rtx, CONSTANT_POOL_ADDRESS_P, crtl, current_function_decl, DECL_P, DECL_SIZE_UNIT, fixed_regs, FRAME_GROWS_DOWNWARD, frame_pointer_rtx, gcc_checking_assert, GET_CODE, get_frame_size(), get_initial_register_offset(), GET_MODE_SIZE(), HARD_FRAME_POINTER_REGNUM, hard_frame_pointer_rtx, int_size_in_bytes(), known_eq, known_ge, known_le, pic_offset_table_rtx, poly_int_rtx_p(), poly_int_tree_p(), PREFERRED_STACK_BOUNDARY, rtx_addr_can_trap_p_1(), STACK_POINTER_OFFSET, stack_pointer_rtx, SYMBOL_REF_DECL, SYMBOL_REF_FUNCTION_P, SYMBOL_REF_WEAK, targetm, TREE_CODE, TREE_STRING_LENGTH, TREE_TYPE, TYPE_SIZE_UNIT, VIRTUAL_REGISTER_P, and XEXP.

Referenced by may_trap_p_1(), rtx_addr_can_trap_p(), and rtx_addr_can_trap_p_1().

◆ rtx_addr_varies_p()

Return true if X refers to a memory location whose address cannot be compared reliably with constant addresses, or if X refers to a BLKmode memory object. FOR_ALIAS is nonzero if we are called from alias analysis; if it is zero, we are slightly more conservative.

References GET_CODE, GET_MODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, rtx_addr_varies_p(), rtx_varies_p(), XEXP, XVECEXP, and XVECLEN.

Referenced by rtx_addr_varies_p().

◆ rtx_cost()

Return an estimate of the cost of computing rtx X. One use is in cse, to decide which expression to keep in the hash table. Another is in rtl generation, to pick the cheapest way to multiply. Other uses like the latter are expected in the future. X appears as operand OPNO in an expression with code OUTER_CODE. SPEED specifies whether costs optimized for speed or size should be returned.

References COSTS_N_INSNS, estimated_poly_value(), GET_CODE, GET_MODE, GET_MODE_SIZE(), GET_RTX_FORMAT, GET_RTX_LENGTH, i, mode_size, rtx_cost(), rtx_cost(), SET, SET_DEST, SUBREG_REG, targetm, XEXP, XVECEXP, and XVECLEN.

Referenced by avoid_expensive_constant(), canonicalize_comparison(), default_address_cost(), emit_conditional_move(), emit_store_flag(), emit_store_flag_int(), get_full_rtx_cost(), maybe_optimize_mod_cmp(), maybe_optimize_pow2p_mod_cmp(), noce_try_sign_bit_splat(), notreg_cost(), prefer_and_bit_test(), prepare_cmp_insn(), rtx_cost(), set_rtx_cost(), and set_src_cost().

◆ rtx_referenced_p()

Return true if X is referenced in BODY.

References ALL, CONSTANT_POOL_ADDRESS_P, FOR_EACH_SUBRTX, GET_CODE, get_pool_constant(), LABEL_P, label_ref_label(), rtx_equal_p(), and y.

Referenced by analyze_insn_to_expand_var(), outgoing_edges_match(), referenced_in_one_insn_in_loop_p(), replace_label(), reset_debug_uses_in_loop(), and try_combine().

◆ rtx_unstable_p()

Return true if the value of X is unstable (would be different at a different point in the program). The frame pointer, arg pointer, etc. are considered stable (within one function) and so is anything marked `unchanging'.

References arg_pointer_rtx, CASE_CONST_ANY, fixed_regs, frame_pointer_rtx, GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, hard_frame_pointer_rtx, i, MEM_READONLY_P, MEM_VOLATILE_P, PIC_OFFSET_TABLE_REG_CALL_CLOBBERED, pic_offset_table_rtx, RTX_CODE, rtx_unstable_p(), rtx_unstable_p(), XEXP, XVECEXP, and XVECLEN.

Referenced by rtx_unstable_p().

◆ rtx_varies_p()

Return true if X has a value that can vary even between two executions of the program. false means X can be compared reliably against certain constants or near-constants. FOR_ALIAS is nonzero if we are called from alias analysis; if it is zero, we are slightly more conservative. The frame pointer and the arg pointer are considered constant.

References arg_pointer_rtx, CASE_CONST_ANY, fixed_regs, frame_pointer_rtx, GET_CODE, GET_RTX_FORMAT, GET_RTX_LENGTH, hard_frame_pointer_rtx, i, MEM_READONLY_P, MEM_VOLATILE_P, PIC_OFFSET_TABLE_REG_CALL_CLOBBERED, pic_offset_table_rtx, RTX_CODE, rtx_varies_p(), rtx_varies_p(), XEXP, XVECEXP, and XVECLEN.

Referenced by equiv_init_movable_p(), equiv_init_varies_p(), init_alias_analysis(), make_memloc(), rtx_addr_varies_p(), rtx_varies_p(), and update_equiv_regs().

◆ seq_cost()

Return an estimate of the cost of computing SEQ.

References insn_cost(), NEXT_INSN(), NONDEBUG_INSN_P, set_rtx_cost(), and single_set().

Referenced by attempt_change(), computation_cost(), default_noce_conversion_profitable_p(), expand_ccmp_expr_1(), expand_expr_divmod(), expand_expr_real_2(), expand_POPCOUNT(), init_set_costs(), maybe_optimize_mod_cmp(), maybe_optimize_pow2p_mod_cmp(), noce_try_cond_arith(), and try_emit_cmove_seq().
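As a rough illustration of costing a sequence, the sketch below sums per-instruction costs over a list while skipping debug instructions. It is a toy model under stated assumptions: `ToyInsn` and `toy_seq_cost` are hypothetical, each instruction carries a precomputed cost, and a non-positive cost is counted as 1 so that every real instruction contributes something. None of this is taken from the GCC implementation.

```cpp
#include <vector>

// Hypothetical stand-in for an insn in a sequence.
struct ToyInsn {
  bool is_debug;  // debug insns are assumed to cost nothing
  int cost;       // assumed precomputed cost; <= 0 means "unknown"
};

// Sum the costs of all non-debug insns, charging at least 1 for each.
unsigned toy_seq_cost(const std::vector<ToyInsn> &seq) {
  unsigned total = 0;
  for (const ToyInsn &insn : seq) {
    if (insn.is_debug)
      continue;
    total += insn.cost > 0 ? static_cast<unsigned>(insn.cost) : 1u;
  }
  return total;
}
```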

◆ set_address_base()

|

static |

Set the base part of address INFO to LOC, given that INNER is the unmutated value.

References gcc_assert.

Referenced by decompose_automod_address(), decompose_incdec_address(), and decompose_normal_address().

◆ set_address_disp()

|

static |

Set the displacement part of address INFO to LOC, given that INNER is the constant term.

References gcc_assert.

Referenced by decompose_automod_address(), and decompose_normal_address().

◆ set_address_index()

|

static |

Set the index part of address INFO to LOC, given that INNER is the unmutated value.

References gcc_assert.

Referenced by decompose_automod_address(), and decompose_normal_address().

◆ set_address_segment()

|

static |

Set the segment part of address INFO to LOC, given that INNER is the unmutated value.

References gcc_assert.

Referenced by decompose_normal_address().

◆ set_noop_p()

Return true if the destination of SET equals the source and there are no side effects.

References const0_rtx, GET_CODE, GET_MODE, GET_MODE_UNIT_SIZE, HARD_REGISTER_P, i, MEM_P, pc_rtx, poly_int_rtx_p(), REG_CAN_CHANGE_MODE_P, REG_P, REGNO, rtx_equal_p(), SET_DEST, SET_SRC, side_effects_p(), simplify_subreg_regno(), SUBREG_BYTE, SUBREG_REG, validate_subreg(), XEXP, XVECEXP, and XVECLEN.

Referenced by eliminate_partially_redundant_load(), hash_scan_set(), hash_scan_set(), lra_coalesce(), noop_move_p(), recog_for_combine_1(), set_live_p(), split_all_insns(), split_all_insns_noflow(), and try_combine().

◆ set_of()

Given an INSN, return a SET or CLOBBER expression that does modify PAT (either directly or via STRICT_LOW_PART and similar modifiers).

References INSN_P, note_pattern_stores(), NULL_RTX, set_of_data::pat, PATTERN(), and set_of_1().

Referenced by canonicalize_condition(), check_cond_move_block(), end_ifcvt_sequence(), get_defs(), insn_valid_noce_process_p(), reg_set_p(), and reversed_comparison_code_parts().

◆ set_of_1()

Analyze RTL for GNU compiler. Copyright (C) 1987-2026 Free Software Foundation, Inc. This file is part of GCC. GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version. GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

Forward declarations

References MEM_P, set_of_data::pat, reg_overlap_mentioned_p(), and rtx_equal_p().

Referenced by set_of().

◆ setup_reg_subrtx_bounds()

|

static |

Return true if RTX code CODE has a single sequence of zero or more "e" operands and no rtvec operands. Initialize its rtx_all_subrtx_bounds entry in that case.

References count, gcc_checking_assert, GET_RTX_FORMAT, i, and rtx_all_subrtx_bounds.

Referenced by init_rtlanal().

◆ side_effects_p()

Similar to volatile_refs_p(), except that it also rejects register pre- and post-incrementing.

References CASE_CONST_ANY, GET_CODE, GET_MODE, GET_RTX_FORMAT, GET_RTX_LENGTH, i, MEM_VOLATILE_P, RTX_CODE, side_effects_p(), XEXP, XVECEXP, and XVECLEN.

Referenced by add_insn_allocno_copies(), can_combine_p(), can_split_parallel_of_n_reg_sets(), check_cond_move_block(), combine_instructions(), combine_simplify_rtx(), copyprop_hardreg_forward_1(), count_reg_usage(), cse_insn(), cselib_add_permanent_equiv(), cselib_record_set(), delete_dead_insn(), delete_prior_computation(), delete_trivially_dead_insns(), distribute_notes(), drop_writeback(), eliminate_partially_redundant_loads(), emit_conditional_move_1(), expand_builtin_prefetch(), fill_slots_from_thread(), find_moveable_store(), find_split_point(), flow_find_cross_jump(), fold_rtx(), force_to_mode(), get_reload_reg(), if_then_else_cond(), interesting_dest_for_shprep(), invariant_p(), known_cond(), lra_coalesce(), lra_delete_dead_insn(), match_plus_neg_pattern(), maybe_legitimize_operand_same_code(), noce_get_condition(), noce_operand_ok(), noce_process_if_block(), noce_try_sign_mask(), non_conflicting_reg_copy_p(), onlyjump_p(), recompute_constructor_flags(), record_set_data(), reload_cse_simplify_operands(), reload_cse_simplify_set(), resolve_simple_move(), rtl_can_remove_branch_p(), scan_one_insn(), set_live_p(), set_noop_p(), set_unique_reg_note(), side_effects_p(), simple_mem(), simplify_and_const_int_1(), simplify_context::simplify_binary_operation_1(), simplify_const_relational_operation(), simplify_context::simplify_distributive_operation(), simplify_if_then_else(), simplify_context::simplify_logical_relational_operation(), simplify_context::simplify_merge_mask(), simplify_context::simplify_relational_operation_1(), simplify_set(), simplify_shift_const_1(), simplify_context::simplify_ternary_operation(), simplify_context::simplify_unary_operation_1(), single_set_2(), store_expr(), thread_jump(), try_combine(), try_eliminate_compare(), try_merge_compare(), try_redirect_by_replacing_jump(), update_equiv_regs(), validate_equiv_mem(), and verify_constructor_flags().

◆ simple_regno_set()

Check whether instruction pattern PAT contains a SET with the following properties:

- the SET is executed unconditionally; and
- either:
  - the destination of the SET is a REG that contains REGNO; or
  - both:
    - the destination of the SET is a SUBREG of such a REG; and
    - writing to the subreg clobbers all of the SUBREG_REG (in other words, read_modify_subreg_p is false).

If PAT does have a SET like that, return the set, otherwise return null.

This is intended to be an alternative to single_set for passes that can handle patterns with multiple_sets.

References covers_regno_no_parallel_p(), GET_CODE, i, last, set_of_data::pat, SET, SET_DEST, simple_regno_set(), XVECEXP, and XVECLEN.

Referenced by forward_propagate_into(), and simple_regno_set().
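The conditions listed for simple_regno_set() can be read as a small predicate. The sketch below is a toy model, not the GCC implementation: `ToySet` and `toy_simple_regno_set` are hypothetical, a pattern is flattened to a vector of SETs, and each destination is assumed to name exactly one register (the real check also covers multi-register REGs).

```cpp
#include <vector>

// Hypothetical summary of one SET inside a pattern.
struct ToySet {
  bool conditional;         // true if the SET only executes under a condition
  unsigned dest_regno;      // register written, directly or through a subreg
  bool dest_is_subreg;      // destination is a SUBREG of that register
  bool read_modify_subreg;  // the subreg write preserves part of the old value
};

// Return the first SET that unconditionally overwrites all of REGNO,
// or nullptr if the pattern has no such SET.
const ToySet *toy_simple_regno_set(const std::vector<ToySet> &pat,
                                   unsigned regno) {
  for (const ToySet &set : pat) {
    if (set.conditional || set.dest_regno != regno)
      continue;
    // A plain REG destination qualifies; a SUBREG destination qualifies
    // only when the write clobbers the whole inner register.
    if (!set.dest_is_subreg || !set.read_modify_subreg)
      return &set;
  }
  return nullptr;
}
```

The pointer return mirrors the "return the set, otherwise return null" contract described above.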

◆ simplify_subreg_regno()

| int simplify_subreg_regno | ( | unsigned int | xregno, |

| machine_mode | xmode, | ||

| poly_uint64 | offset, | ||

| machine_mode | ymode, | ||

| bool | allow_stack_regs ) |

Return the number of a YMODE register to which

(subreg:YMODE (reg:XMODE XREGNO) OFFSET)

can be simplified. Return -1 if the subreg can't be simplified.

XREGNO is a hard register number. ALLOW_STACK_REGS is true if we should allow subregs of stack_pointer_rtx, frame_pointer_rtx, and arg_pointer_rtx (which are normally expected to be the unique way of referring to their respective registers).

References frame_pointer_needed, GET_MODE_CLASS, HARD_REGISTER_NUM_P, lra_in_progress, subreg_info::offset, REG_CAN_CHANGE_MODE_P, reload_completed, subreg_info::representable_p, subreg_get_info(), and targetm.

Referenced by can_decompose_p(), curr_insn_transform(), find_reloads(), ira_build_conflicts(), lowpart_subreg_regno(), operands_match_p(), process_single_reg_class_operands(), set_noop_p(), simplifiable_subregs(), simplify_operand_subreg(), simplify_context::simplify_subreg(), subst(), and validate_subreg().

◆ single_output_fused_pair_p()

Return true if INSN is the second element of a pair of macro-fused single_sets, both of which have the same register output as one another.

References INSN_P, NULL_RTX, prev_nonnote_nondebug_insn(), REG_P, REGNO, reload_completed, SCHED_GROUP_P, SET_DEST, and single_set().

Referenced by add_insn_allocno_copies(), and scan_rtx_reg().

◆ single_set_2()

Given an INSN, return a SET expression if this insn has only a single SET. It may also have CLOBBERs, USEs, or SETs whose output will not be used, all of which we ignore.

References df, df_note, DF_REG_USE_COUNT, find_reg_note(), GET_CODE, HARD_REGISTER_P, i, NULL, NULL_RTX, set_of_data::pat, REG_P, REGNO, SET, SET_DEST, side_effects_p(), XVECEXP, and XVECLEN.

Referenced by single_set().
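The "only a single SET that matters" rule can be sketched as follows. This is a simplified toy, not the GCC code: `ToyElt` and `toy_single_set` are hypothetical, the "output will not be used" test is reduced to a flag, and the toy returns null when no live SET exists, which may differ from what the real function does in that corner case.

```cpp
#include <vector>

enum class ToyKind { Set, Clobber, Use };

// Hypothetical element of a multi-part pattern.
struct ToyElt {
  ToyKind kind;
  bool output_unused;  // for a Set: the value written is never read later
};

// Return the one SET whose result is used, ignoring CLOBBERs, USEs and
// dead SETs. Return nullptr if there is no such SET or more than one.
const ToyElt *toy_single_set(const std::vector<ToyElt> &parts) {
  const ToyElt *found = nullptr;
  for (const ToyElt &elt : parts) {
    if (elt.kind != ToyKind::Set || elt.output_unused)
      continue;
    if (found)
      return nullptr;  // a second live SET: not a single-set pattern
    found = &elt;
  }
  return found;
}
```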

◆ split_const()

Split X into a base and a constant offset, storing them in *BASE_OUT and *OFFSET_OUT respectively.

References const0_rtx, CONST_INT_P, GET_CODE, and XEXP.

Referenced by insn_propagation::apply_to_rvalue_1(), and simplify_replace_fn_rtx().

◆ split_double()

Split up a CONST_DOUBLE or integer constant rtx into two rtx's for single words, storing in *FIRST the word that comes first in memory in the target and in *SECOND the other. TODO: This function needs to be rewritten to work on any size integer.

References BITS_PER_WORD, const0_rtx, CONST_DOUBLE_HIGH, CONST_DOUBLE_LOW, CONST_DOUBLE_P, CONST_DOUBLE_REAL_VALUE, CONST_INT_P, CONST_WIDE_INT_ELT, CONST_WIDE_INT_NUNITS, constm1_rtx, gcc_assert, GEN_INT, GET_CODE, GET_MODE, GET_MODE_CLASS, HOST_BITS_PER_LONG, HOST_BITS_PER_WIDE_INT, INTVAL, and REAL_VALUE_TO_TARGET_DOUBLE.

Referenced by emit_group_load_1().
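To make "the word that comes first in memory" concrete, here is a minimal sketch on plain integers. It assumes 32-bit words and a 64-bit value, and takes the target's word order as a hypothetical boolean parameter; `toy_split_double` is not a GCC function and does not handle floating-point constants.

```cpp
#include <cstdint>
#include <utility>

// Split a 64-bit value into two 32-bit words. The first element of the
// result is the word stored at the lower address on the assumed target.
std::pair<std::uint32_t, std::uint32_t>
toy_split_double(std::uint64_t value, bool words_big_endian) {
  const std::uint32_t low = static_cast<std::uint32_t>(value & 0xFFFFFFFFu);
  const std::uint32_t high = static_cast<std::uint32_t>(value >> 32);
  // With big-endian word order the high word comes first in memory.
  return words_big_endian ? std::make_pair(high, low)
                          : std::make_pair(low, high);
}
```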

◆ strip_address_mutations()

Strip outer address "mutations" from LOC and return a pointer to the inner value. If OUTER_CODE is nonnull, store the code of the innermost stripped expression there. "Mutations" either convert between modes or apply some kind of extension, truncation or alignment.

References CONST_INT_P, CONSTANT_P, GET_CODE, GET_RTX_CLASS, lsb_bitfield_op_p(), OBJECT_P, RTX_UNARY, subreg_lowpart_p(), SUBREG_REG, and XEXP.

Referenced by decompose_address(), decompose_automod_address(), decompose_normal_address(), get_base_term(), and get_index_term().
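The stripping loop can be pictured with a toy chain of wrappers. In the sketch below, `ToyNode`, `ToyCode` and `toy_strip_mutations` are hypothetical; every non-register code is assumed to be a "mutation", and the function works on node pointers rather than on pointers to rtx locations as the real one does.

```cpp
#include <memory>

// Hypothetical codes: Reg is a leaf, everything else wraps one inner node.
enum class ToyCode { Reg, ZeroExtend, SignExtend, Truncate, AlignAnd };

struct ToyNode {
  ToyCode code;
  std::shared_ptr<const ToyNode> inner;  // null for Reg
};

bool toy_is_mutation(ToyCode code) { return code != ToyCode::Reg; }

// Peel off outer mutations and return the innermost non-mutation node.
// If OUTER_CODE is non-null, it receives the code of the innermost
// stripped wrapper; it is left untouched when nothing is stripped.
const ToyNode *toy_strip_mutations(const ToyNode *loc,
                                   ToyCode *outer_code = nullptr) {
  while (toy_is_mutation(loc->code) && loc->inner) {
    if (outer_code)
      *outer_code = loc->code;  // overwritten each step, so the innermost wins
    loc = loc->inner.get();
  }
  return loc;
}
```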

◆ strip_offset()

| rtx strip_offset | ( | rtx | x, |

| poly_int64 * | offset_out ) |

Express integer value X as some value Y plus a polynomial offset, where Y is either const0_rtx, X or something within X (as opposed to a new rtx). Return the Y and store the offset in *OFFSET_OUT.
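The three possible results for Y (const0_rtx, X itself, or something within X) can be sketched on a toy expression type. Everything here is hypothetical: `ToyExpr`, the `toy_*` helpers and the use of a plain `long` in place of a polynomial offset are assumptions made for illustration, and `toy_zero()` merely plays the role of a shared zero constant.

```cpp
#include <memory>
#include <utility>

struct ToyExpr;
using ToyExprPtr = std::shared_ptr<const ToyExpr>;

// Hypothetical expression: a register, an integer, or a sum of two operands.
struct ToyExpr {
  enum Kind { Reg, Int, Plus } kind;
  long value;      // meaningful for Int only
  ToyExprPtr lhs;  // meaningful for Plus only
  ToyExprPtr rhs;  // meaningful for Plus only
};

ToyExprPtr toy_reg() {
  return std::make_shared<ToyExpr>(ToyExpr{ToyExpr::Reg, 0, nullptr, nullptr});
}
ToyExprPtr toy_int(long v) {
  return std::make_shared<ToyExpr>(ToyExpr{ToyExpr::Int, v, nullptr, nullptr});
}
ToyExprPtr toy_plus(ToyExprPtr a, ToyExprPtr b) {
  return std::make_shared<ToyExpr>(
      ToyExpr{ToyExpr::Plus, 0, std::move(a), std::move(b)});
}

// Shared zero constant, standing in for const0_rtx.
const ToyExprPtr &toy_zero() {
  static const ToyExprPtr zero = toy_int(0);
  return zero;
}

// Return (Y, offset) such that X == Y + offset, without building new nodes.
std::pair<ToyExprPtr, long> toy_strip_offset(const ToyExprPtr &x) {
  if (x->kind == ToyExpr::Int)
    return {toy_zero(), x->value};       // Y is the zero constant
  if (x->kind == ToyExpr::Plus && x->rhs->kind == ToyExpr::Int)
    return {x->lhs, x->rhs->value};      // Y is something within X
  return {x, 0};                         // Y is X itself
}
```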

References const0_rtx, GET_CODE, poly_int_rtx_p(), and XEXP.