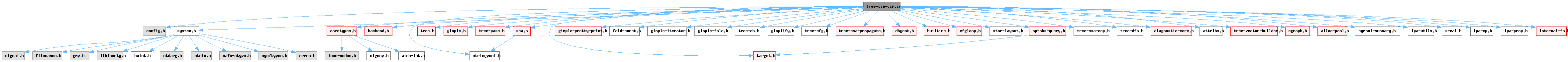

#include "config.h"#include "system.h"#include "coretypes.h"#include "backend.h"#include "target.h"#include "tree.h"#include "gimple.h"#include "tree-pass.h"#include "ssa.h"#include "gimple-pretty-print.h"#include "fold-const.h"#include "gimple-iterator.h"#include "gimple-fold.h"#include "tree-eh.h"#include "gimplify.h"#include "tree-cfg.h"#include "tree-ssa-propagate.h"#include "dbgcnt.h"#include "builtins.h"#include "cfgloop.h"#include "stor-layout.h"#include "optabs-query.h"#include "tree-ssa-ccp.h"#include "tree-dfa.h"#include "diagnostic-core.h"#include "stringpool.h"#include "attribs.h"#include "tree-vector-builder.h"#include "cgraph.h"#include "alloc-pool.h"#include "symbol-summary.h"#include "ipa-utils.h"#include "sreal.h"#include "ipa-cp.h"#include "ipa-prop.h"#include "internal-fn.h"#include "gimple-range.h"#include "tree-ssa-strlen.h"

Data Structures | |

| class | ccp_prop_value_t |

| class | ccp_propagate |

| class | ccp_folder |

Typedefs | |

| typedef hash_table< nofree_ptr_hash< gimple > > | gimple_htab |

Enumerations | |

| enum | ccp_lattice_t { UNINITIALIZED , UNDEFINED , CONSTANT , VARYING } |

Variables | |

| static ccp_prop_value_t * | const_val |

| static unsigned | n_const_val |

| static const unsigned char | gray_code_bit_flips [63] |

Typedef Documentation

◆ gimple_htab

| typedef hash_table<nofree_ptr_hash<gimple> > gimple_htab |

Enumeration Type Documentation

◆ ccp_lattice_t

| enum ccp_lattice_t |

Conditional constant propagation pass for the GNU compiler. Copyright (C) 2000-2026 Free Software Foundation, Inc. Adapted from original RTL SSA-CCP by Daniel Berlin <dberlin@dberlin.org> Adapted to GIMPLE trees by Diego Novillo <dnovillo@redhat.com> This file is part of GCC. GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version. GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

Conditional constant propagation (CCP) is based on the SSA

propagation engine (tree-ssa-propagate.cc). Constant assignments of

the form VAR = CST are propagated from the assignments into uses of

VAR, which in turn may generate new constants. The simulation uses

a four level lattice to keep track of constant values associated

with SSA names. Given an SSA name V_i, it may take one of the

following values:

UNINITIALIZED -> the initial state of the value. This value

is replaced with a correct initial value

the first time the value is used, so the

rest of the pass does not need to care about

it. Using this value simplifies initialization

of the pass, and prevents us from needlessly

scanning statements that are never reached.

UNDEFINED -> V_i is a local variable whose definition

has not been processed yet. Therefore we

don't yet know if its value is a constant

or not.

CONSTANT -> V_i has been found to hold a constant

value C.

VARYING -> V_i cannot take a constant value, or if it

does, it is not possible to determine it

at compile time.

The core of SSA-CCP is in ccp_visit_stmt and ccp_visit_phi_node:

1- In ccp_visit_stmt, we are interested in assignments whose RHS

evaluates into a constant and conditional jumps whose predicate

evaluates into a boolean true or false. When an assignment of

the form V_i = CONST is found, V_i's lattice value is set to

CONSTANT and CONST is associated with it. This causes the

propagation engine to add all the SSA edges coming out the

assignment into the worklists, so that statements that use V_i

can be visited.

If the statement is a conditional with a constant predicate, we

mark the outgoing edges as executable or not executable

depending on the predicate's value. This is then used when

visiting PHI nodes to know when a PHI argument can be ignored.

2- In ccp_visit_phi_node, if all the PHI arguments evaluate to the

same constant C, then the LHS of the PHI is set to C. This

evaluation is known as the "meet operation". Since one of the

goals of this evaluation is to optimistically return constant

values as often as possible, it uses two main short cuts:

- If an argument is flowing in through a non-executable edge, it

is ignored. This is useful in cases like this:

if (PRED)

a_9 = 3;

else

a_10 = 100;

a_11 = PHI (a_9, a_10)

If PRED is known to always evaluate to false, then we can

assume that a_11 will always take its value from a_10, meaning

that instead of consider it VARYING (a_9 and a_10 have

different values), we can consider it CONSTANT 100.

- If an argument has an UNDEFINED value, then it does not affect

the outcome of the meet operation. If a variable V_i has an

UNDEFINED value, it means that either its defining statement

hasn't been visited yet or V_i has no defining statement, in

which case the original symbol 'V' is being used

uninitialized. Since 'V' is a local variable, the compiler

may assume any initial value for it.

After propagation, every variable V_i that ends up with a lattice

value of CONSTANT will have the associated constant value in the

array CONST_VAL[i].VALUE. That is fed into substitute_and_fold for

final substitution and folding.

This algorithm uses wide-ints at the max precision of the target.

This means that, with one uninteresting exception, variables with

UNSIGNED types never go to VARYING because the bits above the

precision of the type of the variable are always zero. The

uninteresting case is a variable of UNSIGNED type that has the

maximum precision of the target. Such variables can go to VARYING,

but this causes no loss of infomation since these variables will

never be extended.

References:

Constant propagation with conditional branches,

Wegman and Zadeck, ACM TOPLAS 13(2):181-210.

Building an Optimizing Compiler,

Robert Morgan, Butterworth-Heinemann, 1998, Section 8.9.

Advanced Compiler Design and Implementation,

Steven Muchnick, Morgan Kaufmann, 1997, Section 12.6 Possible lattice values.

| Enumerator | |

|---|---|

| UNINITIALIZED | |

| UNDEFINED | |

| CONSTANT | |

| VARYING | |

Function Documentation

◆ bit_value_assume_aligned()

|

static |

Return the propagation value for __builtin_assume_aligned and functions with assume_aligned or alloc_aligned attribute. For __builtin_assume_aligned, ATTR is NULL_TREE, for assume_aligned attribute ATTR is non-NULL and ALLOC_ALIGNED is false, for alloc_aligned attribute ATTR is non-NULL and ALLOC_ALIGNED is true.

References bit_value_binop(), build_int_cst_type(), CONSTANT, gcc_assert, get_value_for_expr(), gimple_call_arg(), gimple_call_lhs(), gimple_call_num_args(), ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, NULL_TREE, wi::sext(), generic_wide_int< storage >::to_uhwi(), TREE_CHAIN, TREE_CODE, tree_fits_uhwi_p(), tree_to_uhwi(), TREE_TYPE, TREE_VALUE, TYPE_PRECISION, TYPE_SIGN, UNDEFINED, ccp_prop_value_t::value, value_to_wide_int(), VARYING, and wide_int_to_tree().

Referenced by evaluate_stmt(), and ccp_folder::fold_stmt().

◆ bit_value_binop() [1/2]

| void bit_value_binop | ( | enum tree_code | code, |

| signop | sgn, | ||

| int | width, | ||

| widest_int * | val, | ||

| widest_int * | mask, | ||

| signop | r1type_sgn, | ||

| int | r1type_precision, | ||

| const widest_int & | r1val, | ||

| const widest_int & | r1mask, | ||

| signop | r2type_sgn, | ||

| int | r2type_precision, | ||

| const widest_int & | r2val, | ||

| const widest_int & | r2mask ) |

Apply the operation CODE in type TYPE to the value, mask pairs R1VAL, R1MASK and R2VAL, R2MASK representing a values of type R1TYPE and R2TYPE and set the value, mask pair *VAL and *MASK to the result.

References wi::bit_and_not(), wi::bit_not(), bit_value_binop(), bit_value_mult_const(), wi::clrsb(), wi::clz(), wi::cmp(), count, wi::ctz(), wi::exact_log2(), wi::ext(), get_individual_bits(), wi::get_precision(), gray_code_bit_flips, i, wi::lrotate(), wi::lrshift(), wi::lshift(), wi::ltu_p(), wi::mask(), wi::neg_p(), wi::popcount(), wi::rrotate(), wi::rshift(), wi::sext(), shift, swap_tree_comparison(), wi::udiv_trunc(), UNSIGNED, value_mask_to_min_max(), and wi::zext().

◆ bit_value_binop() [2/2]

|

static |

Return the propagation value when applying the operation CODE to the values RHS1 and RHS2 yielding type TYPE.

References wi::bit_and_not(), bit_value_binop(), CONSTANT, gcc_assert, get_value_for_expr(), ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, NULL_TREE, wi::sext(), TREE_CODE, TREE_TYPE, TYPE_PRECISION, TYPE_SIGN, UNDEFINED, ccp_prop_value_t::value, value_to_wide_int(), VARYING, and wide_int_to_tree().

Referenced by bit_value_assume_aligned(), bit_value_binop(), bit_value_binop(), bit_value_unop(), evaluate_stmt(), ipcp_bits_lattice::meet_with(), and update_known_bitmask().

◆ bit_value_mult_const()

|

static |

Determine the mask pair *VAL and *MASK from multiplying the argument mask pair RVAL, RMASK by the unsigned constant C.

References wi::bit_and_not(), wi::ctz(), wi::ext(), and wi::lshift().

Referenced by bit_value_binop().

◆ bit_value_unop() [1/2]

| void bit_value_unop | ( | enum tree_code | code, |

| signop | type_sgn, | ||

| int | type_precision, | ||

| widest_int * | val, | ||

| widest_int * | mask, | ||

| signop | rtype_sgn, | ||

| int | rtype_precision, | ||

| const widest_int & | rval, | ||

| const widest_int & | rmask ) |

Apply the operation CODE in type TYPE to the value, mask pair RVAL and RMASK representing a value of type RTYPE and set the value, mask pair *VAL and *MASK to the result.

References bit_value_binop(), bit_value_unop(), CASE_CONVERT, wi::ext(), wi::neg_p(), and wi::sext().

Referenced by bit_value_unop(), bit_value_unop(), evaluate_stmt(), ipcp_bits_lattice::meet_with(), and update_known_bitmask().

◆ bit_value_unop() [2/2]

|

static |

Return the propagation value when applying the operation CODE to the value RHS yielding type TYPE.

References bit_value_unop(), CONSTANT, gcc_assert, get_value_for_expr(), ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, NULL_TREE, wi::sext(), TREE_CODE, TREE_TYPE, TYPE_PRECISION, TYPE_SIGN, UNDEFINED, ccp_prop_value_t::value, value_to_wide_int(), VARYING, and wide_int_to_tree().

◆ canonicalize_value()

|

static |

For integer constants, make sure to drop TREE_OVERFLOW.

References CONSTANT, drop_tree_overflow(), ccp_prop_value_t::lattice_val, TREE_OVERFLOW_P, and ccp_prop_value_t::value.

Referenced by get_value(), get_value_for_expr(), and set_lattice_value().

◆ ccp_finalize()

Do final substitution of propagated values, cleanup the flowgraph and free allocated storage. If NONZERO_P, record nonzero bits. Return TRUE when something was optimized.

References cfun, const_val, CONSTANT, do_dbg_cnt(), FOR_EACH_SSA_NAME, free(), wide_int_storage::from(), get_ptr_info(), get_value(), i, INTEGRAL_TYPE_P, ccp_prop_value_t::lattice_val, least_bit_hwi(), ccp_prop_value_t::mask, NULL, POINTER_TYPE_P, set_bitmask(), set_ptr_info_alignment(), substitute_and_fold_engine::substitute_and_fold(), generic_wide_int< storage >::to_uhwi(), wi::to_wide(), TREE_CODE, TREE_INT_CST_LOW, TREE_TYPE, TYPE_PRECISION, UNSIGNED, and ccp_prop_value_t::value.

Referenced by do_ssa_ccp().

◆ ccp_fold()

CCP specific front-end to the non-destructive constant folding routines. Attempt to simplify the RHS of STMT knowing that one or more operands are constants. If simplification is possible, return the simplified RHS, otherwise return the original RHS or NULL_TREE.

References as_a(), gcc_unreachable, gimple_fold_stmt_to_constant_1(), gimple_switch_index(), valueize_op(), and valueize_op_1().

Referenced by evaluate_stmt().

◆ ccp_initialize()

|

static |

Initialize local data structures for CCP.

References cfun, const_val, FOR_EACH_BB_FN, FOR_EACH_SSA_TREE_OPERAND, gimple_phi_result(), gsi_end_p(), gsi_next(), gsi_start_bb(), gsi_start_phis(), gsi_stmt(), i, n_const_val, num_ssa_names, prop_set_simulate_again(), set_value_varying(), SSA_OP_ALL_DEFS, stmt_ends_bb_p(), surely_varying_stmt_p(), and virtual_operand_p().

Referenced by do_ssa_ccp().

◆ ccp_lattice_meet()

|

static |

Compute the meet operator between *VAL1 and *VAL2. Store the result

in VAL1.

any M UNDEFINED = any

any M VARYING = VARYING

Ci M Cj = Ci if (i == j)

Ci M Cj = VARYING if (i != j)

References ccp_lattice_meet(), CONSTANT, get_value_for_expr(), ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, NULL_TREE, operand_equal_p(), wi::sext(), wi::to_widest(), TREE_CODE, TREE_TYPE, TYPE_PRECISION, UNDEFINED, ccp_prop_value_t::value, and VARYING.

Referenced by ccp_lattice_meet(), set_lattice_value(), and ccp_propagate::visit_phi().

◆ debug_lattice_value()

| DEBUG_FUNCTION void debug_lattice_value | ( | ccp_prop_value_t | val | ) |

Print lattice value VAL to stderr.

References dump_lattice_value().

◆ do_dbg_cnt()

|

static |

Debug count support. Reset the values of ssa names VARYING when the total number ssa names analyzed is beyond the debug count specified.

References const_val, dbg_cnt(), i, NULL_TREE, num_ssa_names, and VARYING.

Referenced by ccp_finalize().

◆ do_ssa_ccp()

|

static |

Main entry point for SSA Conditional Constant Propagation. If NONZERO_P, record nonzero bits.

References calculate_dominance_info(), ccp_finalize(), ccp_initialize(), CDI_DOMINATORS, free_dominance_info(), LOOP_CLOSED_SSA, loops_state_clear(), ssa_propagation_engine::ssa_propagate(), todo, and TODO_cleanup_cfg.

◆ dump_lattice_value()

|

static |

Dump constant propagation value VAL to file OUTF prefixed by PREFIX.

References wi::bit_and_not(), CONSTANT, dump_flags, gcc_unreachable, ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, print_generic_expr(), print_hex(), wi::to_widest(), TREE_CODE, UNDEFINED, UNINITIALIZED, ccp_prop_value_t::value, and VARYING.

Referenced by debug_lattice_value(), set_lattice_value(), and ccp_propagate::visit_phi().

◆ evaluate_stmt()

|

static |

Evaluate statement STMT. Valid only for assignments, calls, conditionals, and switches.

References as_a(), wi::bit_and_not(), bit_value_assume_aligned(), bit_value_binop(), bit_value_unop(), wi::bswap(), build_int_cst(), build_zero_cst(), BUILT_IN_NORMAL, CASE_BUILT_IN_ALLOCA, ccp_fold(), CONSTANT, DECL_FUNCTION_CODE(), dump_file, dump_flags, ERF_RETURN_ARG_MASK, ERF_RETURNS_ARG, extend_mask(), fold_defer_overflow_warnings(), fold_undefer_overflow_warnings(), wide_int_storage::from(), gcc_assert, get_constant_value(), get_gimple_rhs_class(), get_nonzero_bits(), get_value(), get_value_for_expr(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_assign_single_p(), GIMPLE_BINARY_RHS, gimple_call_arg(), gimple_call_builtin_p(), gimple_call_fndecl(), gimple_call_fntype(), gimple_call_lhs(), gimple_call_num_args(), gimple_call_return_flags(), gimple_cond_code(), gimple_cond_lhs(), gimple_cond_rhs(), gimple_get_lhs(), GIMPLE_SINGLE_RHS, gimple_switch_index(), GIMPLE_UNARY_RHS, INTEGRAL_TYPE_P, is_gimple_call(), is_gimple_min_invariant(), ccp_prop_value_t::lattice_val, likely_value(), lookup_attribute(), MALLOC_ABI_ALIGNMENT, ccp_prop_value_t::mask, nonzero_bits(), NULL_TREE, POINTER_TYPE_P, prop_simulate_again_p(), ptr_type_node, wi::sext(), SSA_NAME_DEF_STMT, SSA_NAME_IS_DEFAULT_DEF, SSA_NAME_OCCURS_IN_ABNORMAL_PHI, TDF_DETAILS, wi::to_wide(), TREE_CODE, tree_fits_uhwi_p(), TREE_INT_CST_LOW, tree_to_uhwi(), TREE_TYPE, TYPE_ATTRIBUTES, TYPE_PRECISION, TYPE_SIGN, UNDEFINED, UNSIGNED, ccp_prop_value_t::value, VARYING, VECTOR_TYPE_P, and wide_int_to_tree().

Referenced by ccp_folder::fold_stmt(), visit_assignment(), and visit_cond_stmt().

◆ extend_mask()

|

static |

Extend NONZERO_BITS to a full mask, based on sgn.

References nonzero_bits().

Referenced by evaluate_stmt(), and get_default_value().

◆ fold_builtin_alloca_with_align()

Detects a __builtin_alloca_with_align with constant size argument. Declares fixed-size array and returns the address, if found, otherwise returns NULL_TREE.

References pt_solution::anything, BLOCK_SUPERCONTEXT, build_array_type_nelts(), build_fold_addr_expr, build_nonstandard_integer_type(), cfun, create_tmp_var, DECL_SOURCE_LOCATION, fold_convert, get_constant_value(), gimple_block(), gimple_call_arg(), gimple_call_lhs(), gimple_location(), IDENTIFIER_POINTER, NULL, NULL_TREE, ptr_info_def::pt, pt_solution_singleton_or_null_p(), SET_DECL_ALIGN, SET_DECL_PT_UID, ssa_name, SSA_NAME_DEF_STMT, SSA_NAME_IDENTIFIER, SSA_NAME_PTR_INFO, TREE_CODE, tree_fits_uhwi_p(), TREE_INT_CST_LOW, tree_to_uhwi(), and TREE_TYPE.

Referenced by ccp_folder::fold_stmt().

◆ get_constant_value()

Return the constant tree value associated with VAR.

References CONSTANT, get_value(), is_gimple_min_invariant(), ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, NULL_TREE, TREE_CODE, and ccp_prop_value_t::value.

Referenced by evaluate_stmt(), fold_builtin_alloca_with_align(), ccp_folder::fold_stmt(), ccp_folder::value_of_expr(), valueize_op(), and valueize_op_1().

◆ get_default_value()

|

static |

Compute a default value for variable VAR and store it in the CONST_VAL array. The following rules are used to get default values: 1- Global and static variables that are declared constant are considered CONSTANT. 2- Any other value is considered UNDEFINED. This is useful when considering PHI nodes. PHI arguments that are undefined do not change the constant value of the PHI node, which allows for more constants to be propagated. 3- Variables defined by statements other than assignments and PHI nodes are considered VARYING. 4- Initial values of variables that are not GIMPLE registers are considered VARYING.

References wi::bit_and(), build_zero_cst(), CONSTANT, DECL_P, extend_mask(), gcc_assert, get_nonzero_bits(), get_symbol_constant_value(), gimple_assign_rhs1(), gimple_assign_single_p(), gimple_call_lhs(), gimple_nop_p(), ipcp_get_parm_bits(), is_gimple_assign(), is_gimple_call(), ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, nonzero_bits(), NULL_TREE, SSA_NAME_DEF_STMT, SSA_NAME_VAR, wi::to_widest(), TREE_CODE, TREE_TYPE, TYPE_SIGN, UNDEFINED, UNINITIALIZED, ccp_prop_value_t::value, VAR_P, VARYING, VECTOR_TYPE_P, and virtual_operand_p().

Referenced by get_value().

◆ get_individual_bits()

|

static |

Fill up to MAX values in the BITS array with values representing each of the non-zero bits in the value X. Returns the number of bits in X (capped at the maximum value MAX). For example, an X value 11, places 1, 2 and 8 in BITS and returns the value 3.

References count, wi::ctz(), and wi::lshift().

Referenced by bit_value_binop().

◆ get_value()

|

inlinestatic |

Get the constant value associated with variable VAR.

References canonicalize_value(), const_val, get_default_value(), ccp_prop_value_t::lattice_val, n_const_val, NULL, SSA_NAME_VERSION, and UNINITIALIZED.

Referenced by ccp_finalize(), evaluate_stmt(), get_constant_value(), get_value_for_expr(), and likely_value().

◆ get_value_for_expr()

|

static |

Return the value for the tree operand EXPR. If FOR_BITS_P is true return constant bits extracted from alignment information for invariant addresses.

References canonicalize_value(), CONSTANT, get_value(), get_value_from_alignment(), INTEGRAL_TYPE_P, is_gimple_min_invariant(), ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, NULL_TREE, SSA_NAME_OCCURS_IN_ABNORMAL_PHI, TREE_CODE, TREE_TYPE, TYPE_PRECISION, TYPE_UNSIGNED, ccp_prop_value_t::value, VARYING, and wi::zext().

Referenced by bit_value_assume_aligned(), bit_value_binop(), bit_value_unop(), ccp_lattice_meet(), evaluate_stmt(), ccp_folder::fold_stmt(), and ccp_propagate::visit_phi().

◆ get_value_from_alignment()

|

static |

Return the value for the address expression EXPR based on alignment information.

References wi::bit_and_not(), build_int_cstu(), CONSTANT, gcc_assert, get_pointer_alignment_1(), ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, wi::mask(), NULL_TREE, POINTER_TYPE_P, wi::sext(), TREE_CODE, TREE_TYPE, TYPE_PRECISION, TYPE_UNSIGNED, ccp_prop_value_t::value, and VARYING.

Referenced by get_value_for_expr().

◆ gsi_prev_dom_bb_nondebug()

|

inlinestatic |

Advance the iterator to the previous non-debug gimple statement in the same or dominating basic block.

References CDI_DOMINATORS, cfun, ENTRY_BLOCK_PTR_FOR_FN, get_immediate_dominator(), gsi_bb(), gsi_end_p(), gsi_last_bb(), gsi_prev_nondebug(), i, and NULL.

Referenced by insert_clobbers_for_var().

◆ insert_clobber_before_stack_restore()

|

static |

Given a BUILT_IN_STACK_SAVE value SAVED_VAL, insert a clobber of VAR before each matching BUILT_IN_STACK_RESTORE. Mark visited phis in VISITED.

References build_clobber(), cfun, CLOBBER_STORAGE_END, FOR_EACH_IMM_USE_STMT, gimple_assign_lhs(), gimple_assign_ssa_name_copy_p(), gimple_build_assign(), gimple_call_builtin_p(), gimple_phi_result(), gimple_set_vdef(), gimple_set_vuse(), gimple_vdef(), gimple_vop(), gimple_vuse(), gsi_for_stmt(), gsi_insert_before(), GSI_SAME_STMT, i, insert_clobber_before_stack_restore(), make_ssa_name(), NULL, SSA_NAME_DEF_STMT, TREE_TYPE, update_stmt(), and visited.

Referenced by insert_clobber_before_stack_restore(), and insert_clobbers_for_var().

◆ insert_clobbers_for_var()

|

static |

Find a BUILT_IN_STACK_SAVE dominating gsi_stmt (I), and insert a clobber of VAR before each matching BUILT_IN_STACK_RESTORE. It is possible that BUILT_IN_STACK_SAVE cannot be found in a dominator when a previous pass (such as DOM) duplicated it along multiple paths to a BB. In that case the function gives up without inserting the clobbers.

References gimple_call_builtin_p(), gimple_call_lhs(), gsi_end_p(), gsi_prev_dom_bb_nondebug(), gsi_stmt(), i, insert_clobber_before_stack_restore(), NULL, NULL_TREE, and visited.

Referenced by ccp_folder::fold_stmt().

◆ likely_value()

|

static |

Return the likely CCP lattice value for STMT. If STMT has no operands, then return CONSTANT. Else if undefinedness of operands of STMT cause its value to be undefined, then return UNDEFINED. Else if any operands of STMT are constants, then return CONSTANT. Else return VARYING.

References CONSTANT, CONSTANT_CLASS_P, CONSTRUCTOR_ELTS, FOR_EACH_CONSTRUCTOR_VALUE, FOR_EACH_SSA_TREE_OPERAND, gcc_assert, get_value(), gimple_assign_rhs_code(), gimple_call_internal_fn(), gimple_call_internal_p(), gimple_has_lhs(), gimple_has_volatile_ops(), gimple_num_ops(), gimple_op(), gimple_references_memory_p(), i, is_gimple_call(), is_gimple_min_invariant(), ccp_prop_value_t::lattice_val, prop_simulate_again_p(), SSA_NAME_DEF_STMT, SSA_NAME_IS_DEFAULT_DEF, SSA_OP_USE, TREE_CODE, UNDEFINED, and VARYING.

Referenced by evaluate_stmt().

◆ make_pass_ccp()

| gimple_opt_pass * make_pass_ccp | ( | gcc::context * | ctxt | ) |

◆ make_pass_post_ipa_warn()

| gimple_opt_pass * make_pass_post_ipa_warn | ( | gcc::context * | ctxt | ) |

◆ set_lattice_value()

|

static |

Set the value for variable VAR to NEW_VAL. Return true if the new value is different from VAR's previous value.

References wi::bit_and_not(), canonicalize_value(), ccp_lattice_meet(), const_val, CONSTANT, CONSTANT_CLASS_P, dump_file, dump_flags, dump_lattice_value(), gcc_assert, gcc_checking_assert, ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, operand_equal_p(), SSA_NAME_VERSION, TDF_DETAILS, wi::to_widest(), TREE_CODE, UNINITIALIZED, valid_lattice_transition(), and ccp_prop_value_t::value.

Referenced by visit_assignment(), and ccp_propagate::visit_phi().

◆ set_value_varying()

|

inlinestatic |

Sets the value associated with VAR to VARYING.

References const_val, ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, NULL_TREE, SSA_NAME_VERSION, ccp_prop_value_t::value, and VARYING.

Referenced by ccp_initialize(), and ccp_propagate::visit_stmt().

◆ surely_varying_stmt_p()

Returns true if STMT cannot be constant.

References fndecl_built_in_p(), gimple_call_fndecl(), gimple_call_fntype(), gimple_call_lhs(), gimple_has_volatile_ops(), gimple_vdef(), is_gimple_call(), lookup_attribute(), NULL_TREE, and TYPE_ATTRIBUTES.

Referenced by ccp_initialize().

◆ valid_lattice_transition()

|

static |

Return whether the lattice transition is valid.

References vector_builder< tree, tree, tree_vector_builder >::binary_encoded_nelts(), wi::bit_and_not(), COMPLEX_FLOAT_TYPE_P, count, HONOR_NANS(), i, is_gimple_min_invariant(), ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, operand_equal_p(), REAL_VALUE_ISNAN, SCALAR_FLOAT_TYPE_P, wi::to_widest(), TREE_CODE, TREE_IMAGPART, TREE_REAL_CST, TREE_REALPART, TREE_TYPE, types_compatible_p(), ccp_prop_value_t::value, VECTOR_CST_ENCODED_ELT, and VECTOR_FLOAT_TYPE_P.

Referenced by set_lattice_value().

◆ value_mask_to_min_max()

|

static |

Determine the minimum and maximum values, *MIN and *MAX respectively, represented by the mask pair VAL and MASK with signedness SGN and precision PRECISION.

References wi::bit_and_not(), wi::ext(), wi::lshift(), wi::neg_p(), and SIGNED.

Referenced by bit_value_binop().

◆ value_to_wide_int()

|

static |

Return a widest_int that can be used for bitwise simplifications from VAL.

References wi::to_widest(), TREE_CODE, and ccp_prop_value_t::value.

Referenced by bit_value_assume_aligned(), bit_value_binop(), and bit_value_unop().

◆ valueize_op()

Return the constant value for OP or OP otherwise.

References get_constant_value(), and TREE_CODE.

Referenced by ccp_fold(), gimple_extract(), and gimple_simplify().

◆ valueize_op_1()

Return the constant value for OP, but signal to not follow SSA edges if the definition may be simulated again.

References get_constant_value(), gimple_nop_p(), NULL_TREE, prop_simulate_again_p(), SSA_NAME_DEF_STMT, and TREE_CODE.

Referenced by ccp_fold().

◆ visit_assignment()

|

static |

Visit the assignment statement STMT. Set the value of its LHS to the value computed by the RHS and store LHS in *OUTPUT_P. If STMT creates virtual definitions, set the value of each new name to that of the RHS (if we can derive a constant out of the RHS). Value-returning call statements also perform an assignment, and are handled here.

References evaluate_stmt(), gimple_get_lhs(), ccp_prop_value_t::lattice_val, set_lattice_value(), SSA_PROP_INTERESTING, SSA_PROP_NOT_INTERESTING, SSA_PROP_VARYING, TREE_CODE, and VARYING.

Referenced by ccp_propagate::visit_stmt().

◆ visit_cond_stmt()

|

static |

Visit the conditional statement STMT. Return SSA_PROP_INTERESTING if it can determine which edge will be taken. Otherwise, return SSA_PROP_VARYING.

References CONSTANT, evaluate_stmt(), find_taken_edge(), gimple_bb(), ccp_prop_value_t::lattice_val, ccp_prop_value_t::mask, SSA_PROP_INTERESTING, SSA_PROP_VARYING, and ccp_prop_value_t::value.

Referenced by ccp_propagate::visit_stmt().

Variable Documentation

◆ const_val

|

static |

Array of propagated constant values. After propagation, CONST_VAL[I].VALUE holds the constant value for SSA_NAME(I). If the constant is held in an SSA name representing a memory store (i.e., a VDEF), CONST_VAL[I].MEM_REF will contain the actual memory reference used to store (i.e., the LHS of the assignment doing the store).

Referenced by canon_condition(), canonicalize_condition(), ccp_finalize(), ccp_initialize(), do_dbg_cnt(), get_value(), set_lattice_value(), and set_value_varying().

◆ gray_code_bit_flips

|

static |

Array of 2^N - 1 values representing the bits flipped between consecutive Gray codes. This is used to efficiently enumerate all permutations on N bits using XOR.

Referenced by bit_value_binop().

◆ n_const_val

|

static |

Referenced by ccp_initialize(), and get_value().