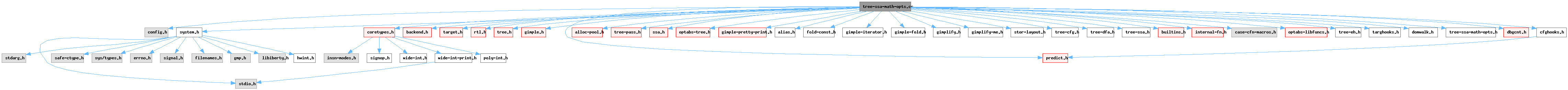

#include "config.h"
#include "system.h"
#include "coretypes.h"
#include "backend.h"
#include "target.h"
#include "rtl.h"
#include "tree.h"
#include "gimple.h"
#include "predict.h"
#include "alloc-pool.h"
#include "tree-pass.h"
#include "ssa.h"
#include "optabs-tree.h"
#include "gimple-pretty-print.h"
#include "alias.h"
#include "fold-const.h"
#include "gimple-iterator.h"
#include "gimple-fold.h"
#include "gimplify.h"
#include "gimplify-me.h"
#include "stor-layout.h"
#include "tree-cfg.h"
#include "tree-dfa.h"
#include "tree-ssa.h"
#include "builtins.h"
#include "internal-fn.h"
#include "case-cfn-macros.h"
#include "optabs-libfuncs.h"
#include "tree-eh.h"
#include "targhooks.h"
#include "domwalk.h"
#include "tree-ssa-math-opts.h"
#include "dbgcnt.h"
#include "cfghooks.h"

Data Structures

| Kind | Name |
|---|---|
| struct | occurrence |
| struct | pow_synth_sqrt_info |
| struct | fma_transformation_info |
| class | fma_deferring_state |

Macros

| Kind | Definition |
|---|---|
| #define | POWI_MAX_MULTS (2*HOST_BITS_PER_WIDE_INT-2) |
| #define | POWI_TABLE_SIZE 256 |
| #define | POWI_WINDOW_SIZE 3 |

Variables

| Type | Name |
|---|---|
| static struct { ... } | reciprocal_stats |
| struct { int inserted; int conv_removed; } | sincos_stats |
| struct { int widen_mults_inserted; int maccs_inserted; int fmas_inserted; int divmod_calls_inserted; int highpart_mults_inserted; } | widen_mul_stats |
| static struct occurrence * | occ_head |
| static object_allocator< occurrence > * | occ_pool |
| static const unsigned char | powi_table [POWI_TABLE_SIZE] |

Macro Definition Documentation

◆ POWI_MAX_MULTS

`#define POWI_MAX_MULTS (2*HOST_BITS_PER_WIDE_INT-2)`

To evaluate powi(x,n), the floating point value x raised to the constant integer exponent n, we use a hybrid algorithm that combines the "window method" with look-up tables. For an introduction to exponentiation algorithms and "addition chains", see section 4.6.3, "Evaluation of Powers" of Donald E. Knuth, "Seminumerical Algorithms", Vol. 2, "The Art of Computer Programming", 3rd Edition, 1998, and Daniel M. Gordon, "A Survey of Fast Exponentiation Methods", Journal of Algorithms, Vol. 27, pp. 129-146, 1998.

Provide a default value for POWI_MAX_MULTS, the maximum number of multiplications to inline before calling the system library's pow function. powi(x,n) requires at worst 2*bits(n)-2 multiplications, so this default never requires calling pow, powf or powl.
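The worst-case bound can be illustrated with plain left-to-right binary exponentiation, which is simpler than the hybrid window/table algorithm described above. In this minimal sketch (the names `powi_binary` and `bit_length` are hypothetical), every bit after the leading one costs one squaring and every set bit after the leading one costs one extra multiplication, so an exponent with bits(n) bits needs at most `2*bits(n)-2` multiplications.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: computes x**n for n >= 1 by left-to-right
// square-and-multiply and reports how many multiplications it used.
double powi_binary(double x, std::uint64_t n, int &mult_count) {
  mult_count = 0;
  int top = 63;
  while (!((n >> top) & 1)) --top;  // index of the leading set bit
  double result = x;                // accounts for the leading bit
  for (int bit = top - 1; bit >= 0; --bit) {
    result *= result;               // one squaring per remaining bit
    ++mult_count;
    if ((n >> bit) & 1) {
      result *= x;                  // one multiply per remaining set bit
      ++mult_count;
    }
  }
  return result;
}

// Number of significant bits in n, i.e. bits(n) in the text above.
int bit_length(std::uint64_t n) {
  int bits = 0;
  while (n) { ++bits; n >>= 1; }
  return bits;
}
```

For n = 255 (eight set bits) the count reaches the bound exactly: 7 squarings plus 7 multiplications.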

Referenced by expand_pow_as_sqrts(), gimple_expand_builtin_pow(), and gimple_expand_builtin_powi().

◆ POWI_TABLE_SIZE

`#define POWI_TABLE_SIZE 256`

The size of the "optimal power tree" lookup table. All exponents less than this value are simply looked up in the powi_table below. This threshold is also used to size the cache of pseudo registers that hold intermediate results.
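The caching of intermediate powers can be sketched as follows. This is a hypothetical illustration: `split_exponent` uses a simple halving rule in place of the real powi_table contents, which are not reproduced here, and `kTableSize` merely mirrors POWI_TABLE_SIZE. Each exponent below the table size is computed at most once, so the multiplication count equals the number of cache entries filled beyond x itself.

```cpp
#include <array>
#include <cassert>

constexpr int kTableSize = 256;  // mirrors POWI_TABLE_SIZE

// Hypothetical stand-in for a table entry: the addend a in n = (n - a) + a.
// Even exponents are halved; odd ones peel off a single factor of x.
int split_exponent(int n) { return (n % 2 == 0) ? n / 2 : 1; }

// Computes x**n for 1 <= n < kTableSize, reusing cached powers.
double powi_cached(double x, int n, std::array<double, kTableSize> &cache,
                   std::array<bool, kTableSize> &known, int &mults) {
  if (known[n]) return cache[n];
  int a = split_exponent(n);
  double lhs = powi_cached(x, n - a, cache, known, mults);
  double rhs = powi_cached(x, a, cache, known, mults);
  cache[n] = lhs * rhs;  // exactly one multiplication per new entry
  known[n] = true;
  ++mults;
  return cache[n];
}

// Seeds the cache with x**1 = x and evaluates x**n.
double powi_with_table(double x, int n, int &mults) {
  std::array<double, kTableSize> cache{};
  std::array<bool, kTableSize> known{};
  cache[1] = x;
  known[1] = true;
  mults = 0;
  return powi_cached(x, n, cache, known, mults);
}
```

With this rule, x**15 fills entries 2, 3, 6, 7, 14 and 15, i.e. six multiplications.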

Referenced by powi_as_mults(), powi_as_mults_1(), and powi_cost().

◆ POWI_WINDOW_SIZE

`#define POWI_WINDOW_SIZE 3`

The size, in bits, of the window used in the "window method" exponentiation algorithm. This is equivalent to a radix of (1<<POWI_WINDOW_SIZE), i.e. 8 for the default value of 3, in the corresponding "m-ary method".
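The radix-8 equivalence can be seen in a textbook fixed-window (m-ary) sketch with m = 1 << POWI_WINDOW_SIZE = 8: precompute x**0 through x**7, then consume the exponent three bits at a time, squaring three times per digit. This is a hypothetical illustration of the m-ary method only; it is not a transcription of powi_as_mults_1, and `kWindowBits` simply mirrors POWI_WINDOW_SIZE.

```cpp
#include <cassert>
#include <cstdint>

constexpr int kWindowBits = 3;            // mirrors POWI_WINDOW_SIZE
constexpr int kRadix = 1 << kWindowBits;  // 8-ary method

// x**n for n >= 0 using the fixed-window (m-ary) method.
double powi_mary(double x, std::uint64_t n) {
  double small[kRadix];  // small[d] = x**d for 0 <= d < 8
  small[0] = 1.0;
  for (int d = 1; d < kRadix; ++d) small[d] = small[d - 1] * x;

  // Count the base-8 digits of n.
  int digits = 0;
  for (std::uint64_t t = n; t != 0; t >>= kWindowBits) ++digits;

  double result = 1.0;
  for (int i = digits - 1; i >= 0; --i) {
    for (int s = 0; s < kWindowBits; ++s) result *= result;  // result**8
    unsigned digit = (n >> (i * kWindowBits)) & (kRadix - 1);
    result *= small[digit];  // multiply in the next base-8 digit
  }
  return result;
}
```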

Referenced by powi_as_mults_1(), and powi_cost().

Function Documentation

◆ arith_cast_equal_p()

Helper function for arith_overflow_check_p. Return true if VAL1 is equal to VAL2 cast to the corresponding integral type of the other signedness, or vice versa.

References wi::eq_p(), gimple_assign_cast_p(), gimple_assign_rhs1(), SSA_NAME_DEF_STMT, wi::to_wide(), and TREE_CODE.

Referenced by arith_overflow_check_p().

◆ arith_overflow_check_p()

`static`

Helper function of match_arith_overflow. Return 1 if USE_STMT is an unsigned overflow check ovf != 0 for STMT, -1 if USE_STMT is an unsigned overflow check ovf == 0, and 0 otherwise.
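The source-level checks this helper classifies can be tried in isolation. A minimal sketch, assuming 32-bit unsigned operands: for `x = y - z`, the comparison `x > y` is true exactly when the subtraction wrapped, which is the flag __builtin_sub_overflow reports (GCC represents these builtins internally with .SUB_OVERFLOW / .ADD_OVERFLOW); addition is the mirror case with `x < y`. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hand-written "ovf != 0" checks of the shape recognized in GIMPLE.
bool sub_wrapped(std::uint32_t y, std::uint32_t z) {
  std::uint32_t x = y - z;
  return x > y;  // true iff z > y, i.e. the subtraction wrapped
}

bool add_wrapped(std::uint32_t y, std::uint32_t z) {
  std::uint32_t x = y + z;
  return x < y;  // true iff the addition wrapped
}

// Same answers via the GCC/Clang overflow builtins.
bool sub_wrapped_builtin(std::uint32_t y, std::uint32_t z) {
  std::uint32_t x;
  return __builtin_sub_overflow(y, z, &x);
}

bool add_wrapped_builtin(std::uint32_t y, std::uint32_t z) {
  std::uint32_t x;
  return __builtin_add_overflow(y, z, &x);
}
```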

References arith_cast_equal_p(), COMPARISON_CLASS_P, CONVERT_EXPR_CODE_P, gimple_assign_cast_p(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_class(), gimple_assign_rhs_code(), GIMPLE_BINARY_RHS, gimple_cond_code(), gimple_cond_lhs(), gimple_cond_rhs(), integer_zerop(), INTEGRAL_TYPE_P, is_gimple_assign(), NULL, NULL_TREE, operand_equal_p(), sc, single_imm_use(), stmt_ends_bb_p(), tcc_comparison, wi::to_widest(), TREE_CODE, TREE_CODE_CLASS, tree_int_cst_equal(), TREE_OPERAND, TREE_TYPE, TYPE_PRECISION, and TYPE_UNSIGNED.

Referenced by match_arith_overflow().

◆ build_and_insert_binop()

`static`

Build a gimple binary operation with the given CODE and arguments ARG0, ARG1, assigning the result to a new SSA name for variable TARGET. Insert the statement prior to GSI's current position, and return the fresh SSA name.

References gimple_build_assign(), gimple_set_location(), gsi_insert_before(), GSI_SAME_STMT, make_temp_ssa_name(), NULL, and TREE_TYPE.

Referenced by convert_mult_to_highpart(), expand_pow_as_sqrts(), and gimple_expand_builtin_pow().

◆ build_and_insert_call()

`static`

Build a gimple call statement that calls FN with argument ARG. Set the lhs of the call statement to a fresh SSA name. Insert the statement prior to GSI's current position, and return the fresh SSA name.

References gimple_build_call(), gimple_set_lhs(), gimple_set_location(), gsi_insert_before(), GSI_SAME_STMT, make_temp_ssa_name(), NULL, and TREE_TYPE.

Referenced by get_fn_chain(), and gimple_expand_builtin_pow().

◆ build_and_insert_cast()

`static`

Build a gimple assignment to cast VAL to TYPE. Insert the statement prior to GSI's current position, and return the fresh SSA name.

References gimple_convert(), and GSI_SAME_STMT.

Referenced by convert_mult_to_highpart(), convert_mult_to_widen(), and convert_plusminus_to_widen().

◆ build_saturation_binary_arith_call_and_insert()

`static`

References direct_internal_fn_supported_p(), gimple_build_call_internal(), gimple_call_set_lhs(), gsi_insert_before(), GSI_SAME_STMT, OPTIMIZE_FOR_BOTH, and TREE_TYPE.

Referenced by match_saturation_add(), match_saturation_mul(), and match_saturation_sub().

◆ build_saturation_binary_arith_call_and_replace()

`static`

◆ cancel_fma_deferring()

`static`

Transform all deferred FMA candidates and mark STATE as no longer deferring.

References convert_mult_to_fma_1(), dump_file, dump_flags, gsi_for_stmt(), gsi_remove(), i, fma_transformation_info::mul_result, fma_transformation_info::mul_stmt, fma_transformation_info::op1, fma_transformation_info::op2, release_defs(), and TDF_DETAILS.

Referenced by convert_mult_to_fma().

◆ compute_merit()

`static`

Compute the number of divisions that postdominate each block in OCC and its children.

References occurrence::bb, CDI_POST_DOMINATORS, occurrence::children, compute_merit(), dominated_by_p(), occurrence::next, occurrence::num_divisions, and single_noncomplex_succ().

Referenced by compute_merit(), and execute_cse_reciprocals_1().

◆ convert_expand_mult_copysign()

`static`

Try to expand the pattern x * copysign (1, y) into xorsign (x, y). This only happens when the xorsign optab is defined. If the pattern is not a xorsign pattern, or if expansion fails, FALSE is returned; otherwise TRUE is returned.
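The identity behind the transform: multiplying by `copysign (1, y)` flips the sign of x exactly when y's sign bit is set, which is an XOR of y's sign bit into x. A minimal bit-level sketch for double, assuming the IEEE 754 binary64 layout; `xorsign_bits` is a hypothetical name, not the optab itself.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>

// XOR the sign bit of y into x (IEEE 754 binary64 layout assumed).
double xorsign_bits(double x, double y) {
  std::uint64_t xb, yb;
  std::memcpy(&xb, &x, sizeof xb);
  std::memcpy(&yb, &y, sizeof yb);
  xb ^= yb & (std::uint64_t{1} << 63);  // keep only y's sign bit
  double r;
  std::memcpy(&r, &xb, sizeof r);
  return r;
}

// The source pattern that convert_expand_mult_copysign looks for.
double mult_copysign(double x, double y) { return x * std::copysign(1.0, y); }
```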

References as_a(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_build_call_internal(), gimple_call_arg(), gimple_location(), gimple_set_lhs(), gimple_set_location(), gsi_replace(), has_single_use(), HONOR_SNANS(), is_copysign_call_with_1(), optab_handler(), SSA_NAME_DEF_STMT, TREE_CODE, TREE_TYPE, and TYPE_MODE.

◆ convert_mult_to_fma()

`static`

Combine the multiplication at MUL_STMT with operands MULOP1 and MULOP2 with uses in additions and subtractions to form fused multiply-add operations. Returns true if successful and MUL_STMT should be removed. If MUL_COND is nonnull, the multiplication in MUL_STMT is conditional on MUL_COND; otherwise it is unconditional.

If STATE indicates that we are deferring FMA transformation, we do not produce FMAs for basic blocks which look like:
`<bb 6>`
`# accumulator_111 = PHI <0.0(5), accumulator_66(6)>`
`_65 = _14 * _16;`
`accumulator_66 = _65 + accumulator_111;`
or its unrolled version, i.e. with several FMA candidates that feed the result of one into the addend of another. Instead, we add them to a list in STATE, and if we later discover an FMA candidate that is not part of such a chain, we go back and transform all previously deferred candidates.
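Fusing matters numerically as well as for speed: an FMA rounds once, so `a*b + c` can differ from a separately rounded product and sum. In this minimal sketch the operands are chosen so that `a*b == 1 - 2**-60` exactly, which rounds to 1.0 when the product is stored on its own. The product is routed through a volatile variable on the assumption that this keeps the compiler (including this very pass) from contracting the unfused version into an FMA; the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Product and sum rounded separately: the product is forced through a
// volatile store so it cannot be contracted into an FMA.
double mul_then_add(double a, double b, double c) {
  volatile double product = a * b;
  return product + c;
}

// Single rounding, which is what an FMA instruction (or .FMA) computes.
double fused(double a, double b, double c) { return std::fma(a, b, c); }
```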

References ANY_INTEGRAL_TYPE_P, bb_optimization_type(), can_interpret_as_conditional_op_p(), cancel_fma_deferring(), convert_mult_to_fma_1(), dbg_cnt(), direct_internal_fn_supported_p(), dump_file, dump_flags, FLOAT_TYPE_P, FOR_EACH_IMM_USE_FAST, FOR_EACH_PHI_OR_STMT_USE, FP_CONTRACT_FAST, gcc_assert, gcc_checking_assert, gimple_assign_cast_p(), gimple_assign_lhs(), gimple_assign_rhs_code(), gimple_bb(), gimple_get_lhs(), has_single_use(), has_zero_uses(), integer_truep(), INTEGRAL_TYPE_P, is_gimple_assign(), is_gimple_debug(), fma_transformation_info::mul_result, fma_transformation_info::mul_stmt, NULL_TREE, fma_transformation_info::op1, fma_transformation_info::op2, poly_int_tree_p(), print_gimple_stmt(), result_of_phi(), single_imm_use(), SSA_NAME_DEF_STMT, SSA_OP_USE, TDF_DETAILS, TDF_NONE, wi::to_widest(), TREE_CODE, tree_nop_conversion_p(), tree_to_poly_int64(), TREE_TYPE, type_has_mode_precision_p(), TYPE_OVERFLOW_TRAPS, TYPE_SIZE, USE_FROM_PTR, and USE_STMT.

◆ convert_mult_to_fma_1()

Given a result MUL_RESULT which is a result of a multiplication of OP1 and OP2 and which we know is used in statements that can be, together with the multiplication, converted to FMAs, perform the transformation.

References can_interpret_as_conditional_op_p(), cfun, dump_file, dump_flags, fold_stmt(), follow_all_ssa_edges(), FOR_EACH_IMM_USE_STMT, gcc_unreachable, gimple_assign_cast_p(), gimple_assign_lhs(), gimple_assign_rhs_code(), gimple_build(), gimple_build_call_internal(), gimple_call_internal_p(), gimple_call_lhs(), gimple_call_set_nothrow(), gimple_convert(), gimple_get_lhs(), gimple_set_lhs(), gsi_for_stmt(), gsi_insert_seq_before(), gsi_remove(), gsi_replace(), GSI_SAME_STMT, gsi_stmt(), is_gimple_assign(), is_gimple_call(), is_gimple_debug(), maybe_clean_or_replace_eh_stmt(), NULL, print_gimple_stmt(), release_defs(), single_imm_use(), stmt_can_throw_internal(), stmt_could_throw_p(), TDF_DETAILS, TDF_NONE, TREE_CODE, tree_nop_conversion_p(), TREE_TYPE, UNKNOWN_LOCATION, update_stmt(), and widen_mul_stats.

Referenced by cancel_fma_deferring(), and convert_mult_to_fma().

◆ convert_mult_to_highpart()

`static`

Process a single gimple assignment STMT, which has a RSHIFT_EXPR as its rhs, and try to convert it into a MULT_HIGHPART_EXPR. The return value is true iff we converted the statement.
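At the source level, the RSHIFT_EXPR pattern is the familiar "widen, multiply, shift" idiom for the high half of a product. A minimal sketch for 32-bit operands with a 64-bit intermediate; a target with a high-part multiply can produce the same value without materializing the full product. `mulhi_u32` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>

// High 32 bits of the 64-bit product: ((uint64) a * (uint64) b) >> 32.
std::uint32_t mulhi_u32(std::uint32_t a, std::uint32_t b) {
  std::uint64_t full = static_cast<std::uint64_t>(a) * b;
  return static_cast<std::uint32_t>(full >> 32);
}
```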

References build_and_insert_binop(), build_and_insert_cast(), build_int_cst(), dyn_cast(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_bb(), gimple_build_assign(), gimple_location(), gsi_replace(), has_single_use(), optab_handler(), signed_type_for(), SSA_NAME_DEF_STMT, TREE_CODE, tree_fits_uhwi_p(), tree_to_uhwi(), TREE_TYPE, TYPE_MODE, TYPE_PRECISION, TYPE_UNSIGNED, unsigned_type_for(), and widen_mul_stats.

◆ convert_mult_to_widen()

`static`

Process a single gimple statement STMT, which has a MULT_EXPR as its rhs, and try to convert it into a WIDEN_MULT_EXPR. The return value is true iff we converted the statement.
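A typical source shape for WIDEN_MULT_EXPR: both operands are narrow values converted to a type at least twice as wide before multiplying, so the full product cannot overflow and a widening multiply instruction can compute it directly. A minimal sketch assuming 32-bit inputs and a 64-bit result; `widen_mul` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>

// int32 x int32 -> int64: a candidate for a single widening multiply.
std::int64_t widen_mul(std::int32_t a, std::int32_t b) {
  return static_cast<std::int64_t>(a) * static_cast<std::int64_t>(b);
}
```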

References build_and_insert_cast(), build_nonstandard_integer_type(), find_widening_optab_handler_and_mode(), fold_convert, GET_MODE_PRECISION(), GET_MODE_SIZE(), GET_MODE_WIDER_MODE(), gimple_assign_lhs(), gimple_assign_set_rhs1(), gimple_assign_set_rhs2(), gimple_assign_set_rhs_code(), gimple_location(), is_widening_mult_p(), SCALAR_INT_TYPE_MODE, SSA_NAME_OCCURS_IN_ABNORMAL_PHI, TREE_CODE, TREE_TYPE, TYPE_PRECISION, TYPE_UNSIGNED, update_stmt(), useless_type_conversion_p(), and widen_mul_stats.

◆ convert_plusminus_to_widen()

`static`

Process a single gimple statement STMT, which is found at the iterator GSI and has either a PLUS_EXPR or a MINUS_EXPR as its rhs (given by CODE), and try to convert it into a WIDEN_MULT_PLUS_EXPR or a WIDEN_MULT_MINUS_EXPR. The return value is true iff we converted the statement.
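The PLUS_EXPR case corresponds to a widening multiply-accumulate. A minimal sketch assuming 16-bit operands accumulated into a 32-bit sum, a shape a target with a widening MAC instruction might handle in one step; `widen_mac` and `dot16` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// acc + (int32) a * (int32) b: a candidate for WIDEN_MULT_PLUS_EXPR.
std::int32_t widen_mac(std::int32_t acc, std::int16_t a, std::int16_t b) {
  return acc + static_cast<std::int32_t>(a) * static_cast<std::int32_t>(b);
}

// Dot product written so that every step has the multiply-accumulate shape.
std::int32_t dot16(const std::int16_t *x, const std::int16_t *y, int n) {
  std::int32_t acc = 0;
  for (int i = 0; i < n; ++i) acc = widen_mac(acc, x[i], y[i]);
  return acc;
}
```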

References build_and_insert_cast(), build_nonstandard_integer_type(), CONVERT_EXPR_CODE_P, find_widening_optab_handler_and_mode(), fold_convert, GET_MODE_PRECISION(), GET_MODE_SIZE(), GET_MODE_WIDER_MODE(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_assign_set_rhs_with_ops(), gimple_bb(), gimple_location(), gsi_stmt(), has_single_use(), INTEGRAL_TYPE_P, is_gimple_assign(), is_widening_mult_p(), NULL, optab_default, optab_for_tree_code(), SCALAR_TYPE_MODE, SSA_NAME_DEF_STMT, TREE_CODE, TREE_TYPE, type_has_mode_precision_p(), TYPE_PRECISION, TYPE_UNSIGNED, update_stmt(), useless_type_conversion_p(), and widen_mul_stats.

◆ convert_to_divmod()

This function looks for:
`t1 = a TRUNC_DIV_EXPR b;`
`t2 = a TRUNC_MOD_EXPR b;`
and transforms it to the following sequence:
`complex_tmp = DIVMOD (a, b);`
`t1 = REALPART_EXPR (complex_tmp);`
`t2 = IMAGPART_EXPR (complex_tmp);`
For conditions enabling the transform, see divmod_candidate_p().

The pass has three parts:

1) Find top_stmt, which is a trunc_div or trunc_mod stmt that dominates all other trunc_div_expr and trunc_mod_expr stmts.
2) Add top_stmt and all trunc_div and trunc_mod stmts dominated by top_stmt to the stmts vector.
3) Insert the DIVMOD call just before top_stmt and update entries in the stmts vector to use the return value of DIVMOD (REALPART_EXPR for div, IMAGPART_EXPR for mod).
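At the source level, the pattern is a quotient and a remainder of the same operands. `std::ldiv` from `<cstdlib>` makes the combined operation explicit by returning both parts from one call, much as DIVMOD does; this minimal sketch only illustrates the equivalence and says nothing about how a particular target implements DIVMOD. `QuotRem`, `separate` and `combined` are hypothetical names.

```cpp
#include <cassert>
#include <cstdlib>

struct QuotRem { long quot; long rem; };

// Two separate operations, as written in the source.
QuotRem separate(long a, long b) { return {a / b, a % b}; }

// One call returning both parts, analogous to REALPART/IMAGPART of DIVMOD.
QuotRem combined(long a, long b) {
  std::ldiv_t r = std::ldiv(a, b);
  return {r.quot, r.rem};
}
```

For truncating division the invariant `a == quot * b + rem` holds for both versions.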

References build_complex_type(), CDI_DOMINATORS, cfun, divmod_candidate_p(), dominated_by_p(), fold_build1, FOR_EACH_IMM_USE_STMT, gcc_unreachable, gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_assign_set_rhs_from_tree(), gimple_bb(), gimple_build_call_internal(), gimple_call_set_lhs(), gimple_call_set_nothrow(), gimple_uid(), gsi_for_stmt(), gsi_insert_before(), GSI_SAME_STMT, i, is_gimple_assign(), make_temp_ssa_name(), operand_equal_p(), stmt_can_throw_internal(), TREE_TYPE, update_stmt(), and widen_mul_stats.

◆ divmod_candidate_p()

Check if STMT is a candidate for the divmod transform.

References BITS_PER_WORD, CONSTANT_CLASS_P, element_precision(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), HOST_BITS_PER_WIDE_INT, integer_pow2p(), target_supports_divmod_p(), TREE_TYPE, TYPE_MODE, TYPE_OVERFLOW_TRAPS, and TYPE_UNSIGNED.

Referenced by convert_to_divmod().

◆ dump_fractional_sqrt_sequence()

`static`

Print to STREAM the fractional sequence of sqrt chains applied to ARG, described by INFO. Used for the dump file.

References pow_synth_sqrt_info::deepest, pow_synth_sqrt_info::factors, i, and print_nested_fn().

Referenced by expand_pow_as_sqrts().

◆ dump_integer_part()

`static`

Print to STREAM a representation of raising ARG to an integer power N. Used for the dump file.

References HOST_WIDE_INT_PRINT_DEC.

Referenced by expand_pow_as_sqrts().

◆ execute_cse_conv_1()

If NAME is the result of a type conversion, look for other equivalent dominating or dominated conversions, and replace all uses with the earliest dominating name, removing the redundant conversions. Return the prevailing name.

References CDI_DOMINATORS, cfg_changed, dominated_by_p(), FOR_EACH_IMM_USE_STMT, gimple_assign_cast_p(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_bb(), gimple_purge_dead_eh_edges(), gsi_end_p(), gsi_for_stmt(), gsi_next(), gsi_remove(), gsi_stmt(), release_defs(), replace_uses_by(), sincos_stats, SSA_NAME_DEF_STMT, SSA_NAME_IS_DEFAULT_DEF, SSA_NAME_OCCURS_IN_ABNORMAL_PHI, TREE_CODE, TREE_TYPE, and types_compatible_p().

Referenced by execute_cse_sincos_1().

◆ execute_cse_reciprocals_1()

`static`

Look for floating-point divisions among DEF's uses, and try to replace them by multiplications with the reciprocal. Add as many statements computing the reciprocal as needed. DEF must be a GIMPLE register of a floating-point type.
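A source-level sketch of the rewrite: several divisions by the same divisor become multiplications by a single reciprocal. Because `1.0 / d` is itself rounded, results can differ in the last bits, which is why this kind of rewrite is generally tied to relaxed floating-point options such as GCC's `-freciprocal-math`. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Original form: one division per element, all by the same divisor.
void divide_each(double d, const double in[3], double out[3]) {
  for (int i = 0; i < 3; ++i) out[i] = in[i] / d;
}

// Rewritten form: one division, then multiplications by the reciprocal.
void multiply_by_reciprocal(double d, const double in[3], double out[3]) {
  const double recip = 1.0 / d;
  for (int i = 0; i < 3; ++i) out[i] = in[i] * recip;
}
```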

References compute_merit(), count, FLOAT_TYPE_P, FOR_EACH_IMM_USE_FAST, FOR_EACH_IMM_USE_ON_STMT, FOR_EACH_IMM_USE_STMT, free_bb(), gcc_assert, gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_bb(), insert_reciprocals(), is_division_by(), is_gimple_assign(), is_square_of(), occurrence::next, NULL, occ_head, register_division_in(), replace_reciprocal(), replace_reciprocal_squares(), SSA_NAME_DEF_STMT, targetm, TREE_CODE, TREE_TYPE, TYPE_MODE, and USE_STMT.

◆ execute_cse_sincos_1()

Look for sin, cos and cexpi calls with the same argument NAME and create a single call to cexpi, CSEing the result in this case. We first walk over all immediate uses of the argument, collecting the statements that we can CSE in a vector; in a second pass, we replace the statement rhs with a REALPART or IMAGPART expression on the result of the cexpi call, which we insert before the use statement that dominates all other candidates.
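One cexpi call suffices because `cexpi (x) = cos (x) + i sin (x)`: its real and imaginary parts replace separate cos and sin calls. In this minimal sketch, `std::polar (1.0, x)` from `<complex>` stands in for cexpi; it only illustrates the identity and says nothing about how GCC expands cexpi. `SinCos` and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <complex>

struct SinCos { double s; double c; };

// Separate calls, as written in the source.
SinCos separate_calls(double x) { return {std::sin(x), std::cos(x)}; }

// One cexpi-style evaluation: real part is cos (x), imaginary part is sin (x).
SinCos via_cexpi(double x) {
  std::complex<double> e = std::polar(1.0, x);
  return {e.imag(), e.real()};
}
```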

References cfg_changed, execute_cse_conv_1(), fold_build1, FOR_EACH_IMM_USE_STMT, gcc_checking_assert, gcc_unreachable, gimple_bb(), gimple_build_assign(), gimple_build_call(), gimple_call_combined_fn(), gimple_call_lhs(), gimple_call_set_lhs(), gimple_purge_dead_eh_edges(), gsi_after_labels(), gsi_for_stmt(), gsi_insert_after(), gsi_insert_before(), gsi_replace(), GSI_SAME_STMT, i, internal_fn_p(), make_temp_ssa_name(), mathfn_built_in(), mathfn_built_in_type(), maybe_record_sincos(), NULL, NULL_TREE, sincos_stats, SSA_NAME_DEF_STMT, SSA_NAME_IS_DEFAULT_DEF, TREE_TYPE, and types_compatible_p().

◆ expand_pow_as_sqrts()

`static`

Attempt to synthesize a POW[F] (ARG0, ARG1) call using chains of square roots. Place at GSI and LOC. Limit the maximum depth of the sqrt chains to MAX_DEPTH. Return the tree holding the result of the expanded sequence, or NULL_TREE if the expansion failed.

This routine assumes that ARG1 is a real number with a fractional part (the integer exponent case will have been handled earlier in gimple_expand_builtin_pow).

For ARG1 > 0.0:

* For ARG1 composed of a whole part WHOLE_PART and a fractional part FRAC_PART, i.e. WHOLE_PART == floor (ARG1) and FRAC_PART == ARG1 - WHOLE_PART: produce POWI (ARG0, WHOLE_PART) * POW (ARG0, FRAC_PART), where POW (ARG0, FRAC_PART) is expanded as a product of square root chains if it can be expressed as such, that is, if FRAC_PART satisfies `FRAC_PART == <SUM from i = 1 until MAX_DEPTH> (a[i] * (0.5**i))`, where each integer a[i] is either 0 or 1.

  Example: `POW (x, 3.625) == POWI (x, 3) * POW (x, 0.625) --> POWI (x, 3) * SQRT (x) * SQRT (SQRT (SQRT (x)))`

For ARG1 < 0.0 there are two approaches:

* (A) Expand to 1.0 / POW (ARG0, -ARG1), where POW (ARG0, -ARG1) is calculated as above.

  Example: `POW (x, -5.625) == 1.0 / POW (x, 5.625) --> 1.0 / (POWI (x, 5) * SQRT (x) * SQRT (SQRT (SQRT (x))))`

* (B) Let WHOLE_PART := - ceil (abs (ARG1)) and FRAC_PART := ARG1 - WHOLE_PART, and expand to POW (x, FRAC_PART) / POWI (x, -WHOLE_PART).

  Example: `POW (x, -5.875) == POW (x, 0.125) / POWI (x, 6) --> SQRT (SQRT (SQRT (x))) / (POWI (x, 6))`

For ARG1 < 0.0 we choose between (A) and (B) depending on how many multiplications we would have to do. So, for the example in (B), POW (x, -5.875), if we were to follow algorithm (A) we would produce `1.0 / (POWI (x, 5) * SQRT (x) * SQRT (SQRT (x)) * SQRT (SQRT (SQRT (x))))`, which contains more multiplications than approach (B).

Hopefully, this approach will eliminate potentially expensive POW library calls when unsafe floating-point math is enabled, and allow the compiler to further optimise the multiplies, square roots and divides produced by this function.
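The half-series test and the resulting sqrt-chain product can be sketched in isolation. `half_series` and `pow_frac_as_sqrts` are hypothetical helpers, not representable_as_half_series_p or get_fn_chain themselves: the first greedily peels off 0.5, 0.25, ... from a FRAC_PART in [0, 1), and the second multiplies together the chains selected by the resulting a[i] bits, building each chain from the previous one. The comparison with `std::pow` uses a tolerance because the two evaluation orders round differently.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Returns true and sets bits[i - 1] = a[i] if frac equals the sum of
// a[i] * 0.5**i for i = 1..max_depth, each a[i] in {0, 1}.
// Assumes 0 <= frac < 1.
bool half_series(double frac, int max_depth, std::vector<int> &bits) {
  bits.assign(max_depth, 0);
  double remaining = frac;
  double term = 0.5;
  for (int i = 0; i < max_depth && remaining > 0.0; ++i, term *= 0.5) {
    if (remaining >= term) {
      bits[i] = 1;
      remaining -= term;  // exact here, since term <= remaining < 2 * term
    }
  }
  return remaining == 0.0;
}

// Step k computes sqrt applied k times to x (reusing the previous chain)
// and multiplies it into the result when bits[k - 1] is 1.
double pow_frac_as_sqrts(double x, const std::vector<int> &bits) {
  double result = 1.0;
  double chain = x;
  for (int bit : bits) {
    chain = std::sqrt(chain);
    if (bit) result *= chain;
  }
  return result;
}
```

For the 3.625 example, `half_series (0.625, ...)` yields a[1] = 1, a[2] = 0, a[3] = 1, i.e. `SQRT (x) * SQRT (SQRT (SQRT (x)))`.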

References build_and_insert_binop(), build_real(), cache, dconst0, dconst1, pow_synth_sqrt_info::deepest, dump_file, dump_fractional_sqrt_sequence(), dump_integer_part(), exp(), pow_synth_sqrt_info::factors, gcc_assert, get_fn_chain(), gimple_expand_builtin_powi(), i, mathfn_built_in(), NULL_TREE, pow_synth_sqrt_info::num_mults, powi_cost(), POWI_MAX_MULTS, real_arithmetic(), real_ceil(), real_floor(), real_from_integer(), real_identical(), real_to_decimal(), real_to_integer(), real_value_abs(), REAL_VALUE_NEGATIVE, REAL_VALUE_TYPE, representable_as_half_series_p(), SIGNED, TREE_CODE, TREE_REAL_CST, TREE_TYPE, and TYPE_MODE.

Referenced by gimple_expand_builtin_pow().

◆ free_bb()

`static`

Free OCC and return one more "struct occurrence" to be freed.

References occurrence::children, free_bb(), and occurrence::next.

Referenced by execute_cse_reciprocals_1(), and free_bb().

◆ get_fn_chain()

`static`

Return the tree corresponding to FN being applied to ARG N times at GSI and LOC. Look up previous results from CACHE if need be. cache[0] should contain just plain ARG i.e. FN applied to ARG 0 times.

References build_and_insert_call(), cache, and get_fn_chain().

Referenced by expand_pow_as_sqrts(), and get_fn_chain().

◆ gimple_expand_builtin_pow()

`static`

ARG0 and ARG1 are the two arguments to a pow builtin call in GSI with location info LOC. If possible, create an equivalent and less expensive sequence of statements prior to GSI, and return an expression holding the result.

References abs_hwi(), absu_hwi(), build_and_insert_binop(), build_and_insert_call(), build_real(), cfun, dconst1, dconst2, dconst_third, dconsthalf, expand_pow_as_sqrts(), gimple_expand_builtin_powi(), gsi_bb(), HONOR_NANS(), HONOR_SIGNED_ZEROS(), HONOR_SNANS(), mathfn_built_in(), NULL_TREE, optab_handler(), optimize_bb_for_speed_p(), optimize_function_for_speed_p(), powi_cost(), POWI_MAX_MULTS, real_arithmetic(), real_convert(), real_equal(), REAL_EXP, real_from_integer(), real_identical(), real_round(), real_to_integer(), REAL_VALUE_ISSIGNALING_NAN, real_value_truncate(), REAL_VALUE_TYPE, SET_REAL_EXP, SIGNED, TREE_CODE, tree_expr_nonnegative_p(), TREE_REAL_CST, TREE_TYPE, and TYPE_MODE.

◆ gimple_expand_builtin_powi()

`static`

ARG0 and N are the two arguments to a powi builtin in GSI with location info LOC. If the arguments are appropriate, create an equivalent sequence of statements prior to GSI using an optimal number of multiplications, and return an expression holding the result.

References cfun, NULL_TREE, optimize_function_for_speed_p(), powi_as_mults(), powi_cost(), and POWI_MAX_MULTS.

Referenced by expand_pow_as_sqrts(), and gimple_expand_builtin_pow().

◆ gimple_signed_integer_sat_add()

Referenced by match_saturation_add(), match_saturation_add_with_assign(), and vect_recog_sat_add_pattern().

◆ gimple_signed_integer_sat_sub()

Referenced by match_saturation_sub(), and vect_recog_sat_sub_pattern().

◆ gimple_signed_integer_sat_trunc()

Referenced by match_saturation_trunc(), and vect_recog_sat_trunc_pattern().

◆ gimple_unsigned_integer_sat_add()

Referenced by match_saturation_add(), match_saturation_add_with_assign(), and vect_recog_sat_add_pattern().

◆ gimple_unsigned_integer_sat_mul()

Referenced by match_saturation_mul(), and match_unsigned_saturation_mul().

◆ gimple_unsigned_integer_sat_sub()

Referenced by match_saturation_sub(), match_unsigned_saturation_sub(), and vect_recog_sat_sub_pattern().

◆ gimple_unsigned_integer_sat_trunc()

Referenced by match_saturation_trunc(), match_unsigned_saturation_trunc(), and vect_recog_sat_trunc_pattern().

◆ insert_bb()

`static`

Insert NEW_OCC into our subset of the dominator tree. P_HEAD points to a list of "struct occurrence"s, one per basic block, having IDOM as their common dominator. We try to insert NEW_OCC as deep as possible in the tree, and we also insert any other block that is a common dominator for BB and one block already in the tree.

References basic_block_def::aux, occurrence::bb, CDI_DOMINATORS, occurrence::children, gcc_assert, insert_bb(), nearest_common_dominator(), occurrence::next, NULL, and occurrence::occurrence().

Referenced by insert_bb(), and register_division_in().

◆ insert_reciprocals()

`static`

Walk the subset of the dominator tree rooted at OCC, setting the RECIP_DEF field to a definition of 1.0 / DEF that can be used in the given basic block. The field may be left NULL, of course, if it is not possible or profitable to do the optimization. DEF_BSI is an iterator pointing at the statement defining DEF. If RECIP_DEF is set, a dominator already has a computation that can be used. If should_insert_square_recip is set, then this also inserts the square of the reciprocal immediately after the definition of the reciprocal.

References occurrence::bb, occurrence::bb_has_division, build_one_cst(), occurrence::children, create_tmp_reg(), gimple_build_assign(), gsi_after_labels(), gsi_bb(), gsi_end_p(), gsi_insert_after(), gsi_insert_before(), GSI_NEW_STMT, gsi_next(), GSI_SAME_STMT, gsi_stmt(), insert_reciprocals(), is_division_by(), is_division_by_square(), occurrence::next, occurrence::num_divisions, occurrence::recip_def, occurrence::recip_def_stmt, reciprocal_stats, occurrence::square_recip_def, and TREE_TYPE.

Referenced by execute_cse_reciprocals_1(), and insert_reciprocals().

◆ internal_fn_reciprocal()

`internal_fn internal_fn_reciprocal (gcall *call)`

Return an internal function that implements the reciprocal of CALL, or IFN_LAST if there is no such function that the target supports.

References direct_internal_fn_supported_p(), direct_internal_fn_types(), gimple_call_combined_fn(), and OPTIMIZE_FOR_SPEED.

◆ is_copysign_call_with_1()

Check to see if the CALL statement is an invocation of copysign with 1.0 as the first argument.

References as_builtin_fn(), as_internal_fn(), builtin_fn_p(), CASE_FLT_FN, CASE_FLT_FN_FLOATN_NX, dyn_cast(), gimple_call_arg(), gimple_call_combined_fn(), internal_fn_p(), and real_onep().

Referenced by convert_expand_mult_copysign().

◆ is_division_by()

Return whether USE_STMT is a floating-point division by DEF.

References cfun, gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), is_gimple_assign(), and stmt_can_throw_internal().

Referenced by execute_cse_reciprocals_1(), and insert_reciprocals().

◆ is_division_by_square()

Return whether USE_STMT is a floating-point division by DEF * DEF.

References cfun, gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), is_square_of(), SSA_NAME_DEF_STMT, stmt_can_throw_internal(), and TREE_CODE.

Referenced by insert_reciprocals().

◆ is_mult_by()

Return TRUE if USE_STMT is a multiplication of DEF by A.

References a, gimple_assign_rhs1(), gimple_assign_rhs2(), and gimple_assign_rhs_code().

Referenced by is_square_of(), and optimize_recip_sqrt().

◆ is_square_of()

Return whether USE_STMT is DEF * DEF.

References is_mult_by().

Referenced by execute_cse_reciprocals_1(), is_division_by_square(), and optimize_recip_sqrt().

◆ is_widening_mult_p()

`static`

Return true if STMT performs a widening multiplication, assuming the output type is TYPE. If so, store the unwidened types of the operands in *TYPE1_OUT and *TYPE2_OUT respectively. Also fill *RHS1_OUT and *RHS2_OUT such that converting those operands to types *TYPE1_OUT and *TYPE2_OUT would give the operands of the multiplication.

References gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), int_fits_type_p(), is_widening_mult_rhs_p(), NULL, TREE_CODE, TREE_TYPE, TYPE_OVERFLOW_TRAPS, and TYPE_PRECISION.

Referenced by convert_mult_to_widen(), and convert_plusminus_to_widen().

◆ is_widening_mult_rhs_p()

Return true if RHS is a suitable operand for a widening multiplication, assuming a target type of TYPE.

There are two cases:

- RHS makes some value at least twice as wide. Store that value in *NEW_RHS_OUT if so, and store its type in *TYPE_OUT.
- RHS is an integer constant. Store that value in *NEW_RHS_OUT if so, but leave *TYPE_OUT untouched.

References wi::bit_and(), build_nonstandard_integer_type(), wide_int_storage::from(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), int_mode_for_size(), is_gimple_assign(), wi::mask(), NULL, SSA_NAME_DEF_STMT, wi::to_wide(), TREE_CODE, tree_nonzero_bits(), TREE_TYPE, TYPE_PRECISION, TYPE_SIGN, TYPE_UNSIGNED, and widening_mult_conversion_strippable_p().

Referenced by is_widening_mult_p().

◆ last_fma_candidate_feeds_initial_phi()

`static`

After processing statements of a BB and recording STATE, return true if the initial phi is fed by the last FMA candidate result or by one such result from previously processed BBs marked in LAST_RESULT_SET.

References hash_set< KeyId, Lazy, Traits >::contains(), FOR_EACH_PHI_ARG, SSA_OP_USE, and USE_FROM_PTR.

◆ make_pass_cse_reciprocals()

`gimple_opt_pass * make_pass_cse_reciprocals (gcc::context *ctxt)`

◆ make_pass_cse_sincos()

`gimple_opt_pass * make_pass_cse_sincos (gcc::context *ctxt)`

◆ make_pass_expand_pow()

`gimple_opt_pass * make_pass_expand_pow (gcc::context *ctxt)`

◆ make_pass_optimize_widening_mul()

`gimple_opt_pass * make_pass_optimize_widening_mul (gcc::context *ctxt)`

◆ match_arith_overflow()

`static`

Recognize, for unsigned x,
`x = y - z;`
`if (x > y)`
where there are other uses of x, and replace it with
`_7 = .SUB_OVERFLOW (y, z);`
`x = REALPART_EXPR <_7>;`
`_8 = IMAGPART_EXPR <_7>;`
`if (_8)`
and similarly for addition.

Also recognize:
`yc = (type) y;`
`zc = (type) z;`
`x = yc + zc;`
`if (x > max)`
where y and z have unsigned types with maximum max, there are other uses of x, and all of those cast x back to that unsigned type; again replace it with
`_7 = .ADD_OVERFLOW (y, z);`
`_9 = REALPART_EXPR <_7>;`
`_8 = IMAGPART_EXPR <_7>;`
`if (_8)`
and replace (utype) x with _9. The same applies with x >> popcount (max) instead of x > max.

Also recognize:
`x = ~z;`
`if (y > x)`
and replace it with
`_7 = .ADD_OVERFLOW (y, z);`
`_8 = IMAGPART_EXPR <_7>;`
`if (_8)`

And also recognize:
`z = x * y;`
`if (x != 0) goto <bb 3>; [50.00%] else goto <bb 4>; [50.00%]`
`<bb 3> [local count: 536870913]:`
`_2 = z / x;`
`_9 = _2 != y;`
`_10 = (int) _9;`
`<bb 4> [local count: 1073741824]:`
`# iftmp.0_3 = PHI <_10(3), 0(2)>`
and replace it with
`_7 = .MUL_OVERFLOW (x, y);`
`z = REALPART_EXPR <_7>;`
`_8 = IMAGPART_EXPR <_7>;`
`_9 = _8 != 0;`
`iftmp.0_3 = (int) _9;`
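The last pattern is the classic division-based test for multiplication overflow. A minimal sketch, assuming 32-bit unsigned operands, showing that it agrees with __builtin_mul_overflow (the GCC builtin behind .MUL_OVERFLOW); the `~z` form of the addition check is included as well. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Source pattern: z = x * y; overflow iff x != 0 && z / x != y.
bool mul_wrapped_by_division(std::uint32_t x, std::uint32_t y) {
  std::uint32_t z = x * y;
  return x != 0 && z / x != y;
}

bool mul_wrapped_builtin(std::uint32_t x, std::uint32_t y) {
  std::uint32_t z;
  return __builtin_mul_overflow(x, y, &z);
}

// Source pattern: x = ~z; if (y > x). Since ~z == UINT32_MAX - z,
// y > ~z holds exactly when y + z overflows.
bool add_wrapped_via_not(std::uint32_t y, std::uint32_t z) {
  return y > static_cast<std::uint32_t>(~z);
}
```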

References arith_overflow_check_p(), as_a(), boolean_type_node, build1(), build2(), build_complex_type(), build_int_cst(), can_mult_highpart_p(), cfg_changed, fold_convert, FOR_EACH_IMM_USE_FAST, FOR_EACH_IMM_USE_STMT, g, gcc_assert, gcc_checking_assert, GET_MODE_BITSIZE(), gimple_assign_cast_p(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_class(), gimple_assign_rhs_code(), gimple_assign_set_rhs1(), gimple_assign_set_rhs2(), gimple_assign_set_rhs_code(), gimple_assign_set_rhs_with_ops(), gimple_bb(), GIMPLE_BINARY_RHS, gimple_build_assign(), gimple_build_call_internal(), gimple_call_set_lhs(), gimple_cond_set_code(), gimple_cond_set_lhs(), gimple_cond_set_rhs(), gsi_end_p(), gsi_for_stmt(), gsi_insert_after(), gsi_insert_before(), GSI_NEW_STMT, gsi_next_nondebug(), gsi_prev_nondebug(), gsi_remove(), gsi_replace(), GSI_SAME_STMT, gsi_stmt(), has_zero_uses(), i, INTEGRAL_TYPE_P, is_gimple_assign(), is_gimple_debug(), make_ssa_name(), wi::max_value(), maybe_optimize_guarding_check(), wi::ne_p(), NULL, NULL_TREE, operand_equal_p(), optab_handler(), release_ssa_name(), wi::rshift(), sc, SCALAR_INT_TYPE_MODE, single_imm_use(), SSA_NAME_DEF_STMT, SSA_NAME_OCCURS_IN_ABNORMAL_PHI, wi::to_wide(), TREE_CODE, TREE_TYPE, TYPE_MODE, TYPE_PRECISION, TYPE_UNSIGNED, UNSIGNED, update_stmt(), USE_STMT, useless_type_conversion_p(), and wide_int_to_tree().

◆ match_saturation_add()

`static`

◆ match_saturation_add_with_assign()

`static`

◆ match_saturation_mul()

`static`

Try to match an unsigned saturating multiplication, that is:
`_6 = .MUL_OVERFLOW (a_4(D), b_5(D));`
`_2 = IMAGPART_EXPR <_6>;`
`if (_2 != 0) goto <bb 4>; [35.00%] else goto <bb 3>; [65.00%]`
`<bb 3> [local count: 697932184]:`
`_1 = REALPART_EXPR <_6>;`
`<bb 4> [local count: 1073741824]:`
`# _3 = PHI <18446744073709551615(2), _1(3)>`
=>
`_3 = .SAT_MUL (a_4(D), b_5(D));`
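In source form, the matched CFG is "multiply, and return the all-ones maximum if the multiplication overflowed"; the constant 18446744073709551615 in the PHI is UINT64_MAX. A minimal sketch for 64-bit unsigned operands using __builtin_mul_overflow; `sat_mul_u64` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>

// Unsigned saturating multiply: the product, or UINT64_MAX on overflow.
std::uint64_t sat_mul_u64(std::uint64_t a, std::uint64_t b) {
  std::uint64_t product;
  if (__builtin_mul_overflow(a, b, &product))  // overflow flag set
    return UINT64_MAX;
  return product;                              // wrapped product is exact here
}
```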

References build_saturation_binary_arith_call_and_insert(), gimple_phi_num_args(), gimple_phi_result(), gimple_unsigned_integer_sat_mul(), and NULL.

◆ match_saturation_sub()

static

◆ match_saturation_trunc()

static

◆ match_single_bit_test()

static

Replace `.POPCOUNT (x) == 1` or `.POPCOUNT (x) != 1` with `(x ^ (x - 1)) > x - 1` or `(x ^ (x - 1)) <= x - 1` if .POPCOUNT isn't a direct optab. Also handle `<=` and `>`, turning `.POPCOUNT (x) <= 1` into `(x & (x - 1)) == 0` and `.POPCOUNT (x) > 1` into `(x & (x - 1)) != 0`.

References build_int_cst(), build_zero_cst(), direct_internal_fn_supported_p(), dyn_cast(), g, gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_assign_set_rhs1(), gimple_assign_set_rhs2(), gimple_assign_set_rhs_code(), gimple_build_assign(), gimple_build_call_internal(), gimple_call_arg(), gimple_call_combined_fn(), gimple_call_lhs(), gimple_call_set_lhs(), gimple_cond_code(), gimple_cond_lhs(), gimple_cond_rhs(), gimple_cond_set_code(), gimple_cond_set_lhs(), gimple_cond_set_rhs(), gsi_for_stmt(), gsi_insert_before(), gsi_remove(), gsi_replace(), GSI_SAME_STMT, has_single_use(), integer_minus_one_node, integer_one_node, integer_onep(), integer_zero_node, INTEGRAL_TYPE_P, make_ssa_name(), OPTIMIZE_FOR_BOTH, release_defs(), SSA_NAME_DEF_STMT, TREE_CODE, tree_expr_nonzero_p(), TREE_TYPE, and update_stmt().
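The bit identities behind this kind of rewrite can be checked exhaustively for small values. This C++ sketch (all helper names are hypothetical) compares `(x ^ (x - 1)) > x - 1` against "exactly one bit set" and `(x & (x - 1)) == 0` against "at most one bit set", using a naive population count as the reference.

```cpp
#include <cstdint>

// Naive population count, used only as a reference.
unsigned naive_popcount(std::uint32_t x) {
  unsigned n = 0;
  for (; x != 0; x >>= 1)
    n += x & 1u;
  return n;
}

// popcount (x) == 1  <=>  (x ^ (x - 1)) > x - 1, in unsigned arithmetic.
// For x != 0, x ^ (x - 1) sets every bit up to and including the lowest set
// bit of x; that exceeds x - 1 only when x has no higher set bits.
// For x == 0 both sides are all-ones, so the result is false.
bool exactly_one_bit(std::uint32_t x) { return (x ^ (x - 1)) > x - 1; }

// popcount (x) <= 1  <=>  (x & (x - 1)) == 0: clearing the lowest set bit
// leaves zero only if at most one bit was set.
bool at_most_one_bit(std::uint32_t x) { return (x & (x - 1)) == 0; }

// Check both identities for every 16-bit value and a few wide values.
bool identities_hold() {
  for (std::uint32_t x = 0; x <= 0xFFFFu; ++x) {
    if (exactly_one_bit(x) != (naive_popcount(x) == 1)) return false;
    if (at_most_one_bit(x) != (naive_popcount(x) <= 1)) return false;
  }
  const std::uint32_t wide[] = {0x80000000u, 0xFFFFFFFFu, 0x80000001u};
  for (std::uint32_t x : wide) {
    if (exactly_one_bit(x) != (naive_popcount(x) == 1)) return false;
    if (at_most_one_bit(x) != (naive_popcount(x) <= 1)) return false;
  }
  return true;
}
```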

◆ match_uaddc_usubc()

static

Try to match e.g. `_29 = .ADD_OVERFLOW (_3, _4); _30 = REALPART_EXPR <_29>; _31 = IMAGPART_EXPR <_29>; _32 = .ADD_OVERFLOW (_30, _38); _33 = REALPART_EXPR <_32>; _34 = IMAGPART_EXPR <_32>; _35 = _31 + _34;` as `_36 = .UADDC (_3, _4, _38); _33 = REALPART_EXPR <_36>; _35 = IMAGPART_EXPR <_36>;`, or `_22 = .SUB_OVERFLOW (_6, _5); _23 = REALPART_EXPR <_22>; _24 = IMAGPART_EXPR <_22>; _25 = .SUB_OVERFLOW (_23, _37); _26 = REALPART_EXPR <_25>; _27 = IMAGPART_EXPR <_25>; _28 = _24 | _27;` as `_29 = .USUBC (_6, _5, _37); _26 = REALPART_EXPR <_29>; _28 = IMAGPART_EXPR <_29>;`, provided that _38 or _37 above has a [0, 1] range and that _3, _4 and _30, or _6, _5 and _23, are unsigned integral types with the same precision. Whether `+`, `|` or `^` combines the IMAGPART_EXPR results doesn't matter: when one of the added or subtracted operands is in the [0, 1] range, at most one .ADD_OVERFLOW or .SUB_OVERFLOW will indicate overflow.

References build1(), build_complex_type(), build_zero_cst(), const_unop(), fold_convert, FOR_EACH_IMM_USE_FAST, g, gcc_checking_assert, gimple_assign_cast_p(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_build_assign(), gimple_build_call_internal(), gimple_call_arg(), gimple_call_internal_fn(), gimple_call_internal_p(), gimple_call_lhs(), gimple_call_set_lhs(), gsi_for_stmt(), gsi_insert_before(), gsi_remove(), gsi_replace(), GSI_SAME_STMT, has_single_use(), i, INTEGRAL_TYPE_P, is_gimple_assign(), is_gimple_call(), is_gimple_debug(), make_ssa_name(), NULL, NULL_TREE, num_imm_uses(), optab_handler(), r, release_defs(), SSA_NAME_DEF_STMT, TREE_CODE, TREE_OPERAND, TREE_TYPE, tree_zero_one_valued_p(), TYPE_MODE, TYPE_PRECISION, TYPE_UNSIGNED, types_compatible_p(), uaddc_cast(), uaddc_is_cplxpart(), uaddc_ne0(), and USE_STMT.
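A typical source of this pattern is multi-word (bignum) addition written with GCC's `__builtin_add_overflow`. The C++ sketch below, with hypothetical names, adds two 128-bit numbers stored as two 64-bit limbs. The inner `assert` records the property the comment relies on: with a carry-in of 0 or 1, at most one of the two overflow checks can fire, so `c1 + c2` (or `c1 | c2`) is the carry-out. Whether GCC turns this exact code into `.UADDC` depends on the version and target.

```cpp
#include <cassert>
#include <cstdint>

// One limb of add-with-carry: returns a + b + carry_in (mod 2**64) and
// stores the carry-out in *carry_out.  carry_in must be 0 or 1.
std::uint64_t add_with_carry(std::uint64_t a, std::uint64_t b,
                             std::uint64_t carry_in,
                             std::uint64_t *carry_out) {
  assert(carry_in <= 1);
  std::uint64_t s1, s2;
  bool c1 = __builtin_add_overflow(a, b, &s1);          // first .ADD_OVERFLOW
  bool c2 = __builtin_add_overflow(s1, carry_in, &s2);  // second .ADD_OVERFLOW
  assert(!(c1 && c2));  // at most one of them can overflow
  *carry_out = static_cast<std::uint64_t>(c1) + c2;     // same as c1 | c2 here
  return s2;
}

// Add two 128-bit values stored as {low, high} limbs; returns the final carry.
std::uint64_t add_u128(const std::uint64_t a[2], const std::uint64_t b[2],
                       std::uint64_t sum[2]) {
  std::uint64_t carry = 0;
  for (int i = 0; i < 2; ++i)
    sum[i] = add_with_carry(a[i], b[i], carry, &carry);
  return carry;
}
```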

◆ match_unsigned_saturation_mul()

static

◆ match_unsigned_saturation_sub()

static

◆ match_unsigned_saturation_trunc()

static

◆ maybe_optimize_guarding_check()

static

Helper function of match_arith_overflow. For MUL_OVERFLOW, if we have a check for non-zero like `_1 = x_4(D) * y_5(D); *res_7(D) = _1; if (x_4(D) != 0) goto <bb 3>; [50.00%] else goto <bb 4>; [50.00%] <bb 3> [local count: 536870913]: _2 = _1 / x_4(D); _9 = _2 != y_5(D); _10 = (int) _9; <bb 4> [local count: 1073741824]: # iftmp.0_3 = PHI <_10(3), 0(2)>`, then in addition to using `.MUL_OVERFLOW (x_4(D), y_5(D))` we can also optimize the `x_4(D) != 0` condition to 1.

References as_a(), cfg_changed, EDGE_COUNT, EDGE_SUCC, FOR_EACH_VEC_ELT, g, gimple_assign_cast_p(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs3(), gimple_assign_rhs_code(), gimple_bb(), gimple_cond_code(), gimple_cond_lhs(), gimple_cond_make_false(), gimple_cond_make_true(), gimple_cond_rhs(), gimple_phi_arg_def(), gsi_after_labels(), gsi_end_p(), gsi_last_bb(), gsi_next(), gsi_next_nondebug(), gsi_start_phis(), gsi_stmt(), i, integer_onep(), integer_zerop(), INTEGRAL_TYPE_P, is_gimple_debug(), NULL, operand_equal_p(), reset_flow_sensitive_info_in_bb(), safe_dyn_cast(), single_pred_edge(), single_pred_p(), single_succ(), single_succ_edge(), single_succ_p(), SSA_NAME_DEF_STMT, basic_block_def::succs, TREE_CODE, TREE_TYPE, TYPE_PRECISION, and update_stmt().

Referenced by match_arith_overflow().
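The guarded-division idiom in this comment is the classic portable overflow test for multiplication. The C++ sketch below uses 32-bit unsigned arithmetic so that the wrapped product is well defined; both function names are hypothetical. Since multiplying by zero never overflows, the builtin form needs no `x != 0` guard, which is why the guard can be folded once `.MUL_OVERFLOW` is used.

```cpp
#include <cstdint>

// Hypothetical example of the guarded-division overflow check: the wrapped
// product is stored, and overflow is detected by dividing back, with a
// guard that avoids dividing by zero.
int mul_overflows_by_division(std::uint32_t x, std::uint32_t y,
                              std::uint32_t *res) {
  std::uint32_t prod = x * y;  // wraps modulo 2**32
  *res = prod;
  return x != 0 ? prod / x != y : 0;
}

// The same question asked through the GCC builtin that maps to .MUL_OVERFLOW.
int mul_overflows_builtin(std::uint32_t x, std::uint32_t y,
                          std::uint32_t *res) {
  return __builtin_mul_overflow(x, y, res);
}
```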

◆ maybe_record_sincos()

static

Records an occurrence at statement USE_STMT in the vector of statements STMTS if it is dominated by *TOP_BB, dominates it, or *TOP_BB is not yet initialized. Returns true if the occurrence was pushed on the vector. Adjusts *TOP_BB to be the basic block dominating all statements in the vector.

References CDI_DOMINATORS, dominated_by_p(), and gimple_bb().

Referenced by execute_cse_sincos_1().

◆ optimize_recip_sqrt()

static

Transform sequences like `t = sqrt (a); x = 1.0 / t; r1 = x * x; r2 = a * x;` into `t = sqrt (a); r1 = 1.0 / a; r2 = t; x = r1 * r2;`, depending on the uses of x, r1 and r2. This removes one multiplication and allows the sqrt and division operations to execute in parallel. DEF_GSI is the gsi of the initial division by sqrt that defines DEF (x in the example above).

References a, dconst1, dump_file, dyn_cast(), fold_stmt_inplace(), FOR_EACH_IMM_USE_STMT, FOR_EACH_VEC_ELT, gcc_assert, gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_assign_set_rhs_from_tree(), gimple_bb(), gimple_build_assign(), gimple_call_arg(), gimple_call_combined_fn(), gimple_call_lhs(), gsi_for_stmt(), gsi_insert_after(), gsi_insert_before(), GSI_NEW_STMT, gsi_remove(), GSI_SAME_STMT, gsi_stmt(), i, is_gimple_debug(), is_mult_by(), is_square_of(), make_temp_ssa_name(), NULL, NULL_TREE, print_gimple_stmt(), real_equal(), release_defs(), release_ssa_name(), SSA_NAME_DEF_STMT, TDF_NONE, TREE_CODE, TREE_REAL_CST, TREE_TYPE, and update_stmt().
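The algebra can be checked numerically: with `x = 1 / sqrt (a)`, `x * x` equals `1 / a` and `a * x` equals `sqrt (a)`. The C++ sketch below (hypothetical names) evaluates both sequences. They agree only up to rounding, so a compiler would presumably apply this rewrite only under relaxed floating-point rules.

```cpp
#include <cmath>

struct RecipSqrt {
  double x, r1, r2;
};

// Original sequence: t = sqrt (a); x = 1.0 / t; r1 = x * x; r2 = a * x;
RecipSqrt original_seq(double a) {
  double t = std::sqrt(a);
  double x = 1.0 / t;
  return {x, x * x, a * x};
}

// Rewritten sequence: t = sqrt (a); r1 = 1.0 / a; r2 = t; x = r1 * r2;
// The division no longer waits for the sqrt, so the two can overlap.
RecipSqrt rewritten_seq(double a) {
  double t = std::sqrt(a);
  double r1 = 1.0 / a;
  double r2 = t;
  return {r1 * r2, r1, r2};
}

// Relative-error comparison used to check that the results match.
bool nearly_equal(double p, double q) {
  return std::fabs(p - q) <= 1e-12 * std::fmax(std::fabs(p), std::fabs(q));
}
```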

◆ optimize_spaceship()

static

If the target has a `spaceship<MODE>3` expander, pattern-recognize `<bb 2> [local count: 1073741824]: if (a_2(D) == b_3(D)) goto <bb 6>; [34.00%] else goto <bb 3>; [66.00%] <bb 3> [local count: 708669601]: if (a_2(D) < b_3(D)) goto <bb 6>; [1.04%] else goto <bb 4>; [98.96%] <bb 4> [local count: 701299439]: if (a_2(D) > b_3(D)) goto <bb 5>; [48.89%] else goto <bb 6>; [51.11%] <bb 5> [local count: 342865295]: <bb 6> [local count: 1073741824]:` and turn it into `<bb 2> [local count: 1073741824]: _1 = .SPACESHIP (a_2(D), b_3(D), 0); if (_1 == 0) goto <bb 6>; [34.00%] else goto <bb 3>; [66.00%] <bb 3> [local count: 708669601]: if (_1 == -1) goto <bb 6>; [1.04%] else goto <bb 4>; [98.96%] <bb 4> [local count: 701299439]: if (_1 == 1) goto <bb 5>; [48.89%] else goto <bb 6>; [51.11%] <bb 5> [local count: 342865295]: <bb 6> [local count: 1073741824]:` so that the backend can emit an optimal comparison and conditional jump sequence. If `<bb 6>` above has a single PHI like `# _27 = PHI<0(2), -1(3), -128(4), 1(5)>`, then replace it with, effectively, `_1 = .SPACESHIP (a_2(D), b_3(D), -128); _27 = _1;`.

References a, as_a(), boolean_false_node, build_int_cst(), cond_only_block_p(), EDGE_COUNT, EDGE_SUCC, empty_block_p(), g, gcc_assert, gimple_bb(), gimple_build_assign(), gimple_build_call_internal(), gimple_call_set_lhs(), gimple_cond_code(), gimple_cond_lhs(), gimple_cond_rhs(), gimple_cond_set_code(), gimple_cond_set_lhs(), gimple_cond_set_rhs(), gimple_phi_arg_def_from_edge(), gimple_phi_result(), gsi_end_p(), gsi_for_stmt(), gsi_insert_before(), gsi_last_bb(), gsi_next(), GSI_SAME_STMT, gsi_start_phis(), HONOR_NANS(), i, integer_all_onesp(), integer_minus_one_node, integer_one_node, integer_onep(), integer_type_node, integer_zero_node, integer_zerop(), INTEGRAL_TYPE_P, make_ssa_name(), wi::minus_one(), NULL, wi::one(), operand_equal_p(), optab_handler(), basic_block_def::preds, safe_dyn_cast(), SCALAR_FLOAT_TYPE_P, SET_PHI_ARG_DEF_ON_EDGE, set_range_info(), wi::shwi(), SIGNED, signed_char_type_node, single_pred_p(), single_succ(), single_succ_edge(), single_succ_p(), wi::to_wide(), wi::to_widest(), TREE_CODE, tree_int_cst_sgn(), TREE_TYPE, TYPE_MAX_VALUE, TYPE_MIN_VALUE, TYPE_MODE, TYPE_PRECISION, TYPE_UNSIGNED, update_stmt(), useless_type_conversion_p(), and virtual_operand_p().
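The two shapes can be modelled in ordinary C++. `branchy_compare` mirrors the original chain of comparisons and the PHI values `0, -1, -128, 1`; `spaceship` is a hypothetical stand-in for `.SPACESHIP`, assuming (as the PHI example suggests) that its third argument is the value produced when the operands are unordered, i.e. when a NaN is involved. This illustrates the semantics only; it is not GCC's implementation.

```cpp
#include <cmath>

// Mirrors the original CFG: == first, then <, then >, falling through to
// the "unordered" value when none holds (only possible with NaNs).
int branchy_compare(double a, double b) {
  if (a == b) return 0;   // edge from bb 2
  if (a < b) return -1;   // edge from bb 3
  if (a > b) return 1;    // via bb 5
  return -128;            // edge from bb 4: unordered
}

// Hypothetical model of .SPACESHIP (a, b, unordered_val).
int spaceship(double a, double b, int unordered_val) {
  if (std::isnan(a) || std::isnan(b)) return unordered_val;
  return a < b ? -1 : (a > b ? 1 : 0);
}
```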

◆ powi_as_mults()

| tree powi_as_mults (gimple_stmt_iterator *gsi, location_t loc, tree arg0, HOST_WIDE_INT n) |

Convert `ARG0**N` to a tree of multiplications of ARG0 with itself. This function needs to be kept in sync with powi_cost.

References absu_hwi(), build_one_cst(), build_real(), cache, dconst1, gimple_build_assign(), gimple_set_location(), gsi_insert_before(), GSI_SAME_STMT, make_temp_ssa_name(), NULL, powi_as_mults_1(), POWI_TABLE_SIZE, and TREE_TYPE.

Referenced by attempt_builtin_powi(), and gimple_expand_builtin_powi().
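For comparison, here is the plain binary (square-and-multiply) method in C++. It is a swapped-in, simpler technique: unlike powi_as_mults it uses neither powi_table nor the window method, so for some exponents it needs more multiplications. Negative exponents are handled by taking the reciprocal of `x**|n|`. The name `powi_binary` is hypothetical.

```cpp
#include <cstdint>

// Square-and-multiply: computes x**n with O(log |n|) multiplications.
double powi_binary(double x, std::int64_t n) {
  // Magnitude of n as unsigned, safe even for INT64_MIN.
  std::uint64_t e = n < 0 ? 0 - static_cast<std::uint64_t>(n)
                          : static_cast<std::uint64_t>(n);
  double result = 1.0;
  double base = x;  // holds x, x**2, x**4, ... in turn
  while (e != 0) {
    if (e & 1)
      result *= base;  // include this power of x
    e >>= 1;
    if (e != 0)
      base *= base;
  }
  return n < 0 ? 1.0 / result : result;
}
```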

◆ powi_as_mults_1()

static

Recursive subroutine of powi_as_mults. This function takes the array, CACHE, of already calculated exponents and an exponent N and returns a tree that corresponds to CACHE[1]**N, with type TYPE.

References cache, gimple_build_assign(), gimple_set_location(), gsi_insert_before(), GSI_SAME_STMT, make_temp_ssa_name(), NULL, powi_as_mults_1(), powi_table, POWI_TABLE_SIZE, and POWI_WINDOW_SIZE.

Referenced by powi_as_mults(), and powi_as_mults_1().
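The cached recursion can be sketched as follows. The split rule here (`j = i / 2`, so `x**i = x**j * x**(i - j)`) is a hypothetical stand-in for powi_table, chosen only because it is easy to state; it gives a valid but generally non-optimal evaluation order. The cache plays the role of CACHE: once an exponent has been computed, reusing it costs no further multiplication.

```cpp
#include <cstddef>
#include <vector>

// Evaluates x**n (1 <= n <= max_n) by memoized splitting and counts the
// multiplications performed.  max_n must be at least 1.
struct PowEvaluator {
  std::vector<double> cache;  // cache[i] holds x**i once computed
  std::vector<bool> known;    // known[i] records whether cache[i] is valid
  int mults = 0;

  PowEvaluator(double x, std::size_t max_n)
      : cache(max_n + 1, 0.0), known(max_n + 1, false) {
    cache[1] = x;  // like CACHE[1], the base itself
    known[1] = true;
  }

  double eval(std::size_t n) {
    if (known[n])
      return cache[n];
    std::size_t j = n / 2;  // hypothetical split; GCC consults powi_table
    double v = eval(j) * eval(n - j);
    ++mults;  // one multiplication per newly computed exponent
    cache[n] = v;
    known[n] = true;
    return v;
  }
};
```

For example, evaluating exponent 16 computes 2, 4, 8 and 16 (four multiplications); a later request for 12 only adds 3, 6 and 12.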

◆ powi_cost()

static

Return the number of multiplications required to calculate powi(x,n) for an arbitrary x, given the exponent N. This function needs to be kept in sync with powi_as_mults.

References absu_hwi(), cache, powi_lookup_cost(), POWI_TABLE_SIZE, and POWI_WINDOW_SIZE.

Referenced by expand_pow_as_sqrts(), gimple_expand_builtin_pow(), and gimple_expand_builtin_powi().
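As a point of reference, the plain binary method has a closed-form cost for n >= 1: one squaring per bit after the leading 1, plus one multiplication per additional set bit. The C++ sketch below (hypothetical name) computes it. A power tree can do better: n = 15 costs 6 here, whereas the addition chain 1, 2, 3, 6, 12, 15 needs only 5 multiplications.

```cpp
#include <cstdint>

// Multiplications used by left-to-right square-and-multiply for x**n.
int binary_method_cost(std::uint64_t n) {
  if (n == 0)
    return 0;
  int bits = 0, ones = 0;
  for (std::uint64_t m = n; m != 0; m >>= 1) {
    ++bits;
    ones += static_cast<int>(m & 1);
  }
  return (bits - 1) + (ones - 1);  // squarings + extra multiplies
}
```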

◆ powi_lookup_cost()

static

Return the number of multiplications required to calculate powi(x,n) where n is less than POWI_TABLE_SIZE. This is a subroutine of powi_cost. CACHE is an array indicating which exponents have already been calculated.

References cache, powi_lookup_cost(), and powi_table.

Referenced by powi_cost(), and powi_lookup_cost().

◆ print_nested_fn()

static

Print to STREAM the repeated application of function FNAME to ARG N times. So, for FNAME = "foo", ARG = "x", N = 2 it would print: "foo (foo (x))".

References print_nested_fn().

Referenced by dump_fractional_sqrt_sequence(), and print_nested_fn().
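The recursion is straightforward. This C++ sketch is a hypothetical variant that returns a `std::string` instead of writing to STREAM, an assumption made so that the result is easy to check.

```cpp
#include <string>

// Wraps ARG in N applications of FNAME, e.g. "foo (foo (x))" for N = 2.
std::string nested_fn(const std::string &fname, const std::string &arg,
                      unsigned n) {
  if (n == 0)
    return arg;
  return fname + " (" + nested_fn(fname, arg, n - 1) + ")";
}
```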

◆ register_division_in()

inline static

Register that we found a division in BB. IMPORTANCE is a measure of how much weighting to give that division. Use IMPORTANCE = 2 to register a single division. If the division is going to be found multiple times use 1 (as it is with squares).

References basic_block_def::aux, occurrence::bb, occurrence::bb_has_division, cfun, ENTRY_BLOCK_PTR_FOR_FN, insert_bb(), NULL, occurrence::num_divisions, occ_head, and occurrence::occurrence().

Referenced by execute_cse_reciprocals_1().

◆ replace_reciprocal()

inline static

Replace the division at USE_P with a multiplication by the reciprocal, if possible.

References basic_block_def::aux, occurrence::bb, fold_stmt_inplace(), gimple_assign_set_rhs_code(), gimple_bb(), gsi_for_stmt(), optimize_bb_for_speed_p(), occurrence::recip_def, occurrence::recip_def_stmt, SET_USE, update_stmt(), and USE_STMT.

Referenced by execute_cse_reciprocals_1().
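At the source level, the effect is that several divisions by the same value become multiplications by one shared reciprocal, as in the C++ sketch below (hypothetical names). Because `a * (1 / d)` can differ from `a / d` in the last bit, this kind of rewrite is generally only allowed when reciprocal approximations are permitted (in GCC, `-freciprocal-math`).

```cpp
#include <cstddef>

// Before: one division per element.
void scale_by_division(double *v, std::size_t n, double d) {
  for (std::size_t i = 0; i < n; ++i)
    v[i] = v[i] / d;
}

// After: a single division computes 1 / d, and each element is multiplied.
void scale_by_reciprocal(double *v, std::size_t n, double d) {
  const double recip = 1.0 / d;
  for (std::size_t i = 0; i < n; ++i)
    v[i] = v[i] * recip;
}
```

With a power-of-two divisor such as 4.0 the reciprocal is exact, so both versions give identical results; for other divisors they may differ slightly.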

◆ replace_reciprocal_squares()

inline static

Replace occurrences of expr / (x * x) with expr * ((1 / x) * (1 / x)). Take as argument the use for (x * x).

References basic_block_def::aux, occurrence::bb, fold_stmt_inplace(), gimple_assign_set_rhs2(), gimple_assign_set_rhs_code(), gimple_bb(), gsi_for_stmt(), optimize_bb_for_speed_p(), occurrence::recip_def, SET_USE, occurrence::square_recip_def, update_stmt(), and USE_STMT.

Referenced by execute_cse_reciprocals_1().

◆ representable_as_half_series_p()

| bool representable_as_half_series_p (REAL_VALUE_TYPE c, unsigned n, struct pow_synth_sqrt_info *info) |

Return true iff the real value C can be represented as a sum of powers of 0.5 up to N. That is: $C = \sum_{i=1}^{N} a[i] \cdot 0.5^{i}$, where each a[i] is either 0 or 1. Record in INFO the various parameters of the synthesis algorithm, such as the factors a[i], the maximum 0.5 power and the number of multiplications that will be required.

References dconst0, dconsthalf, pow_synth_sqrt_info::deepest, pow_synth_sqrt_info::factors, i, pow_synth_sqrt_info::num_mults, real_arithmetic(), real_equal(), REAL_VALUE_NEGATIVE, and REAL_VALUE_TYPE.

Referenced by expand_pow_as_sqrts().
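For exactly representable values, a greedy binary expansion decides this: at step i, take `0.5**i` whenever it still fits in the remainder. In the C++ sketch below, `half_series_factors` is hypothetical and works on `double` rather than REAL_VALUE_TYPE. Each a[i] = 1 corresponds to a factor with i nested square roots; for example 0.75 = 0.5 + 0.25 gives `sqrt (x) * sqrt (sqrt (x))` for `x**0.75`.

```cpp
#include <vector>

// Returns true iff c == sum_{i=1..n} a[i] * 0.5**i with each a[i] in {0, 1}.
// On return, factors[i - 1] holds a[i].
bool half_series_factors(double c, unsigned n, std::vector<int> &factors) {
  factors.assign(n, 0);
  if (c < 0.0)
    return false;
  double remainder = c;
  double half_pow = 1.0;
  for (unsigned i = 1; i <= n; ++i) {
    half_pow *= 0.5;  // 0.5**i, exact in binary floating point
    if (remainder >= half_pow) {
      factors[i - 1] = 1;
      remainder -= half_pow;  // exact for the dyadic values involved
    }
  }
  return remainder == 0.0;
}
```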

◆ result_of_phi()

If OP is an SSA name defined by a PHI node, return the PHI statement. Otherwise return NULL.

References dyn_cast(), NULL, SSA_NAME_DEF_STMT, and TREE_CODE.

Referenced by convert_mult_to_fma().

◆ target_supports_divmod_p()

Return true if target has support for divmod.

References FOR_EACH_MODE_FROM, NULL, NULL_RTX, optab_handler(), optab_libfunc(), and targetm.

Referenced by divmod_candidate_p().

◆ uaddc_cast()

Helper of match_uaddc_usubc. Look through an integral cast that preserves a [0, 1] value range (unless the source has a 1-bit signed type) and whose result has a single use.

References g, gimple_assign_cast_p(), gimple_assign_lhs(), gimple_assign_rhs1(), has_single_use(), INTEGRAL_TYPE_P, SSA_NAME_DEF_STMT, TREE_CODE, TREE_TYPE, TYPE_PRECISION, and TYPE_UNSIGNED.

Referenced by match_uaddc_usubc().

◆ uaddc_is_cplxpart()

Return true if G is an assignment of the {REAL,IMAG}PART_EXPR given by PART whose operand is an SSA_NAME.

References g, gimple_assign_rhs1(), gimple_assign_rhs_code(), is_gimple_assign(), TREE_CODE, and TREE_OPERAND.

Referenced by match_uaddc_usubc().

◆ uaddc_ne0()

Helper of match_uaddc_usubc. Look through a NE_EXPR comparison with 0, which also preserves the [0, 1] value range.

References g, gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), has_single_use(), integer_zerop(), is_gimple_assign(), SSA_NAME_DEF_STMT, and TREE_CODE.

Referenced by match_uaddc_usubc().

◆ widening_mult_conversion_strippable_p()

Return true if stmt is a type conversion operation that can be stripped when used in a widening multiply operation.

References CONVERT_EXPR_CODE_P, gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs_code(), TREE_CODE, TREE_TYPE, TYPE_PRECISION, and TYPE_UNSIGNED.

Referenced by is_widening_mult_rhs_p().
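For context, conversions of this kind typically come from source like the C++ sketch below (hypothetical name), where both 32-bit operands are extended to 64 bits before multiplying. If those extensions can be stripped, the operation can be carried out as a single 32 x 32 to 64-bit widening multiply on targets that provide one.

```cpp
#include <cstdint>

// Both operands are zero-extended from 32 to 64 bits, then multiplied.
// The full 64-bit product of two 32-bit values never overflows.
std::uint64_t widening_mul_u32(std::uint32_t a, std::uint32_t b) {
  return static_cast<std::uint64_t>(a) * static_cast<std::uint64_t>(b);
}
```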

Variable Documentation

◆ conv_removed

| int conv_removed |

◆ divmod_calls_inserted

| int divmod_calls_inserted |

◆ fmas_inserted

| int fmas_inserted |

◆ highpart_mults_inserted

| int highpart_mults_inserted |

◆ inserted

| int inserted |

◆ maccs_inserted

| int maccs_inserted |

◆ occ_head

static

The instance of "struct occurrence" representing the highest interesting block in the dominator tree.

Referenced by execute_cse_reciprocals_1(), and register_division_in().

◆ occ_pool

static

Allocation pool for getting instances of "struct occurrence".

Referenced by occurrence::operator delete(), and occurrence::operator new().

◆ powi_table

static

The following table is an efficient representation of an "optimal power tree". For each value i, the corresponding value j in the table states that an optimal evaluation sequence for calculating pow(x,i) can be found by evaluating pow(x,j)*pow(x,i-j). An optimal power tree for the first 100 integers is given in Knuth's "Seminumerical Algorithms".

Referenced by powi_as_mults_1(), and powi_lookup_cost().

◆ reciprocal_stats

| struct { ... } reciprocal_stats |

Referenced by insert_reciprocals().

◆ sincos_stats

| struct { ... } sincos_stats |

Referenced by execute_cse_conv_1(), and execute_cse_sincos_1().

◆ widen_mul_stats

| struct { ... } widen_mul_stats |

◆ widen_mults_inserted

| int widen_mults_inserted |