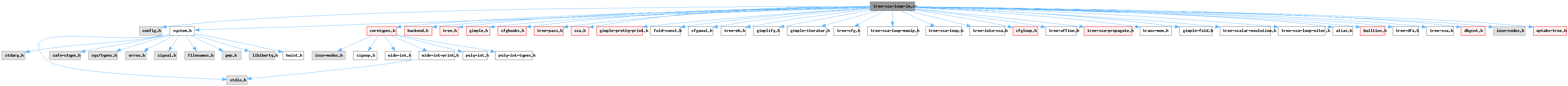

#include "config.h"
#include "system.h"
#include "coretypes.h"
#include "backend.h"
#include "tree.h"
#include "gimple.h"
#include "cfghooks.h"
#include "tree-pass.h"
#include "ssa.h"
#include "gimple-pretty-print.h"
#include "fold-const.h"
#include "cfganal.h"
#include "tree-eh.h"
#include "gimplify.h"
#include "gimple-iterator.h"
#include "tree-cfg.h"
#include "tree-ssa-loop-manip.h"
#include "tree-ssa-loop.h"
#include "tree-into-ssa.h"
#include "cfgloop.h"
#include "tree-affine.h"
#include "tree-ssa-propagate.h"
#include "trans-mem.h"
#include "gimple-fold.h"
#include "tree-scalar-evolution.h"
#include "tree-ssa-loop-niter.h"
#include "alias.h"
#include "builtins.h"
#include "tree-dfa.h"
#include "tree-ssa.h"
#include "dbgcnt.h"
#include "insn-codes.h"
#include "optabs-tree.h"

Data Structures

| Kind | Name |
|---|---|
| struct | lim_aux_data |
| struct | mem_ref_loc |
| class | im_mem_ref |
| struct | mem_ref_hasher |
| struct | fmt_data |
| class | rewrite_mem_ref_loc |
| class | first_mem_ref_loc_1 |
| class | sm_set_flag_if_changed |
| struct | sm_aux |
| struct | seq_entry |
| class | ref_always_accessed |
| class | ref_in_loop_hot_body |

Macros

| Directive | Definition |
|---|---|
| #define | LIM_EXPENSIVE ((unsigned) param_lim_expensive) |
| #define | ALWAYS_EXECUTED_IN(BB) |
| #define | SET_ALWAYS_EXECUTED_IN(BB, VAL) |
| #define | UNANALYZABLE_MEM_ID 0 |
| #define | MEM_ANALYZABLE(REF) |

Enumerations

| Kind | Definition |
|---|---|
| enum | dep_kind { lim_raw, sm_war, sm_waw } |
| enum | dep_state { dep_unknown, dep_independent, dep_dependent } |
| enum | move_pos { MOVE_IMPOSSIBLE, MOVE_PRESERVE_EXECUTION, MOVE_POSSIBLE } |
| enum | sm_kind { sm_ord, sm_unord, sm_other } |

Functions

| Return type | Function |
|---|---|
| static void | record_loop_dependence (class loop *loop, im_mem_ref *ref, dep_kind kind, dep_state state) |
| static dep_state | query_loop_dependence (class loop *loop, im_mem_ref *ref, dep_kind kind) |
| static bool | ref_indep_loop_p (class loop *, im_mem_ref *, dep_kind) |
| static bool | ref_always_accessed_p (class loop *, im_mem_ref *, bool) |
| static bool | refs_independent_p (im_mem_ref *, im_mem_ref *, bool=true) |
| static struct lim_aux_data * | init_lim_data (gimple *stmt) |
| static struct lim_aux_data * | get_lim_data (gimple *stmt) |
| static void | free_lim_aux_data (struct lim_aux_data *data) |
| static void | clear_lim_data (gimple *stmt) |
| static enum move_pos | movement_possibility_1 (gimple *stmt) |
| static enum move_pos | movement_possibility (gimple *stmt) |
| bool | bb_colder_than_loop_preheader (basic_block bb, class loop *loop) |
| static class loop * | get_coldest_out_loop (class loop *outermost_loop, class loop *loop, basic_block curr_bb) |
| static class loop * | outermost_invariant_loop (tree def, class loop *loop) |
| static bool | add_dependency (tree def, struct lim_aux_data *data, class loop *loop, bool add_cost) |
| static unsigned | stmt_cost (gimple *stmt) |
| static class loop * | outermost_indep_loop (class loop *outer, class loop *loop, im_mem_ref *ref) |
| static tree * | simple_mem_ref_in_stmt (gimple *stmt, bool *is_store) |
| static bool | extract_true_false_args_from_phi (basic_block dom, gphi *phi, tree *true_arg_p, tree *false_arg_p) |
| static bool | determine_max_movement (gimple *stmt, bool must_preserve_exec) |
| static void | set_level (gimple *stmt, class loop *orig_loop, class loop *level) |
| static void | set_profitable_level (gimple *stmt) |
| static bool | nonpure_call_p (gimple *stmt) |
| static gimple * | rewrite_reciprocal (gimple_stmt_iterator *bsi) |
| static gimple * | rewrite_bittest (gimple_stmt_iterator *bsi) |
| static void | compute_invariantness (basic_block bb) |
| unsigned int | move_computations_worker (basic_block bb) |
| static bool | may_move_till (tree ref, tree *index, void *data) |
| static void | force_move_till_op (tree op, class loop *orig_loop, class loop *loop) |
| static bool | force_move_till (tree ref, tree *index, void *data) |
| static void | memref_free (class im_mem_ref *mem) |
| static im_mem_ref * | mem_ref_alloc (ao_ref *mem, unsigned hash, unsigned id) |
| static void | record_mem_ref_loc (im_mem_ref *ref, gimple *stmt, tree *loc) |
| static bool | set_ref_stored_in_loop (im_mem_ref *ref, class loop *loop) |
| static void | mark_ref_stored (im_mem_ref *ref, class loop *loop) |
| static bool | set_ref_loaded_in_loop (im_mem_ref *ref, class loop *loop) |
| static void | mark_ref_loaded (im_mem_ref *ref, class loop *loop) |
| static void | gather_mem_refs_stmt (class loop *loop, gimple *stmt) |
| static int | sort_bbs_in_loop_postorder_cmp (const void *bb1_, const void *bb2_, void *bb_loop_postorder_) |
| static int | sort_locs_in_loop_postorder_cmp (const void *loc1_, const void *loc2_, void *bb_loop_postorder_) |
| static void | analyze_memory_references (bool store_motion) |
| static bool | mem_refs_may_alias_p (im_mem_ref *mem1, im_mem_ref *mem2, hash_map< tree, name_expansion * > **ttae_cache, bool tbaa_p) |
| static int | find_ref_loc_in_loop_cmp (const void *loop_, const void *loc_, void *bb_loop_postorder_) |
| template<typename FN> static bool | for_all_locs_in_loop (class loop *loop, im_mem_ref *ref, FN fn) |
| static void | rewrite_mem_refs (class loop *loop, im_mem_ref *ref, tree tmp_var) |
| static mem_ref_loc * | first_mem_ref_loc (class loop *loop, im_mem_ref *ref) |
| static basic_block | execute_sm_if_changed (edge ex, tree mem, tree tmp_var, tree flag, edge preheader, hash_set< basic_block > *flag_bbs, edge &append_cond_position, edge &last_cond_fallthru) |
| static tree | execute_sm_if_changed_flag_set (class loop *loop, im_mem_ref *ref, hash_set< basic_block > *bbs) |
| static sm_aux * | execute_sm (class loop *loop, im_mem_ref *ref, hash_map< im_mem_ref *, sm_aux * > &aux_map, bool maybe_mt, bool use_other_flag_var) |
| static void | execute_sm_exit (class loop *loop, edge ex, vec< seq_entry > &seq, hash_map< im_mem_ref *, sm_aux * > &aux_map, sm_kind kind, edge &append_cond_position, edge &last_cond_fallthru, bitmap clobbers_to_prune) |
| static bool | sm_seq_push_down (vec< seq_entry > &seq, unsigned ptr, unsigned *at) |
| static int | sm_seq_valid_bb (class loop *loop, basic_block bb, tree vdef, vec< seq_entry > &seq, bitmap refs_not_in_seq, bitmap refs_not_supported, bool forked, bitmap fully_visited) |
| static void | hoist_memory_references (class loop *loop, bitmap mem_refs, const vec< edge > &exits) |
| static bool | is_self_write (im_mem_ref *load_ref, im_mem_ref *store_ref) |
| static bool | can_sm_ref_p (class loop *loop, im_mem_ref *ref) |
| static void | find_refs_for_sm (class loop *loop, bitmap sm_executed, bitmap refs_to_sm) |
| static bool | loop_suitable_for_sm (class loop *loop, const vec< edge > &exits) |
| static void | store_motion_loop (class loop *loop, bitmap sm_executed) |
| static void | do_store_motion (void) |
| static void | fill_always_executed_in_1 (class loop *loop, sbitmap contains_call) |
| static void | fill_always_executed_in (void) |
| void | fill_coldest_and_hotter_out_loop (class loop *coldest_loop, class loop *hotter_loop, class loop *loop) |
| static void | tree_ssa_lim_initialize (bool store_motion) |
| static void | tree_ssa_lim_finalize (void) |
| unsigned int | loop_invariant_motion_in_fun (function *fun, bool store_motion) |
| gimple_opt_pass * | make_pass_lim (gcc::context *ctxt) |

Variables

| Type | Name |
|---|---|
| static hash_map< gimple *, lim_aux_data * > * | lim_aux_data_map |
| static bool | in_loop_pipeline |
| vec< class loop * > | coldest_outermost_loop |
| vec< class loop * > | hotter_than_inner_loop |
| static struct { ... } | memory_accesses |
| static bitmap_obstack | lim_bitmap_obstack |
| static obstack | mem_ref_obstack |
| static unsigned * | bb_loop_postorder |

Macro Definition Documentation

◆ ALWAYS_EXECUTED_IN

#define ALWAYS_EXECUTED_IN(BB)

The outermost loop for which execution of the header guarantees that the block will be executed.

Referenced by compute_invariantness(), determine_max_movement(), fill_always_executed_in_1(), and move_computations_worker().

◆ LIM_EXPENSIVE

#define LIM_EXPENSIVE ((unsigned) param_lim_expensive)

Minimum cost of an expensive expression.

Referenced by compute_invariantness(), determine_max_movement(), and stmt_cost().

◆ MEM_ANALYZABLE

#define MEM_ANALYZABLE(REF)

Whether the memory reference REF was analyzable.

Referenced by can_sm_ref_p(), and determine_max_movement().

◆ SET_ALWAYS_EXECUTED_IN

#define SET_ALWAYS_EXECUTED_IN(BB, VAL)

Referenced by fill_always_executed_in_1(), and tree_ssa_lim_finalize().

◆ UNANALYZABLE_MEM_ID

#define UNANALYZABLE_MEM_ID 0

ID of the shared unanalyzable memory reference.

Referenced by gather_mem_refs_stmt(), ref_indep_loop_p(), sm_seq_valid_bb(), and tree_ssa_lim_initialize().

Enumeration Type Documentation

◆ dep_kind

enum dep_kind

◆ dep_state

enum dep_state

◆ move_pos

enum move_pos

◆ sm_kind

enum sm_kind

sm_ord is used for ordinary stores for which we can retain the order with respect to other stores.

sm_unord is used for conditionally executed stores, which need to be able to execute in arbitrary order with respect to other stores.

sm_other is used for stores we do not try to apply store motion to.

| Enumerator | |
|---|---|
| sm_ord | |
| sm_unord | |
| sm_other | |

Function Documentation

◆ add_dependency()

static

DATA is a structure containing information associated with a statement inside LOOP, and DEF is one of the operands of this statement. Find the outermost loop enclosing LOOP in which the value of DEF is invariant and record it in the DATA->max_loop field. If DEF itself is defined inside this loop as well (i.e. we need to hoist it out of the loop if we want to hoist the statement represented by DATA), record the statement in which DEF is defined in the DATA->depends list. Additionally, if ADD_COST is true, add the cost of the computation of DEF to DATA->cost. If DEF is not invariant in LOOP, return false; otherwise return true.

References add_cost(), lim_aux_data::cost, flow_loop_nested_p(), get_lim_data(), gimple_bb(), basic_block_def::loop_father, lim_aux_data::max_loop, outermost_invariant_loop(), and SSA_NAME_DEF_STMT.

Referenced by determine_max_movement().
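The interplay of add_dependency and outermost_invariant_loop can be sketched with a much simpler model. The C++ below is a hypothetical illustration, not GCC code: it assumes the loop nest is a single chain in which each loop is identified by its depth (0 for the function body), and the names Def, StmtData and outermost_invariant_depth are invented for the example. A statement's hoisting limit starts at depth 1 and is tightened to the innermost bound imposed by any operand; operands that would have to move along with it are recorded as dependencies.

```cpp
#include <algorithm>
#include <optional>
#include <vector>

// Hypothetical model: loops in one nest are named by depth. Depth 0 is the
// function body; use_depth is the loop containing the using statement.
struct Def {
  int def_depth;                   // loop depth of the defining statement
  std::optional<int> hoist_depth;  // depth of the def's own max_loop, if hoistable
  unsigned cost;                   // cost of computing the def
};

// Analogue of outermost_invariant_loop: depth of the outermost loop around
// use_depth in which DEF is invariant, or nullopt if DEF varies even in the
// innermost loop.
std::optional<int> outermost_invariant_depth(const Def &def, int use_depth) {
  int common = std::min(use_depth, def.def_depth);
  if (def.hoist_depth)  // the def itself can leave loop *hoist_depth
    common = std::min(common, *def.hoist_depth - 1);
  if (common == use_depth)
    return std::nullopt;
  return common + 1;
}

struct StmtData {
  int max_depth = 1;  // start from the outermost loop
  unsigned cost = 0;
  std::vector<const Def *> depends;
};

// Analogue of add_dependency: tighten max_depth, accumulate cost, record deps.
bool add_dependency(const Def &def, StmtData &data, int use_depth,
                    bool add_cost) {
  std::optional<int> inv = outermost_invariant_depth(def, use_depth);
  if (!inv)
    return false;
  data.max_depth = std::max(data.max_depth, *inv);  // innermost bound wins
  if (!def.hoist_depth)  // nothing that would have to move with us
    return true;
  // Only count the cost of defs computed in the same loop as the use.
  if (add_cost && def.def_depth == use_depth)
    data.cost += def.cost;
  data.depends.push_back(&def);
  return true;
}
```

For a use at depth 3, an operand defined at depth 1 is invariant in the depth-2 loop, while an operand defined at depth 3 that cannot itself be hoisted makes add_dependency fail.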

◆ analyze_memory_references()

static

Gathers memory references in loops.

References im_mem_ref::accesses_in_loop, bb_loop_postorder, bitmap_ior_into(), cfun, current_loops, FOR_EACH_BB_FN, FOR_EACH_VEC_ELT, free(), gather_mem_refs_stmt(), gcc_assert, gcc_sort_r(), gsi_end_p(), gsi_next(), gsi_start_bb(), gsi_stmt(), i, LI_FROM_INNERMOST, basic_block_def::loop_father, loop_outer(), memory_accesses, n_basic_blocks_for_fn, loop::num, NUM_FIXED_BLOCKS, sort_bbs_in_loop_postorder_cmp(), and sort_locs_in_loop_postorder_cmp().

Referenced by loop_invariant_motion_in_fun().

◆ bb_colder_than_loop_preheader()

bool bb_colder_than_loop_preheader(basic_block bb, class loop *loop)

Compares the profile count of BB with that of LOOP's preheader and returns true if BB is colder, i.e. its count is lower. The comparison is three-state, as described in profile-count.h; false is returned if the inequality cannot be decided.

References basic_block_def::count, gcc_assert, and loop_preheader_edge().

Referenced by fill_coldest_and_hotter_out_loop(), and get_coldest_out_loop().
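A hedged sketch of the three-state comparison: Count and count_less are hypothetical stand-ins for GCC's profile_count and its ordering operator, reduced here to an "initialized" flag and a raw value. The point is that an undecidable comparison yields false in both directions, so a block with an unknown count is never treated as colder.

```cpp
#include <cstdint>

// Hypothetical stand-in for a profile count that may be unknown.
struct Count {
  bool initialized;
  std::uint64_t value;
};

// Three-state "less than": when either side is unknown, the answer cannot
// be decided and false is returned.
bool count_less(const Count &a, const Count &b) {
  if (!a.initialized || !b.initialized)
    return false;
  return a.value < b.value;
}

// Analogue of bb_colder_than_loop_preheader: true only if BB is known to
// execute less often than the loop preheader.
bool bb_colder_than_preheader(const Count &bb, const Count &preheader) {
  return count_less(bb, preheader);
}
```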

◆ can_sm_ref_p()

static

Returns true if we can perform store motion of REF from LOOP.

References bitmap_bit_p, DECL_P, error_mark_node, for_all_locs_in_loop(), for_each_index(), get_base_address(), is_gimple_reg_type(), lim_raw, im_mem_ref::loaded, may_move_till(), im_mem_ref::mem, MEM_ANALYZABLE, loop::num, ao_ref::ref, ref_always_accessed_p(), ref_indep_loop_p(), sm_war, TREE_CODE, tree_could_throw_p(), tree_could_trap_p(), TREE_READONLY, TREE_THIS_VOLATILE, and TREE_TYPE.

Referenced by find_refs_for_sm().

◆ clear_lim_data()

static

References free_lim_aux_data(), lim_aux_data_map, and NULL.

Referenced by move_computations_worker().

◆ compute_invariantness()

static

Determine the outermost loops in which the statements in basic block BB are invariant, and record them in the LIM_DATA associated with the statements.

References ALWAYS_EXECUTED_IN, lim_aux_data::always_executed_in, lim_aux_data::cost, determine_max_movement(), dump_file, dump_flags, get_gimple_rhs_class(), get_lim_data(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), GIMPLE_BINARY_RHS, gsi_end_p(), gsi_next(), gsi_start_bb(), gsi_start_phis(), gsi_stmt(), has_single_use(), in_loop_pipeline, basic_block_def::index, init_lim_data(), integer_onep(), is_gimple_assign(), LIM_EXPENSIVE, loop_containing_stmt(), loop_depth(), basic_block_def::loop_father, loop_outer(), lim_aux_data::max_loop, MOVE_IMPOSSIBLE, MOVE_POSSIBLE, MOVE_PRESERVE_EXECUTION, movement_possibility(), nonpure_call_p(), NULL, loop::num, outermost_invariant_loop(), print_gimple_stmt(), rewrite_bittest(), rewrite_reciprocal(), set_level(), set_profitable_level(), stmt_makes_single_store(), TDF_DETAILS, and TREE_CODE.

Referenced by loop_invariant_motion_in_fun().

◆ determine_max_movement()

Determine the outermost loop to which it is possible to hoist a statement STMT and store it in LIM_DATA (STMT)->max_loop. To do this we determine the outermost loop in which the value computed by STMT is invariant. If MUST_PRESERVE_EXEC is true, additionally choose a loop such that whether STMT is executed is preserved. The function also fills in other related information in LIM_DATA (STMT). It returns false if STMT cannot be hoisted outside of the loop it is defined in, and true otherwise.

References add_dependency(), ALWAYS_EXECUTED_IN, CDI_DOMINATORS, lim_aux_data::cost, dyn_cast(), ECF_RETURNS_TWICE, extract_true_false_args_from_phi(), FOR_EACH_PHI_ARG, FOR_EACH_SSA_TREE_OPERAND, get_coldest_out_loop(), get_immediate_dominator(), get_lim_data(), gimple_bb(), gimple_call_flags(), gimple_cond_code(), gimple_cond_lhs(), gimple_phi_num_args(), gimple_vuse(), gsi_end_p(), gsi_last_bb(), gsi_stmt(), is_a(), LIM_EXPENSIVE, basic_block_def::loop_father, lim_aux_data::max_loop, MEM_ANALYZABLE, memory_accesses, MIN, NULL, optab_vector, outermost_indep_loop(), lim_aux_data::ref, SSA_NAME_DEF_STMT, SSA_OP_USE, stmt_cost(), superloop_at_depth(), target_supports_op_p(), TREE_CODE, TREE_TYPE, UINT_MAX, USE_FROM_PTR, and VECTOR_TYPE_P.

Referenced by compute_invariantness().

◆ do_store_motion()

static

Try to perform store motion for all memory references modified inside loops.

References BITMAP_ALLOC, BITMAP_FREE, current_loops, lim_bitmap_obstack, loop::next, NULL, and store_motion_loop().

Referenced by loop_invariant_motion_in_fun().

◆ execute_sm()

static

Executes store motion of memory reference REF from LOOP. The initialization of the temporary variable is placed in the preheader of the loop, and the assignments from the temporary variable back to the reference are emitted on the loop exits.

References bb_in_transaction(), bitmap_bit_p, boolean_false_node, cfun, create_tmp_reg(), dump_file, dump_flags, execute_sm_if_changed_flag_set(), first_mem_ref_loc(), sm_aux::flag_bbs, for_each_index(), force_move_till(), get_lsm_tmp_name(), get_or_create_ssa_default_def(), gimple_build_assign(), gsi_for_stmt(), gsi_insert_before(), GSI_SAME_STMT, init_lim_data(), fmt_data::loop, loop_preheader_edge(), lim_aux_data::max_loop, NULL_TREE, loop::num, fmt_data::orig_loop, print_generic_expr(), hash_map< KeyId, Value, Traits >::put(), lim_aux_data::ref, ref_always_accessed_p(), ref_can_have_store_data_races(), rewrite_mem_refs(), sm_aux::store_flag, suppress_warning(), TDF_DETAILS, lim_aux_data::tgt_loop, sm_aux::tmp_var, TREE_TYPE, and unshare_expr().

Referenced by hoist_memory_references().
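At source level, the basic transformation performed by store motion looks roughly like the hand-written C++ below. It is an illustration under the assumption that sum does not point into a (the pass has to establish such independence itself); the function names are hypothetical.

```cpp
// Before: every iteration loads and stores *sum.
void accumulate_original(int *sum, const int *a, int n) {
  for (int i = 0; i < n; ++i)
    *sum += a[i];
}

// After store motion (hand-written sketch): the load sits in the preheader,
// the loop works on a temporary, and the store is emitted on the exit.
void accumulate_store_motion(int *sum, const int *a, int n) {
  int lsm = *sum;   // initialization in the preheader
  for (int i = 0; i < n; ++i)
    lsm += a[i];    // references rewritten to the temporary
  *sum = lsm;       // store on the loop exit
}
```

Unlike the original, the transformed version writes *sum even when n == 0. Introducing a store on a path that had none can create a data race in multi-threaded code, which is the situation the flag-based scheme of execute_sm_if_changed addresses.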

◆ execute_sm_exit()

static

References im_mem_ref::accesses_in_loop, bitmap_set_bit, dump_file, dump_flags, error_mark_node, execute_sm_if_changed(), sm_aux::flag_bbs, gcc_assert, hash_map< KeyId, Value, Traits >::get(), gimple_assign_lhs(), gimple_build_assign(), gsi_insert_on_edge(), i, loop_preheader_edge(), im_mem_ref::mem, memory_accesses, NULL_TREE, loop::num, print_generic_expr(), ao_ref::ref, sm_ord, sm_other, sm_aux::store_flag, TDF_DETAILS, sm_aux::tmp_var, and unshare_expr().

Referenced by hoist_memory_references().

◆ execute_sm_if_changed()

static

Helper function for execute_sm. Emit code to store TMP_VAR into MEM along edge EX.

The store is only done if MEM has changed. We do this so that no changes to MEM occur on code paths that did not originally store into it.

The common case for execute_sm will transform:

    for (...) {
        if (foo)
            stuff;
        else
            MEM = TMP_VAR;
    }

into:

    lsm = MEM;
    for (...) {
        if (foo)
            stuff;
        else
            lsm = TMP_VAR;
    }
    MEM = lsm;

This function will generate:

    lsm = MEM;

    lsm_flag = false;
    ...
    for (...) {
        if (foo)
            stuff;
        else {
            lsm = TMP_VAR;
            lsm_flag = true;
        }
    }
    if (lsm_flag)      <--
        MEM = lsm;     <-- (X)

If MEM and TMP_VAR are NULL, the function returns the then-block so that the caller can insert (X) and other related statements.

References add_bb_to_loop(), add_phi_arg(), profile_probability::always(), profile_count::apply_probability(), profile_probability::apply_scale(), hash_set< KeyId, Lazy, Traits >::begin(), boolean_false_node, CDI_DOMINATORS, basic_block_def::count, create_empty_bb(), dominated_by_p(), hash_set< KeyId, Lazy, Traits >::end(), find_edge(), basic_block_def::flags, gimple_build_assign(), gimple_build_cond(), gimple_phi_arg_def(), gimple_phi_arg_edge(), gimple_phi_num_args(), gimple_phi_result(), GSI_CONTINUE_LINKING, gsi_end_p(), gsi_insert_after(), gsi_next(), gsi_start_bb(), gsi_start_phis(), profile_probability::guessed_always(), i, profile_probability::initialized_p(), profile_probability::invert(), basic_block_def::loop_father, make_edge(), make_single_succ_edge(), profile_count::nonzero_p(), NULL_TREE, profile_count::probability_in(), recompute_dominator(), redirect_edge_succ(), set_immediate_dominator(), single_pred_p(), single_succ_edge(), split_block_after_labels(), split_edge(), profile_probability::uninitialized(), UNKNOWN_LOCATION, unshare_expr(), update_stmt(), virtual_operand_p(), and profile_count::zero().

Referenced by execute_sm_exit(), and hoist_memory_references().
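The flag-based scheme described above can be written out as compilable C++. The functions below are hypothetical hand-written equivalents of the before/after shapes in the comment, with foo modelled as a per-iteration array of booleans; they are not output of the pass.

```cpp
// Before: MEM is written only on iterations where foo[i] is false.
void cond_store_original(int *mem, const bool *foo, int n, int tmp) {
  for (int i = 0; i < n; ++i) {
    if (foo[i]) {
      /* stuff */
    } else {
      *mem = tmp + i;
    }
  }
}

// After flag-based store motion: the final store (X) happens only if some
// iteration took the storing path, so paths that never wrote MEM still
// never write it.
void cond_store_flagged(int *mem, const bool *foo, int n, int tmp) {
  int lsm = *mem;
  bool lsm_flag = false;
  for (int i = 0; i < n; ++i) {
    if (foo[i]) {
      /* stuff */
    } else {
      lsm = tmp + i;
      lsm_flag = true;
    }
  }
  if (lsm_flag)
    *mem = lsm;  // (X)
}
```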

◆ execute_sm_if_changed_flag_set()

static

Helper function for execute_sm. At every location where REF is set, set an appropriate flag indicating the store.

References boolean_type_node, create_tmp_reg(), for_all_locs_in_loop(), get_lsm_tmp_name(), im_mem_ref::mem, and ao_ref::ref.

Referenced by execute_sm().

◆ extract_true_false_args_from_phi()

static

From a controlling predicate in DOM, determine the arguments of the PHI node PHI that are chosen if the predicate evaluates to true and to false, and store them in *TRUE_ARG_P and *FALSE_ARG_P if those are non-NULL. Returns true if the arguments can be determined, false otherwise.

References extract_true_false_controlled_edges(), gimple_bb(), and PHI_ARG_DEF.

Referenced by determine_max_movement(), and move_computations_worker().
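As a hedged source-level illustration: when the condition and both incoming values of a two-argument PHI are loop invariant, the PHI can be replaced by a single conditional select computed outside the loop, built from the true and false arguments this function extracts. The functions below are hypothetical hand-written equivalents; the corresponding GIMPLE rewrite happens when the PHI is moved (see move_computations_worker).

```cpp
// Before: x is chosen by an if/else inside the loop. In SSA form x is a
// PHI merging a (true edge) and b (false edge); c, a and b are invariant.
int phi_original(const int *v, int n, bool c, int a, int b) {
  int total = 0;
  for (int i = 0; i < n; ++i) {
    int x;
    if (c)
      x = a;  // argument selected when the predicate is true
    else
      x = b;  // argument selected when the predicate is false
    total += v[i] * x;
  }
  return total;
}

// After hoisting: the PHI becomes one conditional select before the loop.
int phi_hoisted(const int *v, int n, bool c, int a, int b) {
  int x = c ? a : b;
  int total = 0;
  for (int i = 0; i < n; ++i)
    total += v[i] * x;
  return total;
}
```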

◆ fill_always_executed_in()

static

Fills ALWAYS_EXECUTED_IN information for basic blocks, i.e. for each such basic block BB records the outermost loop for which execution of its header implies execution of BB.

References bitmap_clear(), bitmap_set_bit, cfun, current_loops, fill_always_executed_in_1(), FOR_EACH_BB_FN, gsi_end_p(), gsi_next(), gsi_start_bb(), gsi_stmt(), basic_block_def::index, last_basic_block_for_fn, loop_depth(), basic_block_def::loop_father, loop::next, and nonpure_call_p().

Referenced by loop_invariant_motion_in_fun().

◆ fill_always_executed_in_1()

Fills ALWAYS_EXECUTED_IN information for basic blocks of LOOP, i.e. for each such basic block BB records the outermost loop for which execution of its header implies execution of BB. CONTAINS_CALL is the bitmap of blocks that contain a nonpure call.

References ALWAYS_EXECUTED_IN, bitmap_bit_p, CDI_DOMINATORS, dominated_by_p(), dump_enabled_p(), dump_printf(), fill_always_executed_in_1(), finite_loop_p(), first_dom_son(), basic_block_def::flags, flow_bb_inside_loop_p(), FOR_EACH_EDGE, get_immediate_dominator(), loop::header, basic_block_def::index, loop::inner, last, loop::latch, basic_block_def::loop_father, loop_outer(), MSG_NOTE, loop::next, next_dom_son(), NULL, loop::num, loop::num_nodes, SET_ALWAYS_EXECUTED_IN, basic_block_def::succs, and worklist.

Referenced by fill_always_executed_in(), and fill_always_executed_in_1().

◆ fill_coldest_and_hotter_out_loop()

void fill_coldest_and_hotter_out_loop(class loop *coldest_loop, class loop *hotter_loop, class loop *loop)

Finds the coldest loop preheader for LOOP and also the nearest hotter loop to LOOP, then recurses into each inner loop.

References bb_colder_than_loop_preheader(), coldest_outermost_loop, current_loops, dump_enabled_p(), dump_printf(), fill_coldest_and_hotter_out_loop(), hotter_than_inner_loop, loop::inner, loop_outer(), loop_preheader_edge(), MSG_NOTE, loop::next, NULL, and loop::num.

Referenced by fill_coldest_and_hotter_out_loop(), and loop_invariant_motion_in_fun().

◆ find_ref_loc_in_loop_cmp()

static

Comparison function for bsearch, used to search for reference locations in a loop.

References bb_loop_postorder, flow_loop_nested_p(), gimple_bb(), basic_block_def::loop_father, loop::num, and mem_ref_loc::stmt.

Referenced by for_all_locs_in_loop().

◆ find_refs_for_sm()

Marks in REFS_TO_SM the references in LOOP for which store motion should be performed. SM_EXECUTED is the set of references for which store motion was performed in one of the outer loops.

References bitmap_set_bit, can_sm_ref_p(), dbg_cnt(), EXECUTE_IF_AND_COMPL_IN_BITMAP, i, memory_accesses, and loop::num.

Referenced by store_motion_loop().

◆ first_mem_ref_loc()

static

Returns the first reference location to REF in LOOP.

References for_all_locs_in_loop(), and NULL.

Referenced by execute_sm().

◆ for_all_locs_in_loop()

static

Iterates over all locations of REF in LOOP and its subloops, calling fn.operator() with each location as argument. When that operator returns true, the iteration stops and true is returned; otherwise false is returned.

References im_mem_ref::accesses_in_loop, bb_loop_postorder, find_ref_loc_in_loop_cmp(), flow_bb_inside_loop_p(), gimple_bb(), i, and mem_ref_loc::stmt.

Referenced by can_sm_ref_p(), execute_sm_if_changed_flag_set(), first_mem_ref_loc(), ref_always_accessed_p(), and rewrite_mem_refs().
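The iteration protocol can be sketched in standalone C++. Loc, first_loc_finder and the set-of-loop-numbers membership test are simplified, hypothetical stand-ins: GCC keeps the locations sorted by loop postorder and uses a binary search (find_ref_loc_in_loop_cmp) rather than the linear scan used here. The functor shows how an early-exit callback, in the spirit of first_mem_ref_loc, returns the first location found.

```cpp
#include <set>
#include <vector>

// Hypothetical, simplified stand-in for mem_ref_loc.
struct Loc {
  int loop_num;  // innermost loop containing the statement
  int stmt_id;   // identifies the statement
};

// Calls fn(loc) for each location whose loop is in LOOP_NUMS (the loop and
// its subloops). Stops and returns true as soon as fn returns true.
template <typename FN>
bool for_all_locs_in_loop(const std::set<int> &loop_nums,
                          const std::vector<Loc> &locs, FN fn) {
  for (const Loc &loc : locs)
    if (loop_nums.count(loc.loop_num) && fn(loc))
      return true;
  return false;
}

// Early-exit functor: remember the first location and stop the walk.
class first_loc_finder {
public:
  explicit first_loc_finder(const Loc **out) : out_(out) {}
  bool operator()(const Loc &loc) const {
    *out_ = &loc;
    return true;
  }

private:
  const Loc **out_;
};
```

A functor that always returns false visits every matching location, which is how a "for all" walk is expressed with the same interface.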

◆ force_move_till()

References force_move_till_op(), fmt_data::loop, fmt_data::orig_loop, TREE_CODE, and TREE_OPERAND.

Referenced by execute_sm().

◆ force_move_till_op()

If OP is an SSA name, force the statement that defines it to be moved out of LOOP. ORIG_LOOP is the loop in which EXPR is used.

References gcc_assert, gimple_nop_p(), is_gimple_min_invariant(), set_level(), SSA_NAME_DEF_STMT, and TREE_CODE.

Referenced by force_move_till().

◆ free_lim_aux_data()

static

◆ gather_mem_refs_stmt()

Gathers memory references in statement STMT in LOOP, storing the information about them in the memory_accesses structure. Marks the vops accessed through unrecognized statements there as well.

References ao_ref_alias_set(), ao_ref_base(), ao_ref_base_alias_set(), ao_ref_init(), bitmap_set_bit, build1(), build_aligned_type(), build_int_cst(), dump_file, dump_flags, error_mark_node, fold_build2, gcc_checking_assert, get_addr_base_and_unit_offset(), get_object_alignment(), gimple_vdef(), gimple_vuse(), im_mem_ref::id, init_lim_data(), poly_int< N, C >::is_constant(), is_gimple_assign(), is_gimple_mem_ref_addr(), iterative_hash_expr(), iterative_hash_host_wide_int(), known_eq, mark_ref_loaded(), mark_ref_stored(), ao_ref::max_size, ao_ref::max_size_known_p(), im_mem_ref::mem, mem_ref_alloc(), mem_ref_offset(), memory_accesses, NULL, loop::num, ao_ref::offset, operand_equal_p(), poly_int_tree_p(), print_generic_expr(), print_gimple_stmt(), ptr_type_node, record_mem_ref_loc(), ao_ref::ref, lim_aux_data::ref, im_mem_ref::ref_decomposed, reference_alias_ptr_type(), simple_mem_ref_in_stmt(), ao_ref::size, TDF_DETAILS, TDF_SLIM, wi::to_poly_offset(), TREE_CODE, TREE_OPERAND, TREE_THIS_VOLATILE, TREE_TYPE, TYPE_ALIGN, TYPE_SIZE, UNANALYZABLE_MEM_ID, and unshare_expr().

Referenced by analyze_memory_references().

◆ get_coldest_out_loop()

static

Checks for the coldest loop between OUTERMOST_LOOP and LOOP by comparing profile counts. The check has three steps:

1) Check whether CURR_BB is cold in its own loop_father; if it is, return NULL, meaning the statement should not be moved out at all.
2) If CURR_BB is not cold, check whether the pre-computed COLDEST_LOOP is outside OUTERMOST_LOOP; if it is inside OUTERMOST_LOOP, return COLDEST_LOOP as the hoist target.
3) If COLDEST_LOOP is outside OUTERMOST_LOOP, check whether the pre-computed HOTTER_THAN_INNER_LOOP records a hotter loop between OUTERMOST_LOOP and LOOP; if so, return its nested inner loop, otherwise return OUTERMOST_LOOP.

References bb_colder_than_loop_preheader(), coldest_outermost_loop, curr_bb, flow_loop_nested_p(), gcc_assert, hotter_than_inner_loop, loop::inner, loop_depth(), loop::next, NULL, and loop::num.

Referenced by determine_max_movement(), and ref_in_loop_hot_body::operator()().

◆ get_lim_data()

static

◆ hoist_memory_references()

static

Hoists memory references MEM_REFS out of LOOP. EXITS is the list of exit edges of LOOP.

References im_mem_ref::accesses_in_loop, hash_map< KeyId, Value, Traits >::begin(), bitmap_and_compl(), bitmap_and_compl_into(), bitmap_bit_p, bitmap_clear(), bitmap_clear_bit(), bitmap_copy(), bitmap_empty_p(), bitmap_set_bit, CDI_DOMINATORS, changed, dominated_by_p(), hash_map< KeyId, Value, Traits >::end(), EXECUTE_IF_SET_IN_BITMAP, execute_sm(), execute_sm_exit(), execute_sm_if_changed(), sm_aux::flag_bbs, FOR_EACH_VEC_ELT, gcc_assert, gimple_bb(), gimple_set_vdef(), gimple_vdef(), gsi_commit_one_edge_insert(), gsi_for_stmt(), gsi_remove(), i, lim_bitmap_obstack, loop_preheader_edge(), memory_accesses, NULL, NULL_TREE, loop::num, ref_indep_loop_p(), release_defs(), single_pred_edge(), sm_ord, sm_other, sm_seq_push_down(), sm_seq_valid_bb(), sm_unord, sm_waw, sm_aux::store_flag, and unlink_stmt_vdef().

Referenced by store_motion_loop().

◆ init_lim_data()

static

References lim_aux_data_map.

Referenced by compute_invariantness(), execute_sm(), and gather_mem_refs_stmt().

◆ is_self_write()

static

Returns true if LOAD_REF and STORE_REF form a "self write" pattern, where the stored value comes from the loaded value via SSA. For example, in a[i] = a[0] it is safe to hoist the load of a[0] even when i == 0.

References im_mem_ref::accesses_in_loop, ao_ref::base, gimple_assign_lhs(), gimple_assign_rhs1(), is_gimple_assign(), known_eq, im_mem_ref::mem, ao_ref::offset, operand_equal_p(), ao_ref::size, and TREE_CODE.

Referenced by ref_indep_loop_p().
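The example from the description can be checked directly. The two hypothetical functions below show the load of a[0] hoisted out of the loop; the result is unchanged because the only store that can touch a[0] (when i == 0) writes back the value just loaded from it.

```cpp
// Before: a[0] is reloaded on every iteration.
void self_write_original(int *a, int n) {
  for (int i = 0; i < n; ++i)
    a[i] = a[0];
}

// After hoisting the load: valid because a[0] = a[0] does not change a[0].
void self_write_hoisted(int *a, int n) {
  int t = a[0];
  for (int i = 0; i < n; ++i)
    a[i] = t;
}
```

By contrast, a[i] = a[0] + 1 is not a self write: the i == 0 iteration changes a[0], so a hoisted load would produce different values for later elements.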

◆ loop_invariant_motion_in_fun()

Moves invariants out of loops. Only "expensive" invariants are moved out, i.e. those that are likely to be a win regardless of the register pressure. Store motion is performed only if STORE_MOTION is true.

References analyze_memory_references(), BASIC_BLOCK_FOR_FN, cfun, coldest_outermost_loop, compute_invariantness(), current_loops, do_store_motion(), fill_always_executed_in(), fill_coldest_and_hotter_out_loop(), free(), gsi_commit_edge_inserts(), hotter_than_inner_loop, i, last_basic_block_for_fn, mark_ssa_maybe_undefs(), move_computations_worker(), need_ssa_update_p(), loop::next, NULL, number_of_loops(), pre_and_rev_post_order_compute_fn(), rewrite_into_loop_closed_ssa(), todo, TODO_update_ssa, tree_ssa_lim_finalize(), and tree_ssa_lim_initialize().

Referenced by tree_loop_unroll_and_jam().
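A minimal source-level picture of invariant motion, assuming the invariant subexpression is costly enough to be worth hoisting (whether GCC actually moves a given expression depends on its cost model, see LIM_EXPENSIVE). The function names are hypothetical.

```cpp
// Before: n * scale + bias is recomputed on every iteration although none
// of its operands change inside the loop.
void lim_original(int *out, const int *in, int len, int n, int scale,
                  int bias) {
  for (int i = 0; i < len; ++i)
    out[i] = in[i] * (n * scale + bias);
}

// After invariant motion: the invariant value is computed once, in the
// loop preheader.
void lim_hoisted(int *out, const int *in, int len, int n, int scale,
                 int bias) {
  int inv = n * scale + bias;
  for (int i = 0; i < len; ++i)
    out[i] = in[i] * inv;
}
```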

◆ loop_suitable_for_sm()

Checks whether LOOP (with exits stored in the EXITS array) is suitable for a store motion optimization, i.e. whether we can insert statements on its exits.

References loop::exits, FOR_EACH_VEC_ELT, and i.

Referenced by store_motion_loop().

◆ make_pass_lim()

gimple_opt_pass * make_pass_lim(gcc::context *ctxt)

◆ mark_ref_loaded()

static

Marks reference REF as loaded in LOOP.

References current_loops, loop_outer(), and set_ref_loaded_in_loop().

Referenced by gather_mem_refs_stmt().

◆ mark_ref_stored()

static

Marks reference REF as stored in LOOP.

References current_loops, loop_outer(), and set_ref_stored_in_loop().

Referenced by gather_mem_refs_stmt().

◆ may_move_till()

Checks whether the statement defining variable *INDEX can be hoisted out of the loop passed in DATA. Callback for for_each_index.

References outermost_invariant_loop(), TREE_CODE, and TREE_OPERAND.

Referenced by can_sm_ref_p().

◆ mem_ref_alloc()

static

Allocates and returns a memory reference description for MEM whose hash value is HASH and id is ID.

References im_mem_ref::accesses_in_loop, ao_ref_init(), bitmap_initialize(), im_mem_ref::dep_loop, error_mark_node, im_mem_ref::hash, im_mem_ref::id, lim_bitmap_obstack, im_mem_ref::loaded, im_mem_ref::mem, mem_ref_obstack, NULL, im_mem_ref::ref_canonical, im_mem_ref::ref_decomposed, and im_mem_ref::stored.

Referenced by gather_mem_refs_stmt(), and tree_ssa_lim_initialize().

◆ mem_refs_may_alias_p()

static

Returns true if MEM1 and MEM2 may alias. TTAE_CACHE is used as a cache in tree_to_aff_combination_expand.

References aff_comb_cannot_overlap_p(), aff_combination_add(), aff_combination_expand(), aff_combination_scale(), error_mark_node, gcc_checking_assert, get_inner_reference_aff(), im_mem_ref::mem, ao_ref::ref, and refs_may_alias_p_1().

Referenced by refs_independent_p().
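Once both offsets are reduced to a constant difference, the no-overlap test amounts to an interval check. The sketch below is a simplified, hypothetical version of that final step (GCC performs it on affine combinations via aff_comb_cannot_overlap_p, after refs_may_alias_p_1 has been consulted). Here DIFF is offset(ref2) minus offset(ref1), and sizes are assumed to be in bytes.

```cpp
#include <cstdint>
#include <optional>

// Returns true if two accesses of SIZE1 and SIZE2 bytes, whose start
// offsets differ by DIFF, provably cannot overlap. A non-constant distance
// (nullopt) means overlap must be assumed.
bool cannot_overlap(std::optional<std::int64_t> diff, std::int64_t size1,
                    std::int64_t size2) {
  if (!diff)
    return false;
  if (*diff < 0)
    return *diff + size2 <= 0;  // second object ends before the first starts
  return size1 <= *diff;        // second object starts after the first ends
}
```

For two 4-byte ints a[0] and a[1] the difference is 4, so they cannot overlap; a difference of 2 would mean partially overlapping accesses.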

◆ memref_free()

static

Frees the mem_ref object MEM.

References im_mem_ref::accesses_in_loop.

Referenced by tree_ssa_lim_finalize().

◆ move_computations_worker()

unsigned int move_computations_worker(basic_block bb)

Hoist the statements in basic block BB out of the loops prescribed by data stored in LIM_DATA structures associated with each statement. Callback for walk_dominator_tree.

References ALWAYS_EXECUTED_IN, boolean_type_node, CDI_DOMINATORS, clear_lim_data(), lim_aux_data::cost, dump_file, dump_flags, extract_true_false_args_from_phi(), flow_loop_nested_p(), gcc_assert, get_immediate_dominator(), get_lim_data(), gimple_assign_lhs(), gimple_build_assign(), gimple_cond_code(), gimple_cond_lhs(), gimple_cond_rhs(), gimple_get_lhs(), gimple_has_lhs(), gimple_needing_rewrite_undefined(), gimple_phi_num_args(), gimple_phi_result(), gimple_vdef(), gimple_vuse(), gimple_vuse_op(), gsi_end_p(), gsi_insert_on_edge(), gsi_insert_seq_on_edge(), gsi_last_bb(), gsi_next(), gsi_remove(), gsi_start_bb(), gsi_start_phis(), gsi_stmt(), integer_zerop(), basic_block_def::loop_father, loop_outer(), loop_preheader_edge(), make_ssa_name(), NULL, NULL_TREE, loop::num, gphi_iterator::phi(), PHI_ARG_DEF, PHI_ARG_DEF_FROM_EDGE, print_gimple_stmt(), remove_phi_node(), reset_flow_sensitive_info(), rewrite_to_defined_unconditional(), SET_USE, TDF_DETAILS, lim_aux_data::tgt_loop, todo, TODO_cleanup_cfg, TREE_CODE, TREE_TYPE, types_compatible_p(), and virtual_operand_p().

Referenced by loop_invariant_motion_in_fun().

◆ movement_possibility()

References FOR_EACH_PHI_OR_STMT_USE, MOVE_POSSIBLE, MOVE_PRESERVE_EXECUTION, movement_possibility_1(), ssa_name_maybe_undef_p(), SSA_OP_USE, TREE_CODE, and USE_FROM_PTR.

Referenced by compute_invariantness().

◆ movement_possibility_1()

If it is possible to hoist the statement STMT unconditionally, returns MOVE_POSSIBLE. If it is possible to hoist STMT, but we must avoid executing it where it would not have been executed in the original program (e.g. because it may trap), returns MOVE_PRESERVE_EXECUTION. Otherwise returns MOVE_IMPOSSIBLE.

References cfun, DECL_P, dump_file, element_precision(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_assign_single_p(), gimple_call_lhs(), gimple_could_trap_p(), gimple_get_lhs(), gimple_has_side_effects(), gimple_has_volatile_ops(), gimple_in_transaction(), gimple_phi_num_args(), gimple_phi_result(), gimple_vdef(), is_gimple_assign(), is_gimple_call(), is_global_var(), wi::ltu_p(), MOVE_IMPOSSIBLE, MOVE_POSSIBLE, MOVE_PRESERVE_EXECUTION, NULL_TREE, print_generic_expr(), SSA_NAME_OCCURS_IN_ABNORMAL_PHI, stmt_could_throw_p(), stmt_ends_bb_p(), TDF_SLIM, wi::to_wide(), TREE_CODE, TREE_TYPE, and virtual_operand_p().

Referenced by movement_possibility().
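The three-way result can be illustrated with a toy classifier. The `ToyStmt` record and its two flags are hypothetical simplifications: the real movement_possibility_1() inspects GIMPLE statements through predicates such as gimple_has_side_effects() and gimple_could_trap_p(), and its full decision order is not reproduced here.

```cpp
#include <cassert>

// Same enumerators as the move_pos enumeration documented for this file.
enum move_pos { MOVE_IMPOSSIBLE, MOVE_PRESERVE_EXECUTION, MOVE_POSSIBLE };

// Hypothetical, simplified statement summary (not a GCC type).
struct ToyStmt {
  bool has_side_effects;  // e.g. a non-pure call or a volatile access
  bool could_trap;        // e.g. a division by a non-constant divisor
};

// Toy classification: side effects forbid hoisting; a possible trap
// allows hoisting only to places where the statement ran anyway.
move_pos toy_movement_possibility(const ToyStmt &s) {
  if (s.has_side_effects)
    return MOVE_IMPOSSIBLE;
  if (s.could_trap)
    return MOVE_PRESERVE_EXECUTION;
  return MOVE_POSSIBLE;
}
```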

◆ nonpure_call_p()

Returns true if STMT is a call that has side effects.

References gimple_has_side_effects().

Referenced by compute_invariantness(), and fill_always_executed_in().

◆ outermost_indep_loop()

[static]

Finds the outermost loop between OUTER and LOOP in which the memory reference REF is independent. If REF is not independent in LOOP, NULL is returned instead.

References bitmap_bit_p, lim_raw, loop_depth(), NULL, loop::num, ref_indep_loop_p(), im_mem_ref::stored, and superloop_at_depth().

Referenced by determine_max_movement().
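A minimal sketch of the search, assuming the loops from OUTER down to LOOP are given as a chain of hypothetical loop ids, and that two caller-supplied predicates stand in for the im_mem_ref::stored bitmap and for ref_indep_loop_p() with lim_raw. Scanning from the outside in means the first loop that passes is the outermost one.

```cpp
#include <cassert>
#include <functional>
#include <optional>
#include <vector>

// `chain` lists loop ids from OUTER (front) down to LOOP (back).
// `stored_in(l)`: is the reference stored in loop l?
// `is_indep(l)`:  is the reference independent in loop l?
std::optional<int> toy_outermost_indep_loop(
    const std::vector<int> &chain,
    const std::function<bool(int)> &stored_in,
    const std::function<bool(int)> &is_indep) {
  assert(!chain.empty());
  // Assumed: a reference stored in LOOP is treated as not independent there.
  if (stored_in(chain.back()))
    return std::nullopt;
  // Walk outside-in; the first loop that qualifies is the outermost one.
  for (int l : chain)
    if (!stored_in(l) && is_indep(l))
      return l;
  return std::nullopt;
}
```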

◆ outermost_invariant_loop()

Suppose that operand DEF is used inside LOOP. Returns the outermost loop to which we could move the expression using DEF if it did not have other operands, i.e. the outermost loop enclosing LOOP in which the value of DEF is invariant.

References find_common_loop(), gcc_assert, get_lim_data(), gimple_bb(), is_gimple_min_invariant(), loop_depth(), basic_block_def::loop_father, loop_outer(), lim_aux_data::max_loop, NULL, SSA_NAME_DEF_STMT, superloop_at_depth(), and TREE_CODE.

Referenced by add_dependency(), compute_invariantness(), may_move_till(), and rewrite_bittest().
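The sketch below models the loop tree with parent links and derives the answer from the innermost loop shared by the definition and the use, in the spirit of the find_common_loop() and superloop_at_depth() calls in the references list. `ToyLoopTree` and its helpers are hypothetical, and the sketch ignores the case where DEF's own statement is being hoisted (the real function also consults lim_aux_data::max_loop).

```cpp
#include <cassert>
#include <vector>

// Hypothetical loop tree: index 0 is the root (whole function, depth 0);
// parent[i] is the loop immediately enclosing loop i.
struct ToyLoopTree {
  std::vector<int> parent;

  int depth(int l) const {
    int d = 0;
    for (; l != 0; l = parent[l])
      ++d;
    return d;
  }
  // Innermost loop containing both a and b (cf. find_common_loop()).
  int common(int a, int b) const {
    while (depth(a) > depth(b)) a = parent[a];
    while (depth(b) > depth(a)) b = parent[b];
    while (a != b) { a = parent[a]; b = parent[b]; }
    return a;
  }
  // Ancestor of l at depth d (cf. superloop_at_depth()).
  int superloop_at_depth(int l, int d) const {
    while (depth(l) > d) l = parent[l];
    return l;
  }
};

// Outermost loop enclosing `loop` in which a value defined in `def_loop`
// is invariant, or -1 if the value varies within `loop` itself.
int toy_outermost_invariant_loop(const ToyLoopTree &t, int def_loop, int loop) {
  int c = t.common(loop, def_loop);
  if (c == loop)
    return -1;
  return t.superloop_at_depth(loop, t.depth(c) + 1);
}
```

For example, with loops nested as root, 1, 2, 3, a value defined in loop 1 and used in loop 3 is invariant in loop 2 but not in loop 1, so the result is loop 2.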

◆ query_loop_dependence()

[static]

Query the loop dependence cache of REF for LOOP, KIND.

References bitmap_bit_p, dep_dependent, dep_independent, im_mem_ref::dep_loop, dep_unknown, and loop::num.

Referenced by ref_indep_loop_p().

◆ record_loop_dependence()

[static]

Populate the loop dependence cache of REF for LOOP, KIND with STATE.

References bitmap_set_bit, dep_dependent, im_mem_ref::dep_loop, dep_unknown, gcc_assert, and loop::num.

Referenced by ref_indep_loop_p().
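One way to picture the cache is two bits per (loop, kind) pair in the dep_loop bitmap: one meaning independent, one meaning dependent, with neither set meaning unknown. The bit layout below (six bits per loop number, two per kind) is an assumption for illustration, and the hypothetical `ToyDepCache` uses a `std::set` in place of a GCC bitmap.

```cpp
#include <cassert>
#include <set>

enum dep_kind { lim_raw, sm_war, sm_waw };
enum dep_state { dep_unknown, dep_independent, dep_dependent };

// Stand-in for im_mem_ref::dep_loop: a set of bit indices.
struct ToyDepCache {
  std::set<unsigned> bits;

  // Assumed layout: 3 kinds x 2 bits per loop number.
  static unsigned base(unsigned loop_num, dep_kind kind) {
    return 6 * loop_num + 2 * static_cast<unsigned>(kind);
  }

  void record(unsigned loop_num, dep_kind kind, dep_state state) {
    assert(state != dep_unknown);  // only definite answers are cached
    bits.insert(base(loop_num, kind) + (state == dep_dependent ? 1 : 0));
  }

  dep_state query(unsigned loop_num, dep_kind kind) const {
    unsigned b = base(loop_num, kind);
    if (bits.count(b))
      return dep_independent;
    if (bits.count(b + 1))
      return dep_dependent;
    return dep_unknown;
  }
};
```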

◆ record_mem_ref_loc()

[static]

Adds the memory reference location *LOC in LOOP to the memory reference description REF. The reference occurs in statement STMT.

References im_mem_ref::accesses_in_loop, mem_ref_loc::ref, and mem_ref_loc::stmt.

Referenced by gather_mem_refs_stmt().

◆ ref_always_accessed_p()

[static]

Returns true if REF is always accessed in LOOP. If STORED_P is true, makes sure REF is always stored to in LOOP.

References for_all_locs_in_loop(), and lim_aux_data::ref.

Referenced by can_sm_ref_p(), and execute_sm().

◆ ref_indep_loop_p()

[static]

Returns true if REF is independent of all other accesses in LOOP.

KIND specifies the kind of dependence to consider:

- lim_raw assumes REF is not stored in LOOP and disambiguates RAW dependences, so if true, REF can be hoisted out of LOOP.
- sm_war disambiguates a store REF against all other loads to see whether the store can be sunk across loads out of LOOP.
- sm_waw disambiguates a store REF against all other stores to see whether the store can be sunk across stores out of LOOP.

References im_mem_ref::accesses_in_loop, bitmap_bit_p, dep_dependent, dep_independent, dep_unknown, dump_file, dump_flags, error_mark_node, EXECUTE_IF_SET_IN_BITMAP, i, im_mem_ref::id, loop::inner, is_self_write(), lim_raw, im_mem_ref::loaded, im_mem_ref::mem, memory_accesses, loop::next, loop::num, query_loop_dependence(), record_loop_dependence(), ao_ref::ref, lim_aux_data::ref, ref_indep_loop_p(), ref_maybe_used_by_stmt_p(), refs_independent_p(), sm_war, sm_waw, stmt_may_clobber_ref_p_1(), im_mem_ref::stored, TDF_DETAILS, true, and UNANALYZABLE_MEM_ID.

Referenced by can_sm_ref_p(), hoist_memory_references(), outermost_indep_loop(), and ref_indep_loop_p().
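The three kinds differ mainly in which other accesses REF is checked against. The sketch below assumes a flat list of accesses and a caller-supplied `may_alias` oracle in place of refs_independent_p(); `ToyAccess` and `toy_ref_indep` are hypothetical, and the caching, subloop recursion and unanalyzable-reference handling of the real function are omitted.

```cpp
#include <cassert>
#include <functional>
#include <vector>

enum dep_kind { lim_raw, sm_war, sm_waw };

// Hypothetical summary of one memory access inside the loop.
struct ToyAccess {
  int ref_id;     // which memory reference is accessed
  bool is_store;  // store (true) or load (false)
};

// lim_raw and sm_waw check REF against the other stores in the loop;
// sm_war checks a store REF against the other loads.
bool toy_ref_indep(int ref, dep_kind kind,
                   const std::vector<ToyAccess> &accesses,
                   const std::function<bool(int, int)> &may_alias) {
  for (const ToyAccess &a : accesses) {
    if (a.ref_id == ref)
      continue;  // only "other" accesses are considered
    bool relevant = (kind == sm_war) ? !a.is_store : a.is_store;
    if (relevant && may_alias(ref, a.ref_id))
      return false;  // possible dependence
  }
  return true;
}
```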

◆ refs_independent_p()

[static]

Returns true if REF1 and REF2 are independent.

References dump_file, dump_flags, im_mem_ref::id, mem_refs_may_alias_p(), memory_accesses, and TDF_DETAILS.

Referenced by ref_indep_loop_p(), and sm_seq_push_down().

◆ rewrite_bittest()

[static]

Checks whether the pattern at *BSI is a bittest of the form ((A >> B) & 1) != 0 and, if so, rewrites it to (A & (1 << B)) != 0.

References a, as_a(), b, build_int_cst(), build_int_cst_type(), CONVERT_EXPR_CODE_P, dyn_cast(), fold_build2, gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_build_assign(), gimple_cond_code(), gimple_cond_lhs(), gimple_cond_rhs(), gimple_cond_set_rhs(), gsi_insert_before(), GSI_NEW_STMT, gsi_remove(), GSI_SAME_STMT, gsi_stmt(), has_single_use(), integer_zerop(), loop_containing_stmt(), make_temp_ssa_name(), NULL, outermost_invariant_loop(), release_defs(), SET_USE, single_imm_use(), SSA_NAME_DEF_STMT, TREE_CODE, and TREE_TYPE.

Referenced by compute_invariantness().
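Both forms test bit B of A, provided B is smaller than the width of A. The rewritten form isolates `1 << B`, which depends only on B; the reference to outermost_invariant_loop() suggests the rewrite is tied to exposing an invariant subexpression, though the exact conditions are not stated here. A small check of the equivalence for 32-bit unsigned values (function names are hypothetical):

```cpp
#include <cassert>
#include <cstdint>

// Original pattern: shift A right by B, then test the low bit.
// Requires b < 32.
bool bittest_shift(std::uint32_t a, unsigned b) {
  return ((a >> b) & 1u) != 0;
}

// Rewritten pattern: mask bit B of A directly; the mask depends only on B.
// Requires b < 32.
bool bittest_mask(std::uint32_t a, unsigned b) {
  return (a & (std::uint32_t{1} << b)) != 0;
}
```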

◆ rewrite_mem_refs()

[static]

Rewrites all references to REF in LOOP with the variable TMP_VAR.

References for_all_locs_in_loop().

Referenced by execute_sm().

◆ rewrite_reciprocal()

[static]

Rewrite a/b to a*(1/b). Return the invariant stmt to process.

References as_a(), build_one_cst(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_build_assign(), gsi_insert_before(), GSI_NEW_STMT, gsi_replace(), gsi_stmt(), make_temp_ssa_name(), NULL, and TREE_TYPE.

Referenced by compute_invariantness().
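A source-level picture of what the rewrite enables, assuming b is invariant in the loop: the reciprocal is computed once outside the loop and the division inside becomes a multiplication. Because `1/b` is rounded before the multiply, the result can differ from true division, so a compiler would normally only do this under relaxed floating-point rules; which options gate it in this pass is not covered here. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Before: one division per iteration.
void scale_div(std::vector<double> &v, double b) {
  for (std::size_t i = 0; i < v.size(); ++i)
    v[i] = v[i] / b;
}

// After rewriting a/b as a*(1/b): the reciprocal is loop-invariant and is
// computed once before the loop. Results may differ from scale_div in the
// last bits for a general b.
void scale_mul(std::vector<double> &v, double b) {
  const double inv = 1.0 / b;
  for (std::size_t i = 0; i < v.size(); ++i)
    v[i] = v[i] * inv;
}
```

With b = 4.0 the reciprocal 0.25 is exact, so both versions agree bit for bit; for a divisor such as 3.0 they may not.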

◆ set_level()

Suppose that some statement in ORIG_LOOP is hoisted to the loop LEVEL, and that one of the operands of this statement is computed by STMT. Ensure that STMT (together with all the statements that define its operands) is hoisted at least out of the loop LEVEL.

References lim_aux_data::depends, find_common_loop(), flow_loop_nested_p(), FOR_EACH_VEC_ELT, gcc_assert, get_lim_data(), gimple_bb(), i, basic_block_def::loop_father, loop_outer(), lim_aux_data::max_loop, NULL, set_level(), and lim_aux_data::tgt_loop.

Referenced by compute_invariantness(), force_move_till_op(), set_level(), and set_profitable_level().
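A simplified model of the propagation: each candidate carries the loop depth it will end up at (0 meaning outside all loops) and the list of statements defining its operands. This collapses GCC's target loops (lim_aux_data::tgt_loop) to depths, which is only faithful for a single chain of nested loops, and drops the ORIG_LOOP and max_loop handling; `HoistCand` and `toy_set_level` are hypothetical names.

```cpp
#include <cassert>
#include <vector>

// Hypothetical statement record.
struct HoistCand {
  int depth;                 // loop depth the statement will end up at
  std::vector<int> depends;  // statements defining its operands
};

// Make sure statement `s` and, recursively, everything it depends on end
// up at depth <= `level`.
void toy_set_level(std::vector<HoistCand> &stmts, int s, int level) {
  if (stmts[s].depth <= level)
    return;  // already hoisted far enough (and so are its operands)
  stmts[s].depth = level;
  for (int d : stmts[s].depends)
    toy_set_level(stmts, d, level);
}
```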

◆ set_profitable_level()

[static]

Determines the outermost loop from which we want to hoist the statement STMT. For now we choose the outermost possible loop. TODO: use profiling information to set it more sanely.

References get_lim_data(), gimple_bb(), lim_aux_data::max_loop, and set_level().

Referenced by compute_invariantness().

◆ set_ref_loaded_in_loop()

[static]

Sets the LOOP bit in the loaded bitmap of REF, allocating the bitmap if necessary. Returns whether a bit was changed.

References BITMAP_ALLOC, bitmap_set_bit, lim_bitmap_obstack, im_mem_ref::loaded, and loop::num.

Referenced by mark_ref_loaded().

◆ set_ref_stored_in_loop()

[static]

Sets the LOOP bit in the stored bitmap of REF, allocating the bitmap if necessary. Returns whether a bit was changed.

References BITMAP_ALLOC, bitmap_set_bit, lim_bitmap_obstack, loop::num, and im_mem_ref::stored.

Referenced by mark_ref_stored().
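Both setters follow the same lazily allocated pattern. A sketch with a hypothetical `ToyRef` type, using a `std::set` in place of a bitmap allocated on lim_bitmap_obstack; the stored bitmap would be handled identically:

```cpp
#include <cassert>
#include <memory>
#include <set>

struct ToyRef {
  // Stays null until the first loop number is recorded.
  std::unique_ptr<std::set<unsigned>> loaded;

  // Sets the bit for `loop_num`, allocating the set on demand; returns
  // true if the bit was not already set.
  bool set_loaded_in_loop(unsigned loop_num) {
    if (!loaded)
      loaded = std::make_unique<std::set<unsigned>>();
    return loaded->insert(loop_num).second;
  }
};
```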

◆ simple_mem_ref_in_stmt()

If there is a simple load or store to a memory reference in STMT, returns the location of the memory reference, and sets IS_STORE according to whether it is a store or load. Otherwise, returns NULL.

References gimple_assign_lhs_ptr(), gimple_assign_rhs1_ptr(), gimple_assign_single_p(), gimple_vdef(), gimple_vuse(), is_gimple_min_invariant(), NULL, and TREE_CODE.

Referenced by gather_mem_refs_stmt().

◆ sm_seq_push_down()

Push the SM candidate at index PTR in the sequence SEQ down until we hit the next SM candidate. Return true if that went OK and false if we could not disambiguate against another unrelated ref. Update *AT to the index where the candidate now resides.

References error_mark_node, seq_entry::first, seq_entry::from, memory_accesses, NULL_TREE, refs_independent_p(), seq_entry::second, sm_ord, and sm_other.

Referenced by hoist_memory_references(), and sm_seq_valid_bb().
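A simplified sketch of the push-down, assuming "down" means towards index 0 of the sequence and reducing each entry to a reference id plus a flag saying whether it is an SM candidate. The `independent` predicate stands in for refs_independent_p(); the real function also refuses to move across unanalyzable references. `SeqEntry` here is a hypothetical simplification of the file's seq_entry.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

struct SeqEntry {
  int ref;               // memory reference id
  bool is_sm_candidate;  // part of the already-built SM sequence?
};

// Move seq[ptr] towards index 0 past non-candidate entries, as long as
// the references can be disambiguated. Stops at the next SM candidate.
// Returns false on a possible conflict; *at gets the final index.
bool toy_push_down(std::vector<SeqEntry> &seq, unsigned ptr, unsigned *at,
                   const std::function<bool(int, int)> &independent) {
  *at = ptr;
  for (; ptr > 0; --ptr) {
    SeqEntry &cand = seq[ptr];
    SeqEntry &against = seq[ptr - 1];
    if (against.is_sm_candidate)
      break;  // reached the next SM candidate
    if (cand.ref == against.ref || !independent(cand.ref, against.ref))
      return false;  // cannot reorder across a possibly aliasing access
    std::swap(cand, against);
    *at = ptr - 1;
  }
  return true;
}
```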

◆ sm_seq_valid_bb()

[static]

Computes the sequence of stores from candidates in REFS_NOT_IN_SEQ to SEQ, walking backwards from VDEF (or from the end of BB if VDEF is NULL).

References im_mem_ref::accesses_in_loop, bitmap_and_compl(), bitmap_bit_p, bitmap_clear_bit(), bitmap_copy(), bitmap_empty_p(), bitmap_ior_into(), bitmap_set_bit, CLOBBER_OBJECT_END, CLOBBER_STORAGE_END, dyn_cast(), error_mark_node, basic_block_def::flags, get_lim_data(), get_virtual_phi(), gimple_assign_rhs1(), gimple_bb(), gimple_clobber_p(), gimple_phi_arg_def(), gimple_phi_arg_edge(), gimple_phi_num_args(), gimple_phi_result(), gimple_vdef(), gimple_vuse(), gsi_end_p(), gsi_last_bb(), gsi_prev(), gsi_stmt(), loop::header, i, lim_bitmap_obstack, basic_block_def::loop_father, im_mem_ref::mem, memory_accesses, MIN, NULL_TREE, operand_equal_p(), ao_ref::ref, single_pred(), single_pred_p(), sm_ord, sm_other, sm_seq_push_down(), sm_seq_valid_bb(), SSA_NAME_DEF_STMT, SSA_NAME_VERSION, TREE_THIS_VOLATILE, and UNANALYZABLE_MEM_ID.

Referenced by hoist_memory_references(), and sm_seq_valid_bb().

◆ sort_bbs_in_loop_postorder_cmp()

[static]

qsort comparison function that sorts basic blocks by the postorder position of their loop fathers.

References bb_loop_postorder, basic_block_def::index, basic_block_def::loop_father, and loop::num.

Referenced by analyze_memory_references().
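A standalone comparator in the same shape, assuming a hypothetical `postorder` table that maps a loop number to its position in a postorder walk of the loop tree (the role bb_loop_postorder plays). Breaking ties by block index is suggested by the reference to basic_block_def::index but should be treated as an assumption.

```cpp
#include <cassert>
#include <cstdlib>
#include <vector>

// Hypothetical block record: its index and the number of its loop father.
struct ToyBB {
  int index;
  int loop_num;
};

// postorder[n]: position of loop n in a postorder walk of the loop tree.
std::vector<unsigned> postorder;

// qsort comparator: order by the loop father's postorder position,
// breaking ties within one loop by block index.
int toy_sort_bbs_cmp(const void *p1, const void *p2) {
  const ToyBB *b1 = static_cast<const ToyBB *>(p1);
  const ToyBB *b2 = static_cast<const ToyBB *>(p2);
  if (b1->loop_num == b2->loop_num)
    return (b1->index > b2->index) - (b1->index < b2->index);
  return postorder[b1->loop_num] < postorder[b2->loop_num] ? -1 : 1;
}
```

If the root contains loop 1, which contains loop 2, a postorder walk visits loop 2 first, so blocks of loop 2 sort before those of loop 1 and the root.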

◆ sort_locs_in_loop_postorder_cmp()

[static]

qsort comparison function that sorts memory reference locations by the postorder position of their loop fathers.

References bb_loop_postorder, gimple_bb(), basic_block_def::loop_father, loop::num, and mem_ref_loc::stmt.

Referenced by analyze_memory_references().

◆ stmt_cost()

[static]

Returns an estimate of the cost of statement STMT. The values are just ad-hoc constants, similar to the costs used for inlining.

References CONSTRUCTOR_NELTS, fndecl_built_in_p(), gimple_assign_rhs1(), gimple_assign_rhs_code(), gimple_call_fndecl(), gimple_references_memory_p(), is_gimple_call(), LIM_EXPENSIVE, tcc_comparison, and TREE_CODE_CLASS.

Referenced by determine_max_movement().

◆ store_motion_loop()

Try to perform store motion for all memory references modified inside LOOP. SM_EXECUTED is the bitmap of the memory references for which store motion was executed in one of the outer loops.

References BITMAP_ALLOC, bitmap_and_compl_into(), bitmap_empty_p(), BITMAP_FREE, bitmap_ior_into(), loop::exits, find_refs_for_sm(), get_loop_exit_edges(), hoist_memory_references(), loop::inner, lim_bitmap_obstack, loop_suitable_for_sm(), loop::next, NULL, and store_motion_loop().

Referenced by do_store_motion(), and store_motion_loop().
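The bookkeeping for SM_EXECUTED can be sketched as a recursion over the loop tree: references moved in a loop are added to the set while its subloops are processed and removed afterwards, so sibling loops are unaffected. `ToyLoop` and `toy_store_motion` are hypothetical; candidate selection (find_refs_for_sm(), loop_suitable_for_sm()) is reduced to a precomputed set, and recording into `moved` stands in for hoist_memory_references().

```cpp
#include <cassert>
#include <map>
#include <set>
#include <vector>

// Hypothetical loop node: `candidates` are the references that would be
// chosen for store motion here if no enclosing loop handled them.
struct ToyLoop {
  int num;
  std::set<int> candidates;
  std::vector<ToyLoop> inner;
};

void toy_store_motion(const ToyLoop &loop, std::set<int> &sm_executed,
                      std::map<int, std::set<int>> &moved) {
  // Skip references already moved in an enclosing loop.
  std::set<int> sm_in_loop;
  for (int r : loop.candidates)
    if (!sm_executed.count(r))
      sm_in_loop.insert(r);
  if (!sm_in_loop.empty())
    moved[loop.num] = sm_in_loop;  // stands in for hoist_memory_references()

  // Visible to subloops only, then removed again for siblings.
  sm_executed.insert(sm_in_loop.begin(), sm_in_loop.end());
  for (const ToyLoop &sub : loop.inner)
    toy_store_motion(sub, sm_executed, moved);
  for (int r : sm_in_loop)
    sm_executed.erase(r);
}
```

In a nest where loop 1 stores references 7 and 8 and its subloop 2 stores 7 and 9, reference 7 is moved out of loop 1, so loop 2 only picks up reference 9.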

◆ tree_ssa_lim_finalize()

[static]

Cleans up after the invariant motion pass.

References bb_loop_postorder, bitmap_obstack_release(), cfun, coldest_outermost_loop, FOR_EACH_BB_FN, FOR_EACH_VEC_ELT, free(), free_affine_expand_cache(), hotter_than_inner_loop, i, lim_aux_data_map, lim_bitmap_obstack, mem_ref_obstack, memory_accesses, memref_free(), NULL, and SET_ALWAYS_EXECUTED_IN.

Referenced by loop_invariant_motion_in_fun().

◆ tree_ssa_lim_initialize()

[static]

Compute the global information needed by the loop invariant motion pass.

References bb_loop_postorder, bitmap_initialize(), bitmap_obstack_initialize(), cfun, compute_transaction_bits(), gcc_obstack_init, i, LI_FROM_INNERMOST, lim_aux_data_map, lim_bitmap_obstack, mem_ref_alloc(), mem_ref_obstack, memory_accesses, NULL, loop::num, number_of_loops(), and UNANALYZABLE_MEM_ID.

Referenced by loop_invariant_motion_in_fun().

Variable Documentation

◆ bb_loop_postorder

[static]

◆ coldest_outermost_loop

Coldest outermost loop for a given loop.

Referenced by fill_coldest_and_hotter_out_loop(), get_coldest_out_loop(), loop_invariant_motion_in_fun(), and tree_ssa_lim_finalize().

◆ hotter_than_inner_loop

Hotter outer loop nearest to a given loop.

Referenced by fill_coldest_and_hotter_out_loop(), get_coldest_out_loop(), loop_invariant_motion_in_fun(), and tree_ssa_lim_finalize().

◆ in_loop_pipeline

[static]

Referenced by compute_invariantness(), and perform_tree_ssa_dce().

◆ lim_aux_data_map

[static]

Maps statements to their lim_aux_data.

Referenced by clear_lim_data(), get_lim_data(), init_lim_data(), tree_ssa_lim_finalize(), and tree_ssa_lim_initialize().

◆ lim_bitmap_obstack

[static]

Obstack for the bitmaps in the above data structures.

Referenced by do_store_motion(), hoist_memory_references(), mem_ref_alloc(), set_ref_loaded_in_loop(), set_ref_stored_in_loop(), sm_seq_valid_bb(), store_motion_loop(), tree_ssa_lim_finalize(), and tree_ssa_lim_initialize().

◆ mem_ref_obstack

[static]

Referenced by mem_ref_alloc(), tree_ssa_lim_finalize(), and tree_ssa_lim_initialize().

◆ memory_accesses

struct { ... } memory_accesses

Description of memory accesses in loops.

Referenced by analyze_memory_references(), determine_max_movement(), execute_sm_exit(), find_refs_for_sm(), gather_mem_refs_stmt(), hoist_memory_references(), ref_indep_loop_p(), refs_independent_p(), sm_seq_push_down(), sm_seq_valid_bb(), tree_ssa_lim_finalize(), and tree_ssa_lim_initialize().