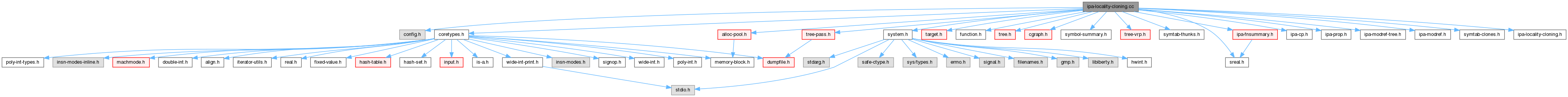

#include "config.h"#include "system.h"#include "coretypes.h"#include "target.h"#include "function.h"#include "tree.h"#include "alloc-pool.h"#include "tree-pass.h"#include "cgraph.h"#include "symbol-summary.h"#include "tree-vrp.h"#include "symtab-thunks.h"#include "sreal.h"#include "ipa-cp.h"#include "ipa-prop.h"#include "ipa-fnsummary.h"#include "ipa-modref-tree.h"#include "ipa-modref.h"#include "symtab-clones.h"#include "demangle.h"#include "ipa-locality-cloning.h"

Data Structures | |

| struct | locality_order |

| struct | templ_info |

| struct | locality_callee_info |

| struct | locality_info |

| struct | profile_stats |

Macros | |

| #define | INCLUDE_ALGORITHM |

Typedefs | |

| using | loc_map_hash = int_hash<int, 0, -1> |

| typedef hash_map< int_hash< hashval_t, 0 >, templ_info > | hash_freq_map_t |

Variables | |

| vec< locality_partition > | locality_partitions |

| static std::unique_ptr< hash_map< loc_map_hash, cgraph_node * > > | node_to_clone |

| static std::unique_ptr< hash_map< loc_map_hash, cgraph_node * > > | clone_to_node |

| static std::unique_ptr< hash_freq_map_t > | templ_hash_map |

| static object_allocator< locality_info > | loc_infos ("IPA locality callchain") |

| static std::unique_ptr< hash_map< loc_map_hash, locality_info * > > | node_to_ch_info |

Macro Definition Documentation

◆ INCLUDE_ALGORITHM

| #define INCLUDE_ALGORITHM |

Code locality based function cloning. Copyright The GNU Toolchain Authors This file is part of GCC. GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version. GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

This file implements cloning required to improve partitioning of the

callgraph for locality considerations.

Partitioning for improving code locality.

This pass aims to place frequently executed callchains closer together in

memory to improve performance through improved locality. If any frequent

callchains cannot be placed together because they are already placed

elsewhere, local function clones are created and all callers near to the

clones are redirected to use this copy.

Locality code placement is done in 2 parts.

1. IPA pass to be executed after ipa-inline and before ipa-pure-const.

Execute stage prepares the plan to place all nodes into partitions.

2. WPA Partition stage actually implements the plan.

Brief overview of the IPA pass:

1. Create and sort callchains. If PGO is available, use real profile

counts. Otherwise, use a set of heuristics to sort the callchains.

2. Create a partition plan for the callchains, processing them in the sorted

order.

1. If a function is unpartitioned, place it in the current partition.

2. If a function is already placed in a partition away from current

partition as part of another callchain:

Create a local clone in current partition, if cloning criteria is

satisfied.

3. Redirect any new caller to a local clone if one exists.

Another heuristics can be used in the absense of profile information. This

approach uses C++ template instantiation types to group functions together.

Entry functions are sorted in the beginning by most used template types, and

callees are sorted as part of partition_callchain (), again by template

types.

For bothe schemes, partition size is param controlled to fine tune per

program behavior.

Typedef Documentation

◆ hash_freq_map_t

| typedef hash_map<int_hash<hashval_t, 0>, templ_info> hash_freq_map_t |

Template instantiation hash_map.

◆ loc_map_hash

| using loc_map_hash = int_hash<int, 0, -1> |

Function Documentation

◆ accumulate_profile_counts_after_cloning()

|

static |

Helper function of to accumulate call counts.

Referenced by adjust_profile_info().

◆ add_node_to_partition()

|

static |

Add symbol NODE to partition PART.

References add_node_to_partition(), dyn_cast(), FOR_EACH_ALIAS, gcc_checking_assert, locality_partition_def::insns, ipa_size_summaries, node_partitioned_p(), locality_partition_def::nodes, locality_partition_def::part_id, ipa_ref::referring, SYMBOL_DUPLICATE, SYMBOL_PARTITION, and symtab_node::transparent_alias.

Referenced by add_node_to_partition(), locality_partition_and_clone(), and partition_callchain().

◆ adjust_profile_info()

|

static |

Adjust profile counts of NEW_NODE and ORIG_NODE, where NEW_NODE is a clone of OLD_NODE. Assumes that all eligible edges from current partition so far are redirected to NEW_NODE and recursive edges are adjusted.

References accumulate_profile_counts_after_cloning(), profile_count::adjust_for_ipa_scaling(), profile_count::apply_scale(), cgraph_node::call_for_symbol_thunks_and_aliases(), cgraph_node::callees, profile_count::combine_with_ipa_count(), cgraph_node::count, profile_count::dump(), symtab_node::dump_asm_name(), dump_file, cgraph_node::indirect_calls, init_profile_stats(), profile_count::ipa(), cgraph_node::local, cgraph_edge::next_callee, profile_stats::nonrec_count, profile_count::nonzero_p(), profile_stats::rec_count, and profile_count::zero().

Referenced by clone_node_as_needed().

◆ adjust_profile_info_for_non_self_rec_edges()

|

static |

NEW_NODE is a previously created clone of ORIG_NODE already present in current partition. EDGES contains newly redirected edges to NEW_NODE. Adjust profile information for both nodes and the edge.

References profile_count::adjust_for_ipa_scaling(), cgraph_node::callees, profile_count::combine_with_ipa_count(), cgraph_node::count, count, profile_count::dump(), symtab_node::dump_asm_name(), dump_file, i, cgraph_node::indirect_calls, profile_count::ipa(), cgraph_edge::next_callee, and profile_count::zero().

Referenced by clone_node_as_needed().

◆ adjust_recursive_callees()

|

static |

Redirect recursive edges of CLONE to correctly point to CLONE. As part of cloning process, all callee edges of a node are just duplicated but not redirected. Therefore, these edges still call to original of CLONE. For non-inlined CLONEs, NEW_CALLEE == CLONE and ORIG_CALLEE is CLONE's original node. For inlined node, self recursion to CLONE's original same as non-inlined, additionally, calls to CLONE->inlined_to are also recursive: NEW_CALLEE == CLONE->inlined_into and ORIG_CALLEE == original node of CLONE->inlined_into.

References symtab_node::alias, cgraph_node::callees, symtab_node::dump_asm_name(), dump_file, dyn_cast(), cgraph_node::expand_all_artificial_thunks(), gcc_assert, symtab_node::get_uid(), node_to_clone, symtab_node::noninterposable_alias(), and NULL.

Referenced by clone_node_as_needed(), and inline_clones().

◆ callee_default_cmp()

|

static |

Helper function to qsort all_callees in locality_info by edge hotness.

References a, b, and compare_node_uids().

Referenced by callee_templ_cmp(), and sort_all_callees_default().

◆ callee_templ_cmp()

|

static |

Sort all_callees of NODE by template hashes and edge hotness. all_callees is already sorted by call frequency. If templ_hash of the caller is same as a callee's templ_hash then that callee is ordered first. Callees having same templ_hash are sorted by call frequency, callees with different templ_hash are sorted by the template order. if (Both PA and PB have templates && PA's template == PB'template) || (Both A and B don't have templates): sort by frequency if (PA doesn't have template && PB has) || (Both have different templates && caller's template == PB's template): PB before PA if (PA has template && PB doesn't) || (Both have different templates && caller's template == PA's template): PA before PB if (Both have different templates && both are different than caller's template: sort by template order.

References a, b, callee_default_cmp(), gcc_assert, get_locality_info(), NULL, templ_info::order, locality_info::templ_hash, and templ_hash_map.

Referenced by partition_callchain().

◆ clone_node_as_needed()

|

static |

Clone EDGE->CALLEE if it or a clone of it is not already in PARTITION. Redirect all callers of EDGE->CALLEE that are in PARTITION, not just the EDGE. If a clone is already present in PARTITION, redirect all edges from EDGE->CALLER to EDGE->CALLEE. This is because we only visit one edge per caller to callee and redirect for all others from there. If cloning, also recursively clone inlined functions till the end of the callchain because inlined clones have 1-1 exclusive copy and edge from caller to inlined node. There are 2 flows possible: 1. Only redirect 1.1. cnode is already in current partition - cnode mustn't be a locality_clone -> nothing to do 1.2. A clone of cnode is in current partition - find out if it's the correct clone for edge - must be a locality_clone but the exact same kind as callee i.e. orig or cp/sra clone, if yes, redirect, else go to #2 1.3. Cnode/a clone of cnode is in current partition but caller is inlined 2. Clone and redirect 2.1. cnode is original node 2.2. cnode itself is a clone Clone inlines Flavors of edges: 1. Normal -> orig nodes, locality clones or cp/sra clones 2. Recursive -> direct recursion 3. Alias -> recursion via aliasing or as a result of IPA code duplication 4. Inline -> shouldn't be included in callchain.

References adjust_profile_info(), adjust_profile_info_for_non_self_rec_edges(), adjust_recursive_callees(), cgraph_node::callees, clone_to_node, create_locality_clone(), symtab_node::dump_asm_name(), dump_file, edge_redirectable_p(), gcc_assert, symtab_node::get_uid(), inline_clones(), cgraph_edge::next_callee, node_in_partition_p(), node_to_clone, NULL, and symtab.

Referenced by partition_callchain().

◆ clone_node_p()

|

static |

Return true if edge->callee->ultimate_alias_target can be cloned.

References locality_info::caller_freq, gcc_assert, get_locality_info(), ipa_size_summaries, and suitable_for_locality_cloning_p().

Referenced by partition_callchain().

◆ compare_edge_profile_counts()

|

static |

Helper function for qsort; sort nodes by profile count.

References a, b, profile_count::compatible_p(), and static_profile_cmp().

Referenced by locality_determine_ipa_order().

◆ compare_node_uids()

|

static |

Forward declaration.

Helper function for qsort; to break ties.

References gcc_assert, and symtab_node::get_uid().

Referenced by callee_default_cmp(), and static_profile_cmp().

◆ create_locality_clone()

|

static |

Create a locality clone of CNODE and redirect all callers present in PARTITION. Create a clone dpending on whether CNODE itself is a clone or not.

References cgraph_node::callees, cgraph_node::callers, clone_function_name(), copy_node(), cgraph_node::count, cgraph_node::create_clone(), symtab_node::decl, DECL_ASSEMBLER_NAME, DECL_NAME, symtab_node::definition, symtab_node::dump_asm_name(), dump_file, edge_redirectable_p(), IDENTIFIER_POINTER, cgraph_node::ipa_transforms_to_apply, symtab_node::name(), node_in_partition_p(), NULL, SET_DECL_ASSEMBLER_NAME, set_new_clone_decl_and_node_flags(), and vNULL.

Referenced by clone_node_as_needed().

◆ create_locality_info()

|

inlinestatic |

Initialize locality_info for node V. If CLONE_P is true, V is a locality clone; populate callee information for locality clones because caller info is needed for cloning decisions and clones are not cloned again. Populate both caller and callee info for non-clone nodes.

References gcc_assert, symtab_node::get_uid(), loc_infos, locality_info::node, node_to_ch_info, populate_callee_locality_info(), populate_caller_locality_info(), populate_templ_info(), and sort_all_callees_default().

Referenced by locality_determine_ipa_order(), locality_determine_static_order(), and partition_callchain().

◆ create_partition()

|

static |

Create and return a new partition and increment NPARTITIONS.

References locality_partition_def::insns, locality_partitions, locality_partition_def::nodes, and locality_partition_def::part_id.

Referenced by locality_partition_and_clone(), and partition_callchain().

◆ edge_redirectable_p()

|

inlinestatic |

Return true if EDGE can be safely redirected to another callee.

References AVAIL_INTERPOSABLE, and LTO_LOCALITY_NON_INTERPOSABLE_CLONING.

Referenced by clone_node_as_needed(), and create_locality_clone().

◆ get_locality_info()

|

inlinestatic |

Return locality_info for NODE if present, otherwise return NULL.

References node_to_ch_info, and NULL.

Referenced by callee_templ_cmp(), clone_node_p(), partition_callchain(), and static_profile_templ_cmp().

◆ init_profile_stats()

|

inlinestatic |

Initialize fields of STATS.

References NULL, and profile_count::zero().

Referenced by adjust_profile_info().

◆ inline_clones()

|

static |

Create clones for CALLER's inlined callees, ORIG_INLINED_TO is the original node from clone_as_needed () such that new_inlined_to is a clone of it.

References adjust_recursive_callees(), cgraph_node::callees, cgraph_edge::caller, clone_to_node, cgraph_node::create_clone(), symtab_node::decl, cgraph_node::dump(), dump_file, thunk_info::get(), thunk_info::get_create(), symtab_node::get_uid(), inline_clones(), cgraph_node::inlined_to, node_to_clone, NULL, cgraph_node::thunk, and vNULL.

Referenced by clone_node_as_needed(), and inline_clones().

◆ is_entry_node_p()

|

static |

Determine whether NODE is an entrypoint to a callchain.

References SYMBOL_PARTITION.

Referenced by locality_determine_ipa_order(), and locality_determine_static_order().

◆ lc_execute()

|

static |

Entry point to locality-clone pass.

References clone_to_node, FOR_EACH_SYMBOL, locality_partition_and_clone(), node_to_ch_info, node_to_clone, NULL, and templ_hash_map.

◆ locality_dc_template_p()

|

static |

Return true if template component is found in the demangled function name in DC.

References locality_dc_template_p().

Referenced by locality_dc_template_p(), and populate_templ_info().

◆ locality_determine_ipa_order()

|

static |

Determine order of all external nodes if PGO profile is available. Store the order in ORDER.

References compare_edge_profile_counts(), count, create_locality_info(), dump_file, FOR_EACH_DEFINED_FUNCTION, profile_count::initialized_p(), profile_count::ipa_p(), is_entry_node_p(), and SYMBOL_PARTITION.

Referenced by locality_partition_and_clone().

◆ locality_determine_static_order()

|

static |

Determine order of all external nodes if only static profile is available. Store the order in ORDER.

References count, create_locality_info(), dump_file, FOR_EACH_DEFINED_FUNCTION, is_entry_node_p(), LTO_LOCALITY_CPP_TEMPLATE, order_templ_hashes(), static_profile_cmp(), static_profile_templ_cmp(), and SYMBOL_PARTITION.

Referenced by locality_partition_and_clone().

◆ locality_partition_and_clone()

|

static |

Partitioning for code locality. 1. Create and sort callchains. If PGO is available, use real profile counts. Otherwise, use a set of heuristics to sort the callchains. 2. Partition the external nodes and their callchains in the determined order 2.1. If !partition, partition, else try and clone if it satisfies cloning criteria. 3. Partition all other unpartitioned nodes.

References add_node_to_partition(), create_partition(), dump_file, i, loc_infos, locality_determine_ipa_order(), locality_determine_static_order(), node_partitioned_p(), toplevel_node::order, partition_callchain(), symtab, and vNULL.

Referenced by lc_execute().

◆ make_pass_ipa_locality_cloning()

| ipa_opt_pass_d * make_pass_ipa_locality_cloning | ( | gcc::context * | ctxt | ) |

◆ node_in_partition_p()

|

static |

Return TRUE if NODE is in PARTITION.

Referenced by clone_node_as_needed(), create_locality_clone(), and partition_callchain().

◆ node_partitioned_p()

|

inlinestatic |

Return true if NODE is already in some partition.

Referenced by add_node_to_partition(), locality_partition_and_clone(), and partition_callchain().

◆ order_templ_hashes()

|

static |

Sort template instantiation types and record the sorted order.

References gcc_assert, i, templ_info::order, sort_templ_hashes_cmp(), and templ_hash_map.

Referenced by locality_determine_static_order().

◆ partition_callchain()

|

static |

Partition NODE's callees into PARTITION or clone if already partitioned and satisfies cloning criteria such as CLONING_MODEL, REAL_FREQ and SIZE cut-offs.

callgraph can have multiple caller to callee edges for multiple callsites For the first such edge, we make decisions about cutoffs and cloning because we redirect ALL callsites to cloned callee, not just one of them.

References add_node_to_partition(), locality_info::all_callees, callee_templ_cmp(), clone_node_as_needed(), clone_node_p(), clone_to_node, create_locality_info(), create_partition(), dump_file, get_locality_info(), symtab_node::get_uid(), i, LTO_LOCALITY_CPP_TEMPLATE, LTO_LOCALITY_NON_INTERPOSABLE_CLONING, node_in_partition_p(), node_partitioned_p(), node_to_clone, NULL, partition_callchain(), and SYMBOL_PARTITION.

Referenced by locality_partition_and_clone(), and partition_callchain().

◆ populate_callee_locality_info()

|

static |

Populate locality_info for NODE from its direct callees and callees via inlined nodes. N is used to iterate callees of NODE and callees of inlined callees of NODE.

References locality_info::callee_info, locality_callee_info::edge, locality_callee_info::freq, populate_callee_locality_info(), locality_callee_info::ultimate_callee, and locality_callee_info::ultimate_caller.

Referenced by create_locality_info(), and populate_callee_locality_info().

◆ populate_caller_locality_info()

|

static |

Populate locality_info for NODE from its direct callers.

References locality_info::caller_freq.

Referenced by create_locality_info().

◆ populate_templ_info()

|

static |

Generate hash for the template type from NODE's name if NODE represents a templated functions. If present, record in INFO.

References templ_info::count, DECL_ASSEMBLER_NAME, free(), IDENTIFIER_POINTER, locality_dc_template_p(), NULL, locality_info::templ_hash, and templ_hash_map.

Referenced by create_locality_info().

◆ sort_all_callees_default()

|

static |

Sort all_callees in INFO by edge hotness.

References locality_info::all_callees, callee_default_cmp(), and locality_info::callee_info.

Referenced by create_locality_info().

◆ sort_templ_hashes_cmp()

|

static |

Helper function for qsort; sort template instantiation types in descending order of their frequency count.

References a, b, and gcc_assert.

Referenced by order_templ_hashes().

◆ static_profile_cmp()

|

static |

Forward declaration.

Helper function for qsort; sort nodes by order.

References a, b, and compare_node_uids().

Referenced by compare_edge_profile_counts(), locality_determine_static_order(), and static_profile_templ_cmp().

◆ static_profile_templ_cmp()

|

static |

Helper function for qsort; sort nodes in the descending order derived of template instantiation types by frequency count.

References a, b, get_locality_info(), templ_info::order, static_profile_cmp(), locality_info::templ_hash, and templ_hash_map.

Referenced by locality_determine_static_order().

◆ suitable_for_locality_cloning_p()

|

static |

Determine if EDGE->CALLEE is suitable for cloning. It is assummed that the callee is not an inlined node.

References AVAIL_INTERPOSABLE, and LTO_LOCALITY_NON_INTERPOSABLE_CLONING.

Referenced by clone_node_p().

Variable Documentation

◆ clone_to_node

|

static |

Map from clone to its original node.

Referenced by clone_node_as_needed(), inline_clones(), lc_execute(), and partition_callchain().

◆ loc_infos

|

static |

Pool allocation for locality_info.

Referenced by create_locality_info(), and locality_partition_and_clone().

◆ locality_partitions

| vec<locality_partition> locality_partitions |

Locality partitions, assigns nodes to partitions. These are used later in WPA partitioning.

Referenced by create_partition().

◆ node_to_ch_info

|

static |

Referenced by create_locality_info(), get_locality_info(), and lc_execute().

◆ node_to_clone

|

static |

Map from original node to its latest clone. Gets overwritten whenever a new clone is created from the same node.

Referenced by adjust_recursive_callees(), clone_node_as_needed(), inline_clones(), lc_execute(), and partition_callchain().

◆ templ_hash_map

|

static |

Referenced by callee_templ_cmp(), lc_execute(), order_templ_hashes(), populate_templ_info(), and static_profile_templ_cmp().