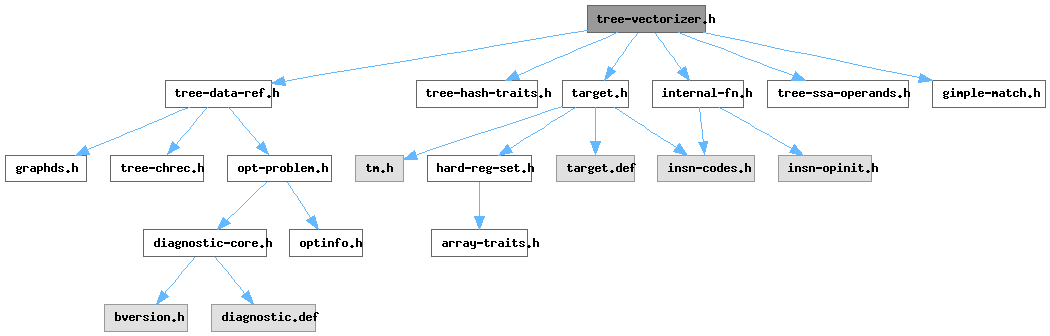

#include "tree-data-ref.h"

#include "tree-hash-traits.h"

#include "target.h"

#include "internal-fn.h"

#include "tree-ssa-operands.h"

#include "gimple-match.h"

#include "dominance.h"


Data Structures

| Kind | Name |
| --- | --- |
| struct | stmt_info_for_cost |
| struct | vect_scalar_ops_slice |
| struct | vect_scalar_ops_slice_hash |
| struct | vect_data |
| struct | vect_simd_clone_data |
| struct | vect_load_store_data |
| struct | _slp_tree |
| class | _slp_instance |
| struct | scalar_cond_masked_key |
| struct | default_hash_traits< scalar_cond_masked_key > |
| class | vec_lower_bound |
| class | vec_info_shared |
| class | vec_info |
| struct | rgroup_controls |
| struct | vec_loop_masks |
| class | vect_reduc_info_s |
| struct | vect_reusable_accumulator |
| class | _loop_vec_info |
| struct | slp_root |
| class | _bb_vec_info |
| class | dr_vec_info |
| class | _stmt_vec_info |
| struct | gather_scatter_info |
| class | vector_costs |
| class | auto_purge_vect_location |
| struct | vect_loop_form_info |
| class | vect_pattern |

Variables

| Type | Name |
| --- | --- |
| dump_user_location_t | vect_location |
| vect_pattern_decl_t | slp_patterns [] |
| size_t | num__slp_patterns |

Macro Definition Documentation

◆ BB_VINFO_BBS

#define BB_VINFO_BBS(B)

◆ BB_VINFO_DATAREFS

#define BB_VINFO_DATAREFS(B)

Referenced by vect_slp_region().

◆ BB_VINFO_DDRS

#define BB_VINFO_DDRS(B)

◆ BB_VINFO_GROUPED_STORES

#define BB_VINFO_GROUPED_STORES(B)

Referenced by vect_analyze_group_access_1().

◆ BB_VINFO_NBBS

#define BB_VINFO_NBBS(B)

◆ BB_VINFO_SLP_INSTANCES

#define BB_VINFO_SLP_INSTANCES(B)

Referenced by vect_slp_analyze_bb_1(), and vect_slp_region().

◆ DR_GROUP_FIRST_ELEMENT

#define DR_GROUP_FIRST_ELEMENT(S)

Referenced by vect_optimize_slp_pass::decide_masked_load_lanes(), dr_misalignment(), dr_safe_speculative_read_required(), dr_set_safe_speculative_read_required(), dr_target_alignment(), ensure_base_align(), get_load_store_type(), vect_optimize_slp_pass::internal_node_cost(), vect_optimize_slp_pass::remove_redundant_permutations(), vect_optimize_slp_pass::start_choosing_layouts(), vect_analyze_data_ref_access(), vect_analyze_data_ref_accesses(), vect_analyze_data_ref_dependence(), vect_analyze_data_refs_alignment(), vect_analyze_group_access(), vect_analyze_group_access_1(), vect_analyze_loop_2(), vect_analyze_slp(), vect_build_slp_tree_1(), vect_build_slp_tree_2(), vect_compute_data_ref_alignment(), vect_create_data_ref_ptr(), vect_fixup_store_groups_with_patterns(), vect_get_place_in_interleaving_chain(), vect_lower_load_permutations(), vect_lower_load_permutations(), vect_preserves_scalar_order_p(), vect_prune_runtime_alias_test_list(), vect_relevant_for_alignment_p(), vect_slp_analyze_data_ref_dependence(), vect_slp_analyze_instance_dependence(), vect_slp_analyze_load_dependences(), vect_slp_analyze_node_alignment(), vect_small_gap_p(), vect_split_slp_store_group(), vect_supportable_dr_alignment(), vect_transform_slp_perm_load_1(), vect_vfa_access_size(), vector_alignment_reachable_p(), vectorizable_load(), vectorizable_store(), and vllp_cmp().

◆ DR_GROUP_GAP

#define DR_GROUP_GAP(S)

Referenced by get_load_store_type(), vect_optimize_slp_pass::remove_redundant_permutations(), vect_analyze_group_access_1(), vect_build_slp_tree_2(), vect_fixup_store_groups_with_patterns(), vect_get_place_in_interleaving_chain(), vect_lower_load_permutations(), vect_split_slp_store_group(), vect_vfa_access_size(), and vectorizable_load().

◆ DR_GROUP_NEXT_ELEMENT

#define DR_GROUP_NEXT_ELEMENT(S)

Referenced by get_group_alias_ptr_type(), get_load_store_type(), vect_optimize_slp_pass::remove_redundant_permutations(), vect_analyze_data_ref_access(), vect_analyze_data_ref_accesses(), vect_analyze_group_access(), vect_analyze_group_access_1(), vect_analyze_loop_2(), vect_analyze_slp_instance(), vect_build_slp_tree_2(), vect_create_data_ref_ptr(), vect_fixup_store_groups_with_patterns(), vect_get_place_in_interleaving_chain(), vect_lower_load_permutations(), vect_preserves_scalar_order_p(), vect_remove_stores(), vect_slp_analyze_load_dependences(), vect_split_slp_store_group(), and vectorizable_load().

◆ DR_GROUP_SIZE

#define DR_GROUP_SIZE(S)

Referenced by vect_optimize_slp_pass::decide_masked_load_lanes(), get_load_store_type(), vect_optimize_slp_pass::internal_node_cost(), vect_optimize_slp_pass::remove_redundant_permutations(), vect_optimize_slp_pass::start_choosing_layouts(), vect_analyze_group_access_1(), vect_analyze_loop_2(), vect_analyze_slp(), vect_analyze_slp_instance(), vect_build_slp_tree_2(), vect_compute_data_ref_alignment(), vect_create_data_ref_ptr(), vect_enhance_data_refs_alignment(), vect_fixup_store_groups_with_patterns(), vect_lower_load_permutations(), vect_slp_analyze_instance_dependence(), vect_small_gap_p(), vect_split_slp_store_group(), vect_supportable_dr_alignment(), vect_transform_slp_perm_load_1(), vect_vfa_access_size(), vector_alignment_reachable_p(), vectorizable_load(), and vectorizable_store().

◆ DR_MISALIGNMENT_UNINITIALIZED

#define DR_MISALIGNMENT_UNINITIALIZED (-2)

Referenced by dr_misalignment(), ensure_base_align(), vec_info::new_stmt_vec_info(), and vect_slp_analyze_node_alignment().

◆ DR_MISALIGNMENT_UNKNOWN

#define DR_MISALIGNMENT_UNKNOWN (-1)

Info on data references alignment.

Referenced by dr_misalignment(), get_load_store_type(), known_alignment_for_access_p(), vect_compute_data_ref_alignment(), vect_dr_misalign_for_aligned_access(), vect_enhance_data_refs_alignment(), vect_get_peeling_costs_all_drs(), vect_known_alignment_in_bytes(), vect_peeling_supportable(), vect_slp_analyze_node_alignment(), vect_supportable_dr_alignment(), vect_update_misalignment_for_peel(), vectorizable_load(), and vectorizable_store().

◆ DR_SCALAR_KNOWN_BOUNDS

#define DR_SCALAR_KNOWN_BOUNDS(DR)

Referenced by get_load_store_type(), vect_analyze_early_break_dependences(), vect_enhance_data_refs_alignment(), and vectorizable_load().

◆ DR_TARGET_ALIGNMENT

#define DR_TARGET_ALIGNMENT(DR)

Referenced by ensure_base_align(), get_load_store_type(), get_misalign_in_elems(), vect_dr_aligned_if_related_peeled_dr_is(), vect_enhance_data_refs_alignment(), vect_gen_prolog_loop_niters(), vect_get_peeling_costs_all_drs(), vect_known_alignment_in_bytes(), vect_peeling_supportable(), vect_setup_realignment(), vect_update_misalignment_for_peel(), vectorizable_load(), and vectorizable_store().

◆ DUMP_VECT_SCOPE

#define DUMP_VECT_SCOPE(MSG)

A macro that calls dump_begin_scope (MSG, vect_location) via an RAII object, printing "=== MSG ===\n" to the dump file etc., and then calls dump_end_scope () once the object goes out of scope, thereby capturing the nesting of the scopes. These scopes affect the dump messages within them: dump messages at the top level implicitly default to MSG_PRIORITY_USER_FACING, whereas those in a nested scope implicitly default to MSG_PRIORITY_INTERNALS.

Referenced by move_early_exit_stmts(), vect_analyze_data_ref_accesses(), vect_analyze_data_ref_dependences(), vect_analyze_data_refs(), vect_analyze_data_refs_alignment(), vect_analyze_early_break_dependences(), vect_analyze_loop(), vect_analyze_loop_form(), vect_analyze_scalar_cycles_1(), vect_analyze_slp(), vect_bb_partition_graph(), vect_compute_single_scalar_iteration_cost(), vect_determine_precisions(), vect_enhance_data_refs_alignment(), vect_get_loop_niters(), vect_make_slp_decision(), vect_mark_stmts_to_be_vectorized(), vect_match_slp_patterns(), vect_pattern_recog(), vect_prune_runtime_alias_test_list(), vect_slp_analyze_bb_1(), vect_slp_analyze_instance_alignment(), vect_slp_analyze_instance_dependence(), vect_slp_analyze_operations(), vect_transform_loop(), vect_update_inits_of_drs(), vect_update_ivs_after_vectorizer_for_early_breaks(), vectorizable_assignment(), vectorizable_bswap(), vectorizable_call(), vectorizable_conversion(), vectorizable_early_exit(), vectorizable_induction(), vectorizable_nonlinear_induction(), vectorizable_operation(), vectorizable_shift(), and vectorizable_simd_clone_call().
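The RAII behaviour described for DUMP_VECT_SCOPE can be illustrated with a minimal, self-contained sketch. Nothing below is GCC code: `scope_log`, `scoped_dump`, `SKETCH_DUMP_SCOPE`, `is_user_facing` and `nested_depth_demo` are hypothetical names, and the sketch assumes that "top level" means "outside every open scope".

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for the dump machinery; this is NOT GCC's API.
struct scope_log
{
  std::vector<std::string> lines; // text that would reach the dump file
  int depth = 0;                  // number of currently open scopes
};

// RAII guard: opens a scope when constructed, closes it when destroyed.
class scoped_dump
{
public:
  scoped_dump (scope_log &log, const std::string &msg) : m_log (log)
  {
    m_log.lines.push_back ("=== " + msg + " ===");
    ++m_log.depth;
  }
  ~scoped_dump () { --m_log.depth; }

  scoped_dump (const scoped_dump &) = delete;
  scoped_dump &operator= (const scoped_dump &) = delete;

private:
  scope_log &m_log;
};

// Hypothetical macro with the same shape as DUMP_VECT_SCOPE (MSG).
#define SKETCH_DUMP_SCOPE(LOG, MSG) \
  scoped_dump sketch_scope_guard ((LOG), (MSG))

// Assumption: messages outside every scope default to "user facing",
// messages inside any open scope default to "internal".
inline bool is_user_facing (const scope_log &log) { return log.depth == 0; }

// Opens two nested scopes and returns the depth observed in the inner one.
inline int nested_depth_demo (scope_log &log)
{
  SKETCH_DUMP_SCOPE (log, "outer");
  int inner_depth;
  {
    SKETCH_DUMP_SCOPE (log, "inner");
    inner_depth = log.depth;
  } // inner guard destroyed here, depth drops by one
  return inner_depth;
} // outer guard destroyed here, depth returns to its starting value
```

Because the guard's destructor runs on every exit path, the scope depth stays balanced even when a function returns early, which is the main reason to prefer an RAII object over paired begin/end calls.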

◆ LOOP_REQUIRES_VERSIONING

#define LOOP_REQUIRES_VERSIONING(L)

Referenced by vect_analyze_loop(), vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_estimate_min_profitable_iters(), and vect_transform_loop().

◆ LOOP_REQUIRES_VERSIONING_FOR_ALIAS

#define LOOP_REQUIRES_VERSIONING_FOR_ALIAS(L)

Referenced by vect_estimate_min_profitable_iters(), and vect_loop_versioning().

◆ LOOP_REQUIRES_VERSIONING_FOR_ALIGNMENT

#define LOOP_REQUIRES_VERSIONING_FOR_ALIGNMENT(L)

Referenced by vect_enhance_data_refs_alignment(), vect_estimate_min_profitable_iters(), and vect_loop_versioning().

◆ LOOP_REQUIRES_VERSIONING_FOR_NITERS

#define LOOP_REQUIRES_VERSIONING_FOR_NITERS(L)

Referenced by vect_estimate_min_profitable_iters(), and vect_loop_versioning().

◆ LOOP_REQUIRES_VERSIONING_FOR_SIMD_IF_COND

#define LOOP_REQUIRES_VERSIONING_FOR_SIMD_IF_COND(L)

Referenced by vect_loop_versioning().

◆ LOOP_REQUIRES_VERSIONING_FOR_SPEC_READ

#define LOOP_REQUIRES_VERSIONING_FOR_SPEC_READ(L)

Referenced by vect_loop_versioning().

◆ LOOP_VINFO_ALLOW_MUTUAL_ALIGNMENT

#define LOOP_VINFO_ALLOW_MUTUAL_ALIGNMENT(L)

Referenced by vect_create_cond_for_align_checks(), and vect_enhance_data_refs_alignment().

◆ LOOP_VINFO_ALTERNATE_DEFS

#define LOOP_VINFO_ALTERNATE_DEFS(L)

Referenced by vect_analyze_slp(), and vect_stmt_relevant_p().

◆ LOOP_VINFO_BBS

#define LOOP_VINFO_BBS(L)

Referenced by update_epilogue_loop_vinfo(), vect_analyze_loop_2(), vect_compute_single_scalar_iteration_cost(), vect_do_peeling(), vect_mark_stmts_to_be_vectorized(), and vect_transform_loop().

◆ LOOP_VINFO_CAN_USE_PARTIAL_VECTORS_P

#define LOOP_VINFO_CAN_USE_PARTIAL_VECTORS_P(L)

Referenced by check_load_store_for_partial_vectors(), get_load_store_type(), vect_analyze_loop(), vect_analyze_loop_2(), vect_compute_type_for_early_break_scalar_iv(), vect_determine_partial_vectors_and_peeling(), vect_reduction_update_partial_vector_usage(), vectorizable_call(), vectorizable_condition(), vectorizable_conversion(), vectorizable_early_exit(), vectorizable_lane_reducing(), vectorizable_live_operation(), vectorizable_load(), vectorizable_operation(), vectorizable_reduction(), vectorizable_simd_clone_call(), and vectorizable_store().

◆ LOOP_VINFO_CHECK_NONZERO

#define LOOP_VINFO_CHECK_NONZERO(L)

Referenced by vect_check_nonzero_value(), and vect_prune_runtime_alias_test_list().

◆ LOOP_VINFO_CHECK_UNEQUAL_ADDRS

#define LOOP_VINFO_CHECK_UNEQUAL_ADDRS(L)

Referenced by vect_analyze_loop_2(), vect_create_cond_for_unequal_addrs(), vect_estimate_min_profitable_iters(), and vect_prune_runtime_alias_test_list().

◆ LOOP_VINFO_COMP_ALIAS_DDRS

#define LOOP_VINFO_COMP_ALIAS_DDRS(L)

Referenced by vect_analyze_loop_2(), vect_create_cond_for_alias_checks(), vect_estimate_min_profitable_iters(), and vect_prune_runtime_alias_test_list().

◆ LOOP_VINFO_COST_MODEL_THRESHOLD

#define LOOP_VINFO_COST_MODEL_THRESHOLD(L)

Referenced by vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_apply_runtime_profitability_check_p(), vect_loop_versioning(), and vect_transform_loop().

◆ LOOP_VINFO_DATAREFS

#define LOOP_VINFO_DATAREFS(L)

Referenced by update_epilogue_loop_vinfo(), vect_analyze_data_ref_dependences(), vect_analyze_data_refs_alignment(), vect_analyze_loop_2(), vect_enhance_data_refs_alignment(), vect_get_peeling_costs_all_drs(), vect_peeling_supportable(), and vect_update_inits_of_drs().

◆ LOOP_VINFO_DDRS

#define LOOP_VINFO_DDRS(L)

Referenced by vect_analyze_data_ref_dependences().

◆ LOOP_VINFO_DRS_ADVANCED_BY

#define LOOP_VINFO_DRS_ADVANCED_BY(L)

Referenced by update_epilogue_loop_vinfo(), and vect_transform_loop().

◆ LOOP_VINFO_EARLY_BREAKS

#define LOOP_VINFO_EARLY_BREAKS(L)

Referenced by get_load_store_type(), vect_analyze_data_ref_dependences(), vect_analyze_loop(), vect_analyze_loop_2(), vect_can_peel_nonlinear_iv_p(), vect_create_loop_vinfo(), vect_do_peeling(), vect_recog_gcond_pattern(), vect_transform_loop(), vect_update_ivs_after_vectorizer_for_early_breaks(), vect_use_loop_mask_for_alignment_p(), vectorizable_live_operation(), vectorizable_live_operation_1(), and vectorizable_load().

◆ LOOP_VINFO_EARLY_BREAKS_LIVE_IVS

#define LOOP_VINFO_EARLY_BREAKS_LIVE_IVS(L)

◆ LOOP_VINFO_EARLY_BREAKS_VECT_PEELED

#define LOOP_VINFO_EARLY_BREAKS_VECT_PEELED(L)

Referenced by vect_analyze_early_break_dependences(), vect_do_peeling(), vect_enhance_data_refs_alignment(), vect_gen_vector_loop_niters_mult_vf(), vect_set_loop_condition_partial_vectors(), vect_set_loop_condition_partial_vectors_avx512(), and vectorizable_live_operation().

◆ LOOP_VINFO_EARLY_BRK_DEST_BB

#define LOOP_VINFO_EARLY_BRK_DEST_BB(L)

Referenced by move_early_exit_stmts(), and vect_analyze_early_break_dependences().

◆ LOOP_VINFO_EARLY_BRK_IV_TYPE

#define LOOP_VINFO_EARLY_BRK_IV_TYPE(L)

Referenced by vect_compute_type_for_early_break_scalar_iv(), and vect_do_peeling().

◆ LOOP_VINFO_EARLY_BRK_NITERS_VAR

#define LOOP_VINFO_EARLY_BRK_NITERS_VAR(L)

Referenced by vect_do_peeling(), and vect_update_ivs_after_vectorizer_for_early_breaks().

◆ LOOP_VINFO_EARLY_BRK_STORES

#define LOOP_VINFO_EARLY_BRK_STORES(L)

Referenced by move_early_exit_stmts(), and vect_analyze_early_break_dependences().

◆ LOOP_VINFO_EARLY_BRK_VUSES

#define LOOP_VINFO_EARLY_BRK_VUSES(L)

Referenced by move_early_exit_stmts(), and vect_analyze_early_break_dependences().

◆ LOOP_VINFO_EPILOGUE_MAIN_EXIT

#define LOOP_VINFO_EPILOGUE_MAIN_EXIT(L)

Referenced by vect_do_peeling().

◆ LOOP_VINFO_EPILOGUE_P

#define LOOP_VINFO_EPILOGUE_P(L)

Referenced by check_scan_store(), vect_analyze_data_ref_dependences(), vect_analyze_loop_1(), vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_compute_data_ref_alignment(), vect_create_loop_vinfo(), vect_determine_partial_vectors_and_peeling(), vect_estimate_min_profitable_iters(), vect_need_peeling_or_partial_vectors_p(), vect_transform_loop(), and vect_transform_loops().

◆ LOOP_VINFO_FULLY_MASKED_P

#define LOOP_VINFO_FULLY_MASKED_P(L)

Referenced by check_scan_store(), supports_vector_compare_and_branch(), vect_estimate_min_profitable_iters(), vect_schedule_slp_node(), vect_set_loop_condition_partial_vectors(), vect_set_loop_controls_directly(), vect_transform_reduction(), vect_use_loop_mask_for_alignment_p(), vectorizable_call(), vectorizable_condition(), vectorizable_early_exit(), vectorizable_live_operation(), vectorizable_live_operation_1(), vectorizable_load(), vectorizable_operation(), vectorizable_simd_clone_call(), vectorizable_store(), and vectorize_fold_left_reduction().

◆ LOOP_VINFO_FULLY_WITH_LENGTH_P

#define LOOP_VINFO_FULLY_WITH_LENGTH_P(L)

Referenced by supports_vector_compare_and_branch(), vect_estimate_min_profitable_iters(), vect_schedule_slp_node(), vectorizable_call(), vectorizable_condition(), vectorizable_early_exit(), vectorizable_live_operation(), vectorizable_live_operation_1(), vectorizable_load(), vectorizable_operation(), vectorizable_scan_store(), vectorizable_store(), and vectorize_fold_left_reduction().

◆ LOOP_VINFO_GROUPED_STORES

#define LOOP_VINFO_GROUPED_STORES(L)

Referenced by vect_analyze_group_access_1().

◆ LOOP_VINFO_HAS_MASK_STORE

#define LOOP_VINFO_HAS_MASK_STORE(L)

Referenced by vectorizable_store().

◆ LOOP_VINFO_INNER_LOOP_COST_FACTOR

#define LOOP_VINFO_INNER_LOOP_COST_FACTOR(L)

Referenced by vector_costs::adjust_cost_for_freq(), vect_compute_single_scalar_iteration_cost(), and vect_create_loop_vinfo().

◆ LOOP_VINFO_INT_NITERS

#define LOOP_VINFO_INT_NITERS(L)

Referenced by vector_costs::better_epilogue_loop_than_p(), vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_can_peel_nonlinear_iv_p(), vect_do_peeling(), vect_enhance_data_refs_alignment(), vect_get_peel_iters_epilogue(), vect_known_niters_smaller_than_vf(), vect_need_peeling_or_partial_vectors_p(), and vect_transform_loop().

◆ LOOP_VINFO_INV_PATTERN_DEF_SEQ

#define LOOP_VINFO_INV_PATTERN_DEF_SEQ(L)

Referenced by vect_transform_loop().

◆ LOOP_VINFO_LENS

#define LOOP_VINFO_LENS(L)

Referenced by check_load_store_for_partial_vectors(), vect_analyze_loop_2(), vect_estimate_min_profitable_iters(), vect_reduction_update_partial_vector_usage(), vect_set_loop_condition_partial_vectors(), vect_transform_reduction(), vect_update_ivs_after_vectorizer_for_early_breaks(), vect_verify_loop_lens(), vectorizable_call(), vectorizable_condition(), vectorizable_early_exit(), vectorizable_induction(), vectorizable_live_operation(), vectorizable_live_operation_1(), vectorizable_load(), vectorizable_operation(), vectorizable_scan_store(), and vectorizable_store().

◆ LOOP_VINFO_LOOP

#define LOOP_VINFO_LOOP(L)

Access Functions.

Referenced by vector_costs::compare_inside_loop_cost(), cse_and_gimplify_to_preheader(), get_load_store_type(), vec_info::insert_seq_on_entry(), loop_niters_no_overflow(), move_early_exit_stmts(), parloops_is_simple_reduction(), stmt_in_inner_loop_p(), vect_analyze_data_ref_access(), vect_analyze_data_ref_dependence(), vect_analyze_data_refs(), vect_analyze_early_break_dependences(), vect_analyze_loop_1(), vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_analyze_possibly_independent_ddr(), vect_analyze_scalar_cycles(), vect_analyze_scalar_cycles_1(), vect_analyze_slp(), vect_analyze_slp_reduc_chain(), vect_better_loop_vinfo_p(), vect_build_loop_niters(), vect_build_slp_tree_2(), vect_can_advance_ivs_p(), vect_check_gather_scatter(), vect_compute_data_ref_alignment(), vect_compute_single_scalar_iteration_cost(), vect_create_cond_for_alias_checks(), vect_create_data_ref_ptr(), vect_create_epilog_for_reduction(), vect_do_peeling(), vect_dr_behavior(), vect_emit_reduction_init_stmts(), vect_enhance_data_refs_alignment(), vect_estimate_min_profitable_iters(), vect_gen_vector_loop_niters(), vect_is_simple_reduction(), vect_iv_limit_for_partial_vectors(), vect_known_niters_smaller_than_vf(), vect_loop_versioning(), vect_mark_for_runtime_alias_test(), vect_mark_stmts_to_be_vectorized(), vect_min_prec_for_max_niters(), vect_model_reduction_cost(), vect_peeling_hash_choose_best_peeling(), vect_peeling_hash_insert(), vect_phi_first_order_recurrence_p(), vect_prepare_for_masked_peels(), vect_prune_runtime_alias_test_list(), vect_reassociating_reduction_p(), vect_record_base_alignments(), vect_schedule_slp_node(), vect_setup_realignment(), vect_stmt_relevant_p(), vect_supportable_dr_alignment(), vect_transform_cycle_phi(), vect_transform_loop(), vect_transform_reduction(), vect_truncate_gather_scatter_offset(), vect_update_ivs_after_vectorizer(), vect_update_ivs_after_vectorizer_for_early_breaks(), vectorizable_call(), vectorizable_condition(), 
vectorizable_conversion(), vectorizable_early_exit(), vectorizable_induction(), vectorizable_lane_reducing(), vectorizable_live_operation(), vectorizable_load(), vectorizable_nonlinear_induction(), vectorizable_recurr(), vectorizable_reduction(), vectorizable_simd_clone_call(), vectorizable_store(), and vectorize_fold_left_reduction().
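The "Access Functions" label suggests that the LOOP_VINFO_* macros are thin accessors over a per-loop information record. The listing does not show their expansions, so the following is only a sketch of that general pattern under the assumption that each macro expands to a member access; `sketch_loop_info`, its fields, and the `SKETCH_VINFO_*` macros are hypothetical and do not reproduce GCC's definitions.

```cpp
#include <cassert>

// Hypothetical, trimmed-down stand-in for a per-loop info record.
struct sketch_loop_info
{
  int vect_factor = 1;       // assumed field, for illustration only
  bool vectorizable = false; // assumed field, for illustration only
};

// Accessor macros in the style of LOOP_VINFO_*(L): each expands to a
// member access, so it can be read from and assigned to.
#define SKETCH_VINFO_VECT_FACTOR(L) ((L)->vect_factor)
#define SKETCH_VINFO_VECTORIZABLE_P(L) ((L)->vectorizable)

// Records an analysis result through the accessors rather than by
// touching the fields directly.
inline void mark_vectorizable (sketch_loop_info *info, int vf)
{
  SKETCH_VINFO_VECT_FACTOR (info) = vf;
  SKETCH_VINFO_VECTORIZABLE_P (info) = true;
}
```

Routing every access through a macro keeps call sites stable if the underlying record is reorganised, at the cost of hiding which field is actually touched.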

◆ LOOP_VINFO_LOOP_CONDS

#define LOOP_VINFO_LOOP_CONDS(L)

Referenced by vect_analyze_slp(), and vect_create_loop_vinfo().

◆ LOOP_VINFO_LOOP_IV_COND

#define LOOP_VINFO_LOOP_IV_COND(L)

Referenced by vect_create_loop_vinfo(), and vect_stmt_relevant_p().

◆ LOOP_VINFO_LOOP_NEST

#define LOOP_VINFO_LOOP_NEST(L)

Referenced by vect_analyze_data_ref_dependences(), and vect_prune_runtime_alias_test_list().

◆ LOOP_VINFO_LOWER_BOUNDS

#define LOOP_VINFO_LOWER_BOUNDS(L)

Referenced by vect_analyze_loop_2(), vect_check_lower_bound(), vect_create_cond_for_lower_bounds(), and vect_estimate_min_profitable_iters().

◆ LOOP_VINFO_MAIN_EXIT

#define LOOP_VINFO_MAIN_EXIT(L)

Referenced by vect_analyze_loop_2(), vect_create_epilog_for_reduction(), vect_create_loop_vinfo(), vect_do_peeling(), vect_enhance_data_refs_alignment(), vect_gen_vector_loop_niters_mult_vf(), vect_loop_versioning(), vect_set_loop_controls_directly(), vect_transform_loop(), vect_update_ivs_after_vectorizer_for_early_breaks(), and vectorizable_live_operation().

◆ LOOP_VINFO_MAIN_LOOP_INFO

#define LOOP_VINFO_MAIN_LOOP_INFO(L)

Referenced by vect_analyze_loop_costing(), vect_create_loop_vinfo(), and vect_need_peeling_or_partial_vectors_p().

◆ LOOP_VINFO_MASK_NITERS_PFA_OFFSET

#define LOOP_VINFO_MASK_NITERS_PFA_OFFSET(L)

◆ LOOP_VINFO_MASK_SKIP_NITERS

#define LOOP_VINFO_MASK_SKIP_NITERS(L)

Referenced by vect_can_peel_nonlinear_iv_p(), vect_iv_limit_for_partial_vectors(), vect_prepare_for_masked_peels(), vect_set_loop_condition_partial_vectors(), vect_set_loop_condition_partial_vectors_avx512(), vect_update_ivs_after_vectorizer_for_early_breaks(), vectorizable_induction(), and vectorizable_nonlinear_induction().

◆ LOOP_VINFO_MASKS

#define LOOP_VINFO_MASKS(L)

Referenced by can_produce_all_loop_masks_p(), check_load_store_for_partial_vectors(), vect_analyze_loop_2(), vect_estimate_min_profitable_iters(), vect_get_max_nscalars_per_iter(), vect_reduction_update_partial_vector_usage(), vect_set_loop_condition_partial_vectors(), vect_set_loop_condition_partial_vectors_avx512(), vect_transform_reduction(), vect_verify_full_masking(), vect_verify_full_masking_avx512(), vectorizable_call(), vectorizable_condition(), vectorizable_early_exit(), vectorizable_live_operation(), vectorizable_live_operation_1(), vectorizable_load(), vectorizable_operation(), vectorizable_simd_clone_call(), and vectorizable_store().

◆ LOOP_VINFO_MAX_SPEC_READ_AMOUNT

#define LOOP_VINFO_MAX_SPEC_READ_AMOUNT(L)

Referenced by get_load_store_type(), and vect_create_cond_for_vla_spec_read().

◆ LOOP_VINFO_MAX_VECT_FACTOR

#define LOOP_VINFO_MAX_VECT_FACTOR(L)

Referenced by vect_analyze_loop_2(), and vect_estimate_min_profitable_iters().

◆ LOOP_VINFO_MAY_ALIAS_DDRS

#define LOOP_VINFO_MAY_ALIAS_DDRS(L)

Referenced by vect_mark_for_runtime_alias_test(), and vect_prune_runtime_alias_test_list().

◆ LOOP_VINFO_MAY_MISALIGN_STMTS

#define LOOP_VINFO_MAY_MISALIGN_STMTS(L)

Referenced by vect_create_cond_for_align_checks(), vect_enhance_data_refs_alignment(), and vect_estimate_min_profitable_iters().

◆ LOOP_VINFO_MUST_USE_PARTIAL_VECTORS_P

#define LOOP_VINFO_MUST_USE_PARTIAL_VECTORS_P(L)

Referenced by get_load_store_type(), vect_analyze_loop_2(), vect_determine_partial_vectors_and_peeling(), and vect_enhance_data_refs_alignment().

◆ LOOP_VINFO_NBBS

#define LOOP_VINFO_NBBS(L)

Referenced by update_epilogue_loop_vinfo().

◆ LOOP_VINFO_NITERS

#define LOOP_VINFO_NITERS(L)

Referenced by loop_niters_no_overflow(), vect_build_loop_niters(), vect_compute_type_for_early_break_scalar_iv(), vect_create_loop_vinfo(), vect_do_peeling(), vect_gen_prolog_loop_niters(), vect_need_peeling_or_partial_vectors_p(), vect_prepare_for_masked_peels(), vect_prune_runtime_alias_test_list(), vect_transform_loop(), vect_verify_loop_lens(), and vectorizable_simd_clone_call().

◆ LOOP_VINFO_NITERS_ASSUMPTIONS

#define LOOP_VINFO_NITERS_ASSUMPTIONS(L)

Referenced by vect_create_cond_for_niters_checks(), and vect_create_loop_vinfo().

◆ LOOP_VINFO_NITERS_KNOWN_P

#define LOOP_VINFO_NITERS_KNOWN_P(L)

Referenced by vector_costs::better_epilogue_loop_than_p(), loop_niters_no_overflow(), vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_apply_runtime_profitability_check_p(), vect_can_peel_nonlinear_iv_p(), vect_do_peeling(), vect_enhance_data_refs_alignment(), vect_estimate_min_profitable_iters(), vect_get_known_peeling_cost(), vect_get_peel_iters_epilogue(), vect_known_niters_smaller_than_vf(), vect_need_peeling_or_partial_vectors_p(), and vect_transform_loop().

◆ LOOP_VINFO_NITERS_UNCHANGED

#define LOOP_VINFO_NITERS_UNCHANGED(L)

Since LOOP_VINFO_NITERS and LOOP_VINFO_NITERSM1 can change after prologue peeling, this retains the total, unchanged number of scalar loop iterations for the cost model.

Referenced by vect_create_loop_vinfo(), vect_transform_loop(), and vectorizable_simd_clone_call().

◆ LOOP_VINFO_NITERS_UNCOUNTED_P

#define LOOP_VINFO_NITERS_UNCOUNTED_P(L)

Referenced by loop_niters_no_overflow(), vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_build_loop_niters(), vect_compute_type_for_early_break_scalar_iv(), vect_create_loop_vinfo(), vect_do_peeling(), vect_enhance_data_refs_alignment(), vect_gen_prolog_loop_niters(), vect_loop_versioning(), vect_min_prec_for_max_niters(), and vect_transform_loop().

◆ LOOP_VINFO_NITERSM1

#define LOOP_VINFO_NITERSM1(L)

Referenced by loop_niters_no_overflow(), vect_analyze_loop_costing(), vect_create_loop_vinfo(), vect_do_peeling(), vect_loop_versioning(), vect_min_prec_for_max_niters(), and vect_transform_loop().

◆ LOOP_VINFO_NO_DATA_DEPENDENCIES

#define LOOP_VINFO_NO_DATA_DEPENDENCIES(L)

Referenced by vect_analyze_data_ref_dependence(), vect_analyze_data_ref_dependences(), vect_analyze_possibly_independent_ddr(), and vectorizable_load().

◆ LOOP_VINFO_NON_LINEAR_IV

#define LOOP_VINFO_NON_LINEAR_IV(L)

Referenced by vect_analyze_scalar_cycles_1(), and vect_use_loop_mask_for_alignment_p().

◆ LOOP_VINFO_ORIG_LOOP_INFO

#define LOOP_VINFO_ORIG_LOOP_INFO(L)

Referenced by vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_better_loop_vinfo_p(), vect_compute_data_ref_alignment(), vect_create_loop_vinfo(), vect_find_reusable_accumulator(), and vect_transform_loop().

◆ LOOP_VINFO_ORIG_MAX_VECT_FACTOR

#define LOOP_VINFO_ORIG_MAX_VECT_FACTOR(L)

Referenced by vect_analyze_data_ref_dependences().

◆ LOOP_VINFO_PARTIAL_LOAD_STORE_BIAS

#define LOOP_VINFO_PARTIAL_LOAD_STORE_BIAS(L)

Referenced by vect_analyze_loop_2(), vect_estimate_min_profitable_iters(), vect_gen_loop_len_mask(), vect_get_loop_len(), vect_set_loop_controls_directly(), vect_verify_loop_lens(), vectorizable_call(), vectorizable_condition(), vectorizable_load(), vectorizable_operation(), vectorizable_store(), and vectorize_fold_left_reduction().

◆ LOOP_VINFO_PARTIAL_VECTORS_STYLE

#define LOOP_VINFO_PARTIAL_VECTORS_STYLE(L)

Referenced by vect_analyze_loop_2(), vect_estimate_min_profitable_iters(), vect_get_loop_mask(), vect_set_loop_condition(), vect_verify_full_masking(), vect_verify_full_masking_avx512(), and vect_verify_loop_lens().

◆ LOOP_VINFO_PEELING_FOR_ALIGNMENT

#define LOOP_VINFO_PEELING_FOR_ALIGNMENT(L)

Referenced by vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_can_peel_nonlinear_iv_p(), vect_compute_data_ref_alignment(), vect_compute_type_for_early_break_scalar_iv(), vect_do_peeling(), vect_enhance_data_refs_alignment(), vect_estimate_min_profitable_iters(), vect_gen_prolog_loop_niters(), vect_iv_limit_for_partial_vectors(), vect_need_peeling_or_partial_vectors_p(), vect_prepare_for_masked_peels(), vect_transform_loop(), vect_use_loop_mask_for_alignment_p(), and vectorizable_load().

◆ LOOP_VINFO_PEELING_FOR_GAPS

#define LOOP_VINFO_PEELING_FOR_GAPS(L)

Referenced by get_load_store_type(), vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_do_peeling(), vect_estimate_min_profitable_iters(), vect_gen_vector_loop_niters(), vect_get_peel_iters_epilogue(), vect_need_peeling_or_partial_vectors_p(), vect_transform_loop(), and vectorizable_load().

◆ LOOP_VINFO_PEELING_FOR_NITER

#define LOOP_VINFO_PEELING_FOR_NITER(L)

Referenced by vect_analyze_loop(), vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_determine_partial_vectors_and_peeling(), and vect_do_peeling().

◆ LOOP_VINFO_PTR_MASK

#define LOOP_VINFO_PTR_MASK(L)

Referenced by vect_create_cond_for_align_checks(), and vect_enhance_data_refs_alignment().

◆ LOOP_VINFO_REDUCTIONS

#define LOOP_VINFO_REDUCTIONS(L)

Referenced by vect_analyze_scalar_cycles_1().

◆ LOOP_VINFO_RGROUP_COMPARE_TYPE

#define LOOP_VINFO_RGROUP_COMPARE_TYPE(L)

Referenced by vect_get_loop_len(), vect_rgroup_iv_might_wrap_p(), vect_set_loop_condition_partial_vectors(), vect_set_loop_controls_directly(), vect_verify_full_masking(), vect_verify_full_masking_avx512(), and vect_verify_loop_lens().

◆ LOOP_VINFO_RGROUP_IV_TYPE

#define LOOP_VINFO_RGROUP_IV_TYPE(L)

Referenced by vect_analyze_loop_2(), vect_estimate_min_profitable_iters(), vect_get_loop_len(), vect_set_loop_condition_partial_vectors(), vect_set_loop_condition_partial_vectors_avx512(), vect_set_loop_controls_directly(), vect_verify_full_masking(), vect_verify_full_masking_avx512(), and vect_verify_loop_lens().

◆ LOOP_VINFO_SCALAR_ITERATION_COST

#define LOOP_VINFO_SCALAR_ITERATION_COST(L)

Referenced by vect_compute_single_scalar_iteration_cost(), vect_enhance_data_refs_alignment(), vect_estimate_min_profitable_iters(), and vect_peeling_hash_get_lowest_cost().

◆ LOOP_VINFO_SCALAR_LOOP

#define LOOP_VINFO_SCALAR_LOOP(L)

Referenced by set_uid_loop_bbs(), vect_do_peeling(), vect_loop_versioning(), and vect_transform_loop().

◆ LOOP_VINFO_SCALAR_LOOP_SCALING

#define LOOP_VINFO_SCALAR_LOOP_SCALING(L)

Referenced by vect_loop_versioning(), and vect_transform_loop().

◆ LOOP_VINFO_SCALAR_MAIN_EXIT

#define LOOP_VINFO_SCALAR_MAIN_EXIT(L)

Referenced by set_uid_loop_bbs(), vect_do_peeling(), and vect_transform_loop().

◆ LOOP_VINFO_SIMD_IF_COND

#define LOOP_VINFO_SIMD_IF_COND(L)

Referenced by vect_analyze_loop_2().

◆ LOOP_VINFO_SLP_INSTANCES

#define LOOP_VINFO_SLP_INSTANCES(L)

Referenced by vect_analyze_loop_2(), vect_analyze_slp(), vect_make_slp_decision(), and vect_transform_loop().

◆ LOOP_VINFO_UNALIGNED_DR

#define LOOP_VINFO_UNALIGNED_DR(L)

Referenced by get_misalign_in_elems(), vect_analyze_loop_2(), vect_enhance_data_refs_alignment(), and vect_gen_prolog_loop_niters().

◆ LOOP_VINFO_USE_VERSIONING_WITHOUT_PEELING

#define LOOP_VINFO_USE_VERSIONING_WITHOUT_PEELING(L)

Referenced by vect_do_peeling().

◆ LOOP_VINFO_USER_UNROLL

#define LOOP_VINFO_USER_UNROLL(L)

Referenced by vect_analyze_loop_1(), and vect_transform_loop().

◆ LOOP_VINFO_USING_DECREMENTING_IV_P

#define LOOP_VINFO_USING_DECREMENTING_IV_P(L)

Referenced by vect_analyze_loop_2(), vect_estimate_min_profitable_iters(), vect_set_loop_condition_partial_vectors(), and vect_set_loop_controls_directly().

◆ LOOP_VINFO_USING_PARTIAL_VECTORS_P

#define LOOP_VINFO_USING_PARTIAL_VECTORS_P(L)

Referenced by vector_costs::better_epilogue_loop_than_p(), vect_analyze_loop(), vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_can_peel_nonlinear_iv_p(), vect_determine_partial_vectors_and_peeling(), vect_do_peeling(), vect_estimate_min_profitable_iters(), vect_gen_vector_loop_niters(), vect_set_loop_condition(), vect_transform_loop(), vect_transform_loops(), and vectorizable_load().

◆ LOOP_VINFO_USING_SELECT_VL_P

#define LOOP_VINFO_USING_SELECT_VL_P(L)

Referenced by vect_analyze_loop_2(), vect_get_data_ptr_increment(), vect_get_strided_load_store_ops(), vect_set_loop_condition_partial_vectors(), vect_set_loop_controls_directly(), vect_update_ivs_after_vectorizer_for_early_breaks(), vectorizable_induction(), vectorizable_load(), and vectorizable_store().

◆ LOOP_VINFO_VECT_FACTOR

| #define LOOP_VINFO_VECT_FACTOR | ( | L | ) |

Referenced by vector_costs::better_epilogue_loop_than_p(), check_load_store_for_partial_vectors(), vector_costs::compare_inside_loop_cost(), get_load_store_type(), vect_optimize_slp_pass::internal_node_cost(), vect_optimize_slp_pass::remove_redundant_permutations(), vect_analyze_loop(), vect_analyze_loop_2(), vect_analyze_loop_costing(), vect_better_loop_vinfo_p(), vect_can_peel_nonlinear_iv_p(), vect_compute_data_ref_alignment(), vect_create_cond_for_vla_spec_read(), vect_do_peeling(), vect_enhance_data_refs_alignment(), vect_estimate_min_profitable_iters(), vect_gen_vector_loop_niters(), vect_gen_vector_loop_niters_mult_vf(), vect_get_loop_len(), vect_get_loop_mask(), vect_get_num_copies(), vect_iv_limit_for_partial_vectors(), vect_make_slp_decision(), vect_max_vf(), vect_need_peeling_or_partial_vectors_p(), vect_prepare_for_masked_peels(), vect_prune_runtime_alias_test_list(), vect_record_loop_len(), vect_set_loop_condition_partial_vectors(), vect_set_loop_condition_partial_vectors_avx512(), vect_set_loop_controls_directly(), vect_small_gap_p(), vect_supportable_dr_alignment(), vect_transform_loop(), vect_transform_loops(), vect_update_ivs_after_vectorizer_for_early_breaks(), vect_verify_full_masking(), vect_verify_full_masking_avx512(), vect_vf_for_cost(), vectorizable_induction(), vectorizable_load(), vectorizable_nonlinear_induction(), vectorizable_reduction(), vectorizable_simd_clone_call(), vectorizable_slp_permutation_1(), and vectorizable_store().

◆ LOOP_VINFO_VECTORIZABLE_P

| #define LOOP_VINFO_VECTORIZABLE_P | ( | L | ) |

Referenced by try_vectorize_loop_1(), vect_analyze_loop(), and vect_analyze_loop_2().

◆ LOOP_VINFO_VERSIONING_THRESHOLD

| #define LOOP_VINFO_VERSIONING_THRESHOLD | ( | L | ) |

Referenced by vect_analyze_loop(), vect_analyze_loop_2(), vect_loop_versioning(), and vect_transform_loop().

◆ MAX_INTERM_CVT_STEPS

| #define MAX_INTERM_CVT_STEPS 3 |

The maximum number of intermediate steps required in multi-step type conversion.

Referenced by supportable_narrowing_operation(), and supportable_widening_operation().
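To make the role of such a bound concrete, here is a minimal sketch of a capped multi-step widening plan. It is not the logic of `supportable_widening_operation()`. The helper `plan_widening`, the constant `kMaxIntermCvtSteps`, and the assumption that every step exactly doubles the element width are all hypothetical and chosen only for illustration. Only the value 3 is taken from the macro above.

```cpp
#include <cassert>
#include <optional>
#include <vector>

// Same value as the documented MAX_INTERM_CVT_STEPS; everything else in this
// sketch is a hypothetical illustration, not GCC code.
constexpr int kMaxIntermCvtSteps = 3;

// Plan a widening conversion from `from_bits` to `to_bits`, assuming each
// step doubles the element width. Returns the intermediate widths (excluding
// the source and the final target), or std::nullopt when the target cannot be
// reached by doubling or would need more than kMaxIntermCvtSteps
// intermediate widths.
std::optional<std::vector<int>> plan_widening(int from_bits, int to_bits) {
  if (from_bits <= 0 || to_bits < from_bits)
    return std::nullopt;

  std::vector<int> intermediates;
  int cur = from_bits;
  while (cur < to_bits) {
    const int next = cur * 2;
    if (next == to_bits)
      return intermediates;  // The last step lands exactly on the target.
    if (next > to_bits)
      return std::nullopt;   // Target is not reachable by doubling.
    if (static_cast<int>(intermediates.size()) >= kMaxIntermCvtSteps)
      return std::nullopt;   // Would exceed the allowed intermediate steps.
    intermediates.push_back(next);
    cur = next;
  }
  return intermediates;      // from_bits == to_bits: nothing to do.
}

// Small usage example exercising the bound.
inline void plan_widening_examples() {
  assert(plan_widening(8, 16)->empty());       // Direct, no intermediates.
  assert(plan_widening(8, 64)->size() == 2);   // Via 16 and 32.
  assert(plan_widening(8, 128)->size() == 3);  // Exactly at the limit.
  assert(!plan_widening(8, 256));              // Would need 4 intermediates.
}
```

A fixed cap like this lets a caller keep the intermediate types in a small, bounded container and give up early on conversions that would need too many steps.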

◆ MAX_VECTORIZATION_FACTOR

| #define MAX_VECTORIZATION_FACTOR INT_MAX |

Referenced by vect_analyze_loop_2(), vect_estimate_min_profitable_iters(), and vect_max_vf().

◆ PURE_SLP_STMT

| #define PURE_SLP_STMT | ( | S | ) |

Referenced by vec_slp_has_scalar_use(), vect_bb_slp_mark_live_stmts(), and vectorizable_live_operation().

◆ SET_DR_MISALIGNMENT

| #define SET_DR_MISALIGNMENT | ( | DR, VAL | ) |

Referenced by vect_compute_data_ref_alignment(), vect_enhance_data_refs_alignment(), and vect_update_misalignment_for_peel().

◆ SET_DR_TARGET_ALIGNMENT

| #define SET_DR_TARGET_ALIGNMENT | ( | DR, VAL | ) |

Referenced by vect_compute_data_ref_alignment().

◆ SLP_INSTANCE_KIND

| #define SLP_INSTANCE_KIND | ( | S | ) |

Referenced by vect_optimize_slp_pass::start_choosing_layouts(), vect_analyze_loop_2(), vect_analyze_slp(), vect_analyze_slp_instance(), vect_analyze_slp_reduc_chain(), vect_analyze_slp_reduction(), vect_analyze_slp_reduction_group(), vect_bb_slp_mark_live_stmts(), vect_build_slp_instance(), vect_slp_analyze_instance_alignment(), vect_slp_analyze_instance_dependence(), vect_slp_analyze_operations(), and vectorizable_live_operation().

◆ SLP_INSTANCE_LOADS

| #define SLP_INSTANCE_LOADS | ( | S | ) |

Referenced by vect_analyze_loop_2(), vect_analyze_slp_instance(), vect_analyze_slp_reduc_chain(), vect_analyze_slp_reduction(), vect_analyze_slp_reduction_group(), vect_build_slp_instance(), vect_free_slp_instance(), vect_gather_slp_loads(), vect_slp_analyze_instance_alignment(), and vect_slp_analyze_instance_dependence().

◆ SLP_INSTANCE_REMAIN_DEFS

| #define SLP_INSTANCE_REMAIN_DEFS | ( | S | ) |

◆ SLP_INSTANCE_ROOT_STMTS

| #define SLP_INSTANCE_ROOT_STMTS | ( | S | ) |

Referenced by vect_analyze_slp_instance(), vect_analyze_slp_reduc_chain(), vect_analyze_slp_reduction(), vect_analyze_slp_reduction_group(), vect_bb_vectorization_profitable_p(), vect_build_slp_instance(), vect_free_slp_instance(), vect_schedule_slp(), vect_slp_analyze_bb_1(), and vect_slp_analyze_operations().

◆ SLP_INSTANCE_TREE

| #define SLP_INSTANCE_TREE | ( | S | ) |

Access Functions.

Referenced by vect_optimize_slp_pass::build_vertices(), debug(), dot_slp_tree(), vect_optimize_slp_pass::start_choosing_layouts(), vect_analyze_loop_2(), vect_analyze_slp(), vect_analyze_slp_instance(), vect_analyze_slp_reduc_chain(), vect_analyze_slp_reduction(), vect_analyze_slp_reduction_group(), vect_bb_partition_graph(), vect_bb_slp_mark_live_stmts(), vect_bb_vectorization_profitable_p(), vect_build_slp_instance(), vect_free_slp_instance(), vect_gather_slp_loads(), vect_lower_load_permutations(), vect_make_slp_decision(), vect_match_slp_patterns(), vect_optimize_slp(), vect_schedule_slp(), vect_slp_analyze_bb_1(), vect_slp_analyze_instance_alignment(), vect_slp_analyze_instance_dependence(), vect_slp_analyze_operations(), vect_slp_convert_to_external(), vect_slp_region(), vectorizable_bb_reduc_epilogue(), and vectorizable_reduction().
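This page lists the accessor macros by name and parameter only, without their expansions. As a rough illustration of how single-argument field-accessor macros are commonly written and used, the sketch below defines a toy equivalent. The types `toy_node` and `toy_instance`, the `TOY_*` macros, and `toy_load_lanes` are hypothetical and do not reproduce the real definitions in the header.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-ins for an SLP node and an SLP instance.
struct toy_node {
  int lanes;
};

struct toy_instance {
  toy_node *root;
  std::vector<toy_node *> loads;
};

// Accessor macros in the style "NAME(S) names one field of S". The argument
// is parenthesized so that expressions such as `&inst` expand correctly.
#define TOY_INSTANCE_TREE(S) (S)->root
#define TOY_INSTANCE_LOADS(S) (S)->loads
#define TOY_TREE_LANES(S) (S)->lanes

// Because each macro expands to a member access, it can be read from,
// assigned to, or bound to a reference, unlike a getter returning by value.
inline int toy_load_lanes(toy_instance *inst) {
  int total = 0;
  for (toy_node *load : TOY_INSTANCE_LOADS(inst))
    total += TOY_TREE_LANES(load);
  return total;
}

// Small usage example: write through one macro, read through another.
inline int toy_accessor_example() {
  toy_node root{4};
  toy_node load{2};
  toy_instance inst{nullptr, {}};

  TOY_INSTANCE_TREE(&inst) = &root;        // Used as an lvalue.
  TOY_INSTANCE_LOADS(&inst).push_back(&load);

  assert(TOY_TREE_LANES(TOY_INSTANCE_TREE(&inst)) == 4);
  return toy_load_lanes(&inst);
}
```

Routing field access through macros like these means a field can later be renamed or moved by changing one definition, and each name becomes easy to search for, which is what the "Referenced by" lists on this page reflect.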

◆ SLP_TREE_CHILDREN

| #define SLP_TREE_CHILDREN | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), addsub_pattern::build(), complex_add_pattern::build(), complex_fms_pattern::build(), complex_mul_pattern::build(), vect_optimize_slp_pass::build_graph(), vect_optimize_slp_pass::build_vertices(), vect_optimize_slp_pass::change_vec_perm_layout(), check_load_store_for_partial_vectors(), compatible_complex_nodes_p(), vect_optimize_slp_pass::decide_masked_load_lanes(), dot_slp_tree(), get_load_store_type(), vect_optimize_slp_pass::get_result_with_layout(), vect_optimize_slp_pass::internal_node_cost(), linear_loads_p(), complex_add_pattern::matches(), complex_fms_pattern::matches(), complex_mul_pattern::matches(), vect_optimize_slp_pass::materialize(), optimize_load_redistribution(), optimize_load_redistribution_1(), addsub_pattern::recognize(), vect_optimize_slp_pass::start_choosing_layouts(), vect_analyze_slp_instance(), vect_analyze_slp_reduc_chain(), vect_bb_partition_graph_r(), vect_bb_slp_mark_live_stmts(), vect_bb_slp_mark_live_stmts(), vect_bb_slp_scalar_cost(), vect_build_combine_node(), vect_build_slp_store_interleaving(), vect_build_slp_tree_2(), vect_build_swap_evenodd_node(), vect_create_epilog_for_reduction(), vect_create_new_slp_node(), vect_create_new_slp_node(), vect_cse_slp_nodes(), vect_detect_pair_op(), vect_detect_pair_op(), vect_free_slp_tree(), vect_gather_slp_loads(), vect_get_gather_scatter_ops(), vect_get_slp_defs(), vect_get_vec_defs(), vect_is_emulated_mixed_dot_prod(), vect_is_simple_use(), vect_lower_load_permutations(), vect_mark_slp_stmts(), vect_mark_slp_stmts_relevant(), vect_match_slp_patterns_2(), vect_print_slp_graph(), vect_print_slp_tree(), vect_remove_slp_scalar_calls(), vect_schedule_scc(), vect_schedule_slp_node(), vect_slp_analyze_node_operations(), vect_slp_build_two_operator_nodes(), vect_slp_gather_vectorized_scalar_stmts(), vect_slp_prune_covered_roots(), vect_transform_cycle_phi(), vect_transform_reduction(), vect_update_slp_vf_for_node(), vect_validate_multiplication(), 
vectorizable_condition(), vectorizable_induction(), vectorizable_lane_reducing(), vectorizable_lc_phi(), vectorizable_load(), vectorizable_phi(), vectorizable_recurr(), vectorizable_reduction(), vectorizable_scan_store(), vectorizable_slp_permutation(), vectorizable_store(), vectorize_fold_left_reduction(), and _slp_tree::~_slp_tree().

◆ SLP_TREE_CODE

| #define SLP_TREE_CODE | ( | S | ) |

◆ SLP_TREE_DEF_TYPE

| #define SLP_TREE_DEF_TYPE | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), compatible_complex_nodes_p(), vect_optimize_slp_pass::create_partitions(), vect_optimize_slp_pass::decide_masked_load_lanes(), vect_optimize_slp_pass::get_result_with_layout(), vect_optimize_slp_pass::is_cfg_latch_edge(), linear_loads_p(), optimize_load_redistribution_1(), vect_analyze_loop_2(), vect_analyze_stmt(), vect_bb_partition_graph_r(), vect_bb_slp_mark_live_stmts(), vect_bb_slp_mark_live_stmts(), vect_bb_slp_scalar_cost(), vect_build_slp_tree(), vect_build_slp_tree_2(), vect_create_new_slp_node(), vect_create_new_slp_node(), vect_create_new_slp_node(), vect_cse_slp_nodes(), vect_gather_slp_loads(), vect_get_slp_scalar_def(), vect_is_simple_use(), vect_is_slp_load_node(), vect_mark_slp_stmts(), vect_mark_slp_stmts_relevant(), vect_maybe_update_slp_op_vectype(), vect_print_slp_tree(), vect_prologue_cost_for_slp(), vect_remove_slp_scalar_calls(), vect_schedule_scc(), vect_schedule_slp_node(), vect_slp_analyze_node_operations(), vect_slp_analyze_operations(), vect_slp_build_two_operator_nodes(), vect_slp_convert_to_external(), vect_slp_gather_vectorized_scalar_stmts(), vect_slp_prune_covered_roots(), vect_slp_tree_uniform_p(), vect_update_slp_vf_for_node(), vectorizable_phi(), vectorizable_reduction(), vectorizable_shift(), and vectorizable_slp_permutation_1().

◆ SLP_TREE_GS_BASE

| #define SLP_TREE_GS_BASE | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), get_load_store_type(), vect_build_slp_tree_2(), and vect_get_gather_scatter_ops().

◆ SLP_TREE_GS_SCALE

| #define SLP_TREE_GS_SCALE | ( | S | ) |

◆ SLP_TREE_LANE_PERMUTATION

| #define SLP_TREE_LANE_PERMUTATION | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), addsub_pattern::build(), complex_pattern::build(), vect_optimize_slp_pass::decide_masked_load_lanes(), vect_optimize_slp_pass::get_result_with_layout(), vect_optimize_slp_pass::internal_node_cost(), vect_optimize_slp_pass::materialize(), optimize_load_redistribution_1(), addsub_pattern::recognize(), vect_optimize_slp_pass::start_choosing_layouts(), vect_bb_slp_scalar_cost(), vect_build_combine_node(), vect_build_slp_store_interleaving(), vect_build_slp_tree_2(), vect_build_swap_evenodd_node(), vect_detect_pair_op(), vect_lower_load_permutations(), vect_print_slp_tree(), vect_slp_build_two_operator_nodes(), vectorizable_slp_permutation(), vectorizable_slp_permutation_1(), and _slp_tree::~_slp_tree().

◆ SLP_TREE_LANES

| #define SLP_TREE_LANES | ( | S | ) |

Referenced by vect_optimize_slp_pass::change_layout_cost(), check_load_store_for_partial_vectors(), check_scan_store(), vect_optimize_slp_pass::decide_masked_load_lanes(), get_load_store_type(), vect_optimize_slp_pass::get_result_with_layout(), get_vectype_for_scalar_type(), vect_optimize_slp_pass::internal_node_cost(), vect_optimize_slp_pass::is_compatible_layout(), optimize_load_redistribution_1(), vect_optimize_slp_pass::remove_redundant_permutations(), vect_optimize_slp_pass::start_choosing_layouts(), supportable_indirect_convert_operation(), vect_analyze_loop_2(), vect_analyze_slp(), vect_analyze_slp_instance(), vect_analyze_slp_reduc_chain(), vect_bb_slp_scalar_cost(), vect_bb_vectorization_profitable_p(), vect_build_combine_node(), vect_build_slp_store_interleaving(), vect_build_slp_tree_2(), vect_build_swap_evenodd_node(), vect_create_epilog_for_reduction(), vect_create_new_slp_node(), vect_create_new_slp_node(), vect_get_num_copies(), vect_lower_load_permutations(), vect_maybe_update_slp_op_vectype(), vect_slp_build_two_operator_nodes(), vect_slp_convert_to_external(), vect_transform_cycle_phi(), vect_update_slp_vf_for_node(), vectorizable_call(), vectorizable_condition(), vectorizable_conversion(), vectorizable_induction(), vectorizable_live_operation(), vectorizable_live_operation_1(), vectorizable_load(), vectorizable_nonlinear_induction(), vectorizable_recurr(), vectorizable_reduction(), vectorizable_shift(), vectorizable_simd_clone_call(), vectorizable_slp_permutation_1(), vectorizable_store(), and vllp_cmp().

◆ SLP_TREE_LOAD_PERMUTATION

| #define SLP_TREE_LOAD_PERMUTATION | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), get_load_store_type(), has_consecutive_load_permutation(), vect_optimize_slp_pass::internal_node_cost(), linear_loads_p(), vect_optimize_slp_pass::materialize(), vect_optimize_slp_pass::remove_redundant_permutations(), vect_optimize_slp_pass::start_choosing_layouts(), vect_analyze_slp(), vect_build_slp_tree_2(), vect_load_perm_consecutive_p(), vect_lower_load_permutations(), vect_print_slp_tree(), vect_slp_convert_to_external(), vect_transform_slp_perm_load(), vectorizable_load(), vllp_cmp(), and _slp_tree::~_slp_tree().

◆ SLP_TREE_PERMUTE_P

| #define SLP_TREE_PERMUTE_P | ( | S | ) |

Referenced by vect_optimize_slp_pass::backward_cost(), vect_optimize_slp_pass::decide_masked_load_lanes(), vect_optimize_slp_pass::dump(), vect_optimize_slp_pass::forward_pass(), vect_optimize_slp_pass::get_result_with_layout(), vect_optimize_slp_pass::internal_node_cost(), vect_optimize_slp_pass::is_cfg_latch_edge(), linear_loads_p(), vect_optimize_slp_pass::materialize(), optimize_load_redistribution_1(), addsub_pattern::recognize(), vect_optimize_slp_pass::start_choosing_layouts(), vect_analyze_stmt(), vect_bb_slp_scalar_cost(), vect_detect_pair_op(), vect_gather_slp_loads(), vect_is_simple_use(), vect_is_slp_load_node(), vect_print_slp_tree(), vect_schedule_scc(), vect_schedule_slp_node(), vect_slp_analyze_node_operations_1(), vect_transform_stmt(), and vect_update_slp_vf_for_node().

◆ SLP_TREE_REDUC_IDX

| #define SLP_TREE_REDUC_IDX | ( | S | ) |

Referenced by vect_analyze_slp_reduc_chain(), vect_build_slp_tree_2(), vect_create_epilog_for_reduction(), vect_is_reduction(), vect_transform_reduction(), vectorizable_call(), vectorizable_condition(), vectorizable_operation(), and vectorizable_reduction().

◆ SLP_TREE_REF_COUNT

| #define SLP_TREE_REF_COUNT | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), addsub_pattern::build(), complex_add_pattern::build(), complex_fms_pattern::build(), complex_mul_pattern::build(), vect_optimize_slp_pass::decide_masked_load_lanes(), optimize_load_redistribution(), optimize_load_redistribution_1(), vect_analyze_slp_reduc_chain(), vect_build_combine_node(), vect_build_slp_tree(), vect_build_slp_tree_2(), vect_build_swap_evenodd_node(), vect_free_slp_tree(), vect_print_slp_tree(), and vect_slp_build_two_operator_nodes().

◆ SLP_TREE_REPRESENTATIVE

| #define SLP_TREE_REPRESENTATIVE | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), addsub_pattern::build(), complex_pattern::build(), vect_optimize_slp_pass::build_vertices(), check_load_store_for_partial_vectors(), compatible_complex_nodes_p(), vect_optimize_slp_pass::containing_loop(), vect_optimize_slp_pass::decide_masked_load_lanes(), vect_optimize_slp_pass::dump(), vect_optimize_slp_pass::get_result_with_layout(), vect_optimize_slp_pass::internal_node_cost(), vect_optimize_slp_pass::is_cfg_latch_edge(), linear_loads_p(), record_stmt_cost(), vect_optimize_slp_pass::start_choosing_layouts(), vect_analyze_loop_2(), vect_analyze_slp(), vect_analyze_slp_reduc_chain(), vect_analyze_stmt(), vect_build_combine_node(), vect_build_slp_store_interleaving(), vect_build_slp_tree_2(), vect_build_swap_evenodd_node(), vect_create_epilog_for_reduction(), vect_create_new_slp_node(), vect_free_slp_tree(), vect_gather_slp_loads(), vect_is_emulated_mixed_dot_prod(), vect_is_simple_use(), vect_is_slp_load_node(), vect_lower_load_permutations(), vect_match_expression_p(), vect_model_reduction_cost(), vect_pattern_validate_optab(), vect_print_slp_tree(), vect_schedule_scc(), vect_schedule_slp(), vect_schedule_slp_node(), vect_slp_analyze_operations(), vect_slp_build_two_operator_nodes(), vect_slp_node_weight(), vectorizable_live_operation(), and vectorizable_reduction().

◆ SLP_TREE_SCALAR_OPS

| #define SLP_TREE_SCALAR_OPS | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), compatible_complex_nodes_p(), vect_optimize_slp_pass::get_result_with_layout(), vect_bb_slp_mark_live_stmts(), vect_create_new_slp_node(), vect_get_slp_scalar_def(), vect_is_simple_use(), vect_print_slp_tree(), vect_prologue_cost_for_slp(), vect_schedule_slp_node(), vect_slp_analyze_node_operations(), vect_slp_convert_to_external(), vect_slp_gather_vectorized_scalar_stmts(), vect_slp_tree_uniform_p(), vectorizable_shift(), vectorizable_slp_permutation_1(), and _slp_tree::~_slp_tree().

◆ SLP_TREE_SCALAR_STMTS

| #define SLP_TREE_SCALAR_STMTS | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), can_vectorize_live_stmts(), get_load_store_type(), vect_optimize_slp_pass::get_result_with_layout(), _slp_instance::location(), vect_optimize_slp_pass::materialize(), optimize_load_redistribution_1(), vect_optimize_slp_pass::remove_redundant_permutations(), vect_analyze_loop_2(), vect_analyze_slp(), vect_analyze_slp_instance(), vect_analyze_slp_reduc_chain(), vect_analyze_slp_reduction(), vect_analyze_slp_reduction_group(), vect_bb_partition_graph_r(), vect_bb_slp_mark_live_stmts(), vect_bb_slp_scalar_cost(), vect_build_slp_instance(), vect_build_slp_store_interleaving(), vect_build_slp_tree(), vect_build_slp_tree_2(), vect_create_epilog_for_reduction(), vect_create_new_slp_node(), vect_create_new_slp_node(), vect_cse_slp_nodes(), vect_find_first_scalar_stmt_in_slp(), vect_find_last_scalar_stmt_in_slp(), vect_get_slp_scalar_def(), vect_lower_load_permutations(), vect_lower_load_permutations(), vect_mark_slp_stmts(), vect_mark_slp_stmts_relevant(), vect_print_slp_tree(), vect_remove_slp_scalar_calls(), vect_schedule_slp(), vect_slp_analyze_bb_1(), vect_slp_analyze_instance_dependence(), vect_slp_analyze_load_dependences(), vect_slp_analyze_node_alignment(), vect_slp_analyze_node_operations(), vect_slp_analyze_node_operations_1(), vect_slp_analyze_operations(), vect_slp_analyze_store_dependences(), vect_slp_convert_to_external(), vect_slp_gather_vectorized_scalar_stmts(), vect_slp_prune_covered_roots(), vect_transform_cycle_phi(), vect_transform_slp_perm_load_1(), vectorizable_induction(), vectorizable_load(), vectorizable_recurr(), vectorizable_scan_store(), vectorizable_shift(), vectorizable_store(), vectorize_fold_left_reduction(), vllp_cmp(), and _slp_tree::~_slp_tree().

◆ SLP_TREE_TYPE

| #define SLP_TREE_TYPE | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), SLP_TREE_MEMORY_ACCESS_TYPE(), vect_analyze_stmt(), vect_schedule_slp_node(), vect_slp_analyze_node_operations(), vect_slp_analyze_node_operations_1(), vect_slp_analyze_operations(), vect_transform_stmt(), vectorizable_assignment(), vectorizable_bswap(), vectorizable_call(), vectorizable_comparison(), vectorizable_condition(), vectorizable_conversion(), vectorizable_induction(), vectorizable_lane_reducing(), vectorizable_lc_phi(), vectorizable_load(), vectorizable_nonlinear_induction(), vectorizable_operation(), vectorizable_phi(), vectorizable_recurr(), vectorizable_reduction(), vectorizable_shift(), vectorizable_simd_clone_call(), and vectorizable_store().

◆ SLP_TREE_VEC_DEFS

| #define SLP_TREE_VEC_DEFS | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), vect_optimize_slp_pass::create_partitions(), vect_optimize_slp_pass::get_result_with_layout(), vect_build_slp_tree_2(), vect_create_constant_vectors(), vect_create_epilog_for_reduction(), vect_get_slp_defs(), vect_get_slp_vect_def(), vect_prologue_cost_for_slp(), vect_schedule_scc(), vect_schedule_slp_node(), vect_transform_slp_perm_load_1(), vectorizable_early_exit(), vectorizable_induction(), vectorizable_live_operation(), vectorizable_phi(), vectorizable_simd_clone_call(), vectorizable_slp_permutation_1(), vectorizable_store(), vectorize_slp_instance_root_stmt(), and _slp_tree::~_slp_tree().

◆ SLP_TREE_VECTYPE

| #define SLP_TREE_VECTYPE | ( | S | ) |

Referenced by _slp_tree::_slp_tree(), addsub_pattern::build(), complex_pattern::build(), check_load_store_for_partial_vectors(), vect_optimize_slp_pass::decide_masked_load_lanes(), get_load_store_type(), vect_optimize_slp_pass::get_result_with_layout(), complex_mul_pattern::matches(), addsub_pattern::recognize(), record_stmt_cost(), vect_optimize_slp_pass::start_choosing_layouts(), vect_add_slp_permutation(), vect_analyze_loop_2(), vect_analyze_slp(), vect_analyze_slp_instance(), vect_analyze_slp_reduc_chain(), vect_analyze_stmt(), vect_bb_slp_scalar_cost(), vect_build_combine_node(), vect_build_slp_store_interleaving(), vect_build_slp_tree_2(), vect_build_swap_evenodd_node(), vect_check_scalar_mask(), vect_check_store_rhs(), vect_create_constant_vectors(), vect_get_load_cost(), vect_get_num_copies(), vect_get_store_cost(), vect_is_emulated_mixed_dot_prod(), vect_is_simple_use(), vect_lower_load_permutations(), vect_make_slp_decision(), vect_maybe_update_slp_op_vectype(), vect_model_reduction_cost(), vect_pattern_validate_optab(), vect_print_slp_tree(), vect_prologue_cost_for_slp(), vect_schedule_slp_node(), vect_slp_analyze_instance_dependence(), vect_slp_analyze_node_alignment(), vect_slp_analyze_node_operations(), vect_slp_analyze_operations(), vect_slp_build_two_operator_nodes(), vect_slp_convert_to_external(), vect_slp_region(), vect_transform_cycle_phi(), vect_transform_lc_phi(), vect_transform_reduction(), vect_transform_slp_perm_load_1(), vectorizable_assignment(), vectorizable_bb_reduc_epilogue(), vectorizable_bswap(), vectorizable_call(), vectorizable_comparison(), vectorizable_condition(), vectorizable_conversion(), vectorizable_early_exit(), vectorizable_induction(), vectorizable_lane_reducing(), vectorizable_lc_phi(), vectorizable_live_operation(), vectorizable_load(), vectorizable_nonlinear_induction(), vectorizable_operation(), vectorizable_phi(), vectorizable_recurr(), vectorizable_reduction(), vectorizable_scan_store(), vectorizable_shift(), 
vectorizable_simd_clone_call(), vectorizable_slp_permutation(), vectorizable_slp_permutation_1(), vectorizable_store(), and vectorize_fold_left_reduction().

◆ STMT_SLP_TYPE

| #define STMT_SLP_TYPE | ( | S | ) |

◆ STMT_VINFO_DATA_REF

| #define STMT_VINFO_DATA_REF | ( | S | ) |

Referenced by bump_vector_ptr(), compatible_complex_nodes_p(), exist_non_indexing_operands_for_use_p(), get_group_alias_ptr_type(), vect_optimize_slp_pass::internal_node_cost(), linear_loads_p(), vect_optimize_slp_pass::start_choosing_layouts(), vect_analyze_early_break_dependences(), vect_analyze_group_access_1(), vect_analyze_slp_instance(), vect_bb_slp_scalar_cost(), vect_build_slp_tree_1(), vect_build_slp_tree_2(), vect_check_gather_scatter(), vect_compute_single_scalar_iteration_cost(), vect_cond_store_pattern_same_ref(), vect_create_cond_for_align_checks(), vect_create_data_ref_ptr(), vect_describe_gather_scatter_call(), vect_gather_slp_loads(), vect_get_and_check_slp_defs(), vect_get_strided_load_store_ops(), vect_get_vector_types_for_stmt(), vect_is_extending_load(), vect_is_slp_load_node(), vect_is_store_elt_extraction(), vect_preserves_scalar_order_p(), vect_recog_bool_pattern(), vect_recog_cond_store_pattern(), vect_recog_gather_scatter_pattern(), vect_recog_mask_conversion_pattern(), vect_schedule_slp(), vect_schedule_slp_node(), vect_slp_analyze_instance_dependence(), vect_slp_analyze_load_dependences(), vect_slp_analyze_store_dependences(), vect_slp_prefer_store_lanes_p(), vect_stmt_relevant_p(), vectorizable_assignment(), vectorizable_load(), vectorizable_operation(), and vectorizable_store().

◆ STMT_VINFO_DEF_TYPE

| #define STMT_VINFO_DEF_TYPE | ( | S | ) |

Referenced by integer_type_for_mask(), iv_phi_p(), vec_info::new_stmt_vec_info(), parloops_valid_reduction_input_p(), process_use(), vect_analyze_loop_2(), vect_analyze_scalar_cycles_1(), vect_analyze_slp(), vect_analyze_slp_reduc_chain(), vect_analyze_slp_reduction(), vect_analyze_slp_reductions(), vect_analyze_stmt(), vect_build_slp_tree_2(), vect_compute_single_scalar_iteration_cost(), vect_create_epilog_for_reduction(), vect_create_loop_vinfo(), vect_get_internal_def(), vect_init_pattern_stmt(), vect_is_simple_use(), vect_mark_pattern_stmts(), vect_mark_stmts_to_be_vectorized(), vect_recog_vector_vector_shift_pattern(), vect_schedule_scc(), vect_transform_cycle_phi(), vectorizable_assignment(), vectorizable_call(), vectorizable_comparison(), vectorizable_condition(), vectorizable_conversion(), vectorizable_early_exit(), vectorizable_induction(), vectorizable_lc_phi(), vectorizable_load(), vectorizable_operation(), vectorizable_phi(), vectorizable_recurr(), vectorizable_reduction(), vectorizable_shift(), vectorizable_simd_clone_call(), and vectorizable_store().

◆ STMT_VINFO_DR_BASE_ADDRESS

| #define STMT_VINFO_DR_BASE_ADDRESS | ( | S | ) |

Referenced by vect_analyze_data_refs().

◆ STMT_VINFO_DR_BASE_ALIGNMENT

| #define STMT_VINFO_DR_BASE_ALIGNMENT | ( | S | ) |

Referenced by vect_analyze_data_refs().

◆ STMT_VINFO_DR_BASE_MISALIGNMENT

| #define STMT_VINFO_DR_BASE_MISALIGNMENT | ( | S | ) |

Referenced by vect_analyze_data_refs().

◆ STMT_VINFO_DR_INFO

| #define STMT_VINFO_DR_INFO | ( | S | ) |

Referenced by check_load_store_for_partial_vectors(), check_scan_store(), compare_step_with_zero(), dr_misalignment(), dr_safe_speculative_read_required(), dr_set_safe_speculative_read_required(), dr_target_alignment(), ensure_base_align(), get_load_store_type(), get_negative_load_store_type(), vec_info::lookup_dr(), vec_info::move_dr(), vect_analyze_data_ref_accesses(), vect_analyze_early_break_dependences(), vect_create_addr_base_for_vector_ref(), vect_create_data_ref_ptr(), vect_enhance_data_refs_alignment(), vect_prune_runtime_alias_test_list(), vect_setup_realignment(), vect_slp_analyze_node_alignment(), vect_truncate_gather_scatter_offset(), vectorizable_load(), vectorizable_scan_store(), and vectorizable_store().

◆ STMT_VINFO_DR_INIT

| #define STMT_VINFO_DR_INIT | ( | S | ) |

Referenced by vect_analyze_data_refs().

◆ STMT_VINFO_DR_OFFSET

| #define STMT_VINFO_DR_OFFSET | ( | S | ) |

Referenced by vect_analyze_data_refs().

◆ STMT_VINFO_DR_OFFSET_ALIGNMENT

| #define STMT_VINFO_DR_OFFSET_ALIGNMENT | ( | S | ) |

Referenced by vect_analyze_data_refs().

◆ STMT_VINFO_DR_STEP

| #define STMT_VINFO_DR_STEP | ( | S | ) |

Referenced by vect_analyze_data_ref_access(), vect_analyze_data_refs(), and vect_setup_realignment().

◆ STMT_VINFO_DR_STEP_ALIGNMENT

| #define STMT_VINFO_DR_STEP_ALIGNMENT | ( | S | ) |

Referenced by vect_analyze_data_refs().

◆ STMT_VINFO_DR_WRT_VEC_LOOP

| #define STMT_VINFO_DR_WRT_VEC_LOOP | ( | S | ) |

Referenced by vec_info::move_dr(), vect_analyze_data_refs(), vect_dr_behavior(), and vect_record_base_alignments().

◆ STMT_VINFO_GATHER_SCATTER_P

| #define STMT_VINFO_GATHER_SCATTER_P | ( | S | ) |

Referenced by check_load_store_for_partial_vectors(), get_load_store_type(), vec_info::move_dr(), record_stmt_cost(), vect_analyze_data_ref_access(), vect_analyze_data_ref_accesses(), vect_analyze_data_ref_dependence(), vect_analyze_data_refs(), vect_analyze_possibly_independent_ddr(), vect_analyze_slp_instance(), vect_build_slp_tree_1(), vect_build_slp_tree_2(), vect_check_store_rhs(), vect_compute_data_ref_alignment(), vect_get_and_check_slp_defs(), vect_mark_stmts_to_be_vectorized(), vect_recog_gather_scatter_pattern(), vect_record_base_alignments(), vect_relevant_for_alignment_p(), vect_update_inits_of_drs(), vectorizable_load(), and vectorizable_store().

◆ STMT_VINFO_GROUPED_ACCESS

| #define STMT_VINFO_GROUPED_ACCESS | ( | S | ) |

Referenced by check_scan_store(), vect_optimize_slp_pass::decide_masked_load_lanes(), dr_misalignment(), dr_safe_speculative_read_required(), dr_set_safe_speculative_read_required(), dr_target_alignment(), ensure_base_align(), get_load_store_type(), vect_optimize_slp_pass::internal_node_cost(), vect_optimize_slp_pass::remove_redundant_permutations(), vect_optimize_slp_pass::start_choosing_layouts(), vect_analyze_data_ref_access(), vect_analyze_data_refs_alignment(), vect_analyze_loop_2(), vect_analyze_slp(), vect_build_slp_tree_1(), vect_build_slp_tree_2(), vect_compute_data_ref_alignment(), vect_enhance_data_refs_alignment(), vect_fixup_store_groups_with_patterns(), vect_is_slp_load_node(), vect_lower_load_permutations(), vect_preserves_scalar_order_p(), vect_relevant_for_alignment_p(), vect_slp_analyze_data_ref_dependence(), vect_slp_analyze_instance_dependence(), vect_slp_analyze_load_dependences(), vect_supportable_dr_alignment(), vect_transform_slp_perm_load_1(), vector_alignment_reachable_p(), vectorizable_load(), vectorizable_store(), and vllp_cmp().

◆ STMT_VINFO_IN_PATTERN_P

| #define STMT_VINFO_IN_PATTERN_P | ( | S | ) |

Referenced by vect_analyze_early_break_dependences(), vect_analyze_loop_2(), vect_bb_slp_mark_live_stmts(), vect_bb_slp_scalar_cost(), vect_fixup_store_groups_with_patterns(), vect_free_slp_tree(), vect_mark_relevant(), vect_pattern_recog_1(), vect_set_pattern_stmt(), vect_stmt_to_vectorize(), and vectorizable_reduction().

◆ STMT_VINFO_LIVE_P

| #define STMT_VINFO_LIVE_P | ( | S | ) |

Referenced by can_vectorize_live_stmts(), vect_analyze_slp(), vect_analyze_slp_reductions(), vect_analyze_stmt(), vect_bb_slp_mark_live_stmts(), vect_bb_slp_scalar_cost(), vect_compute_single_scalar_iteration_cost(), vect_mark_relevant(), vect_print_slp_tree(), vect_slp_analyze_node_operations_1(), vect_transform_loop(), vect_transform_stmt(), vectorizable_induction(), vectorizable_live_operation(), and vectorizable_reduction().

◆ STMT_VINFO_LOOP_PHI_EVOLUTION_BASE_UNCHANGED

| #define STMT_VINFO_LOOP_PHI_EVOLUTION_BASE_UNCHANGED | ( | S | ) |

Referenced by is_nonwrapping_integer_induction(), vect_analyze_scalar_cycles_1(), vect_is_nonlinear_iv_evolution(), vect_is_simple_iv_evolution(), and vectorizable_reduction().

◆ STMT_VINFO_LOOP_PHI_EVOLUTION_PART

| #define STMT_VINFO_LOOP_PHI_EVOLUTION_PART | ( | S | ) |

Referenced by is_nonwrapping_integer_induction(), vect_analyze_scalar_cycles_1(), vect_can_advance_ivs_p(), vect_can_peel_nonlinear_iv_p(), vect_is_nonlinear_iv_evolution(), vect_is_simple_iv_evolution(), vect_update_ivs_after_vectorizer(), vectorizable_induction(), vectorizable_nonlinear_induction(), and vectorizable_reduction().

◆ STMT_VINFO_LOOP_PHI_EVOLUTION_TYPE

| #define STMT_VINFO_LOOP_PHI_EVOLUTION_TYPE | ( | S | ) |

Referenced by vect_analyze_scalar_cycles_1(), vect_can_advance_ivs_p(), vect_can_peel_nonlinear_iv_p(), vect_is_nonlinear_iv_evolution(), vect_update_ivs_after_vectorizer(), vectorizable_induction(), and vectorizable_nonlinear_induction().

◆ STMT_VINFO_MIN_NEG_DIST

| #define STMT_VINFO_MIN_NEG_DIST | ( | S | ) |

Referenced by vect_analyze_data_ref_dependence(), and vectorizable_load().

◆ STMT_VINFO_PATTERN_DEF_SEQ

| #define STMT_VINFO_PATTERN_DEF_SEQ | ( | S | ) |

Referenced by append_pattern_def_seq(), vect_analyze_early_break_dependences(), vect_analyze_loop_2(), vect_mark_pattern_stmts(), vect_pattern_recog_1(), vect_recog_popcount_clz_ctz_ffs_pattern(), and vect_split_statement().

◆ STMT_VINFO_REDUC_CODE

| #define STMT_VINFO_REDUC_CODE | ( | S | ) |

Referenced by vec_info::new_stmt_vec_info(), vect_analyze_slp_reduc_chain(), vect_build_slp_tree_2(), and vect_is_simple_reduction().

◆ STMT_VINFO_REDUC_DEF

| #define STMT_VINFO_REDUC_DEF | ( | S | ) |

Referenced by addsub_pattern::build(), complex_pattern::build(), vec_info::new_stmt_vec_info(), vect_analyze_loop_2(), vect_analyze_scalar_cycles_1(), vect_analyze_slp_reduc_chain(), vect_build_slp_tree_2(), vect_mark_pattern_stmts(), vectorizable_condition(), and vectorizable_conversion().

◆ STMT_VINFO_REDUC_IDX

| #define STMT_VINFO_REDUC_IDX | ( | S | ) |

Referenced by vec_info::new_stmt_vec_info(), vect_analyze_slp(), vect_analyze_slp_reduc_chain(), vect_build_slp_tree_1(), vect_build_slp_tree_2(), vect_get_and_check_slp_defs(), vect_is_reduction(), vect_is_simple_reduction(), vect_mark_pattern_stmts(), vect_reassociating_reduction_p(), and vectorizable_lane_reducing().

◆ STMT_VINFO_REDUC_TYPE

| #define STMT_VINFO_REDUC_TYPE | ( | S | ) |

Referenced by vec_info::new_stmt_vec_info(), vect_analyze_slp_reduc_chain(), vect_build_slp_tree_2(), and vect_is_simple_reduction().

◆ STMT_VINFO_REDUC_VECTYPE_IN

| #define STMT_VINFO_REDUC_VECTYPE_IN | ( | S | ) |

◆ STMT_VINFO_RELATED_STMT

| #define STMT_VINFO_RELATED_STMT | ( | S | ) |

Referenced by vec_info::add_pattern_stmt(), update_epilogue_loop_vinfo(), vect_analyze_loop_2(), vect_bb_slp_mark_live_stmts(), vect_fixup_store_groups_with_patterns(), vect_get_and_check_slp_defs(), vect_init_pattern_stmt(), vect_look_through_possible_promotion(), vect_mark_pattern_stmts(), vect_mark_relevant(), vect_orig_stmt(), vect_set_pattern_stmt(), vect_split_statement(), vect_stmt_to_vectorize(), and vectorizable_reduction().

◆ STMT_VINFO_RELEVANT

| #define STMT_VINFO_RELEVANT | ( | S | ) |

◆ STMT_VINFO_RELEVANT_P

| #define STMT_VINFO_RELEVANT_P | ( | S | ) |

Referenced by vect_analyze_slp_reductions(), vect_analyze_stmt(), vect_compute_single_scalar_iteration_cost(), vect_relevant_for_alignment_p(), vect_transform_loop(), vectorizable_assignment(), vectorizable_call(), vectorizable_comparison(), vectorizable_comparison_1(), vectorizable_condition(), vectorizable_conversion(), vectorizable_early_exit(), vectorizable_induction(), vectorizable_live_operation(), vectorizable_load(), vectorizable_operation(), vectorizable_shift(), vectorizable_simd_clone_call(), and vectorizable_store().

◆ STMT_VINFO_SIMD_LANE_ACCESS_P

| #define STMT_VINFO_SIMD_LANE_ACCESS_P | ( | S | ) |

Referenced by check_scan_store(), vec_info::move_dr(), vect_analyze_data_ref_accesses(), vect_analyze_data_refs(), vect_update_inits_of_drs(), vectorizable_load(), vectorizable_scan_store(), and vectorizable_store().

◆ STMT_VINFO_SLP_VECT_ONLY

| #define STMT_VINFO_SLP_VECT_ONLY | ( | S | ) |

Referenced by vect_optimize_slp_pass::decide_masked_load_lanes(), vec_info::new_stmt_vec_info(), vect_analyze_data_ref_accesses(), vect_analyze_slp_instance(), and vect_build_slp_tree_2().

◆ STMT_VINFO_SLP_VECT_ONLY_PATTERN

| #define STMT_VINFO_SLP_VECT_ONLY_PATTERN | ( | S | ) |

Referenced by addsub_pattern::build(), complex_pattern::build(), vec_info::new_stmt_vec_info(), vect_analyze_loop_2(), and vect_free_slp_tree().

◆ STMT_VINFO_STMT

| #define STMT_VINFO_STMT | ( | S | ) |

Access Functions.

Referenced by complex_pattern::build(), check_scan_store(), compatible_complex_nodes_p(), vect_optimize_slp_pass::decide_masked_load_lanes(), dump_stmt_cost(), get_load_store_type(), vect_optimize_slp_pass::start_choosing_layouts(), stmt_in_inner_loop_p(), update_epilogue_loop_vinfo(), vect_analyze_data_ref_accesses(), vect_analyze_early_break_dependences(), vect_analyze_slp(), vect_analyze_slp_reduc_chain(), vect_analyze_slp_reductions(), vect_build_slp_tree_2(), vect_determine_mask_precision(), vect_get_slp_scalar_def(), vect_match_expression_p(), vect_pattern_validate_optab(), vect_recog_abd_pattern(), vect_recog_absolute_difference(), vect_recog_bitfield_ref_pattern(), vect_recog_build_binary_gimple_stmt(), vect_recog_cond_store_pattern(), vect_recog_gcond_pattern(), vect_recog_mod_var_pattern(), vect_recog_sat_add_pattern(), vect_recog_sat_sub_pattern(), vect_recog_sat_trunc_pattern(), vect_recog_widen_abd_pattern(), vect_slp_analyze_instance_dependence(), vect_stmt_relevant_p(), vectorizable_call(), vectorizable_comparison_1(), vectorizable_early_exit(), vectorizable_load(), vectorizable_reduction(), vectorizable_scan_store(), vectorize_fold_left_reduction(), vectorize_slp_instance_root_stmt(), and vllp_cmp().

◆ STMT_VINFO_STRIDED_P

| #define STMT_VINFO_STRIDED_P | ( | S | ) |

Referenced by vect_optimize_slp_pass::decide_masked_load_lanes(), get_load_store_type(), vec_info::move_dr(), vect_analyze_data_ref_access(), vect_analyze_data_ref_dependence(), vect_analyze_data_refs(), vect_analyze_group_access_1(), vect_analyze_slp(), vect_analyze_slp_instance(), vect_build_slp_tree_2(), vect_enhance_data_refs_alignment(), vect_lower_load_permutations(), and vect_relevant_for_alignment_p().

◆ STMT_VINFO_VECTORIZABLE

| #define STMT_VINFO_VECTORIZABLE | ( | S | ) |

Referenced by vec_info::new_stmt_vec_info(), vect_analyze_data_ref_accesses(), vect_analyze_data_ref_dependence(), vect_analyze_data_refs(), vect_analyze_data_refs_alignment(), vect_analyze_group_access_1(), vect_build_slp_tree_1(), vect_build_slp_tree_2(), vect_determine_precisions(), vect_pattern_recog(), and vect_record_base_alignments().

◆ STMT_VINFO_VECTYPE

| #define STMT_VINFO_VECTYPE | ( | S | ) |

Referenced by append_pattern_def_seq(), addsub_pattern::build(), complex_pattern::build(), get_misalign_in_elems(), record_stmt_cost(), vect_analyze_data_refs(), vect_analyze_data_refs_alignment(), vect_analyze_loop_2(), vect_analyze_stmt(), vect_create_cond_for_align_checks(), vect_describe_gather_scatter_call(), vect_dr_misalign_for_aligned_access(), vect_enhance_data_refs_alignment(), vect_gen_prolog_loop_niters(), vect_get_load_cost(), vect_get_peeling_costs_all_drs(), vect_get_store_cost(), vect_get_vector_types_for_stmt(), vect_init_pattern_stmt(), vect_mark_stmts_to_be_vectorized(), vect_peeling_supportable(), vect_recog_bit_insert_pattern(), vect_recog_bitfield_ref_pattern(), vect_recog_gather_scatter_pattern(), vect_recog_popcount_clz_ctz_ffs_pattern(), vect_transform_stmt(), vect_update_misalignment_for_peel(), vect_vfa_access_size(), and vector_alignment_reachable_p().

◆ VECT_MAX_COST

| #define VECT_MAX_COST 1000 |

Referenced by vect_get_load_cost(), vect_get_store_cost(), and vect_peeling_hash_insert().

◆ VECT_REDUC_INFO_CODE

| #define VECT_REDUC_INFO_CODE | ( | I | ) |

◆ VECT_REDUC_INFO_DEF_TYPE

| #define VECT_REDUC_INFO_DEF_TYPE | ( | I | ) |

Referenced by vect_analyze_slp_reduc_chain(), vect_build_slp_tree_2(), and vect_transform_reduction().

◆ VECT_REDUC_INFO_EPILOGUE_ADJUSTMENT

| #define VECT_REDUC_INFO_EPILOGUE_ADJUSTMENT | ( | I | ) |

Referenced by vect_create_epilog_for_reduction(), vect_find_reusable_accumulator(), and vect_transform_cycle_phi().

◆ VECT_REDUC_INFO_FN

| #define VECT_REDUC_INFO_FN | ( | I | ) |

Referenced by vect_analyze_slp_reduc_chain(), vect_build_slp_tree_2(), vect_create_epilog_for_reduction(), vect_reduction_update_partial_vector_usage(), vect_transform_reduction(), and vectorizable_reduction().

◆ VECT_REDUC_INFO_FORCE_SINGLE_CYCLE

| #define VECT_REDUC_INFO_FORCE_SINGLE_CYCLE | ( | I | ) |

Referenced by vect_transform_cycle_phi(), vect_transform_reduction(), and vectorizable_reduction().

◆ VECT_REDUC_INFO_INDUC_COND_INITIAL_VAL

| #define VECT_REDUC_INFO_INDUC_COND_INITIAL_VAL | ( | I | ) |

Referenced by vect_create_epilog_for_reduction(), vect_transform_cycle_phi(), and vectorizable_reduction().

◆ VECT_REDUC_INFO_INITIAL_VALUES

| #define VECT_REDUC_INFO_INITIAL_VALUES | ( | I | ) |

Referenced by get_initial_defs_for_reduction(), vect_create_epilog_for_reduction(), vect_find_reusable_accumulator(), and vect_transform_cycle_phi().

◆ VECT_REDUC_INFO_NEUTRAL_OP

| #define VECT_REDUC_INFO_NEUTRAL_OP | ( | I | ) |

Referenced by vect_find_reusable_accumulator(), vect_transform_cycle_phi(), and vectorizable_reduction().

◆ VECT_REDUC_INFO_RESULT_POS

| #define VECT_REDUC_INFO_RESULT_POS | ( | I | ) |

Referenced by vect_transform_reduction().

◆ VECT_REDUC_INFO_REUSED_ACCUMULATOR

| #define VECT_REDUC_INFO_REUSED_ACCUMULATOR | ( | I | ) |

Referenced by vect_create_epilog_for_reduction(), vect_emit_reduction_init_stmts(), vect_find_reusable_accumulator(), and vect_transform_cycle_phi().

◆ VECT_REDUC_INFO_SCALAR_RESULTS

| #define VECT_REDUC_INFO_SCALAR_RESULTS | ( | I | ) |

Referenced by vect_create_epilog_for_reduction(), and vect_find_reusable_accumulator().

◆ VECT_REDUC_INFO_TYPE

| #define VECT_REDUC_INFO_TYPE | ( | I | ) |

Referenced by vect_analyze_slp_reduc_chain(), vect_build_slp_tree_2(), vect_create_epilog_for_reduction(), vect_find_reusable_accumulator(), vect_reduc_type(), vect_reduction_update_partial_vector_usage(), vect_transform_cycle_phi(), vect_transform_reduction(), vectorizable_condition(), vectorizable_lane_reducing(), vectorizable_live_operation(), and vectorizable_reduction().

◆ VECT_REDUC_INFO_VECTYPE

| #define VECT_REDUC_INFO_VECTYPE | ( | I | ) |

Referenced by vect_create_epilog_for_reduction(), and vectorizable_reduction().

◆ VECT_REDUC_INFO_VECTYPE_FOR_MASK

| #define VECT_REDUC_INFO_VECTYPE_FOR_MASK | ( | I | ) |

Referenced by vect_create_epilog_for_reduction(), and vectorizable_reduction().

◆ VECT_SCALAR_BOOLEAN_TYPE_P

| #define VECT_SCALAR_BOOLEAN_TYPE_P | ( | TYPE | ) |

Nonzero if TYPE represents a (scalar) boolean type or a type that the middle-end treats as compatible with it (unsigned integral types of precision 1). Used to determine which types should be vectorized as VECTOR_BOOLEAN_TYPE_P.

Referenced by get_same_sized_vectype(), integer_type_for_mask(), possible_vector_mask_operation_p(), vect_check_scalar_mask(), vect_determine_mask_precision(), vect_is_simple_cond(), vect_narrowable_type_p(), vect_recog_bool_pattern(), vect_recog_cast_forwprop_pattern(), vect_recog_gcond_pattern(), vect_recog_mask_conversion_pattern(), and vectorizable_operation().
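The predicate described above can be illustrated with a small stand-alone model. This is only a sketch under stated assumptions: `toy_type`, `toy_kind` and `toy_scalar_boolean_type_p` are hypothetical names that do not exist in GCC, and treating "integral" as "integer or enumeral" is an assumption of the sketch rather than something this page states.

```cpp
#include <cassert>

// Hypothetical stand-in for a middle-end type node; this is not GCC's `tree`.
enum class toy_kind { boolean, integer, enumeral, real };

struct toy_type
{
  toy_kind kind;
  unsigned precision;   // width in bits
  bool is_unsigned;
};

// True for a boolean type, or for an unsigned integral type of precision 1,
// which this sketch treats as boolean-compatible.
inline bool
toy_scalar_boolean_type_p (const toy_type &t)
{
  if (t.kind == toy_kind::boolean)
    return true;
  // Assumption of the sketch: "integral" means integer or enumeral.
  bool integral = (t.kind == toy_kind::integer
                   || t.kind == toy_kind::enumeral);
  return integral && t.precision == 1 && t.is_unsigned;
}

inline void
toy_scalar_boolean_usage ()
{
  toy_type one_bit_unsigned = {toy_kind::integer, 1, true};
  toy_type one_bit_signed = {toy_kind::integer, 1, false};
  assert (toy_scalar_boolean_type_p (one_bit_unsigned));
  assert (!toy_scalar_boolean_type_p (one_bit_signed));
}
```

In this model a signed 1-bit integer is rejected because the description above only admits unsigned precision-1 types.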

◆ VECTORIZABLE_CYCLE_DEF

| #define VECTORIZABLE_CYCLE_DEF | ( | D | ) |

Referenced by vect_analyze_scalar_cycles_1(), vect_analyze_slp(), vect_compute_single_scalar_iteration_cost(), and vectorizable_lane_reducing().

Typedef Documentation

◆ auto_lane_permutation_t

| typedef auto_vec<std::pair<unsigned, unsigned>, 16> auto_lane_permutation_t |

◆ auto_load_permutation_t

| typedef auto_vec<unsigned, 16> auto_load_permutation_t |

◆ bb_vec_info

| typedef _bb_vec_info * bb_vec_info |

◆ complex_perm_kinds_t

| typedef enum _complex_perm_kinds complex_perm_kinds_t |

All possible load permute values that could result from the partial data-flow analysis.

◆ dr_p

| typedef struct data_reference* dr_p |

◆ drs_init_vec

| typedef auto_vec<std::pair<data_reference*, tree> > drs_init_vec |

◆ lane_permutation_t

| typedef vec<std::pair<unsigned, unsigned> > lane_permutation_t |

◆ load_permutation_t

| typedef vec<unsigned> load_permutation_t |

◆ loop_vec_info

| typedef _loop_vec_info * loop_vec_info |

Info on vectorized loops.

◆ opt_loop_vec_info

Wrapper for loop_vec_info that tracks success or failure: a non-NULL value signifies success and a NULL value signifies failure. On failure, an opt_problem * describing the failure can be propagated back up the call stack.
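The success/failure convention can be sketched with a small self-contained wrapper. Every name below (`toy_problem`, `toy_loop_info`, `toy_opt_loop_info` and its member functions) is hypothetical and chosen for illustration; the real wrapper and `opt_problem` live in GCC's own headers and may differ in interface.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-ins; they are not GCC's opt_problem or _loop_vec_info.
struct toy_problem { std::string message; };
struct toy_loop_info { int vectorization_factor; };

// Holds either a non-null result (success) or a null result plus a
// pointer to a description of what went wrong (failure).
class toy_opt_loop_info
{
public:
  static toy_opt_loop_info success (toy_loop_info *info)
  { return toy_opt_loop_info (info, nullptr); }
  static toy_opt_loop_info failure (const toy_problem *problem)
  { return toy_opt_loop_info (nullptr, problem); }

  explicit operator bool () const { return m_info != nullptr; }
  toy_loop_info *get () const { return m_info; }
  const toy_problem *problem () const { return m_problem; }

private:
  toy_opt_loop_info (toy_loop_info *info, const toy_problem *problem)
    : m_info (info), m_problem (problem) {}
  toy_loop_info *m_info;
  const toy_problem *m_problem;
};

// Innermost analysis step: fails with a description when the toy
// vectorization factor is too small.
inline toy_opt_loop_info
toy_analyze_loop (toy_loop_info *candidate)
{
  static const toy_problem too_small = {"vectorization factor must exceed 1"};
  if (candidate->vectorization_factor <= 1)
    return toy_opt_loop_info::failure (&too_small);
  return toy_opt_loop_info::success (candidate);
}

// Caller: passes the callee's problem description further up unchanged.
inline toy_opt_loop_info
toy_analyze_function (toy_loop_info *candidate)
{
  toy_opt_loop_info res = toy_analyze_loop (candidate);
  if (!res)
    return toy_opt_loop_info::failure (res.problem ());
  return res;
}
```

A caller tests the wrapper as a boolean and, on failure, reads `problem ()` to report why the analysis gave up.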

◆ scalar_cond_masked_set_type

◆ slp_compat_nodes_map_t

| typedef hash_map<slp_node_hash, bool> slp_compat_nodes_map_t |

◆ slp_instance

| typedef class _slp_instance * slp_instance |

An SLP instance is a sequence of stmts in a loop that can be packed into SIMD stmts.

◆ slp_node_hash

| typedef pair_hash<nofree_ptr_hash <_slp_tree>, nofree_ptr_hash <_slp_tree> > slp_node_hash |

Cache mapping a pair of nodes to whether the two nodes are compatible.
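As a rough illustration of a cache keyed on a pair of node pointers, the following sketch uses the standard library instead of GCC's `hash_map` and `pair_hash`. The names `toy_node`, `toy_pair_hash`, `toy_compat_cache` and `toy_compatible_p` are hypothetical, and the compatibility rule (same opcode and lane count) is an assumption made only so the example has something to cache.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <unordered_map>
#include <utility>

// Hypothetical node type; it is not GCC's _slp_tree.
struct toy_node { int opcode; unsigned lanes; };

using toy_node_pair = std::pair<const toy_node *, const toy_node *>;

// Combine the hashes of both pointers, playing the role of a pair hash.
struct toy_pair_hash
{
  std::size_t operator() (const toy_node_pair &p) const
  {
    std::hash<const toy_node *> h;
    return h (p.first) * 31u + h (p.second);
  }
};

using toy_compat_cache
  = std::unordered_map<toy_node_pair, bool, toy_pair_hash>;

// Return whether A and B are compatible, consulting CACHE first.
// COMPUTATIONS counts how often the answer had to be computed afresh.
inline bool
toy_compatible_p (const toy_node *a, const toy_node *b,
                  toy_compat_cache &cache, unsigned &computations)
{
  toy_node_pair key (a, b);
  auto it = cache.find (key);
  if (it != cache.end ())
    return it->second;
  ++computations;
  // Assumed rule for the sketch: same opcode and same number of lanes.
  bool result = a->opcode == b->opcode && a->lanes == b->lanes;
  cache.emplace (key, result);
  return result;
}
```

Both positive and negative answers are stored, so a repeated query for the same pair never recomputes the result.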

◆ slp_tree

◆ slp_tree_to_load_perm_map_t

Cache from nodes to the load permutation they represent.

◆ stmt_vec_info

| typedef class _stmt_vec_info* stmt_vec_info |

Vectorizer. Copyright (C) 2003-2026 Free Software Foundation, Inc. Contributed by Dorit Naishlos <dorit@il.ibm.com>

This file is part of GCC.

GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version.

GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see <http://www.gnu.org/licenses/>.

◆ stmt_vector_for_cost

| typedef vec<stmt_info_for_cost> stmt_vector_for_cost |

◆ tree_cond_mask_hash

Key and map that record the association between vector conditions and the corresponding loop mask. The map is populated by prepare_vec_mask.

◆ vec_base_alignments

| typedef hash_map<tree_operand_hash, std::pair<stmt_vec_info, innermost_loop_behavior *> > vec_base_alignments |

Maps base addresses to an innermost_loop_behavior and the stmt it was derived from that gives the maximum known alignment for that base.
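The "keep the entry with the maximum known alignment" idea can be sketched as follows. This is a simplified model, not GCC code: base addresses are represented by strings, the `innermost_loop_behavior` is reduced to a single alignment value, and `toy_alignment_record`, `toy_base_alignments` and `toy_record_base_alignment` are hypothetical names.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>

// Hypothetical record: which statement produced the alignment, and the
// alignment in bytes.  This stands in for the (stmt, behavior) pair.
struct toy_alignment_record
{
  int stmt_id;
  unsigned alignment;
};

// Base address (modelled as a string) -> best record seen so far.
using toy_base_alignments
  = std::unordered_map<std::string, toy_alignment_record>;

// Record ALIGNMENT for BASE as derived from statement STMT_ID, keeping
// only the entry with the largest alignment for each base.
inline void
toy_record_base_alignment (toy_base_alignments &map, const std::string &base,
                           int stmt_id, unsigned alignment)
{
  auto it = map.find (base);
  if (it == map.end ())
    map.emplace (base, toy_alignment_record{stmt_id, alignment});
  else if (alignment > it->second.alignment)
    it->second = toy_alignment_record{stmt_id, alignment};
}
```

After several statements have been recorded for the same base, a lookup yields both the strongest alignment and the statement it came from.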

◆ vec_cond_masked_set_type

◆ vec_loop_lens

| typedef auto_vec<rgroup_controls> vec_loop_lens |

◆ vec_object_pair

| typedef std::pair<tree, tree> vec_object_pair |

Describes two objects whose addresses must be unequal for the vectorized loop to be valid.
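A minimal sketch of how such pairs might be checked at run time is shown below. It models each object by a plain address rather than a `tree`, and `toy_object_pair` and `toy_addresses_all_distinct` are hypothetical names; how the vectorizer actually emits these checks is not described on this page.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical model: each object is represented by its address.
using toy_object_pair = std::pair<const void *, const void *>;

// Return true if, for every recorded pair, the two addresses differ.
// In this model the vectorized version would be valid only in that case.
inline bool
toy_addresses_all_distinct (const std::vector<toy_object_pair> &pairs)
{
  for (const toy_object_pair &p : pairs)
    if (p.first == p.second)
      return false;
  return true;
}

inline void
toy_object_pair_usage ()
{
  int a = 0, b = 0;
  std::vector<toy_object_pair> pairs;
  pairs.push_back (toy_object_pair (&a, &b));
  assert (toy_addresses_all_distinct (pairs));
}
```

If any pair turns out to name the same address, the caller would fall back to the scalar version in this model.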

◆ vect_pattern_decl_t

| typedef vect_pattern *(* vect_pattern_decl_t) (slp_tree_to_load_perm_map_t *, slp_compat_nodes_map_t *, slp_tree *) |

Function pointer to create a new pattern matcher from a generic type.
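The general shape of a table of factory function pointers can be sketched like this. The sketch is deliberately simplified: the factory takes a single hypothetical `toy_node *` instead of the three arguments in the typedef above, and `toy_pattern`, `toy_add_pattern`, `toy_mul_pattern`, `toy_patterns` and `toy_match_name` are illustrative names, not GCC's.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>

// Hypothetical node and pattern types; they are not GCC's.
struct toy_node { int opcode; };

class toy_pattern
{
public:
  virtual ~toy_pattern () = default;
  virtual const char *name () const = 0;
};

// Factory signature: returns a new matcher, or nullptr if NODE does not match.
typedef toy_pattern *(*toy_pattern_decl_t) (toy_node *);

class toy_add_pattern : public toy_pattern
{
public:
  const char *name () const override { return "add"; }
  static toy_pattern *recognize (toy_node *node)
  { return node->opcode == 1 ? new toy_add_pattern : nullptr; }
};

class toy_mul_pattern : public toy_pattern
{
public:
  const char *name () const override { return "mul"; }
  static toy_pattern *recognize (toy_node *node)
  { return node->opcode == 2 ? new toy_mul_pattern : nullptr; }
};

// Table of factories plus its length, tried in order.
static const toy_pattern_decl_t toy_patterns[]
  = { toy_add_pattern::recognize, toy_mul_pattern::recognize };
static const std::size_t num_toy_patterns
  = sizeof (toy_patterns) / sizeof (toy_patterns[0]);

// Return the name of the first pattern that matches NODE, or "" if none.
inline std::string
toy_match_name (toy_node *node)
{
  for (std::size_t i = 0; i < num_toy_patterns; ++i)
    {
      std::unique_ptr<toy_pattern> p (toy_patterns[i] (node));
      if (p)
        return p->name ();
    }
  return "";
}
```

Adding a new matcher in this scheme only requires appending its factory to the table; the driver loop stays unchanged.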

◆ vect_reduc_info

| typedef class vect_reduc_info_s* vect_reduc_info |

Enumeration Type Documentation

◆ _complex_perm_kinds

| enum _complex_perm_kinds |

◆ dr_alignment_support

| enum dr_alignment_support |

◆ operation_type

| enum operation_type |

◆ peeling_support

| enum peeling_support |

◆ slp_instance_kind

| enum slp_instance_kind |

◆ slp_vect_type

| enum slp_vect_type |

◆ stmt_vec_info_type

| enum stmt_vec_info_type |

Info on vectorized defs.

◆ vec_load_store_type

| enum vec_load_store_type |

◆ vect_def_type

| enum vect_def_type |

◆ vect_induction_op_type